GPUs are also used with professional applications, AI training and inferencing, and more. We're hoping to add some AI benchmarks soon… but we ran out of time. For now, we've got several 3D rendering applications that leverage ray tracing hardware along with the SPECviewperf 2020 v3 test suite.

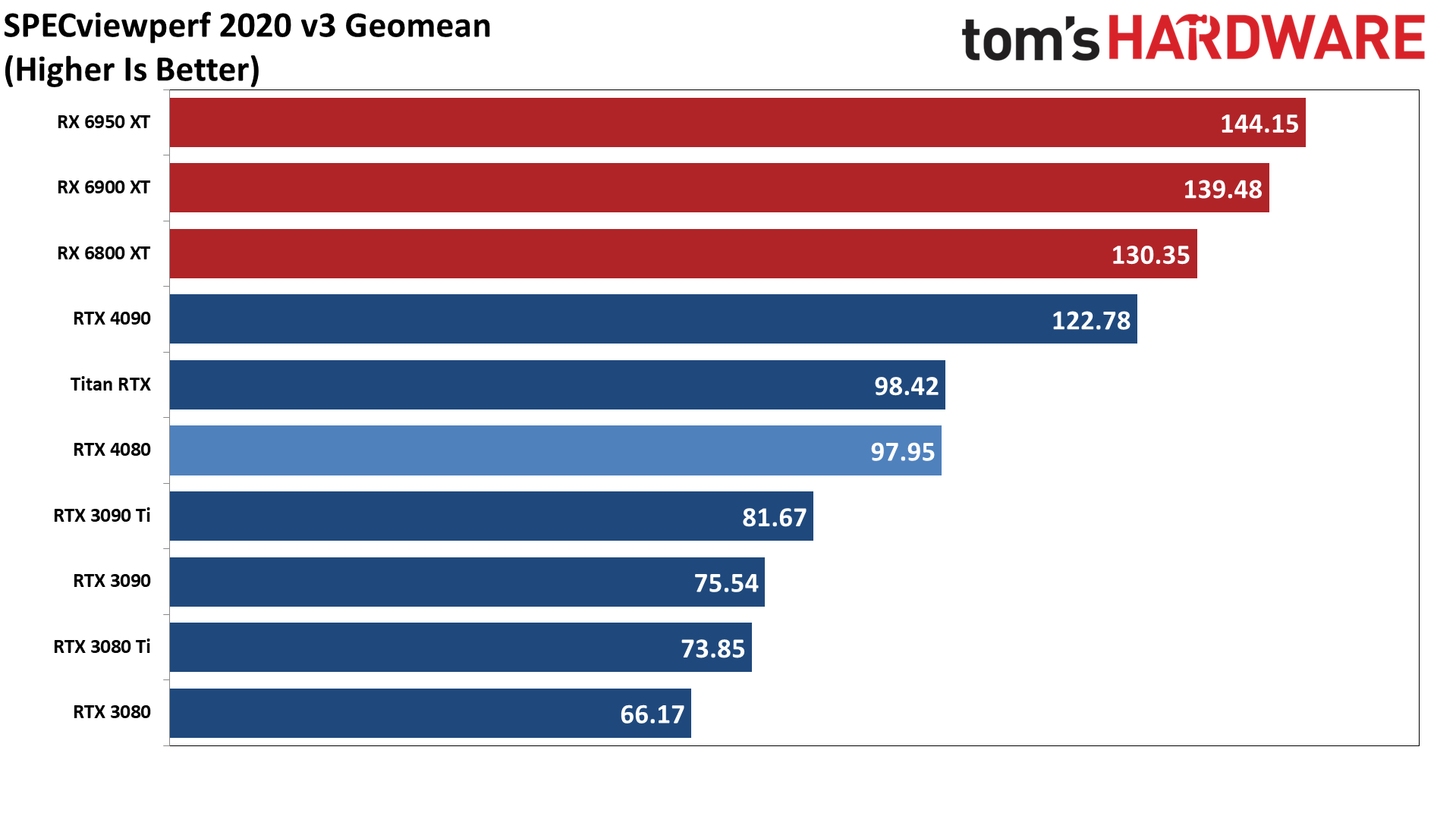

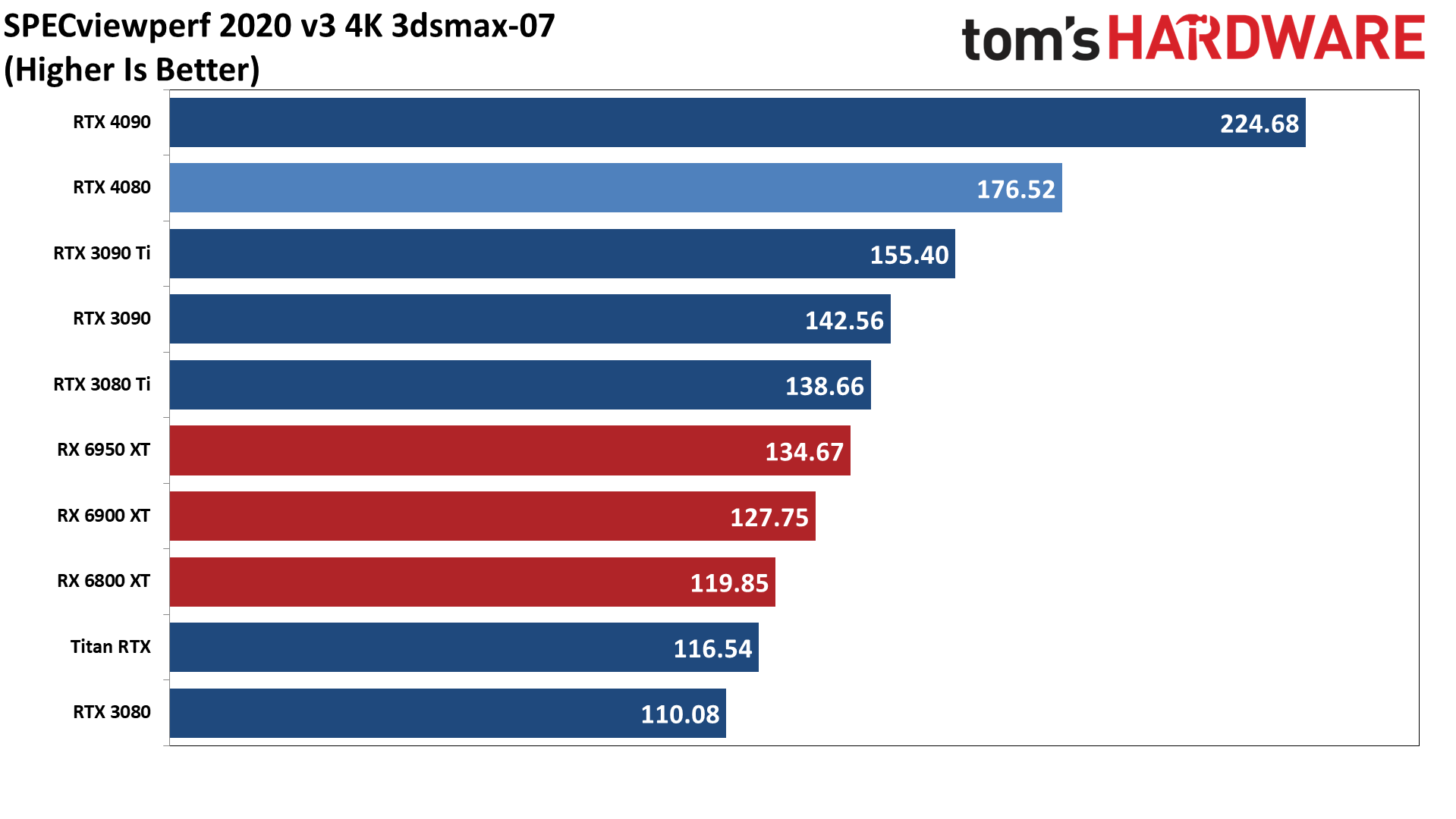

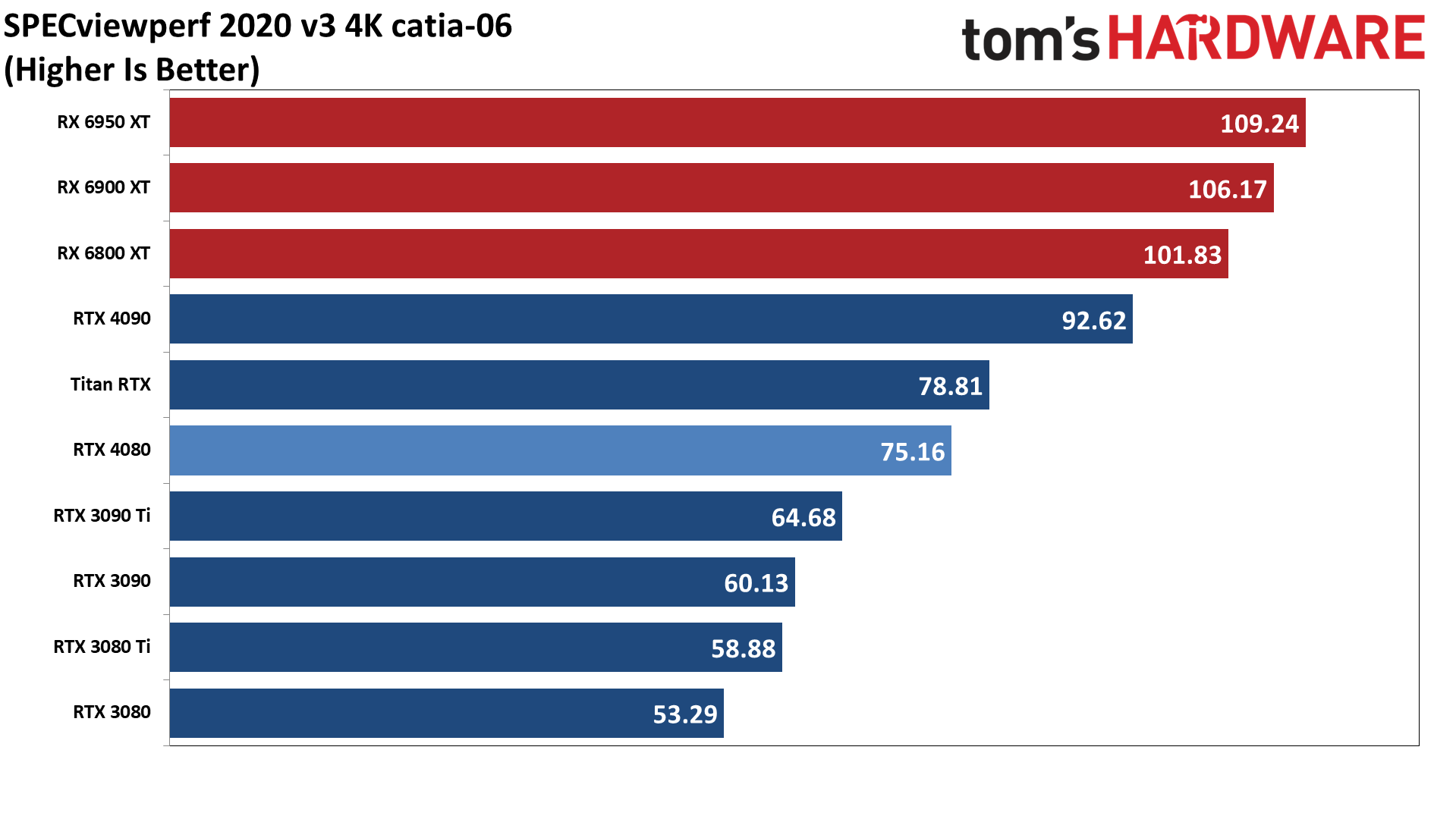

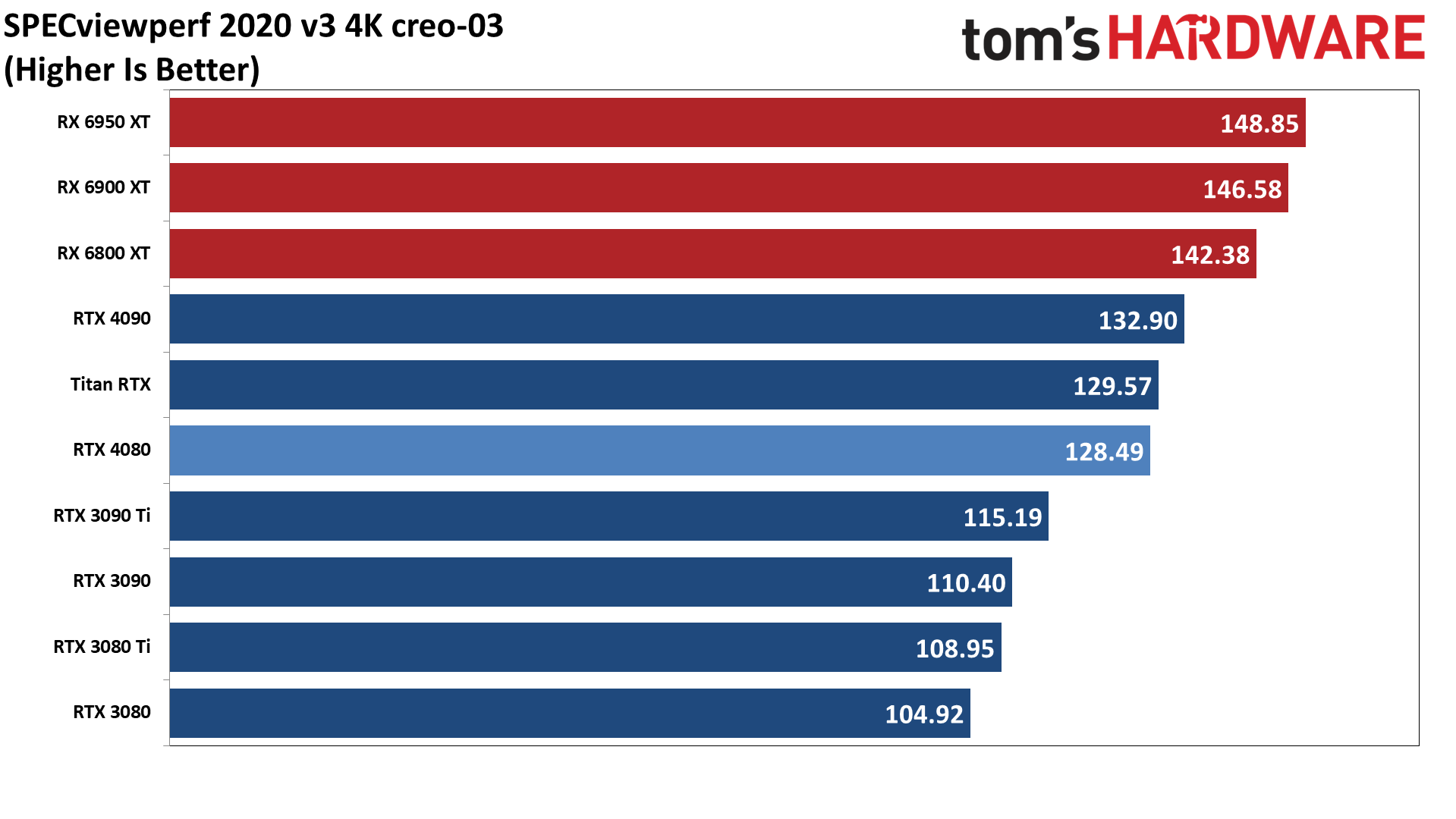

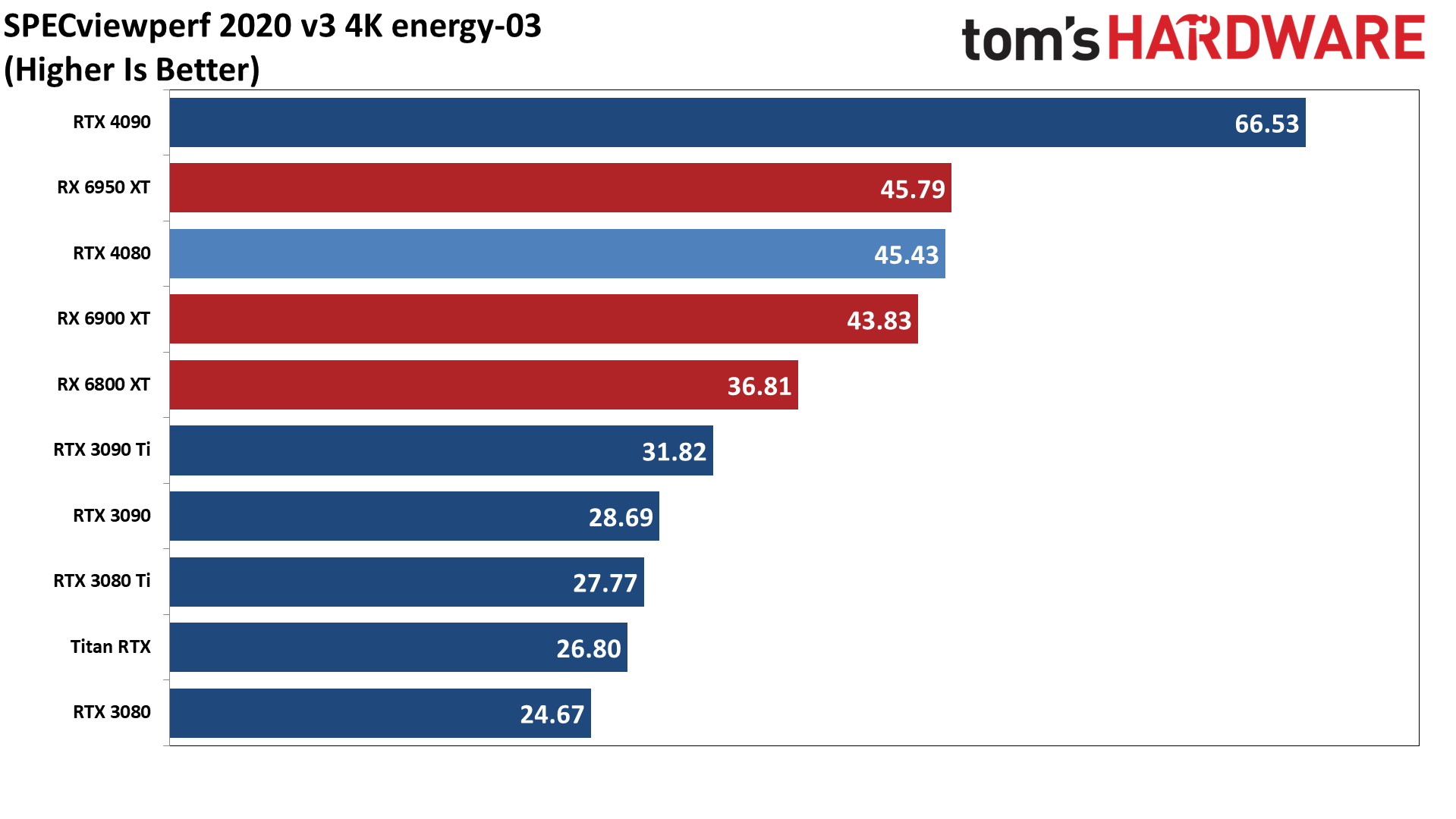

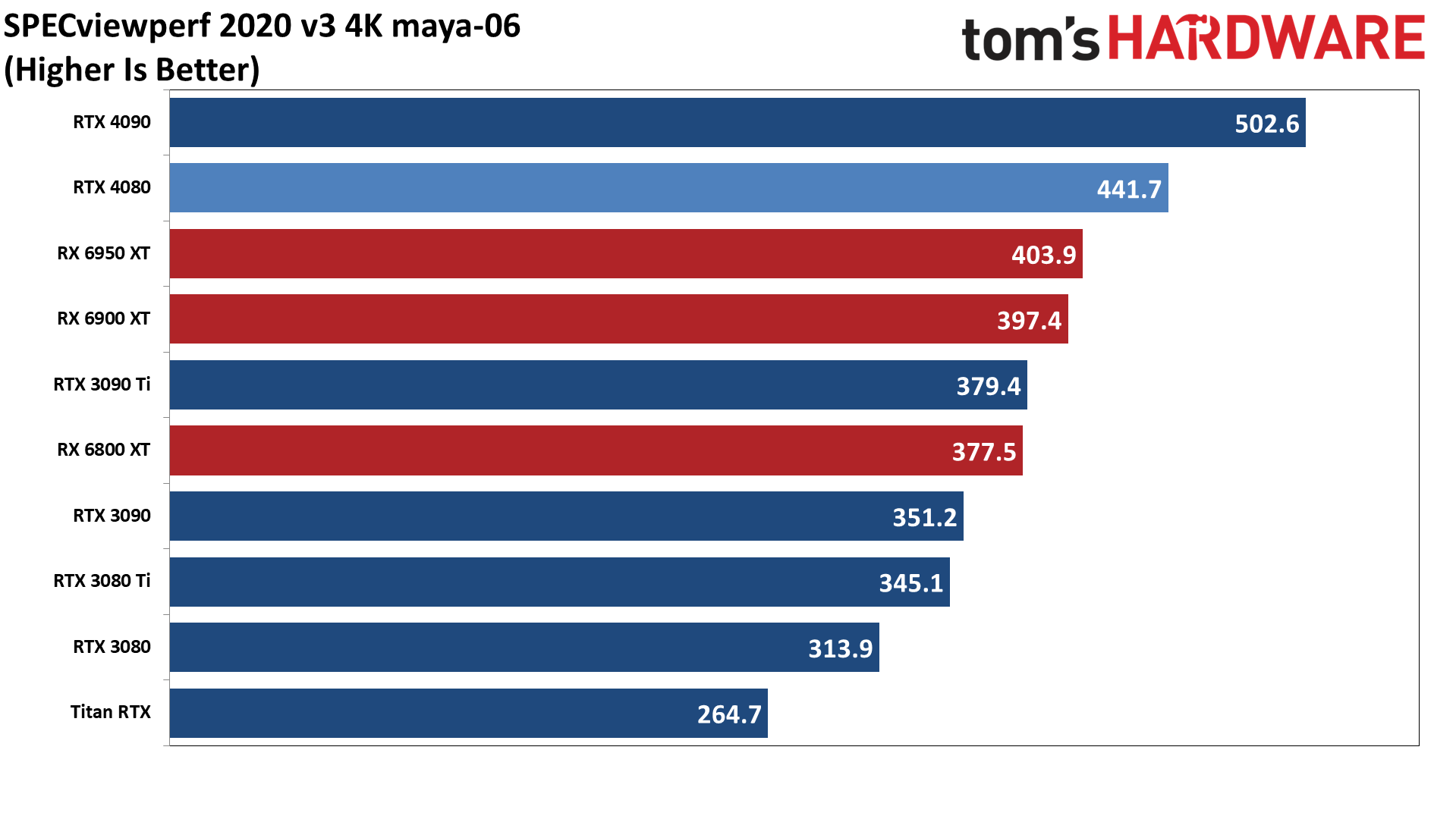

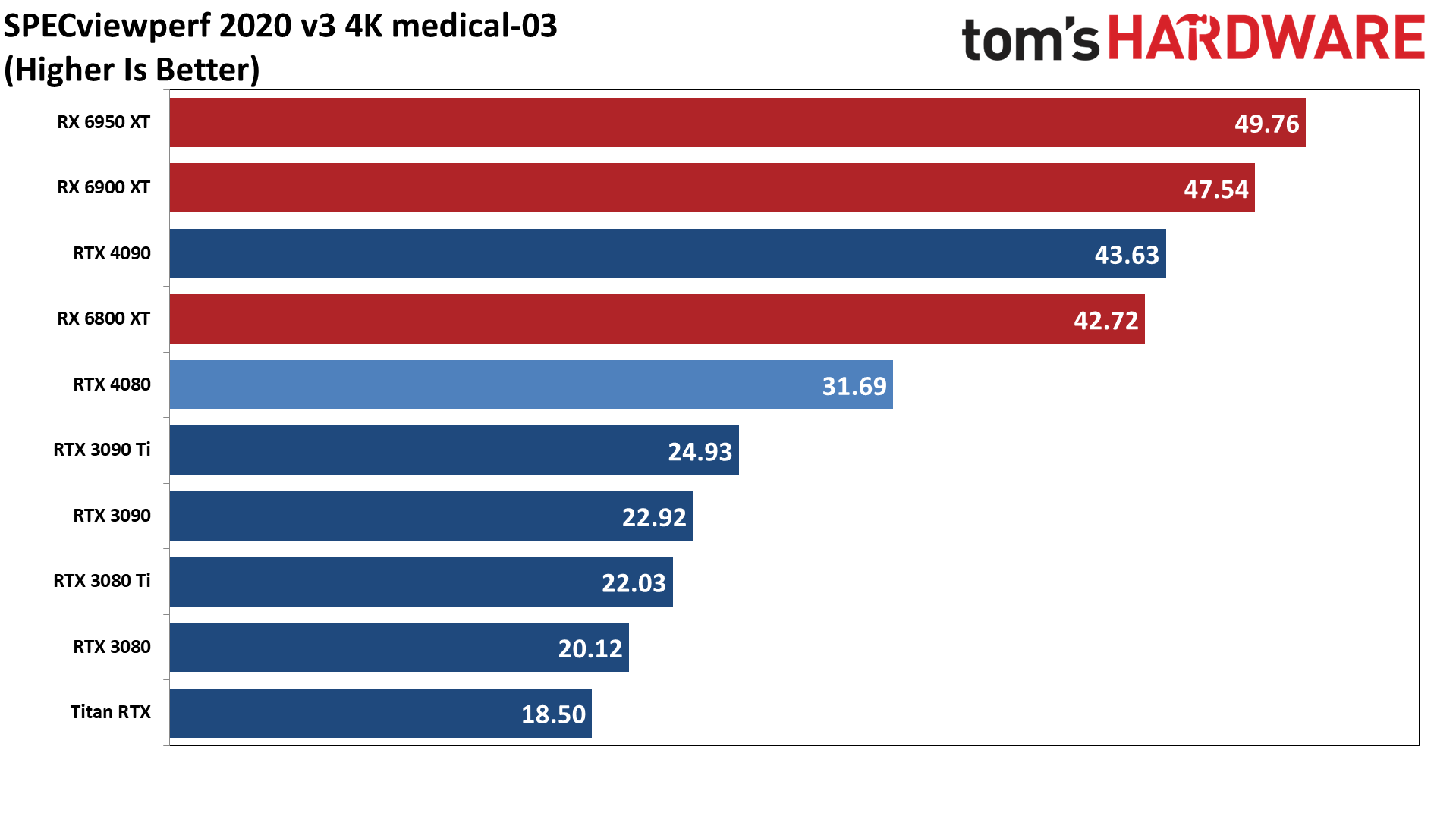

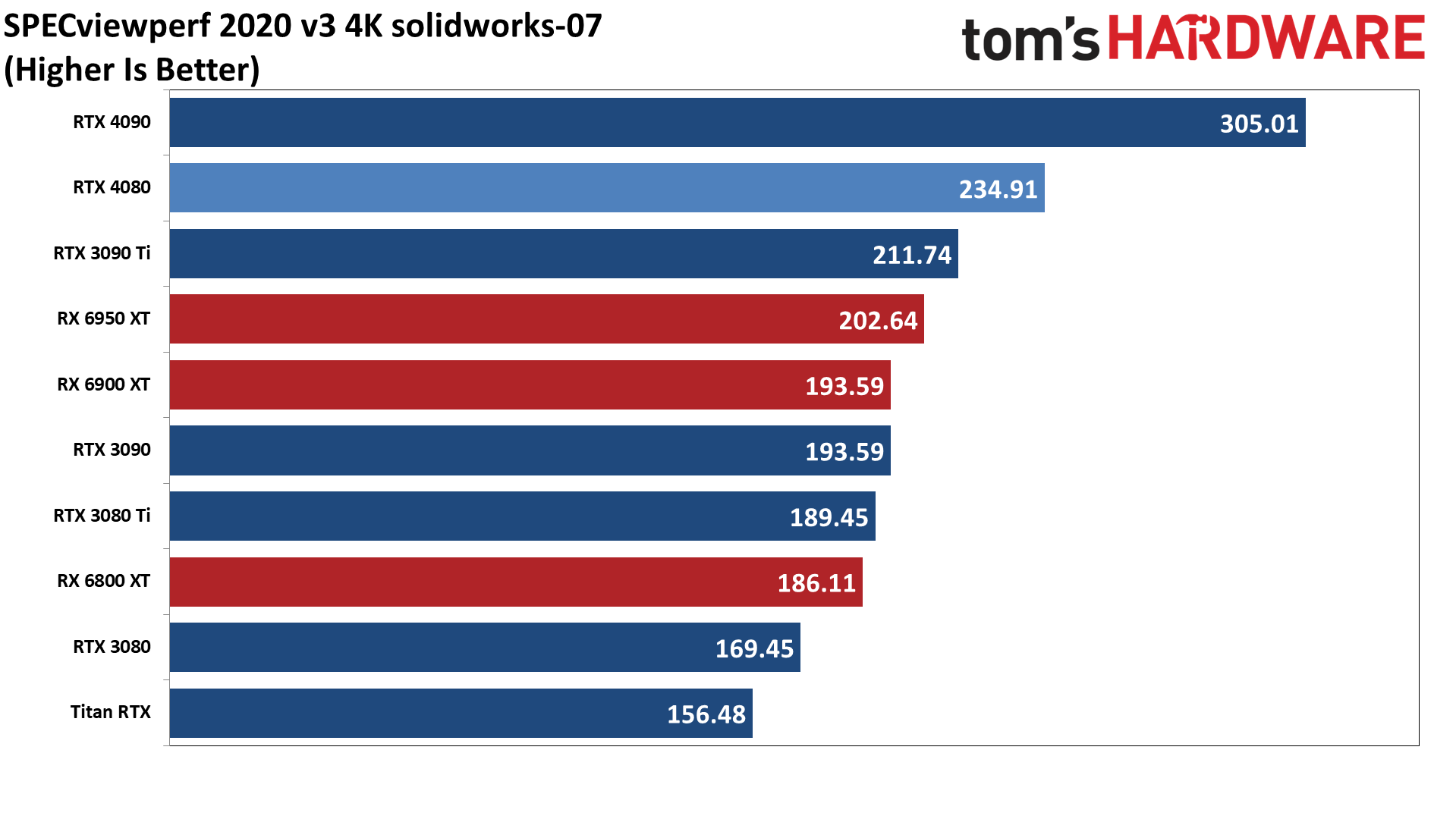

SPECviewperf 2020 consists of eight different benchmarks, and we use the geometric mean from those tests to generate an aggregate "overall" score. Note that this is not an official score, but it gives equal weight to the individual tests and provides a nice high-level overview of performance. Few professionals use all of these programs, however, so it's generally more important to look at the results for the applications you plan to use.
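The aggregate described above can be sketched in a few lines of Python. This is a minimal illustration of a geometric mean, not the review's actual tooling; the function name `geometric_mean` and the eight scores are hypothetical placeholders, not measured results.

```python
import math

def geometric_mean(scores):
    """Return the geometric mean of a sequence of positive scores."""
    if not scores:
        raise ValueError("need at least one score")
    if any(s <= 0 for s in scores):
        raise ValueError("scores must be positive")
    # Average the logarithms, then exponentiate. This equals the n-th root
    # of the product but avoids very large intermediate values.
    return math.exp(sum(math.log(s) for s in scores) / len(scores))

# Hypothetical scores for eight tests (placeholders, NOT data from this review).
example_scores = [120.0, 85.0, 140.0, 60.0, 300.0, 45.0, 25.0, 210.0]
overall = geometric_mean(example_scores)

# Doubling any single test raises the overall score by the same factor,
# 2 ** (1 / 8), or roughly 9%, regardless of how large that test's score is.
doubled = example_scores.copy()
doubled[6] *= 2
ratio = geometric_mean(doubled) / overall

print(f"overall: {overall:.1f}, gain from doubling one test: {ratio:.3f}x")
```

Because each test contributes multiplicatively, one unusually high or low result (such as a large gap in a single viewset) shifts the overall score by a bounded factor instead of swamping the other seven, which is what "equal weight" means here.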

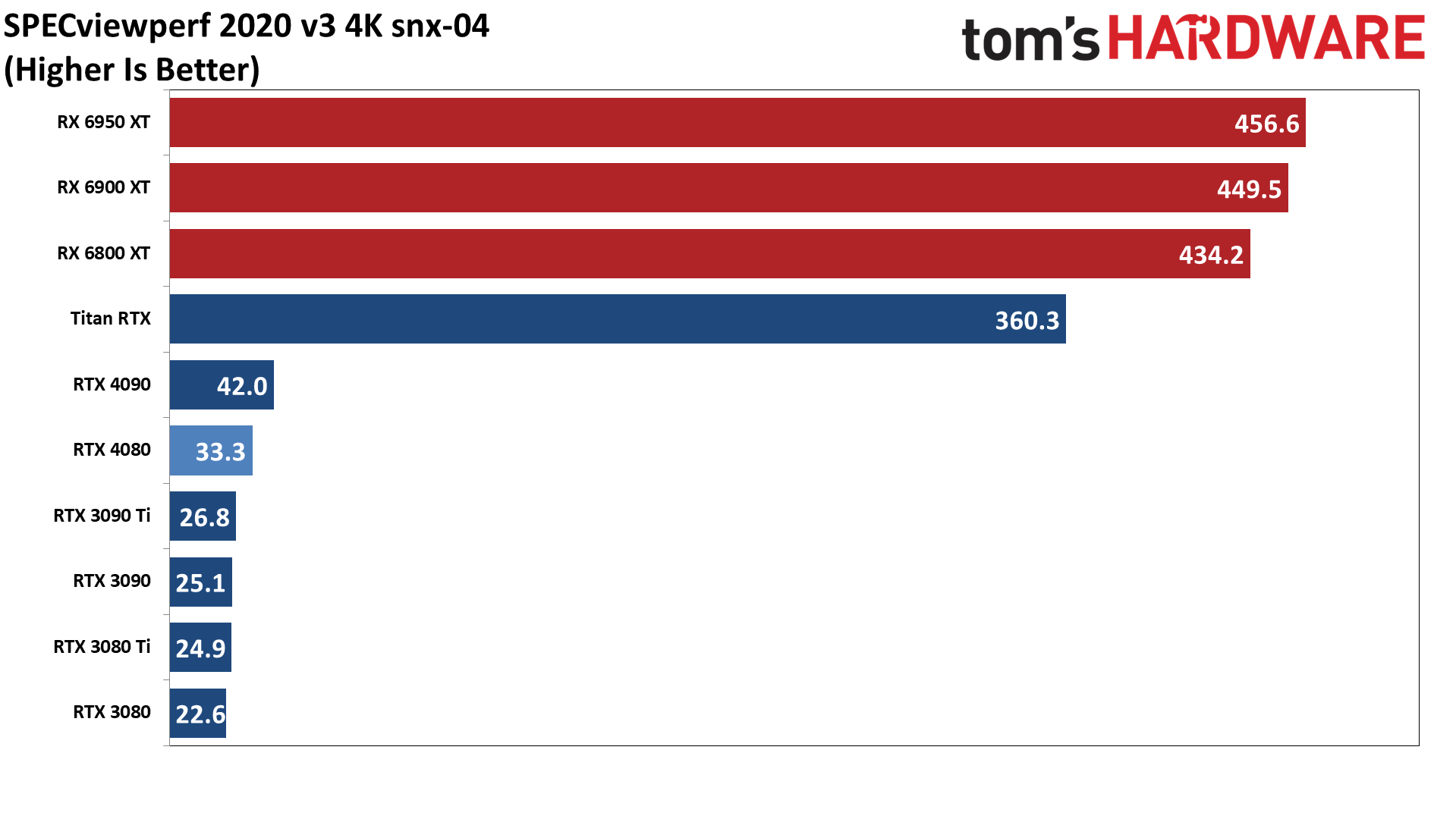

Across the eight tests, Nvidia's RTX 4080 trails the costlier 4090 by 20% on average. Performance is nearly tied in creo-03. Energy-03 and medical-03 favor the 4090 a bit more, but the performance difference is relatively similar to what we saw in our 4K gaming results. In turn, the 4080 beats the 3090 Ti by 20%, leads the 3080 Ti by 33%, and comes out 48% faster than the RTX 3080. It's only tied with the Titan RTX, thanks to the significantly higher score that card gets in snx-04.
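Percentages like these depend on which card serves as the baseline, so "trails by 20%" and "leads by 20%" are not mirror images. The sketch below shows the arithmetic, assuming normalized scores (the 4090 set to 100 and the 4080 to 80, matching the 20% figure above); the helper names are hypothetical.

```python
def percent_slower(score, baseline):
    """How far `score` falls below `baseline`, as a percentage of the baseline."""
    return (1.0 - score / baseline) * 100.0

def percent_faster(score, baseline):
    """How far `score` exceeds `baseline`, as a percentage of the baseline."""
    return (score / baseline - 1.0) * 100.0

# Normalized, illustrative scores (assumption: 4090 = 100, so the 4080 = 80).
rtx_4090 = 100.0
rtx_4080 = 80.0

print(f"4080 trails the 4090 by {percent_slower(rtx_4080, rtx_4090):.0f}%")
print(f"4090 leads the 4080 by {percent_faster(rtx_4090, rtx_4080):.0f}%")
```

Under these assumed numbers, the same gap reads as 20% when the faster card is the baseline and 25% when the slower card is, which is worth keeping in mind when comparing "trails by" and "leads by" figures.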

AMD tends to be a bit more generous with professional application optimizations on consumer hardware, and the latest drivers gave a sizeable boost to AMD's SPECviewperf scores. That results in AMD delivering better performance than the RTX 4080 in half of the tests, and the major Nvidia deficit in snx-04 gives AMD's GPUs the overall lead as well. However, if your professional application of choice is 3D Studio Max, Maya, or SolidWorks, the RTX 4080 takes the lead.

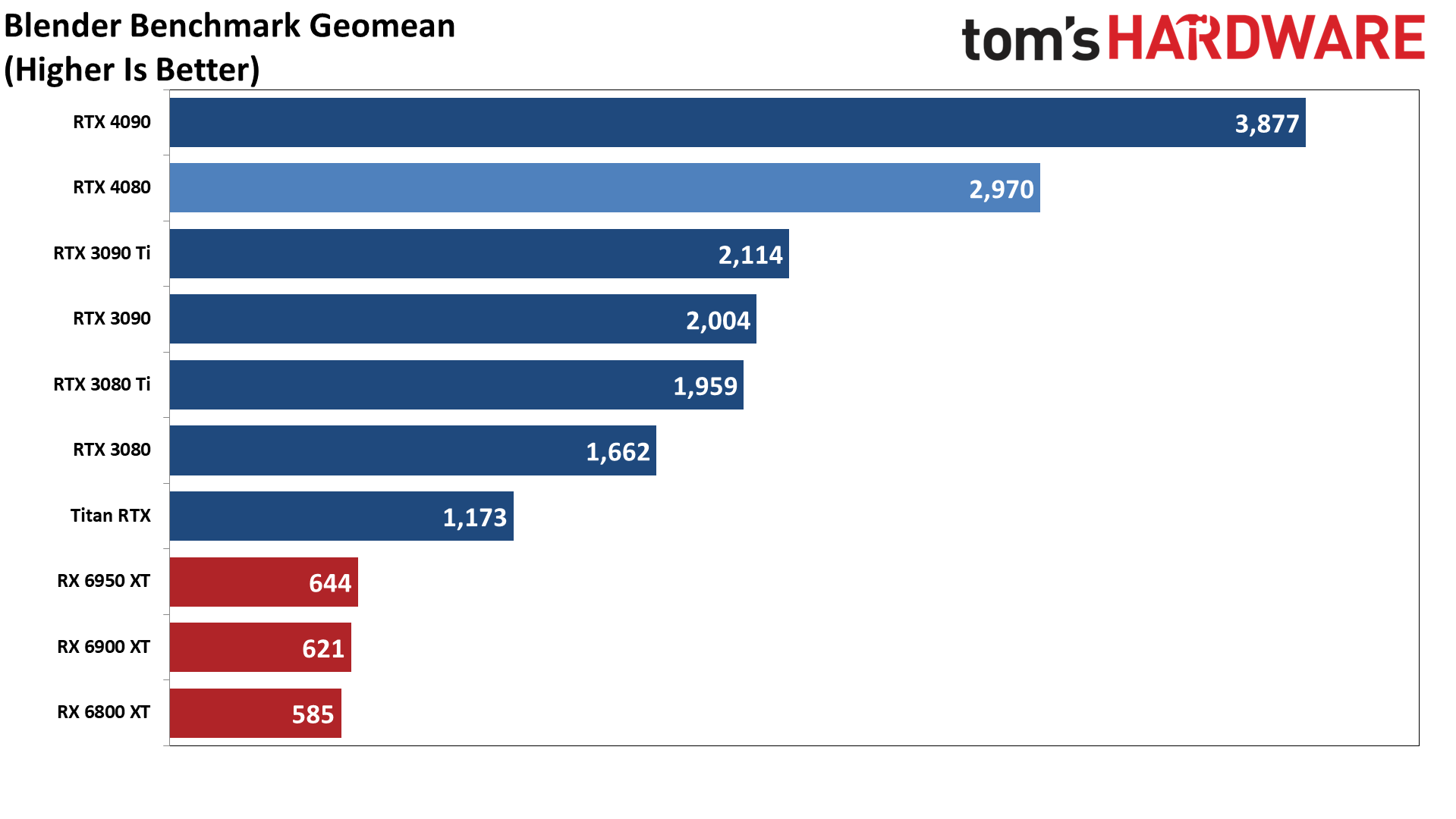

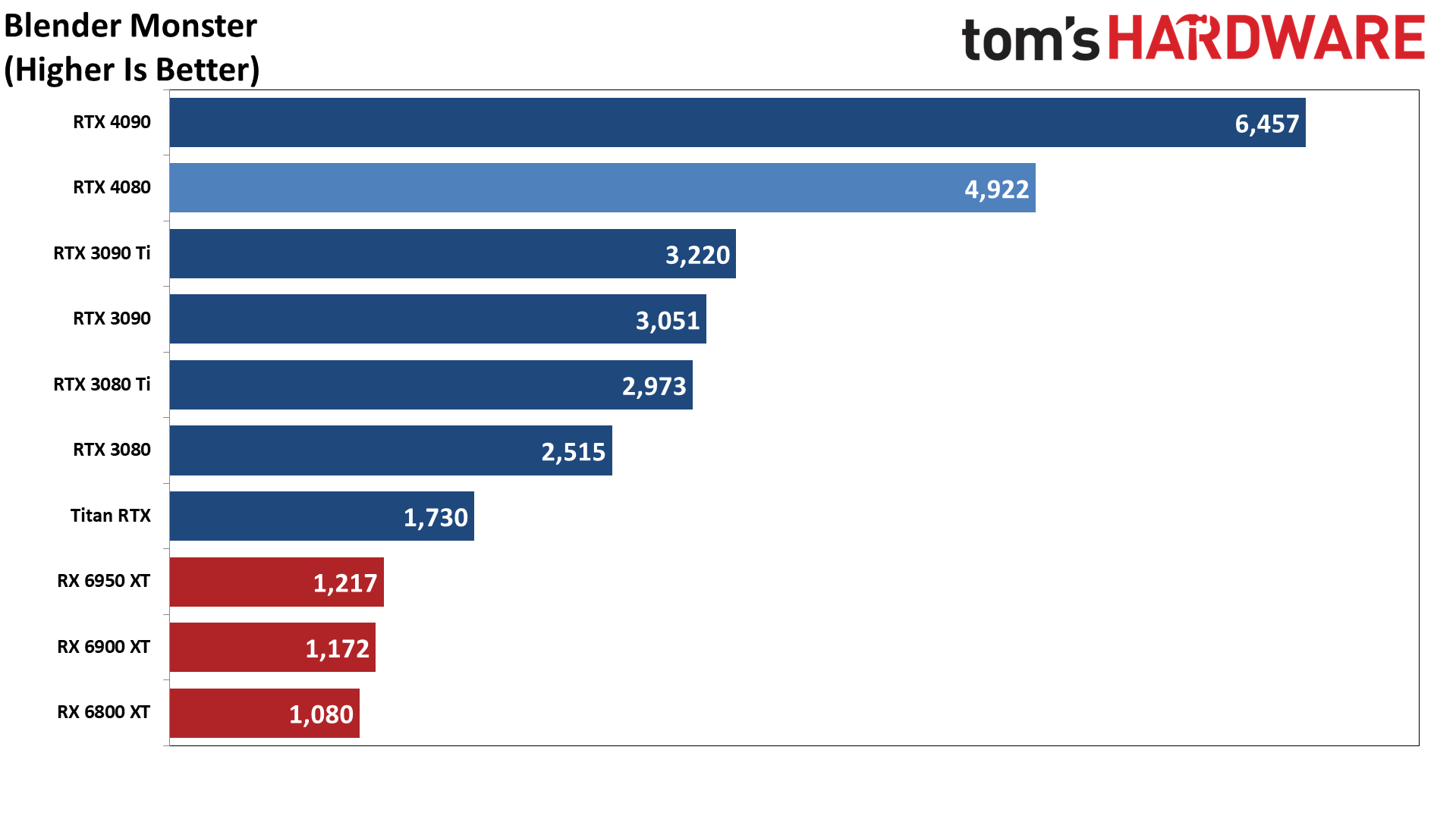

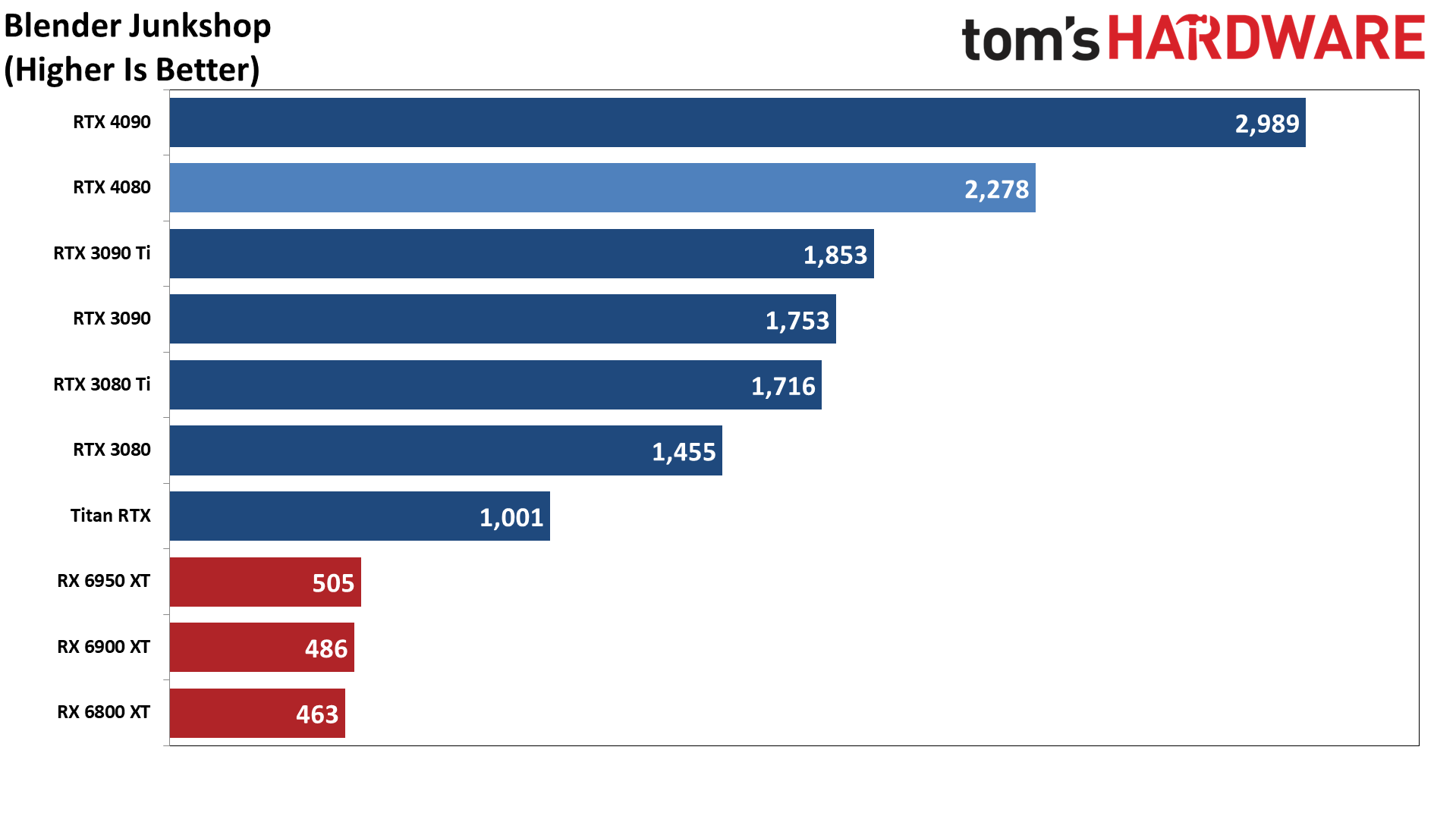

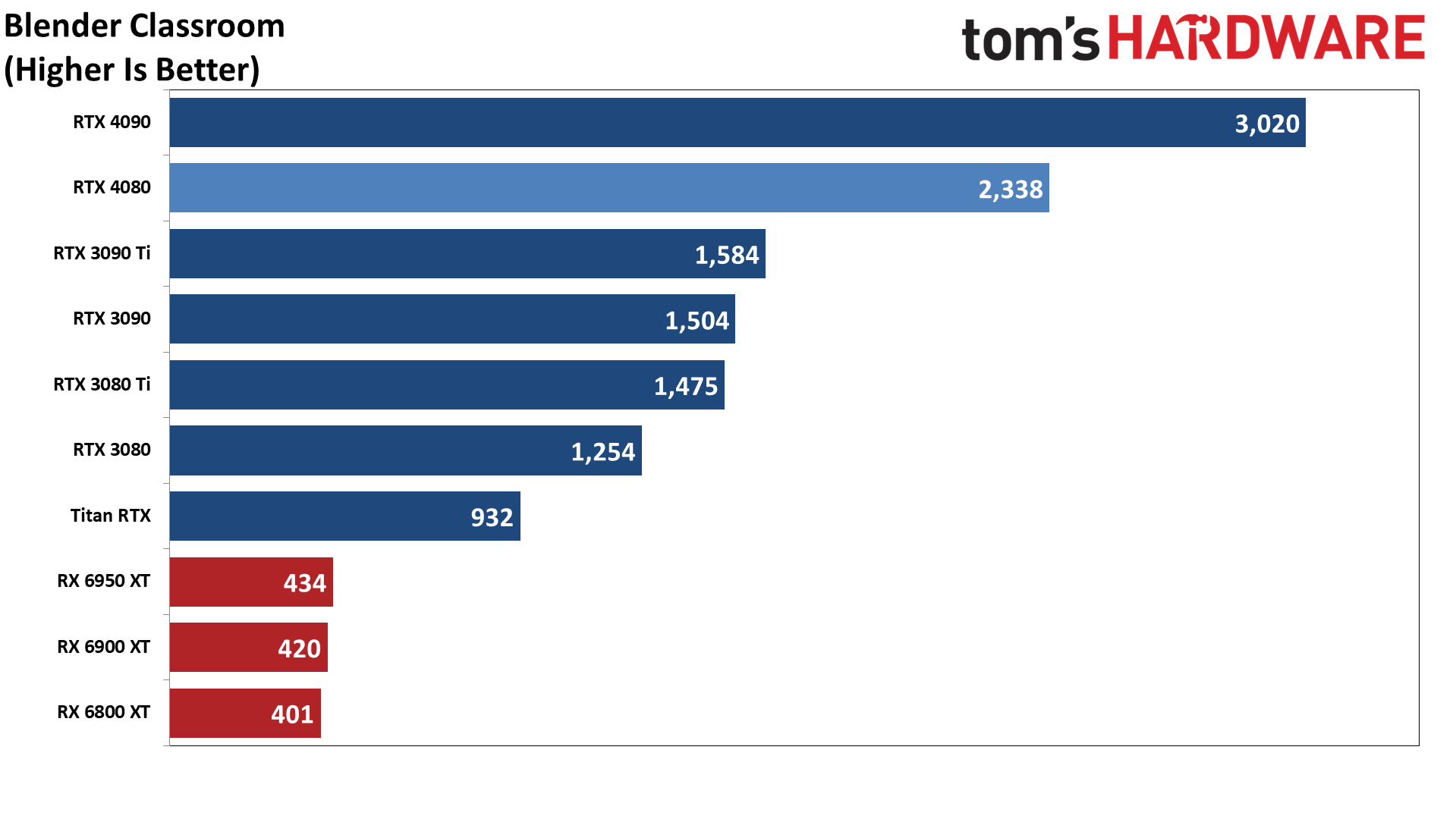

Blender is a popular open-source rendering application, and we're using the latest Blender Benchmark, which uses Blender 3.3 and three tests. Blender 3.3 also includes the new Cycles X engine that leverages ray tracing hardware on AMD, Nvidia, and even Intel Arc GPUs. It does so via AMD's HIP interface (Heterogeneous-computing Interface for Portability), Nvidia's CUDA or OptiX APIs, and Intel's oneAPI.

Being open source has one major advantage: the various companies can deliver their own rendering updates. This gives us the closest option for an apples-to-apples performance comparison of professional 3D rendering using the various GPUs. Blender and other 3D rendering applications tend to show the raw ray tracing hardware performance, though architectural elements can come into play.

In this case, Nvidia's latest Ada Lovelace GPUs thoroughly crush the competition. The RTX 4080 delivers about four times the performance of the RX 6950 XT, and even the old Titan RTX comes in ahead of AMD's "best" with about double the performance.

That said, we have to wonder how much of the gap comes down to better optimizations in the code for Nvidia's CUDA and OptiX APIs. Maybe AMD will release a driver at some point that massively improves its Blender performance. Barring that, even if the RX 7900 XTX is twice as fast as the 6950 XT, it will still fall behind Nvidia's RTX 40-series cards.

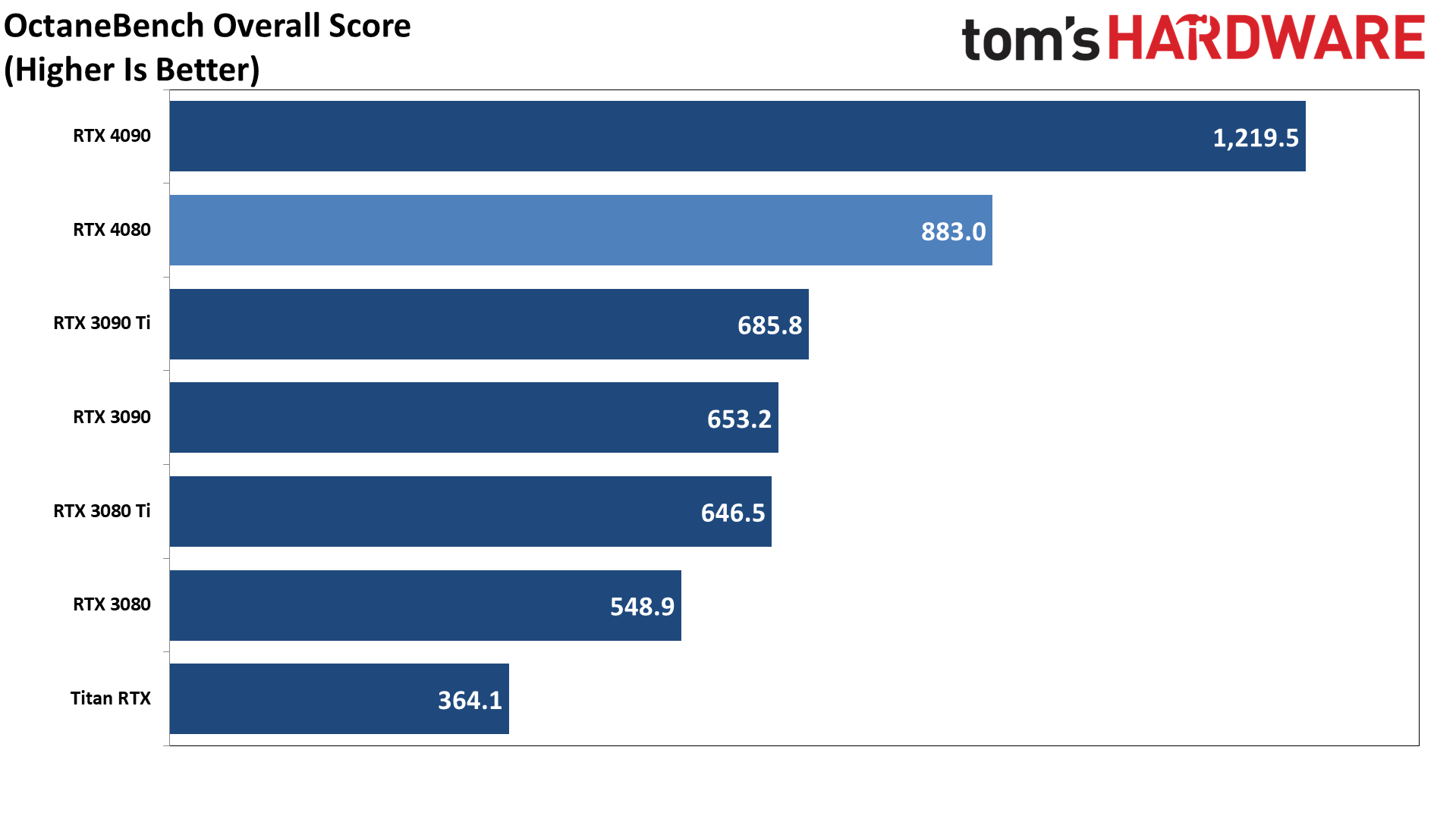

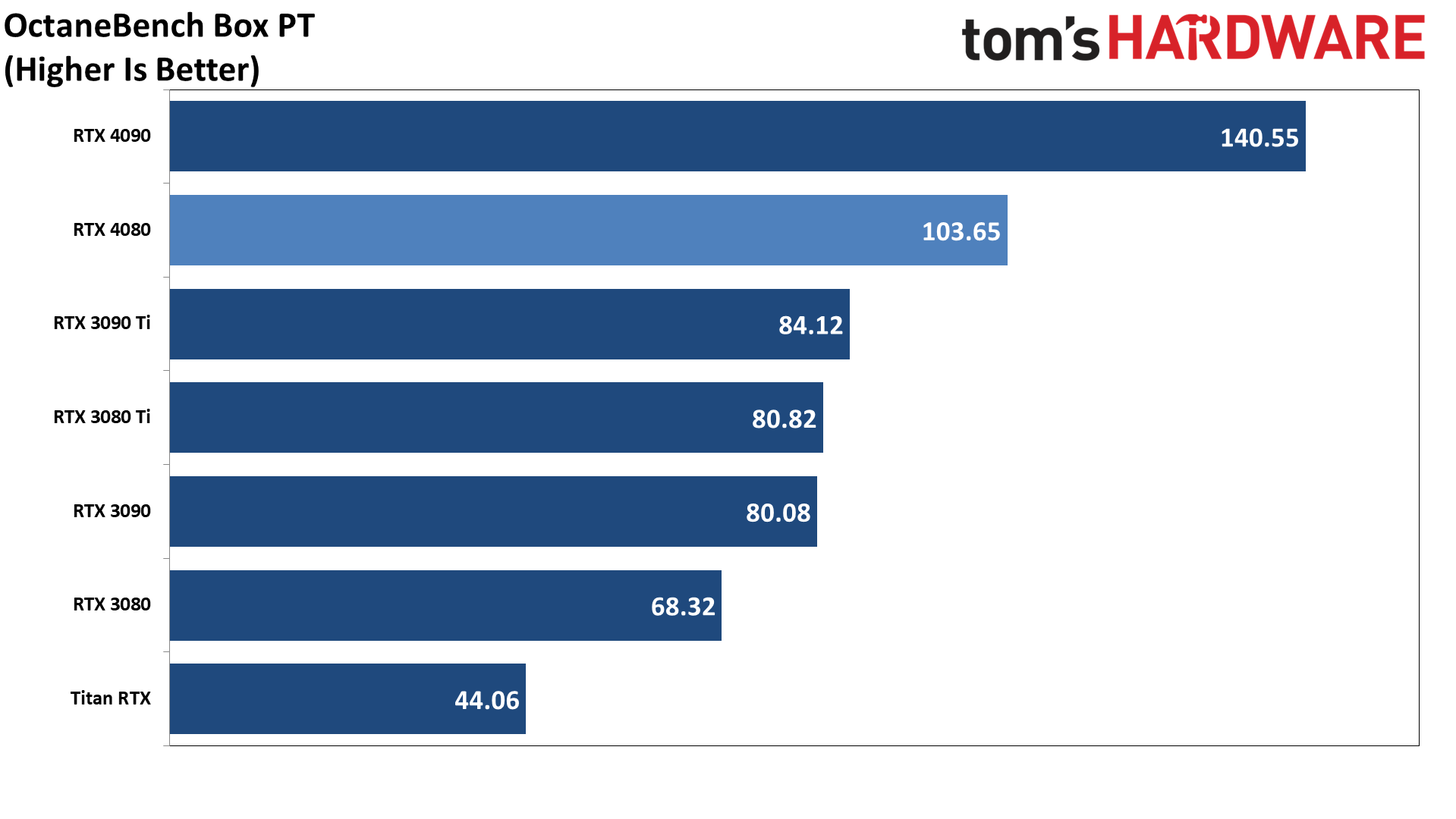

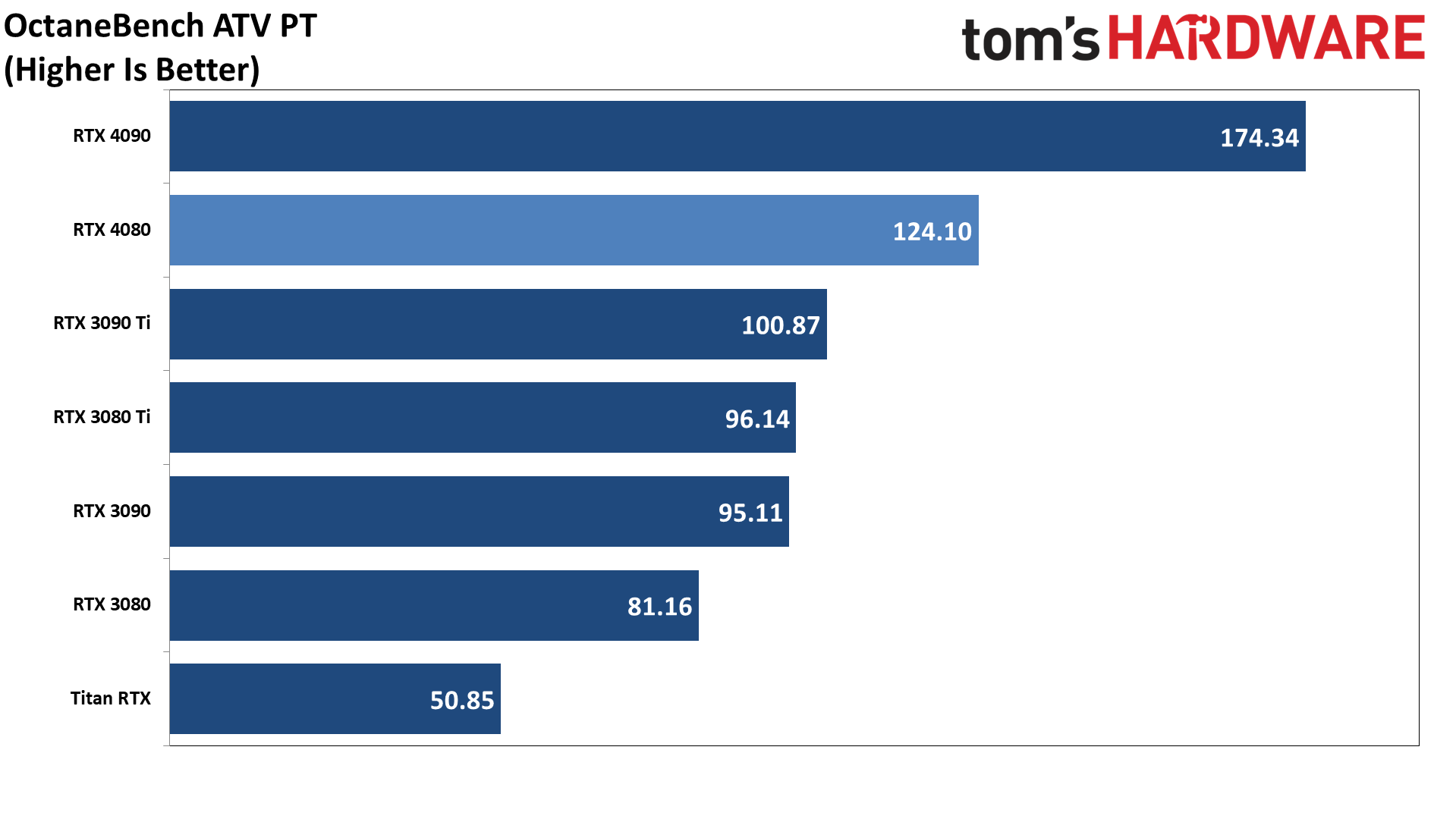

Our final two professional applications only have ray tracing hardware support for Nvidia's GPUs. We reached out to the companies to find out why that's the case, and it largely comes down to reliability and stability. For example, Octane dropped OpenCL support because the API was no longer seeing active development.

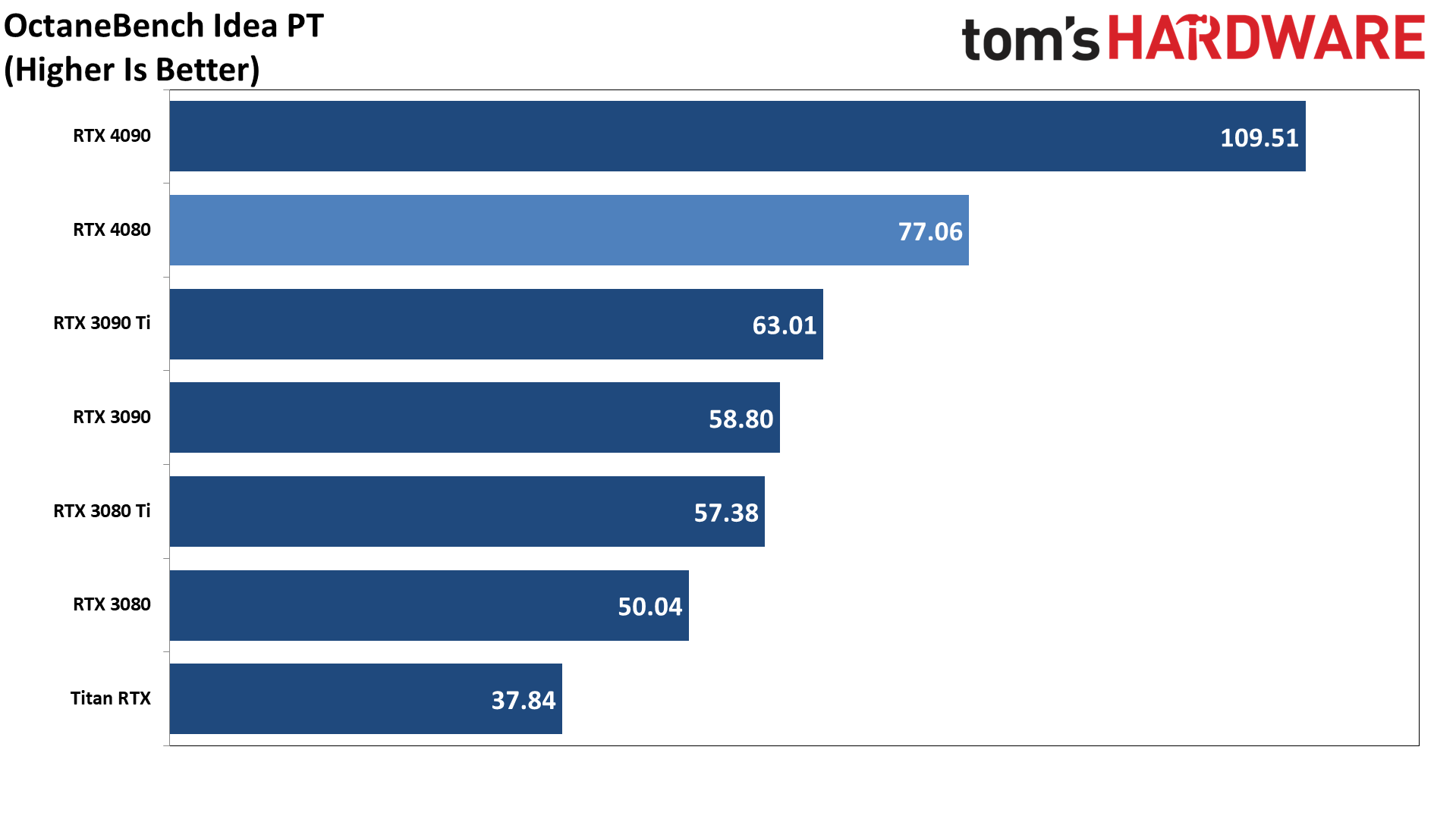

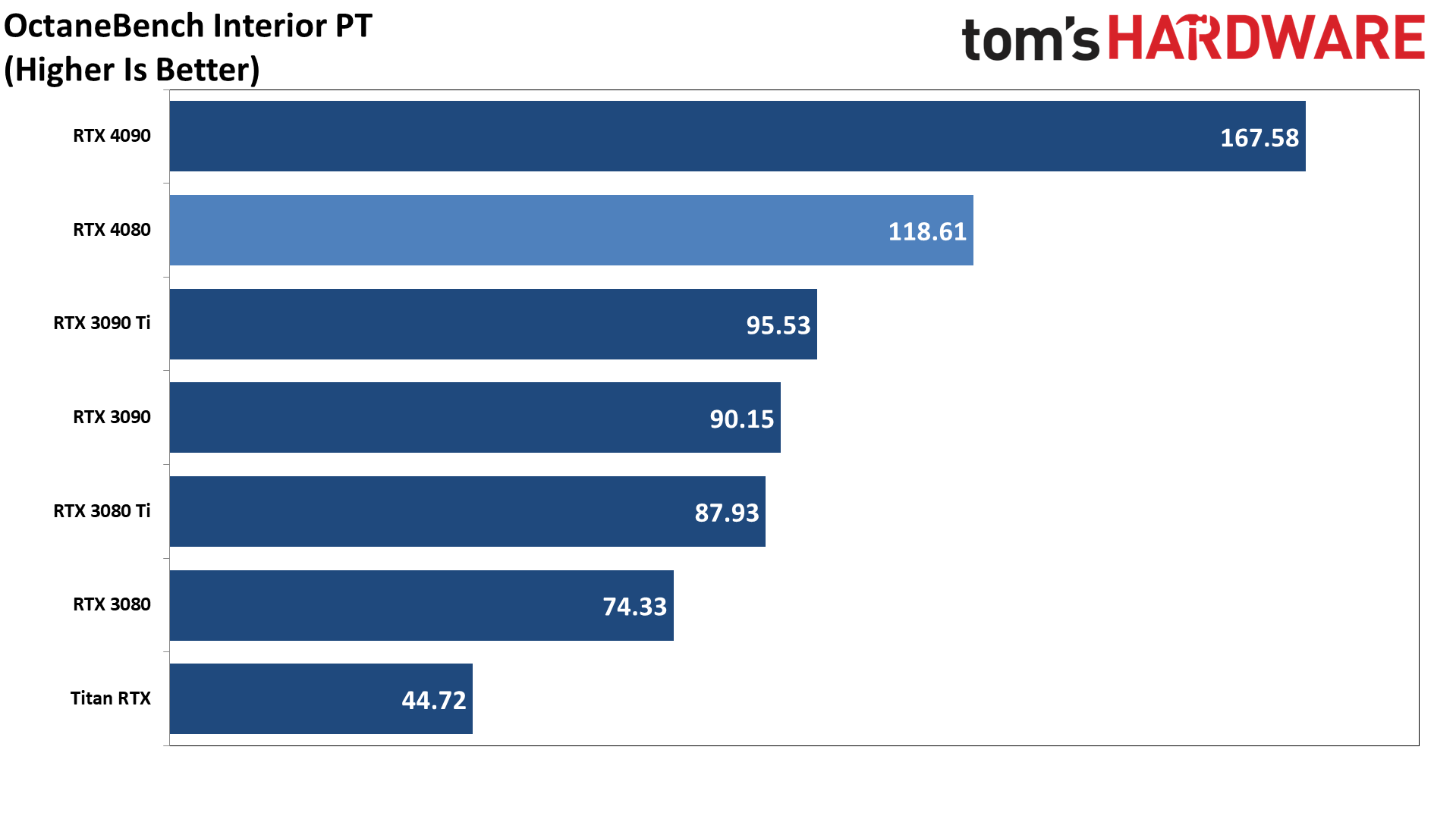

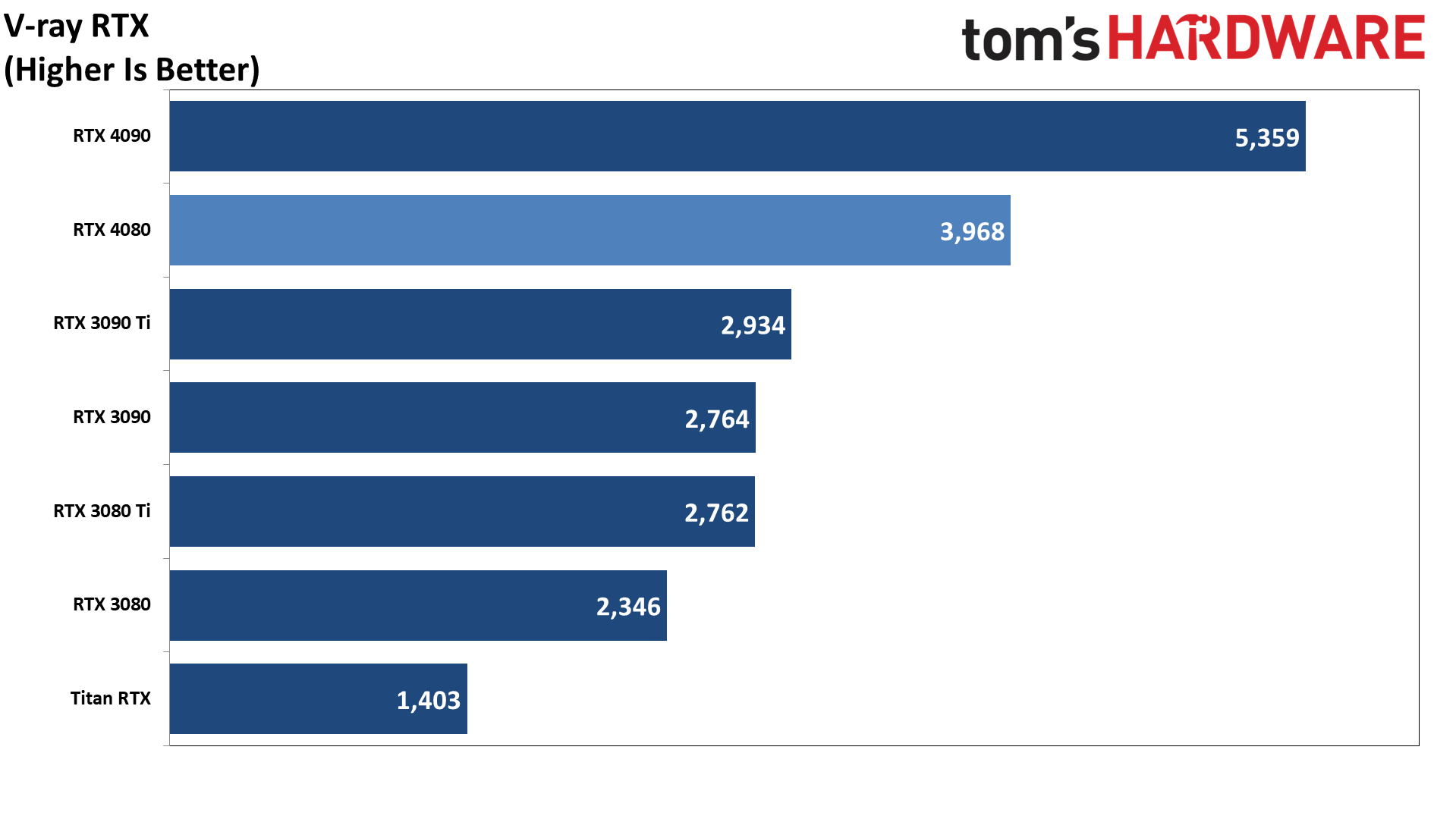

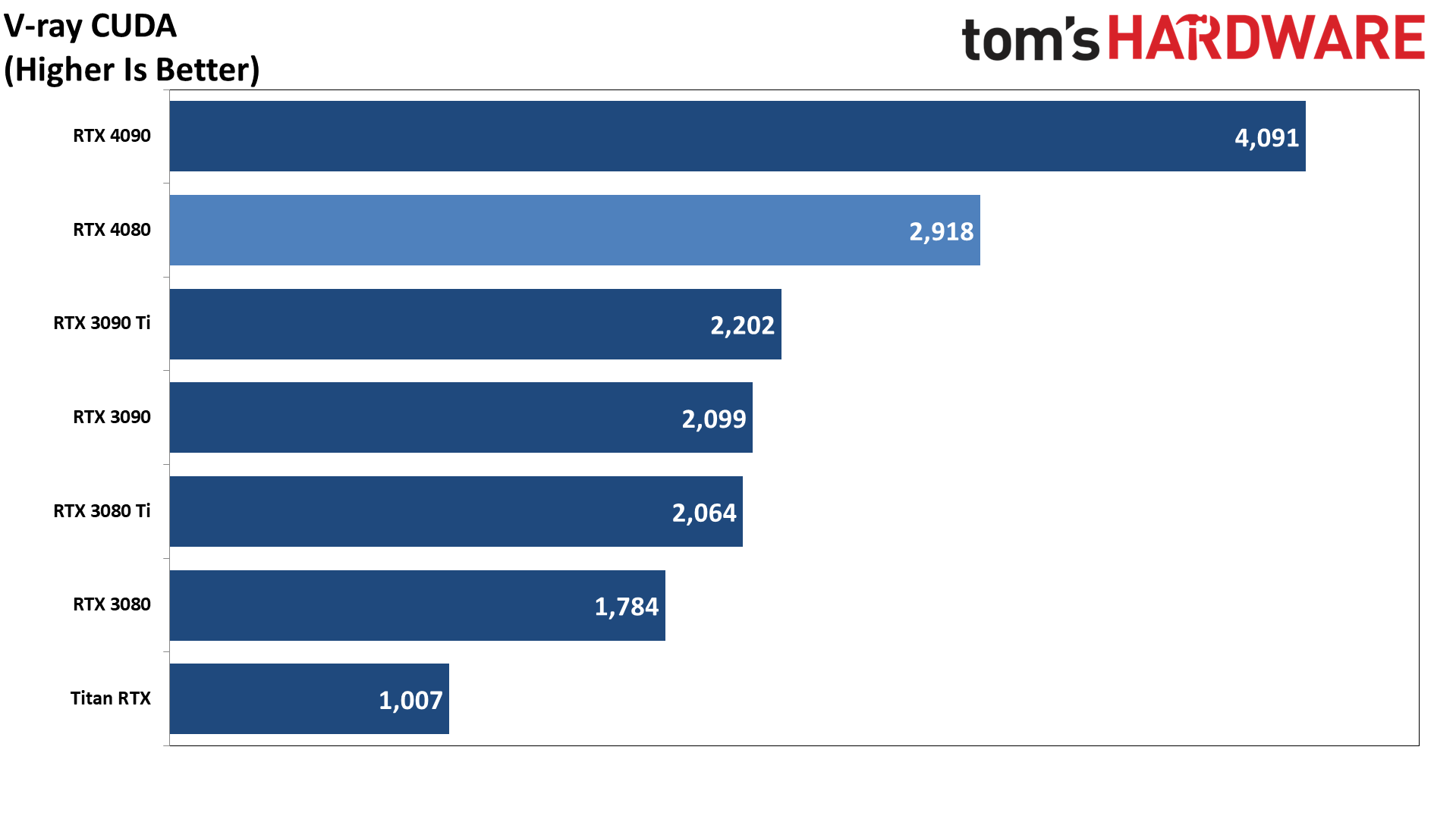

Without AMD GPUs in the mix, all we can really see is how the RTX 4080 fares against the 4090 and 30-series cards. Even though it has less memory and memory bandwidth, the 4080 still leads the 3090 Ti by nearly 30% on average in Octane and closer to 35% in V-Ray. It's also 60–70% faster than the RTX 3080. Basically, Nvidia's RTX GPUs are the gold standard in professional 3D rendering applications for a reason: Nvidia was first with hardware, and it provided and continues to provide active developer support.

[I've run out of time (again), but I'm going to try to add some machine learning benchmarks in the near future. Stay tuned…]

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

btmedic04: At $1200, this card should be DOA on the market. However people will still buy them all up because of mind share. Realistically, this should be an $800-$900 gpu.

Wisecracker:

> Nvidia GPUs also tend to be heavily favored by professional users

mmmmMehhhhh . . . .Vegas GPU compute on line one . . .

AMD's new Radeon Pro driver makes Radeon Pro W6800 faster than Nvidia's RTX A5000.

My CAD does OpenGL, too

AMD Rearchitects OpenGL Driver for a 72% Performance Uplift : Read more

:homer:

saunupe1911: People flocked to the 4090 as it's a monster but it would be entirely stupid to grab this card while the high end 3000s series exist along with the 4090.

A 3080 and up will run everything at 2K...and with high refresh rates with DLSS.

Go big or go home and let this GPU sit! Force Nvidia's hand to lower prices.

You can't have 2 halo products when there's no demand and the previous gen still exist.

Math Geek: they'll cry foul, grumble about the price and even blame retailers for the high price. but only while sitting in line to buy one.......

man how i wish folks could just get a grip on themselves and let these just sit on shelves for a couple months while Nvidia gets a much needed reality check. but alas they'll sell out in minutes just like always sigh

chalabam: Unfortunately the new batch of games is so politized that it makes buying a GPU a bad investment.

Even when they have the best graphics ever, the gameplay is not worth it.

gburke: I am one who likes to have the best to push games to the limit. And I'm usually pretty good about staying on top of current hardware. I can definitely afford it. I "clamored" to get a 3080 at launch and was lucky enough to get one at market value beating out the dreadful scalpers. But it makes no sense this time to upgrade over last gen just for gaming. So I am sitting this one out. I would be curious to know how many others out there are like me and don't see the real benefit of this new generation hardware for gaming. Honestly, 60fps at 4K on almost all my games is great for me. Not really interested in going above that.

PlaneInTheSky: Seeing how much wattage these GPU use in a loop is interesting, but it still tells me nothing regarding real-life cost.

Cloud gaming suddenly looks more attractive when I realize I won't need to pay to run a GPU at 300 watt.

The running cost of GPU should now be part of reviews imo.

Considering how much people in Europe, Japan, and South East Asia are now paying for electricity and how much these new GPU consume.

Household appliances with similar power usage, usually have their running cost discussed in reviews.

BaRoMeTrIc:

> Math Geek said: they'll cry foul, grumble about the price and even blame retailers for the high price. but only while sitting in line to buy one.......
>
> man how i wish folks could just get a grip on themselves and let these just sit on shelves for a couple months while Nvidia gets a much needed reality check. but alas they'll sell out in minutes just like always sigh

High end RTX cards have become status symbols amongst gamers.

sizzling: I’d like to see a performance per £/€/$ comparison between generations. Normally you would expect this to improve from one generation to the next but I am not seeing it. I bought my mid range 3080 at launch for £754. Seeing these are going to cost £1100-£1200 the performance per £/€/$ seems about flat on last generation. Yeah great, I can get 40-60% more performance for 50% more cost. Fairly disappointing for a new generation card. Look back at how the 3070 & 3080 smashed the performance per £/€/$ compared to a 2080Ti.