Core i7-870 Overclocking And Fixing Blown P55-Based Boards

Step 2: Examining Power Consumption

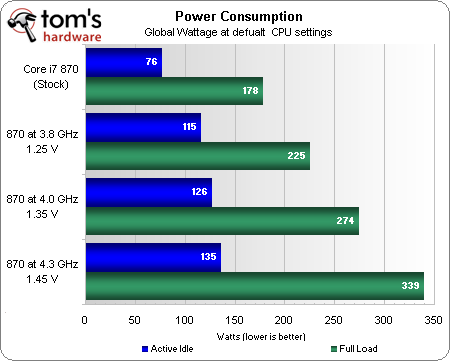

Overclocking our Core i7 processor at moderate voltage levels was enough to blow the voltage regulators of three budget/performance motherboards, and the primary purpose of this article was to define the cause of that damage. How much of a power monster is an overclocked Core i7-870?

Two important numbers are needed to get a reasonably accurate estimate of CPU power draw from the whole-system wattage numbers above. The first is Intel’s 95W maximum TDP for the stock Core i7-870 processor, and the second is power-supply efficiency, which has been independently rated at 90%. Assuming we really did reach full TDP for the stock processor by using eight Prime95 threads, the full system power (178W) minus power supply inefficiency (17.8W, or 10% of 178W) and processor power (95W) leaves 65W for “everything but the processor.” Because the GPU remained idle throughout this power-consumption test, this number is believable.

At 3.8 GHz and 1.25V, the system consumed 225W. If we subtract the 22.5W of power supply inefficiency and the 65W used by other components, we’re left with a processor that uses 137W of power. That’s getting pretty close to the 150W ASRock said its board was designed to provide.

At 4.0 GHz and 1.35V, the system consumed 274W. Subtracting the 27.4W of power supply waste and 65W used by other components leaves us with a processor power-consumption figure of 181W.

And now for the “big” number from our standard 1.45V test: at 4.3 GHz, our system consumed 339W. Subtracting 33.9W of power supply loss and 65W for other components yields a CPU power consumption number of 240W. CPU power consumption exceeds the “sane” limit of 200W even if our “other component” estimate is off by as much as 40W.
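The subtraction used in the last four paragraphs can be sketched in a few lines of Python. This is only an illustration of the arithmetic described above: the 90% power-supply efficiency, the 95W stock TDP, and the wall readings come from the text, while the function and variable names are our own, and the result still includes any losses inside the motherboard's voltage regulator.

```python
# Assumptions taken from the article text, not measured separately:
PSU_EFFICIENCY = 0.90    # independently rated power-supply efficiency
STOCK_CPU_TDP_W = 95.0   # Intel's maximum TDP for the stock Core i7-870


def dc_power(wall_watts):
    """Power left for the components after power-supply losses."""
    return wall_watts * PSU_EFFICIENCY


def other_components_power(stock_wall_watts):
    """'Everything but the processor', assuming the stock CPU draws full TDP."""
    return dc_power(stock_wall_watts) - STOCK_CPU_TDP_W


def cpu_power(wall_watts, other_watts):
    """Estimated CPU draw (regulator losses included) at a given wall reading."""
    return dc_power(wall_watts) - other_watts


# Full-load wall readings quoted in the text, in watts.
overclocked_readings = {
    "3.8 GHz @ 1.25V": 225.0,
    "4.0 GHz @ 1.35V": 274.0,
    "4.3 GHz @ 1.45V": 339.0,
}

# The article rounds the 65.2W remainder to 65W, so we do the same.
other = round(other_components_power(178.0))

for label, wall in overclocked_readings.items():
    print(f"{label}: about {cpu_power(wall, other):.1f} W")
```

Changing `PSU_EFFICIENCY` or `other` by a few points shows how sensitive the final figure is to each assumption.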

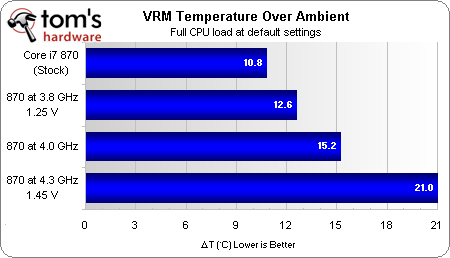

Though we seriously doubt component power consumption estimates are off by so large an amount, CPU voltage regulator efficiency is one more variable that can’t be assessed because the motherboard itself stands in our way. Regulator efficiency usually falls as temperature rises, and overclocking really pushed Asus’ voltage regulator hard.

With temperatures and stress changing the efficiency of other components, the best we can hope to do is to look at the numbers above and take an educated guess. Our data above proves that the processor consumes no more than 240W when fully overclocked, and our best estimate is that actual power consumption falls somewhere between 200W and 240W with the processor under full load at our maximum overclock.


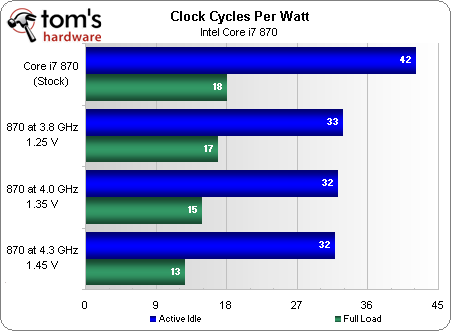

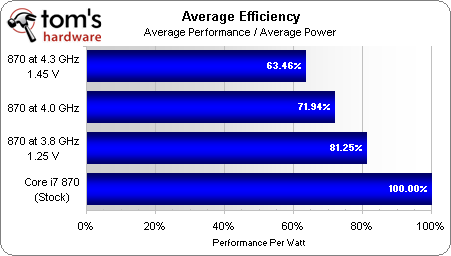

If program performance were exactly proportional to clock speed, our system would lose 7%, 18%, and 30% in full-load efficiency by overclocking at 1.25V, 1.35V, and 1.45V CPU core, respectively. On the other hand, idle efficiency only drops markedly in response to shutting off power-savings features.

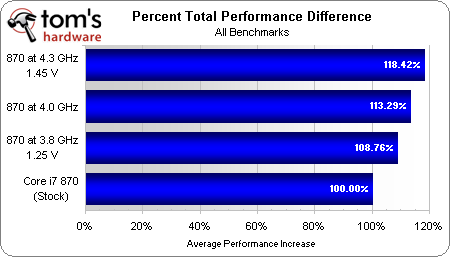

To determine actual efficiency, we have to consider the actual performance benefit from overclocking. That benefit is diluted somewhat by graphics-centric benchmarks that gained little, with our highest overclock showing a mere 18% performance boost.

The result of huge power losses with moderate performance gains is a decrease in efficiency of over one third at our highest settings.
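As a rough cross-check of that figure, relative efficiency can be treated as the performance ratio divided by the power ratio. The sketch below is an assumption about how the number could be derived, not a description of how the chart was built: it pairs the 18% performance boost with the full-load wall readings quoted earlier (178W stock, 339W at 1.45V), and the function name is ours.

```python
def relative_efficiency(perf_ratio, power_ratio):
    """Work done per watt, relative to the stock configuration (1.0 = stock)."""
    return perf_ratio / power_ratio


# Figures quoted in the article; pairing them this way is our assumption.
STOCK_WALL_W = 178.0   # full-load system power at stock settings
OC_WALL_W = 339.0      # full-load system power at 4.3 GHz and 1.45V
PERF_RATIO = 1.18      # 18% performance boost at the highest overclock

eff = relative_efficiency(PERF_RATIO, OC_WALL_W / STOCK_WALL_W)
drop_percent = (1.0 - eff) * 100.0

print(f"relative efficiency: {eff:.2f}, a drop of about {drop_percent:.0f}%")
```

Under these assumptions the drop works out to a little more than one third, in line with the statement above.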


cyberkuberiah but some of us would rather give some extra beans and go 920, and have dual pcie2.0 x16. a few extra watts doesn't matter too.

FYI: Power consumption of switching cmos silicon increases with the square of voltage, and linear with frequency. The increases shown here seem to be in line with that, rather than the stated decrease in voltage regulator efficiency (which certainly does decrease, but probably much less).

Crashman (quoting dan__g): FYI: Power consumption of switching cmos silicon increases with the square of voltage, and linear with frequency. The increases shown here seem to be in line with that, rather than the stated decrease in voltage regulator efficiency (which certainly does decrease, but probably much less).

Can you turn that into a more accurate estimate than 200W to 240W, where all that can be proven is that it's "high, but less than 240W"?

jeffunit Are your power consumption measurements of the cpu, dc power or wall socket power? If they are the latter, which I suspect they are, then you have to factor in the power supply efficiency, as 150w socket, means 150w DC.

bucifer It would be great to see how the more popular i7 860 or at least i5 750 scale with the voltage.

I don't think i7 870 is a popular choice because of its price (people would go for socket 1366)

ctbaars Thanks for article.

For me - This and previous articles have convinced me to game at stock, w/ tb+ settings on, and a high end GPU card and the i5 is most appropriate for my usage. I need to condition myself to turn off the computer esp. when no one is home.

avatar_raq Although Thomas labels Asrock as "succeeds" I will not buy their motherboards, you'll never know what else this company ignores in the bios, and do you think they would fix that issue if it weren't for THG? After how many failing boards?

tecmo34 (quoting cyberkuberiah): but some of us would rather give some extra beans and go 920 , and have dual pcie2.0 x16 . a few extra watts doesn't matter too .

I agree with you 110%... :D

Also, I would like to see the voltage scaling using the i5 750, as mentioned by bucifer

Onus A few extra watts being "used" is fine. A few extra watts being "wasted" is something else entirely.

I don't see a howling difference on these overclocks either. If I bought an i7, that probably means I'd have little reason to OC it.

While ASRock seems to be taking a "successive approximations" approach to improving their products, the ones I've bought so far have all been solid, but any OC has been mild.

And, once again (even if it isn't quite epic), MSI = FAIL.


jerreece I was glad to see this article. I was just thinking about this whole debacle this morning. :)