R600: Finally DX10 Hardware from ATI

Texture Units

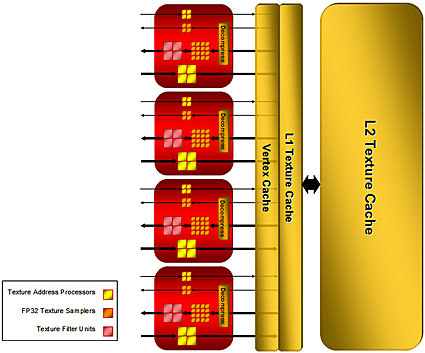

Texture Unit - Architecture


The texture units sit alongside the shader core in the block diagram. ATI has chosen four texture units for R600. Each unit can generate eight texture addresses per cycle: four are used for bilinear-filtered lookups and four for unfiltered lookups. The vertex cache can be used for vertex accesses or other structured accesses. It can even be used for displacement mapping, which will probably become more prevalent in DX10 games.

Each unit contains 20 texture samplers, for a total of 80 samplers in R600. These samplers fetch and return the data. According to ATI, it does not matter whether the data is floating point or integer. Each unit returns four filtered floating-point values per cycle, plus four unfiltered values of floating-point or any other data type per cycle. The core functionality of the 2400 and 2600 remains the same, but they cannot return as much data because they have fewer units.
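
To make the arithmetic concrete, here is a minimal sketch that multiplies the per-unit figures quoted above out to chip-wide totals. The names are hypothetical, and the split of the 20 samplers into 16 used for bilinear taps plus 4 for unfiltered lookups is only an assumption that happens to match the quoted number, not something ATI is quoted as saying here.

```python
# Per-unit figures as quoted in the text for R600.
TEXTURE_UNITS = 4
ADDRESSES_PER_UNIT = 8      # texture addresses per cycle
FILTERED_PER_UNIT = 4       # bilinear-filtered results per cycle
UNFILTERED_PER_UNIT = 4     # unfiltered results per cycle
SAMPLERS_PER_UNIT = 20

# Assumption: a bilinear result needs a 2x2 footprint, i.e. 4 texels.
TEXELS_PER_BILINEAR = 4


def samplers_implied_per_unit():
    """Samplers needed if every filtered result reads 4 texels (assumed)."""
    return FILTERED_PER_UNIT * TEXELS_PER_BILINEAR + UNFILTERED_PER_UNIT


def chip_totals(units=TEXTURE_UNITS):
    """Scale the per-unit figures to a chip with `units` texture units."""
    return {
        "samplers": units * SAMPLERS_PER_UNIT,
        "addresses_per_cycle": units * ADDRESSES_PER_UNIT,
        "filtered_per_cycle": units * FILTERED_PER_UNIT,
        "unfiltered_per_cycle": units * UNFILTERED_PER_UNIT,
    }


if __name__ == "__main__":
    print(chip_totals())
    print(samplers_implied_per_unit())
```

Passing a smaller `units` value shows how the same per-unit design scales down on parts with fewer texture units.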

Compared with previous generations, the texture caches are a bit more complicated, as they are broken up into several caches. There is a 32K L1 cache unified across all of the SIMD arrays. In comparison, the R500 series had only an 8K cache (per SIMD it is four times larger). The L1 is backed by a 256K L2 (the 2600 has a 128K L2 and the 2400 has no L2). The secondary cache accommodates very large data structures such as fat pixels or very large textures. The aim is to reduce the memory bandwidth consumed by texturing.

In concert with the texture cache subsystem, there is also a vertex cache system. It is called a vertex cache because that is one of its primary uses, but it can be used for unfiltered texture lookups as well. It is commonly used for displacement mapping, structured lookups into arrays, and render-to-vertex-array work where data is fetched back. For all intents and purposes, it is a structured linear cache working in parallel. How much data actually passes through any of these caches matters less than whether resources are available when work needs to be done, so the way access to the caches is arbitrated is the more crucial factor. The HD 2400 actually fetches its vertices through the texture cache. The hardware treats all of these units as a general resource and relies on the compiler to convert the shader code accordingly. The key to this architecture is how well the compiler converts code, which will determine the throughput you actually end up with.

In tasks such as render to texture, it is common to create a texture and then use it immediately. That can cause problems, because the texture must finish being drawn before it is used. On older processors (ATI's own and current Nvidia parts), the chip would idle until the texture had finished rendering before moving on to the next command, at a cost in performance. ATI has changed this on the 2000 series. As mentioned before, this checking has been moved down into the hardware, so when textures are rendered there is a coherency check within the chip across the texture units and the raster back ends. The driver no longer has to care. It just sends commands down to the chip and keeps it full. The processor itself handles all synchronization between the units.

Texture Unit - Features

Given the 6 TB of bandwidth that is available inside the processor, ATI wanted to make 64-bit pixels and HDR rendering in general "first class citizens." It was decided that the rate for 8-bit INT, 10-bit INT, 16-bit INT, and float16 would all be the same. By comparison, the X1K series supported float formats only unfiltered, with filtering done in the shader: the LOD computation came first, then four fetches for the bilinear footprint plus the weighted sum, which could take six to seven cycles. 128-bit formats are fully supported for filtering, but the rate is cut in half for 32x4 (128-bit) textures, as that becomes a bandwidth-limited case.
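
As an illustration of why shader-side filtering costs several cycles, the sketch below performs a bilinear filter by hand: four unfiltered fetches followed by a weighted sum. It is a simplified model with hypothetical function names, it uses a single-channel texture stored as a list of rows, and it skips the LOD computation entirely, so it does not reproduce any actual X1K shader.

```python
import math


def fetch(texture, x, y):
    """Unfiltered (point) fetch with clamp-to-edge addressing."""
    height = len(texture)
    width = len(texture[0])
    x = min(max(x, 0), width - 1)
    y = min(max(y, 0), height - 1)
    return texture[y][x]


def bilinear(texture, u, v):
    """Bilinear filter at texel-space (u, v); texel centers sit at n + 0.5."""
    x = u - 0.5
    y = v - 0.5
    x0 = math.floor(x)
    y0 = math.floor(y)
    fx = x - x0  # horizontal blend weight
    fy = y - y0  # vertical blend weight

    # Four unfiltered fetches covering the 2x2 footprint.
    t00 = fetch(texture, x0, y0)
    t10 = fetch(texture, x0 + 1, y0)
    t01 = fetch(texture, x0, y0 + 1)
    t11 = fetch(texture, x0 + 1, y0 + 1)

    # Weighted sum: blend horizontally, then vertically.
    top = t00 * (1.0 - fx) + t10 * fx
    bottom = t01 * (1.0 - fx) + t11 * fx
    return top * (1.0 - fy) + bottom * fy


if __name__ == "__main__":
    tex = [[0.0, 1.0], [2.0, 3.0]]
    print(bilinear(tex, 1.0, 1.0))  # sample midway between all four texels
```

When the texture unit filters a format natively, all of this work collapses into a single filtered fetch, which is the benefit the paragraph above describes.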


Trilinear and anisotropic filtering are supported on every format, and as in previous driver revisions, the high-quality AF mode is the default. Depth stencil textures (DST) with percentage closer filtering (PCF) have been in Nvidia's hardware for some time but were officially introduced in DX10, so ATI included them with the R600 series. This addition translates into potentially faster rendering for effects like soft shadows.
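
For readers unfamiliar with percentage closer filtering, the following sketch shows the idea in software: each shadow-map depth is compared against the reference depth first, and the pass/fail results are then averaged. The function name, the box-shaped kernel and the clamp-to-edge addressing are assumptions made for illustration; hardware PCF typically weights the comparison results rather than averaging them equally.

```python
def pcf_shadow(shadow_map, x, y, ref_depth, radius=1):
    """Return the lit fraction (0.0 to 1.0) around texel (x, y).

    shadow_map is a list of rows of stored depths. A tap counts as lit
    when the reference depth is not behind the stored depth.
    """
    height = len(shadow_map)
    width = len(shadow_map[0])
    lit = 0
    taps = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            sx = min(max(x + dx, 0), width - 1)   # clamp to edge
            sy = min(max(y + dy, 0), height - 1)
            if ref_depth <= shadow_map[sy][sx]:
                lit += 1
            taps += 1
    return lit / taps


if __name__ == "__main__":
    # Left column holds a nearby occluder (depth 0.2); the rest is far away.
    shadow_map = [
        [0.2, 1.0, 1.0],
        [0.2, 1.0, 1.0],
        [0.2, 1.0, 1.0],
    ]
    print(pcf_shadow(shadow_map, 1, 1, ref_depth=0.5))
```

Averaging the comparison results, rather than the depths themselves, is what produces the graded edge used for soft shadows.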

ATI has also introduced a new standard format. It is a 32-bit HDR shared-exponent texture format (9:9:9:5) in which the R, G and B values are normalized and a single exponent is shared by all three. The texture unit supports high-resolution textures (8192x8192) and can perform two texture fetches per cycle (one filtered and one unfiltered), with the option of getting four unfiltered fetches in place of one filtered fetch using Fetch4.
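
A short sketch may help show how a 9:9:9:5 shared-exponent texel turns back into three floating-point values. The bit layout (red in the low bits, exponent in the top five), the exponent bias of 15 and the absence of an implicit leading one are assumptions taken from the common RGB9E5 convention used by DX10 and OpenGL; the article itself only gives the 9:9:9:5 split.

```python
MANTISSA_BITS = 9    # bits per colour channel
EXPONENT_BITS = 5    # bits for the shared exponent
EXPONENT_BIAS = 15   # assumed bias, per the common RGB9E5 convention


def decode_rgb9e5(packed):
    """Unpack a 32-bit shared-exponent texel into an (r, g, b) float tuple."""
    mantissa_mask = (1 << MANTISSA_BITS) - 1
    exponent_mask = (1 << EXPONENT_BITS) - 1

    r = packed & mantissa_mask
    g = (packed >> MANTISSA_BITS) & mantissa_mask
    b = (packed >> (2 * MANTISSA_BITS)) & mantissa_mask
    e = (packed >> (3 * MANTISSA_BITS)) & exponent_mask

    # One scale factor applies to all three channels.
    scale = 2.0 ** (e - EXPONENT_BIAS - MANTISSA_BITS)
    return (r * scale, g * scale, b * scale)


if __name__ == "__main__":
    # Exponent 24 gives a scale of 2**0, so the mantissas pass through as-is.
    texel = 1 | (2 << 9) | (3 << 18) | (24 << 27)
    print(decode_rgb9e5(texel))
```

Because the exponent is shared, the format gives a wide dynamic range in 32 bits, at the cost of precision in channels that are much darker than the brightest one.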