R600: Finally DX10 Hardware from ATI

SIMD Arrays

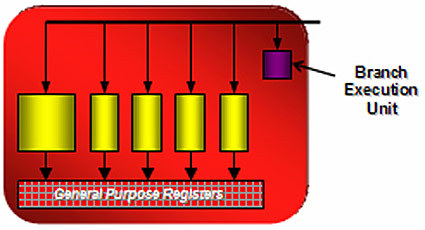

As mentioned before, ATI uses a very long instruction word (VLIW) inside its arrays. The company takes this approach because each array is made up of stream processors, each of which has five ALUs, a branch execution unit, register files and some other items. The register file is the entire group of general purpose registers (GPRs). Overall, approximately 6 TB/s of bandwidth is available between the SPs and the GPRs. Each read or write is 32 bits wide, and these can be combined to form 64-bit and 128-bit accesses.

To make this work effectively, each ALU has to operate independently of the others in a superscalar fashion, since each receives its own instruction. Put together, these instructions look like one large word: each SIMD receives one of these words, and each element within it gets its own small set of instructions. Arbitration for the SIMDs and fetches from the caches happen in parallel, and instructions are co-issued every cycle (this was discussed under sequencing).
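To make the co-issue idea concrete, here is a minimal Python sketch of how a compiler might pack independent scalar operations into five-slot instruction words. The `Op` type, the register names and the greedy packing rule are illustrative assumptions, not ATI's actual compiler or instruction format; the sketch also assumes each register is written only once, so only read-after-write dependencies are tracked.

```python
from typing import List, NamedTuple, Tuple


class Op(NamedTuple):
    dest: str              # register this scalar op writes (hypothetical naming)
    opcode: str            # hypothetical mnemonic, e.g. "mul", "add", "mad"
    srcs: Tuple[str, ...]  # registers this op reads


def pack_bundles(ops: List[Op], slots: int = 5) -> List[List[Op]]:
    """Greedily pack scalar ops into VLIW words of at most `slots` ops.

    Assumes each register is written exactly once (SSA form), so only
    read-after-write dependencies matter. An op cannot join a word if it
    reads a value produced in the same word or by an op not yet scheduled.
    """
    pending = list(ops)
    bundles = []
    while pending:
        bundle, leftover, blocked = [], [], set()
        for op in pending:
            if len(bundle) < slots and not (set(op.srcs) & blocked):
                bundle.append(op)      # co-issued in this word
            else:
                leftover.append(op)    # waits for a later word
            blocked.add(op.dest)       # result not visible until the next word
        bundles.append(bundle)
        pending = leftover
    return bundles


program = [
    Op("r0", "mul", ("a", "b")),
    Op("r1", "mul", ("c", "d")),
    Op("r2", "add", ("e", "f")),
    Op("r3", "add", ("r0", "r1")),
    Op("r4", "mad", ("r3", "r2", "g")),
]
for i, word in enumerate(pack_bundles(program)):
    print(i, [op.dest for op in word])
```

The three independent operations share the first word, while the dependent add and MADD each have to wait for the word after the one that produces their inputs. Real shader compilers schedule far more cleverly; the greedy pass only shows why independent scalar slots are easier to fill than a fixed vector format.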

For all intents and purposes, ATI decoupled the four-component vector into four independent scalar units. In the previous design, ATI used the five ALUs to handle a Vec4+1, that is, a four-component vector plus a scalar. ATI kept the same arrangement but made the units superscalar and independent of each other, so they no longer operate in a vector format. They are organized as five scalar units, each of which can perform a floating-point MADD (multiply-add) as well as integer operations (adds and compares), because both kinds of data flow through them under DX10's Shader Model 4.0 (SM 4.0).
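The benefit of splitting the Vec4+1 into five scalar units can be estimated with a simple slot-counting model. The sketch below assumes all instructions are independent, that each takes one cycle, and that the older layout issues one vector instruction plus one scalar instruction per cycle; these are simplifying assumptions, so treat the result as a best case for the superscalar layout rather than measured behavior.

```python
import math


def vec4_plus1_cycles(widths):
    """Cycles for the older Vec4+1 layout: per cycle, the Vec4 unit runs one
    instruction of any width (1-4 components, unused lanes sit idle) and the
    +1 unit runs one scalar instruction."""
    if any(not 1 <= w <= 4 for w in widths):
        raise ValueError("instruction widths must be 1-4 components")
    vector = sum(1 for w in widths if w > 1)
    scalar = sum(1 for w in widths if w == 1)
    # Scalars pair with vector ops first; leftover scalars fill both units.
    return max(vector, math.ceil((vector + scalar) / 2))


def five_scalar_cycles(widths, alus=5):
    """Cycles when every component becomes its own scalar op and any five
    independent scalar ops can be co-issued."""
    return math.ceil(sum(widths) / alus)


def utilization(widths, cycles, alus=5):
    """Fraction of ALU slots doing useful work."""
    return sum(widths) / (cycles * alus)


mix = [3, 3, 3, 1]  # hypothetical mix: three vec3 operations and one scalar
old, new = vec4_plus1_cycles(mix), five_scalar_cycles(mix)
print(old, round(utilization(mix, old), 2))
print(new, round(utilization(mix, new), 2))
```

For this mix, the Vec4+1 model needs three cycles and leaves a lane idle on every vec3 operation (10 of 15 slots busy), while the scalar model packs all ten components into two full cycles.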

The larger unit (AKA "the fat one") handles some of the extra work that ATI did not want to replicate across all five. These operations include float-to-fixed conversions as well as transcendental functions such as sin, cos, log and exp, along with square root and other special calculations. This is very much like the special function unit (SFU) in Nvidia's G80, except that the fat unit can also execute regular operations, which is what it will be doing most of the time. These special calculations are needed far less often than the core functions, so ATI felt that one unit out of five would be sufficient. If you look at typical ALU instruction mixes, transcendentals are not very common; MADD-type operations are still the most common on the vertex side. The pixel side is different: transcendentals are needed for normalizations, which can hurt performance. Typically, though, their cost can be amortized over many other instructions, minimizing the impact. ATI stated that transcendental throughput has not been an issue to date and does not expect it to become one in the foreseeable future.
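Why one fat unit out of five is usually enough can be shown with a quick bound. The function below assumes independent operations and one cycle per operation, and uses a reciprocal square root (as in a normalization) as the example transcendental; it is a back-of-the-envelope model, not a description of ATI's scheduler.

```python
import math


def min_issue_cycles(transcendentals, simple_ops, alus=5):
    """Minimum issue cycles for independent scalar ops when only one ALU
    (the 'fat' one) can run transcendentals, but all ALUs, including the
    fat one, can run simple ops such as MADD."""
    # Bound 1: transcendentals go through the single fat unit, one per cycle.
    # Bound 2: every op needs one of the `alus` slots.
    return max(transcendentals, math.ceil((transcendentals + simple_ops) / alus))


print(min_issue_cycles(0, 19))   # MADDs alone
print(min_issue_cycles(1, 19))   # one rsq added to the same MADDs
print(min_issue_cycles(10, 10))  # transcendental-heavy code
```

The first two cases both take four cycles, so the single rsq is absorbed for free, while the third takes ten cycles because it is limited by the one special unit. As long as transcendentals make up at most about one op in five, the other four slots hide the fat unit's extra duty.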

Once again, ATI includes a branch execution unit, because there are two kinds of branches. The heavy-duty kind means the whole SIMD array has to branch to an entirely new part of the code; that is handled by the sequencers, the arbiters and the ultra-threaded dispatch processor (controller). In other cases, it makes more sense to skip one or two instructions through predication instead of branching. This predication is handled directly by the ALU: it examines the results of operations and sets flags that kill writes, skip instructions and so on, so the arbiters and sequencers do not have to get involved. All of the ALUs have logic to handle this form of predication.
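Predication can be illustrated on a handful of SIMD lanes. The sketch below evaluates `if (x > 0) y = x * 2` without a jump: every lane computes the result and the write is killed where the flag is false. The `handled_by` helper and its two-instruction threshold are hypothetical, meant only to mirror the short-skip versus heavy-branch split described above.

```python
def predicated_double(x, y):
    """Per-lane predication for `if (x > 0) y = x * 2` across one SIMD's lanes.
    The instruction stream never jumps; the write is masked instead."""
    pred = [xi > 0 for xi in x]        # compare sets a per-lane flag
    candidate = [xi * 2 for xi in x]   # every lane executes the multiply
    # Write is killed on lanes whose flag is false, so the old y survives.
    return [c if p else yi for c, p, yi in zip(candidate, pred, y)]


def handled_by(skip_length, threshold=2):
    """Hypothetical rule of thumb: skipping one or two instructions is cheaper
    to predicate in the ALU than to hand to the sequencer as a real branch."""
    return "ALU predication" if skip_length <= threshold else "sequencer branch"


print(predicated_double([1, -2, 3, 0], [9, 9, 9, 9]))
print(handled_by(1), "/", handled_by(40))
```

Lanes with `x <= 0` keep their previous value of 9, even though they spent the cycle computing a discarded result; that wasted work is the price of avoiding a branch.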

Attached to the ALUs is a large amount of local storage: the GPRs (general purpose registers). Although the scale of the block diagram makes the ALUs look larger than the registers, it is actually the opposite. The GPRs hold the temporary variables of all sleeping threads, such as the results of computations and the source data for those computations.
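A simple division shows how per-thread register usage limits the number of threads that can sleep in the GPRs while others run. The register-file size below is a hypothetical round number, not an R600 specification, and the optional cap stands in for any hardware limit on threads in flight.

```python
def sleeping_threads(register_file_size, regs_per_thread, thread_cap=None):
    """How many threads can keep their temporaries resident in the GPRs.
    Both sizes use the same unit (e.g. 128-bit registers)."""
    threads = register_file_size // regs_per_thread
    return threads if thread_cap is None else min(threads, thread_cap)


# Hypothetical register file of 16384 entries, not a real R600 figure.
for regs in (4, 8, 16, 32):
    print(regs, sleeping_threads(16384, regs))
```

Halving a shader's register count doubles the number of threads that can be parked, giving the hardware more work to switch to while other threads wait on long-latency fetches.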

It is a unified shader, so it should not care which shader type you are running. Vertex, geometry and pixel shaders are treated exactly the same, so all of the resources are available to you every cycle. Whether it is doing vertex texturing with displacement maps or some oddball lookup in a geometry shader, the resources are the same. There should not be any advantage to being in a vertex, geometry or pixel shader, because they are all just instructions that need to be executed regardless of the type of shading. Whether that holds up in practice is something we are trying to test.
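The practical appeal of a unified design is that any idle unit can pick up any shader type. The sketch below compares a hypothetical four-unit unified array with a hypothetical split design (one vertex unit, three pixel units), assuming each batch takes one cycle on one unit; none of these numbers describe real hardware.

```python
import math


def unified_cycles(batches, units):
    """Any unit can run any shader type, so only the total work matters."""
    return math.ceil(sum(batches.values()) / units)


def split_cycles(batches, units_by_type):
    """Each shader type is confined to its own dedicated units."""
    return max(math.ceil(n / units_by_type[kind]) for kind, n in batches.items())


work = {"vertex": 6, "pixel": 6}  # hypothetical frame, one cycle per batch
print(unified_cycles(work, 4))
print(split_cycles(work, {"vertex": 1, "pixel": 3}))
```

With the same total work, the unified array finishes in three cycles, while the split design needs six because it waits on its single vertex unit as the pixel units sit idle.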


With new hardware come new ideas on how to use it, and developers are going to start doing things that look strange compared to what they did in the past. One example we saw was tessellation driven by a displacement map to create a complex, high-geometry environment, as in the new "Ruby: Whiteout" demo. With DX10-capable hardware now available from two vendors, we should expect an explosion of new ideas given the firepower on hand.