R600: Finally DX10 Hardware from ATI

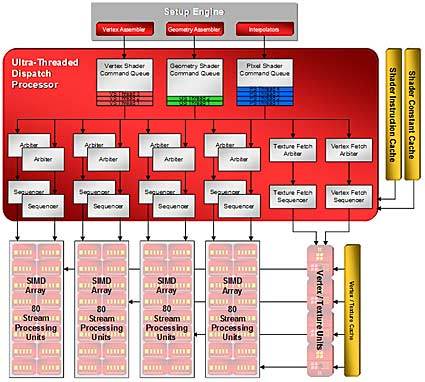

Ultra-Threaded Dispatch Processor

Arbitration

This piece of hardware was first introduced with the R500 series processors in the Radeon X1000 series graphics cards. It was introduced to keep many threads of instructions in flight at once to maximize utilization. The logic's job is to keep the shader as busy as possible. More important than just being busy, the shader must do the right kind of work and finish that work as quickly as possible.

The setup engine waits to send data into the shader core from the three queues for vertex, geometry or pixel shading. There is an arbitration process that occurs to figure out the best piece of data to work on when a slot opens up. This arbitration is a dynamic process based on what the shader is currently working on, what it has been doing, how much data there is to be processed and various other parameters.
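
As a rough illustration of this kind of dynamic choice, the sketch below picks which of the three queues feeds the next open slot. The scoring rule (pending work minus work of the same type already in flight) and the names `pick_next_queue`, `pending` and `in_flight` are assumptions made up for this example; the article does not disclose ATI's actual heuristic.

```python
def pick_next_queue(pending, in_flight):
    """Choose which queue should feed the next open shader slot.

    pending:   dict mapping a work type to the number of items waiting
    in_flight: dict mapping a work type to the number of threads of that
               type currently inside the shader

    Hypothetical heuristic: favor the type with the most waiting work
    relative to what the shader is already processing.
    """
    candidates = [kind for kind, count in pending.items() if count > 0]
    if not candidates:
        return None  # nothing is waiting in any queue
    return max(candidates, key=lambda kind: pending[kind] - in_flight.get(kind, 0))


# Pixel work dominates the queues, but the shader is already saturated
# with pixel threads, so this toy rule picks vertex work instead.
choice = pick_next_queue(
    pending={"vertex": 10, "geometry": 0, "pixel": 40},
    in_flight={"vertex": 2, "pixel": 38},
)
```

A real arbiter would weigh many more inputs, as the text notes; the point here is only that the decision depends on both queue depth and current shader load.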

Once inside the shader, one of two things happens: threads are either executed or "slept" until they can be worked on. There are various kinds of threads working in the shader, and while they sleep, they go into their own queue. Beyond that there is even more arbitration: the logic has to figure out when to wake sleeping threads and in what order to wake them.
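
The sleep-and-wake idea can be sketched with a small priority queue. This is a simplified model, not the hardware's policy: it assumes each sleeping thread knows the cycle at which its data will be ready (`ready_at`) and that threads wake in order of readiness. The `SleepQueue` class and its methods are hypothetical names for this example.

```python
import heapq


class SleepQueue:
    """Toy model of a queue of sleeping threads, ordered by readiness."""

    def __init__(self):
        self._heap = []  # entries are (ready_at, thread_id)

    def sleep(self, thread_id, ready_at):
        """Park a thread until the given cycle."""
        heapq.heappush(self._heap, (ready_at, thread_id))

    def wake_ready(self, now):
        """Return the threads whose wait has ended, earliest first."""
        woken = []
        while self._heap and self._heap[0][0] <= now:
            woken.append(heapq.heappop(self._heap)[1])
        return woken


queue = SleepQueue()
queue.sleep("a", ready_at=5)
queue.sleep("b", ready_at=3)
queue.sleep("c", ready_at=9)
first = queue.wake_ready(now=4)   # only "b" is ready by cycle 4
second = queue.wake_ready(now=9)  # "a" and "c" are ready by cycle 9
```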

In conjunction with thread arbitration, there needs to be resource arbitration. With a limited number of resources such as SIMD arrays and caches that threads need to work on, these resources need to be prioritized for specific threads. Threads want to work on specific resources, but have to take turns. For example, if a thread wants to work on an ALU, it will wait to win arbitration so it can work on the next one. If it wants to do a texture fetch, it needs to wait for the next texture fetch resource.
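
The turn-taking described above can be modeled as one arbiter per shared resource. The sketch assumes a simple first-come, first-served policy and a fixed number of grants per cycle; both are assumptions for illustration, since the actual hardware prioritizes dynamically. `ResourceArbiter` is a hypothetical name.

```python
from collections import deque


class ResourceArbiter:
    """Toy arbiter for one shared resource (for example ALU or texture fetch)."""

    def __init__(self, slots_per_cycle):
        self.slots_per_cycle = slots_per_cycle
        self.waiting = deque()  # thread ids, oldest request first

    def request(self, thread_id):
        """A thread asks for a turn on this resource."""
        self.waiting.append(thread_id)

    def grant(self):
        """Hand out this cycle's slots and return the winning thread ids."""
        winners = []
        while self.waiting and len(winners) < self.slots_per_cycle:
            winners.append(self.waiting.popleft())
        return winners


alu = ResourceArbiter(slots_per_cycle=1)
tex = ResourceArbiter(slots_per_cycle=1)
alu.request("t0")
alu.request("t1")
tex.request("t2")

cycle1 = (alu.grant(), tex.grant())  # t0 wins the ALU, t2 wins the texture unit
cycle2 = (alu.grant(), tex.grant())  # t1 gets the ALU on the next cycle
```

Threads waiting on different resources do not block each other; only threads competing for the same resource have to wait their turn.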

"[It is] a very complex system," Demers said. "It is more complex than anything we have done in the past."

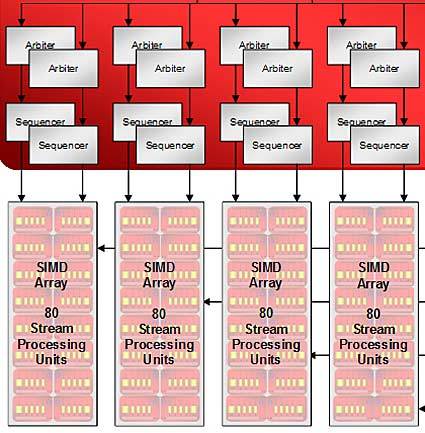

From the diagram above, you can see that there are two arbiters per SIMD array. Threads are submitted on alternating cycles, so there are two threads at each SIMD array at all times and the array can choose which one to work on. When one thread finishes, the array receives another thread to arbitrate and complete. The concept is to keep each SIMD array as busy as possible.

The flow passes into the SIMD arrays working on alternating clock cycles. This can be demonstrated as clock 1 - thread 1, clock 2 - thread 2, clock 3 - thread 1, clock 4 - thread 2, etc., until each is finished. The setup engine creates threads and groups them together into blocks of 64 items. The vertex assemblers will group 64 items and send them off as a single block. It is the same for the scan converter (rasterizer) and geometry assembler.
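
Both ideas in this paragraph, grouping work into blocks of 64 and alternating two threads clock by clock, are easy to sketch. The function names are hypothetical, and the model makes one simplifying assumption: when one of the two threads finishes, the other simply runs every clock, whereas the real hardware would be handed a replacement thread.

```python
from itertools import islice

BLOCK_SIZE = 64  # block size given in the article


def group_into_blocks(items, block_size=BLOCK_SIZE):
    """Yield successive lists of up to block_size items."""
    iterator = iter(items)
    while True:
        block = list(islice(iterator, block_size))
        if not block:
            return
        yield block


def interleave_two_threads(len_a, len_b):
    """Return which thread (1 or 2) issues on each clock.

    len_a and len_b are the number of clocks each thread needs.
    """
    remaining = {1: len_a, 2: len_b}
    schedule = []
    current = 1
    while remaining[1] or remaining[2]:
        if remaining[current] == 0:
            current = 3 - current  # this thread is done, use the other one
        schedule.append(current)
        remaining[current] -= 1
        current = 3 - current  # alternate on the next clock
    return schedule


block_sizes = [len(block) for block in group_into_blocks(range(130))]
schedule = interleave_two_threads(2, 2)  # clock 1 - thread 1, clock 2 - thread 2, ...
```

With 130 items the last block is only partly filled, which is why the helper yields "up to" 64 items per block.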


There are eight arbiters for ALU type operations, arbiters for the vertex and texture caches as well as arbiters for a few other things that are not shown in the block diagrams. To clarify what types of things need arbitration, Demers stated that "every shared resource has to have arbitration."

Demers said: "Arbitration for ALU occurs in pairs of threads, though, from an overall perspective, it doesn't really matter; we simply interleave 2 threads in ALUs all the time, to maximize their usage. There is arbitration for every resource, which does mean texture requests, export out of the shader (i.e. completed threads), requests to the unfiltered cache, requests for the memory read and writes, requests for interpolation and access to the shader. Those are the main ones that come to mind. The whole point is to make sure that all these threads of work can have access to all the needed resources and to keep these resources as busy as possible. Sequencing occurs above and beyond that, to sequence the data through the resource."