AMD Radeon HD 7970 GHz Edition Review: Give Me Back That Crown!

Overclocking With PowerTune

We chose Crysis 2 for our overclocking tests. In our experience, this title is extremely sensitive to variations in GPU frequency and reacts badly when a graphics card is running near its stability limit (even more so than FurMark).

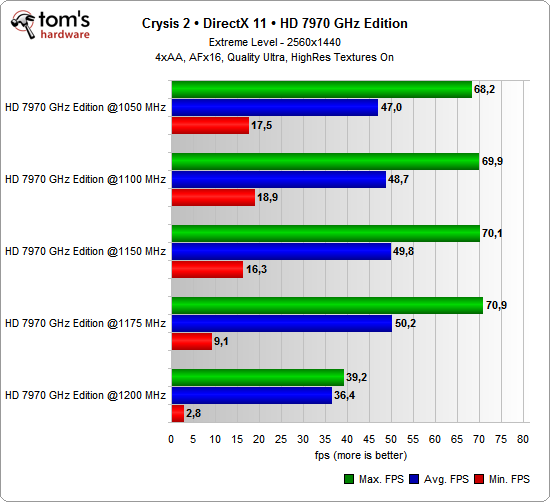

We set both the Radeon HD 7970 and the Radeon HD 7970 GHz Edition to 1050, 1100, 1150, and 1175 MHz. We were unable to go higher than 1175 MHz on either of our GHz Edition samples without encountering serious visual artifacts.

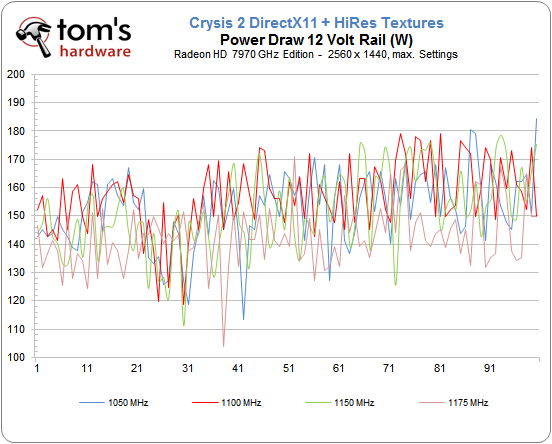

First, let’s compare power consumption across the four runs at each frequency setting.

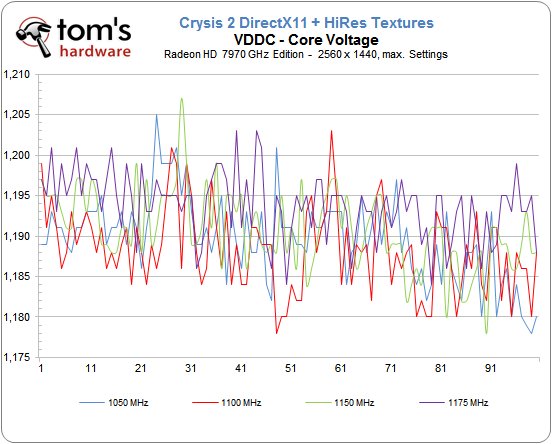

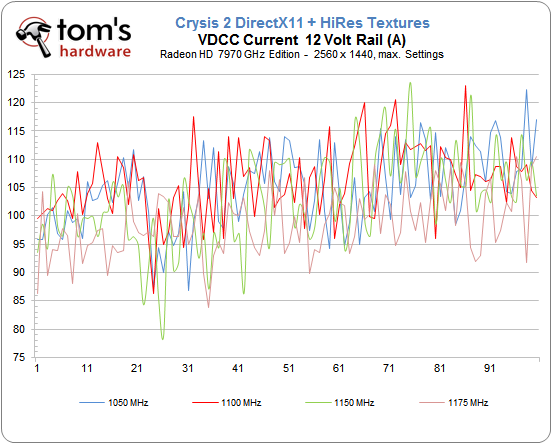

As we can see, increased power consumption corresponds to a reduction in GPU voltage. We would have expected the opposite.

At 1175 MHz, we began to see minor visual artifacts (missing texture transparencies and polygon errors), which we were able to remedy by increasing VDDC. Apparently, PowerTune steps in very early to reduce voltage, well before power consumption actually reaches a critical level.

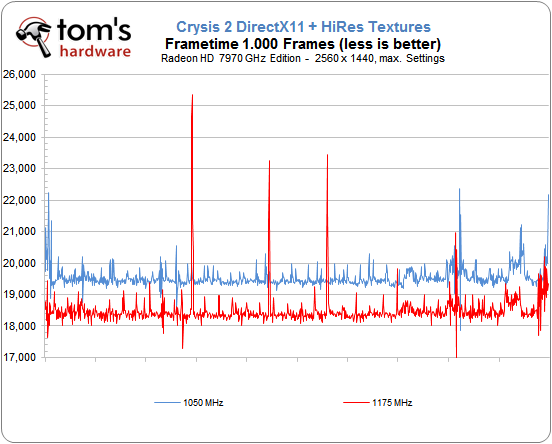

The results of this intervention are quite obvious, manifesting as sporadically dropped frames, texture errors, absent light sources, and other missing effects. Strangely, although the average and maximum frame rates continued to rise, the minimum frame rates dropped with each clock rate increase from 1150 MHz onward.

Our Radeon HD 7970 GHz Edition cards aren’t any more capable than an overclocked original Radeon HD 7970. In our opinion, you get the best results at 1150 MHz using AMD’s own tools. Push any harder and you’ll find the card dropping frames (even if its average frame rates seem to suggest better performance).


Darkerson: My only complaint with the "new" card is the price. Otherwise it looks like a nice card. It's better than the original version, at any rate, not that the original was a bad card to begin with.

wasabiman321: Great, I just ordered a GTX 670 FTW... Grrr. I hope performance gets better with Nvidia's drivers too :D

mayankleoboy1: Nice show, AMD!

With WinZip that does not use the GPU, VCE that slows down video encoding, and a card that gives lower minimum FPS... EPIC FAIL.

Or, before releasing your products, try to ensure software compatibility.

vmem, quoting jrharbort: "To me, increasing the memory speed was a pointless move. Nvidia realized that all of the bandwidth provided by GDDR5 and a 384-bit bus is almost never utilized. The drop back to a 256-bit bus on their GTX 680 allowed them to cut cost and power usage without causing a drop in performance. High-end AMD cards see the most improvement from an increased core clock. Memory... not so much. Then again, Nvidia pretty much cheated on this generation as well. Cutting out nearly 80% of the GPGPU logic, something Nvidia had been trying to market for YEARS, allowed them to drop production costs and power usage even further. AMD now has the lead in this market, but at the cost of higher power consumption and production cost. This quick fix by AMD will work for now, but they obviously need to rethink their future designs a bit."

The prospect of them rethinking their future designs scares me... Nvidia has started a HORRIBLE trend in the business that I hope to dear god AMD does not follow suit. True, Nvidia is able to produce more gaming performance for less, but this is pushing anyone who wants GPU compute toward an overpriced professional card. Now, before you say "well, if you're making a living out of it, fork out the cash and go Quadro", let me remind you that a lot of innovators in various fields (especially the academic sciences) use GPU compute to make progress, which ultimately brings us better tech AND new directions in tech development. And I, for one, know a lot of government-funded labs that can't afford to buy a stack of Quadro cards.

DataGrave, quoting vmem: "Nvidia has started a HORRIBLE trend in the business that I hope to dear god AMD does not follow suit."

100% agreed.

And for the gamers: take a look at the new UT4 engine! Without excellent GPGPU performance, this will be a disaster for any graphics card. See you, Nvidia.

cangelini, quoting mayankleoboy1: "Thanks for putting my name in the review. Now if only you could bold it ;-)"

Excellent tip. Told you I'd look into it!