AMD Radeon HD 7970 GHz Edition Review: Give Me Back That Crown!

PowerTune With Boost: Is The Accelerator Stuck?

With a basic understanding of what PowerTune with Boost is, we wanted to explore its behavior in more depth.

According to AMD, the Radeon HD 7970 GHz Edition’s base clock is no longer end-user editable. It’s stuck at 1 GHz. When you move the slider in the Catalyst Control Center’s Overdrive tab, you’re actually moving what the company calls a boost state. And because the boost state is accompanied by a higher voltage, overclocking potential should be higher than it would be if you were modifying the base clock. From there, you’re supposed to be able to push Overdrive’s power control slider up even further to keep the card running at its higher P-state for longer without bouncing off of its protection circuitry and back to 1 GHz.

So, in theory, AMD is introducing an extra P-state, allowing the card to either operate at its base clock or a higher frequency. As long as the card stays within its TDP, it’s able to utilize the high clock, which can be adjusted through the Catalyst Control Center. If TDP is exceeded, the clock reverts to 1 GHz. The idea that AMD’s graphics hardware runs at one speed, selected for its applicability to real-world games, but then drops to another speed if some synthetic workload pushes it over the edge is not new at all. The existing Radeon HD 7970 could already do that. Getting more voltage to help augment a higher boost frequency would be a fresh concept, though.
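To make the intended behavior concrete, here is a minimal Python sketch of the two-state logic as described above. It is only an illustration of the idea, not AMD's actual PowerTune algorithm: the function name `select_clock`, the 250 W limit, and the power samples are all hypothetical placeholders, while the 1000 MHz and 1050 MHz clocks come from the text.

```python
BASE_CLOCK_MHZ = 1000   # fixed base clock, per the article
BOOST_CLOCK_MHZ = 1050  # default boost clock, per the article


def select_clock(estimated_power_w, power_limit_w, boost_mhz=BOOST_CLOCK_MHZ):
    """Pick the boost clock while within the power limit, else the base clock.

    This mirrors the behavior described in the text only; the real
    firmware logic is not public and is certainly more involved.
    """
    if estimated_power_w <= power_limit_w:
        return boost_mhz
    return BASE_CLOCK_MHZ


# Hypothetical power estimates in watts and a hypothetical limit.
samples_w = [180.0, 240.0, 260.0, 230.0]
limit_w = 250.0

clocks = [select_clock(p, limit_w) for p in samples_w]
print(clocks)  # [1050, 1050, 1000, 1050]
```

In this sketch, only the third sample exceeds the limit, so only that one falls back to 1 GHz. Raising `boost_mhz` changes the clock used under the limit, but the fallback to the base clock should still happen once the limit is crossed.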

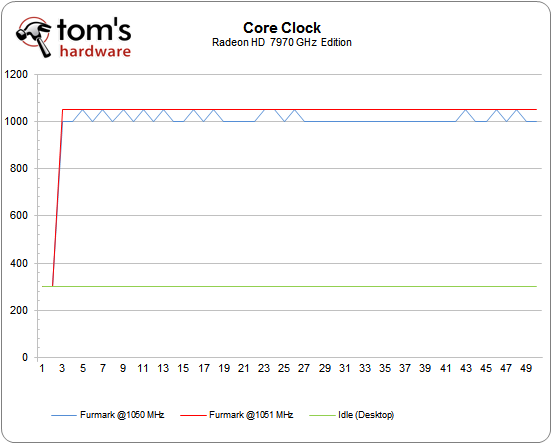

Per AMD’s suggestion, we started bumping up the clock rate slider in Overdrive, hoping to see a higher voltage that’d help us hold onto a correspondingly higher overclock. What we saw instead was that altering the boost frequency by even 1 MHz up or down prevented the card from dynamically switching back to 1 GHz at all. Instead, the GPU remained at its dialed-in boost speed, temps kept going up, and we shut ‘er down to prevent a more serious problem.

We reproduced this behavior on two cards in two different countries running on four different platforms and multiple installations of Windows. Interestingly, we didn’t even need to adjust the power slider in Overdrive to maintain our overclocked boost state. We simply set a higher frequency, fired up FurMark, and watched the temp crest 90 °C in under a minute.

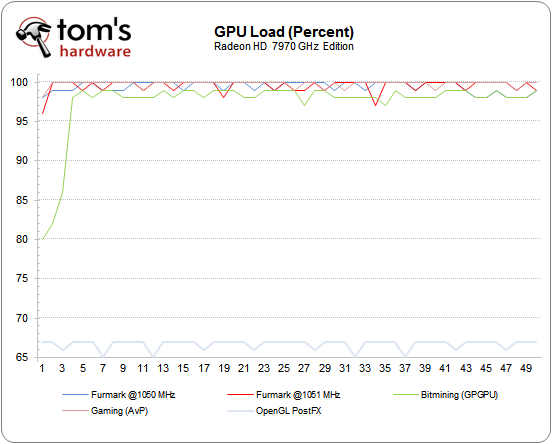

Our interest piqued, we decided to follow up by testing the card’s load in a number of scenarios. These included FurMark with boost operating as it should (between 1050 and 1000 MHz), as well as completely pegged at 1051 MHz. Bitmining serves as our compute workload, while the Aliens vs. Predator demo represents gaming. As you can see, several tests remained at full load with the card running at its maximum clock rate.

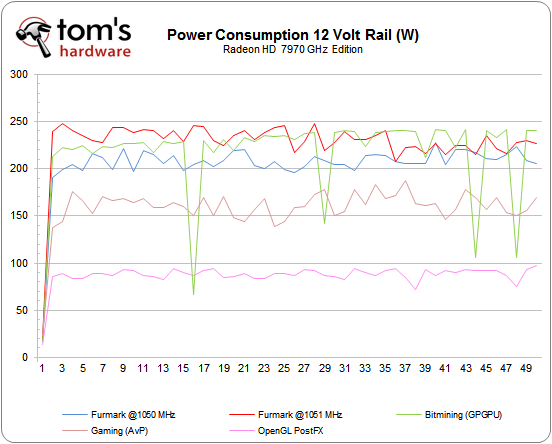

So, what are we looking at here? A protection mechanism that only works at its default settings, or a power-limiting feature asleep on the job? In order to answer our questions, we’ll look at power draw on the 12 V rail during each load scenario.


The Bitmining client and FurMark, both running at a constant 1051 MHz, pretty much max out the typical board power rating set forth by AMD. When the clock-limiting feature works properly, though, FurMark’s consumption drops notably. AvP and our OpenCL-based test are both able to run at full speed without any need to jump down to a lower frequency.

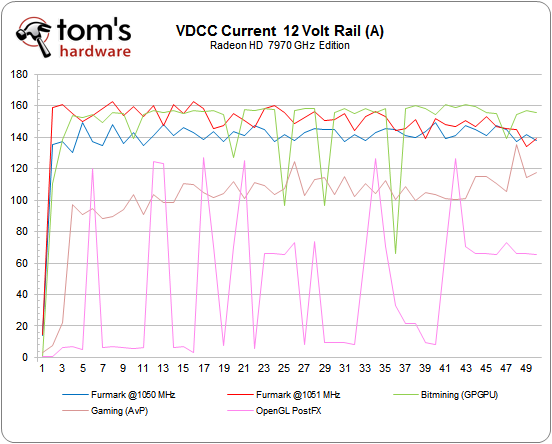

Now, if we take a look at the currents involved, we can see that at a partial load of less than 70 percent, the OpenCL test demonstrates some interesting spikes. Since the graph for the chip’s power draw, being a product of amperage and voltage, is a lot straighter, we can surmise that the GHz Edition card’s core voltage is constantly rising and falling.
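The reasoning here rests on the relationship P = V × I: if power stays roughly flat while current spikes, the voltage must be moving in the opposite direction. The short Python sketch below works through that arithmetic. The sample values are invented purely for illustration and are not our measurements, and the helper name `implied_voltage` is hypothetical.

```python
def implied_voltage(power_w, current_a):
    """Return V = P / I for each pair of power and current samples."""
    return [p / i for p, i in zip(power_w, current_a)]


# Hypothetical samples: flat power, fluctuating current.
power_w = [120.0, 120.0, 120.0, 120.0]
current_a = [100.0, 96.0, 104.0, 100.0]

volts = implied_voltage(power_w, current_a)
for i_a, v in zip(current_a, volts):
    # Higher current at the same power implies a lower voltage.
    print(f"{i_a:6.1f} A -> {v:.3f} V")
```

With power held constant, every rise in current corresponds to a dip in the implied voltage, which is the pattern that leads us to suspect a constantly changing core voltage.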

It looks like we end up with a “persistent boost state.” Does that mean we can get by without any throttling thanks to built-in headroom? As of now, we still don’t know why overclocking confounds PowerTune.

We also wondered why only applications like FurMark and OCCT were able to trigger the feature, and only when we left the boost clock at its default value of 1050 MHz. Pure compute-oriented workloads able to generate similar load levels didn’t trigger the same reaction.

Digging deeper still, we’re going back to re-test everything on the original Radeon HD 7970. After all, AMD promises that this new card offers better performance at the same power levels using the same GPU. That’d be great, if it pans out.


Darkerson My only complaint with the "new" card is the price. Otherwise it looks like a nice card. Better than the original version, at any rate, not that the original was a bad card to begin with.

wasabiman321 Great I just ordered a gtx 670 ftw... Grrr I hope performance gets better for nvidia drivers too :D

mayankleoboy1 nice show AMD !

with Winzip that does not use GPU, VCE that slows down video encoding and a card that gives lower min FPS..... EPIC FAIL.

or before releasing your products, try to ensure S/W compatibility.

vmem, quoting jrharbort: "To me, increasing the memory speed was a pointless move. Nvidia realized that all of the bandwidth provided by GDDR5 and a 384bit bus is almost never utilized. The drop back to a 256bit bus on their GTX 680 allowed them to cut cost and power usage without causing a drop in performance. High end AMD cards see the most improvement from an increased core clock. Memory... Not so much. Then again, Nvidia pretty much cheated on this generation as well. Cutting out nearly 80% of the GPGPU logic, something Nvidia had been trying to market for YEARS, allowed then to even further drop production costs and power usage. AMD now has the lead in this market, but at the cost of higher power consumption and production cost. This quick fix by AMD will work for now, but they obviously need to rethink their future designs a bit."

the issue is them rethinking their future designs scares me... Nvidia has started a HORRIBLE trend in the business that I hope to dear god AMD does not follow suite. True, Nvidia is able to produce more gaming performance for less, but this is pushing anyone who wants GPU compute to get an overpriced professional card. now before you say "well if you're making a living out of it, fork out the cash and go Quadro", let me remind you that a lot of innovators in various fields actually do use GPU compute to ultimately make progress (especially in academic sciences) to ultimately bring us better tech AND new directions in tech development... and I for one know a lot of government funded labs that can't afford to buy a stack of quadro cards

DataGrave, quoting vmem: "Nvidia has started a HORRIBLE trend in the business that I hope to dear god AMD does not follow suite."

100% acknowledge

And for the gamers: take a look at the new UT4 engine! Without excellent GPGPU performace this will be a disaster for each graphics card. See you, Nvidia.

cangelini, quoting mayankleoboy1: "Thanks for putting my name in teh review now if only you could bold it ;-)"

Excellent tip. Told you I'd look into it!