AMD Radeon HD 7970 GHz Edition Review: Give Me Back That Crown!

Benchmark Results: GPU Compute

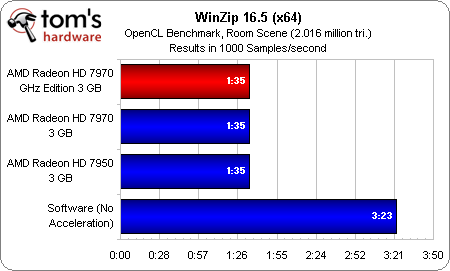

Last week, I got my first look at AMD’s Trinity-based APU. The story kicked off with x86-based benchmarks. The architecture’s Piledriver-based cores didn’t disappoint, but they won’t be Trinity’s biggest strength, either. Then we hit graphics—an AMD forte, no question. Finally, we dipped our toes into OpenCL with WinZip 16.5 and witnessed the potential of GPU acceleration in a productivity-oriented title.

Or so it seemed.

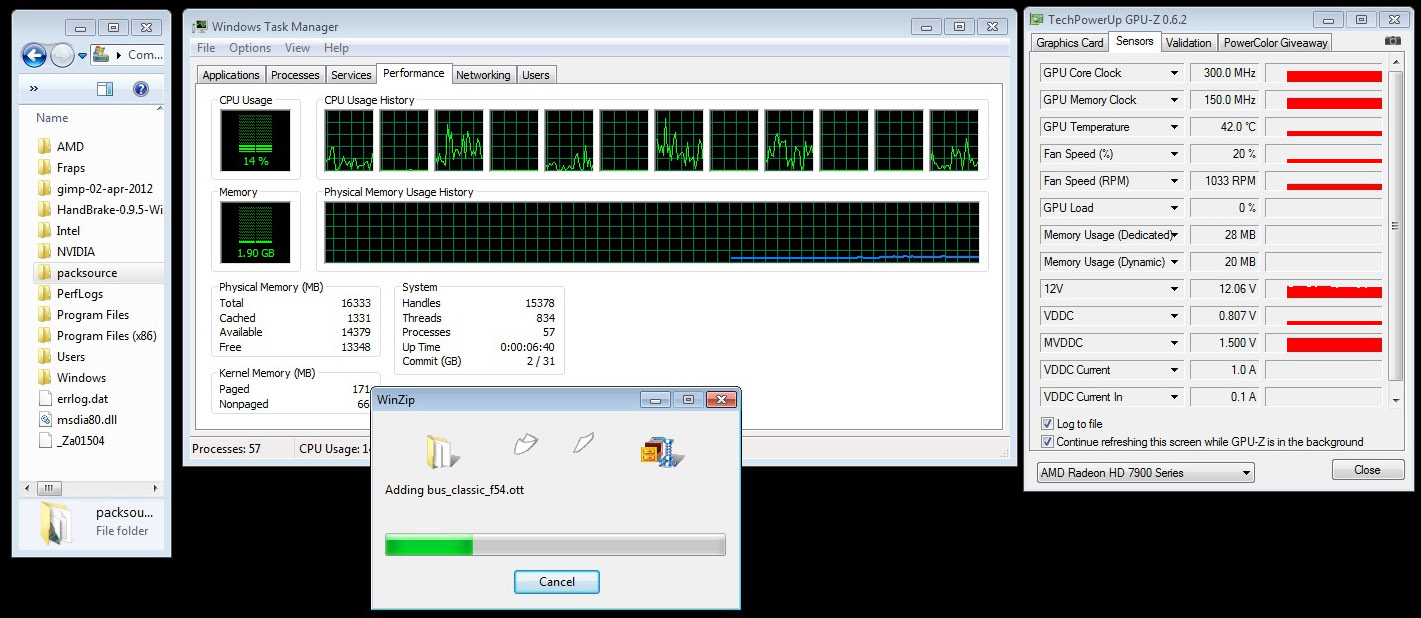

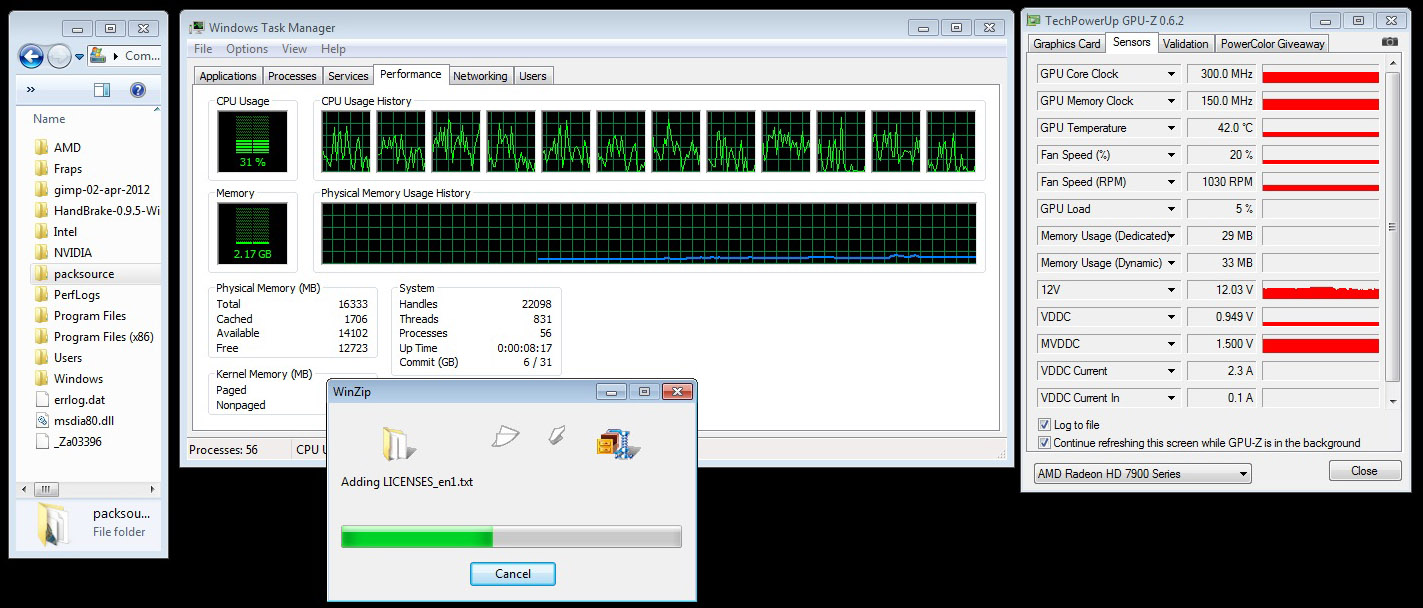

Reader mayankleoboy1 asked me to look into the resource utilization of WinZip 16.5, and it turns out that enabling OpenCL puts minimal load on a Radeon HD 7970. In the screenshots above, our Core i7-3960X sits at just 14% utilization with OpenCL disabled, with activity on six of its available threads. Turn the feature on, though, and much more of the host processor is put to work. While I managed to catch GPU-Z showing 5% utilization on the Radeon HD 7970, the card spent far more time at 0 and 1%.

That said, you can only turn OpenCL support on with an AMD graphics card, so it remains an exclusive feature for now. And it is effective, cutting our completion time by more than half. It just doesn't seem like a very "GPU-accelerated" capability.

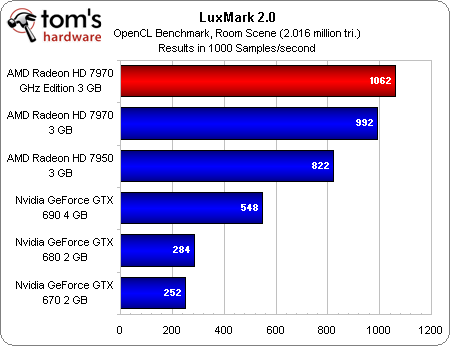

The GHz Edition card improves on the Radeon HD 7970’s already-strong result in LuxMark 2.0, which is built around the LuxRender ray tracing engine. The new board nearly doubles the performance of Nvidia’s dual-GPU GeForce GTX 690. Although WinZip doesn’t seem to owe its speed-up to AMD graphics, LuxMark certainly does.

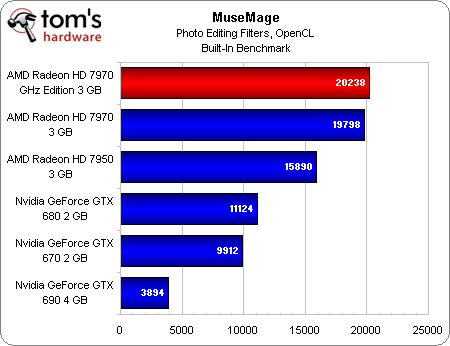

So does MuseMage, a photo editing application with a number of OpenCL-accelerated filters. With scaling that corresponds to shader processors, clock rate, and, clearly, architecture, the new GHz Edition board finishes in first place.
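The clock-rate part of that scaling can be illustrated with the usual back-of-the-envelope peak-throughput formula: shaders × 2 FLOPs per clock (one multiply-add) × clock rate. This is a minimal sketch, assuming 2048 shaders on both boards, the original Radeon HD 7970's 925 MHz reference clock, and the GHz Edition's 1050 MHz boost clock. Peak figures say nothing about architectural efficiency, which is why they can't explain GCN's showing against Kepler on their own.

```python
def peak_fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Theoretical single-precision peak in TFLOPS.

    Assumes every shader retires one multiply-add (counted as 2 FLOPs) per clock.
    """
    flops_per_second = shaders * 2 * clock_mhz * 1e6
    return flops_per_second / 1e12


# Assumed figures: 2048 shaders on both boards, 925 MHz (HD 7970 reference clock)
# versus 1050 MHz (GHz Edition boost clock).
hd7970 = peak_fp32_tflops(2048, 925)
hd7970_ghz = peak_fp32_tflops(2048, 1050)

print(f"HD 7970:             {hd7970:.2f} TFLOPS")      # 3.79
print(f"HD 7970 GHz Edition: {hd7970_ghz:.2f} TFLOPS")  # 4.30
print(f"Clock-only uplift:   {hd7970_ghz / hd7970 - 1:.1%}")  # 13.5%
```

Because both boards share the same shader count in this sketch, the entire theoretical difference comes from clock rate.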

There were a number of additional tests we wanted to run, including Photoshop CS6, a beta build of HandBrake, and a beta build of GIMP. However, retesting all of our cards in games with the latest drivers took precedence. Still, the expanding list of OpenCL-optimized titles makes it clear that, finally, all of the talk about heterogeneous computing is giving way to real software we can test. More important than that self-serving benefit, apps that people actually use every day are starting to take advantage of GPU compute.


Consequently, we’ll be including a lot more compute-oriented testing in our graphics card reviews moving forward. Depending on how Nvidia responds, this could remain a real bastion for AMD, which is already betting much of its success on the Heterogeneous System Architecture. Nvidia, meanwhile, largely ignores client compute workloads in favor of its Quadro/Tesla business.

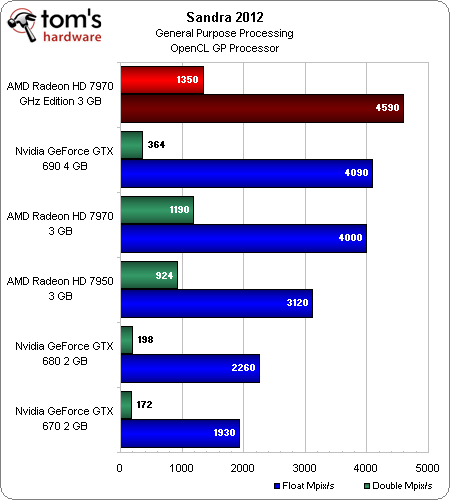

Sandra helps demonstrate how far the potential of AMD’s GCN outstrips Kepler as it is implemented in GK104, and that advantage grows as developers get better at identifying the parts of their workloads that benefit from a GPU’s parallel architecture and at maximizing the performance they extract from the hardware. With GK110 not expected on the desktop until 2013, these standings aren’t likely to change this year.

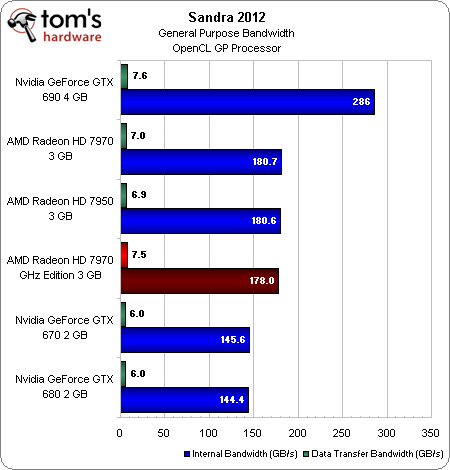

Also interesting: Nvidia's latest drivers still don't enable PCI Express 3.0 on Sandy Bridge-E platforms, leaving its cards with less interface bandwidth than AMD's achieve. However, you can now force PCIe 3.0 on manually with a patch from the company.
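To put that interface gap in perspective, theoretical PCI Express bandwidth follows from the per-lane transfer rate, the line encoding, and the lane count. This is a minimal sketch using the published PCIe 2.0 (5 GT/s, 8b/10b encoding) and PCIe 3.0 (8 GT/s, 128b/130b encoding) figures for an x16 slot. It ignores packet and protocol overhead, so measured results such as Sandra's will come in lower.

```python
# Per-lane transfer rate (GT/s) and line-encoding efficiency for each PCIe generation.
GENERATIONS = {
    "2.0": (5.0, 8 / 10),     # 8b/10b: 8 payload bits per 10 transferred
    "3.0": (8.0, 128 / 130),  # 128b/130b: 128 payload bits per 130 transferred
}


def pcie_bandwidth_gbs(generation: str, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth in GB/s, ignoring packet/protocol overhead."""
    rate_gts, efficiency = GENERATIONS[generation]
    # Each transfer moves one bit per lane; divide by 8 to convert bits to bytes.
    return rate_gts * efficiency * lanes / 8


for gen in ("2.0", "3.0"):
    print(f"PCIe {gen} x16: {pcie_bandwidth_gbs(gen):.2f} GB/s")
# PCIe 2.0 x16: 8.00 GB/s
# PCIe 3.0 x16: 15.75 GB/s
```

The jump from 8b/10b to 128b/130b encoding is why PCIe 3.0 nearly doubles usable bandwidth even though the raw transfer rate rises only from 5 to 8 GT/s.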


Darkerson My only complaint with the "new" card is the price. Otherwise it looks like a nice card. Better than the original version, at any rate, not that the original was a bad card to begin with.

wasabiman321 Great, I just ordered a GTX 670 FTW... Grrr. I hope performance gets better with Nvidia's drivers too :D

mayankleoboy1 Nice show, AMD!

With WinZip that doesn't use the GPU, VCE that slows down video encoding, and a card that gives lower minimum FPS... EPIC FAIL.

Or, before releasing your products, try to ensure software compatibility.

vmem jrharbort wrote: "To me, increasing the memory speed was a pointless move. Nvidia realized that all of the bandwidth provided by GDDR5 and a 384-bit bus is almost never utilized. The drop back to a 256-bit bus on their GTX 680 allowed them to cut cost and power usage without causing a drop in performance. High-end AMD cards see the most improvement from an increased core clock. Memory... not so much. Then again, Nvidia pretty much cheated on this generation as well. Cutting out nearly 80% of the GPGPU logic, something Nvidia had been trying to market for YEARS, allowed them to drop production costs and power usage even further. AMD now has the lead in this market, but at the cost of higher power consumption and production cost. This quick fix by AMD will work for now, but they obviously need to rethink their future designs a bit."

The idea of them rethinking their future designs is what scares me... Nvidia has started a HORRIBLE trend in the business that I hope to dear god AMD does not follow suit. True, Nvidia is able to produce more gaming performance for less, but this is pushing anyone who wants GPU compute to buy an overpriced professional card. Now, before you say "well, if you're making a living out of it, fork out the cash and go Quadro", let me remind you that a lot of innovators in various fields actually use GPU compute to make progress (especially in the academic sciences) and ultimately bring us better tech AND new directions in tech development... and I, for one, know a lot of government-funded labs that can't afford to buy a stack of Quadro cards.

DataGrave Quote: "Nvidia has started a HORRIBLE trend in the business that I hope to dear god AMD does not follow suit."

100% agree.

And for the gamers: take a look at the new UT4 engine! Without excellent GPGPU performance, this will be a disaster for any graphics card. See you, Nvidia.

cangelini mayankleoboy1 wrote: "Thanks for putting my name in the review. Now if only you could bold it ;-)"

Excellent tip. Told you I'd look into it!