AI research team claims to reproduce DeepSeek core technologies for $30 — relatively small R1-Zero model has remarkable problem-solving abilities

It's cheap and powerful.

An AI research team from the University of California, Berkeley, led by Ph.D. candidate Jiayi Pan, claims to have reproduced DeepSeek R1-Zero’s core technologies for just $30, showing how advanced models could be implemented affordably. According to Pan’s post on Nitter, the team reproduced DeepSeek R1-Zero’s approach on the Countdown game, and its small language model, with only 3 billion parameters, developed self-verification and search abilities through reinforcement learning.

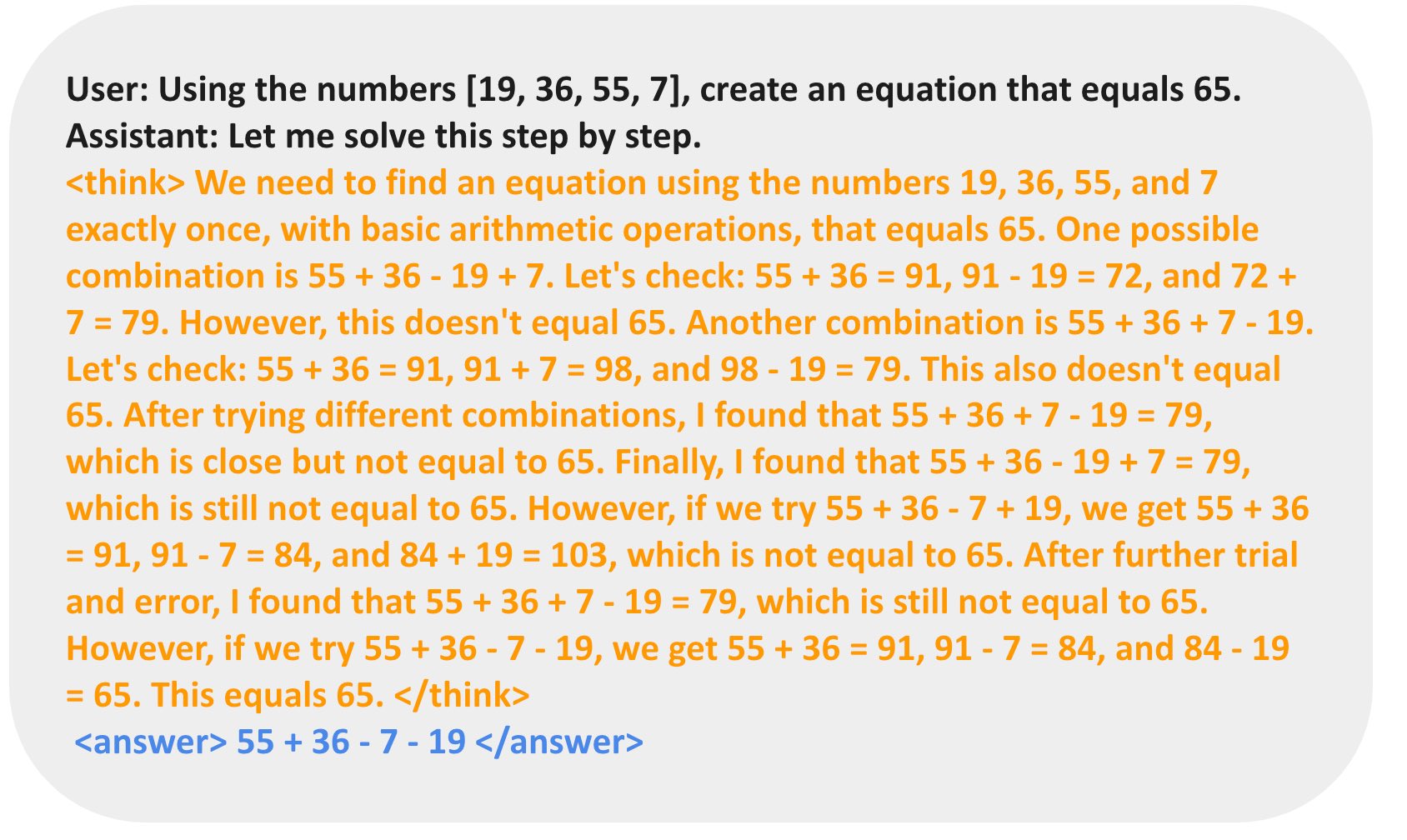

Pan says they started with a base language model, a prompt, and a ground-truth reward. From there, the team ran reinforcement learning on the Countdown game. The game is based on the British game show of the same name, in which one segment tasks players with reaching a randomly chosen target number by combining a set of given numbers using basic arithmetic.
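To make the idea of a "ground-truth reward" concrete, here is a minimal Python sketch of a binary reward for Countdown answers. It assumes the model emits a plain arithmetic expression and that each given number may be used at most once, as on the show; the function name `countdown_reward` and the all-or-nothing scoring are hypothetical, and the Berkeley team's actual reward (which may also score output format) could differ.

```python
import ast
import operator
from collections import Counter

# Map AST operator nodes to the four basic arithmetic operations.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node: ast.AST) -> float:
    """Safely evaluate a tree built only from integer literals and + - * /."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    raise ValueError("disallowed expression")


def _numbers_used(tree: ast.AST) -> list[int]:
    """Collect every literal that appears in the expression."""
    return [n.value for n in ast.walk(tree) if isinstance(n, ast.Constant)]


def countdown_reward(answer: str, numbers: list[int], target: int) -> float:
    """Return 1.0 if `answer` hits `target` using each given number at most once, else 0.0."""
    try:
        tree = ast.parse(answer, mode="eval")
        value = _evaluate(tree)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return 0.0  # unparsable or illegal answers earn nothing
    # Multiset check: no literal may appear more often than it was given.
    if Counter(_numbers_used(tree)) - Counter(numbers):
        return 0.0
    return 1.0 if abs(value - target) < 1e-9 else 0.0
```

In this sketch only the final expression is scored, so nothing rewards the intermediate reasoning directly; parsing with `ast` instead of calling `eval` keeps arbitrary code in a model's answer from running.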

The team said their model started with dummy outputs but eventually developed tactics like revision and search to find the correct answer. One example showed the model proposing an answer, verifying whether it was right, and revising it through several iterations until it found the correct solution.
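The "search" the model learned happens in its generated text, not in code. For contrast, the hypothetical `solve_countdown` below is a classical exhaustive search over the same puzzle space: it repeatedly combines two available numbers with +, -, *, or exact division, checks whether the target has been reached, and backtracks otherwise. It is only an illustration (and a handy way to check whether a puzzle is solvable), not the team's method; allowing only positive differences and exact division is an assumption modeled on the show's rules.

```python
from itertools import combinations
from typing import Optional


def solve_countdown(numbers: list[int], target: int) -> Optional[str]:
    """Exhaustively search for an expression that reaches `target`, or return None."""

    def search(items: list[tuple[int, str]]) -> Optional[str]:
        # Verify: does any value we already have equal the target?
        for value, expr in items:
            if value == target:
                return expr
        # Propose: combine every pair of remaining items in every allowed way.
        for i, j in combinations(range(len(items)), 2):
            (a, ea), (b, eb) = items[i], items[j]
            if a < b:  # order the pair so subtraction stays positive
                (a, ea), (b, eb) = (b, eb), (a, ea)
            rest = [item for k, item in enumerate(items) if k not in (i, j)]
            candidates = [(a + b, f"({ea} + {eb})"), (a * b, f"({ea} * {eb})")]
            if a > b:
                candidates.append((a - b, f"({ea} - {eb})"))
            if b != 0 and a % b == 0:
                candidates.append((a // b, f"({ea} / {eb})"))
            # Revise: recurse on each candidate and backtrack if it leads nowhere.
            for value, expr in candidates:
                found = search(rest + [(value, expr)])
                if found is not None:
                    return found
        return None

    return search([(n, str(n)) for n in numbers])
```

For example, `solve_countdown([100, 4, 3, 7], 288)` returns an expression string that evaluates to 288; which expression it finds depends on the search order.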

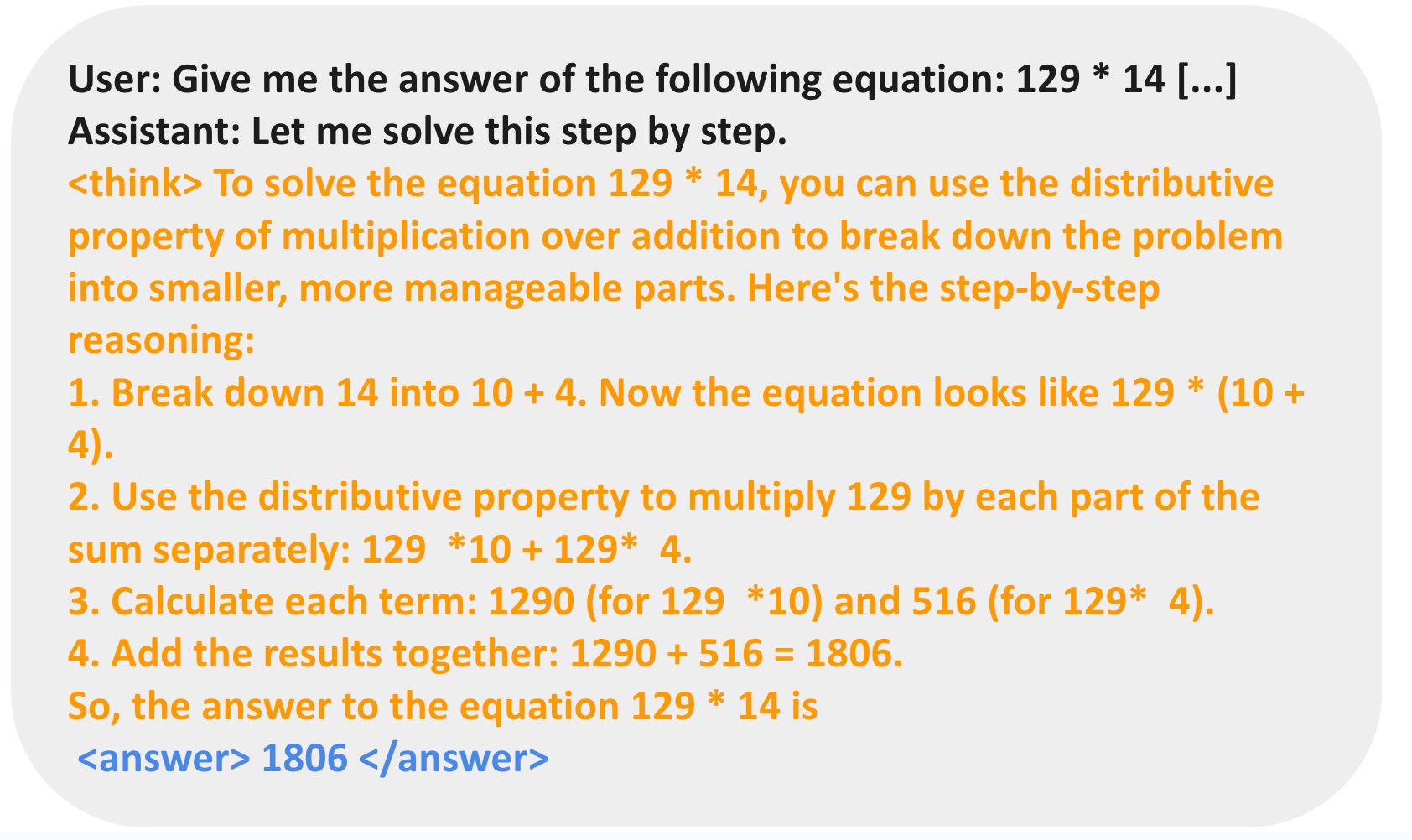

Aside from Countdown, Pan also tested the model on multiplication, where it used a different technique to solve the problem. It broke the problem down using the distributive property of multiplication (much as some of us would when multiplying large numbers mentally) and then solved it step by step.
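The article does not reproduce the model's exact steps, so the following Python sketch is only a hypothetical illustration of the distributive-property strategy: split one factor by place value (37 × 24 = 37 × 20 + 37 × 4), multiply each part, then add the partial products. The function name `distributive_multiply` and the place-value split are assumptions.

```python
def distributive_multiply(a: int, b: int) -> tuple[int, list[str]]:
    """Compute a * b by splitting b into place-value parts: a*(x + y) = a*x + a*y."""
    if b < 0:
        raise ValueError("this sketch assumes a non-negative multiplier")
    digits = str(b)
    steps, total = [], 0
    for position, digit in enumerate(digits):
        part = int(digit) * 10 ** (len(digits) - position - 1)  # e.g. 24 -> 20, then 4
        if part == 0:
            continue  # a zero digit adds nothing
        partial = a * part
        steps.append(f"{a} * {part} = {partial}")
        total += partial
    steps.append(f"sum = {total}")
    return total, steps


total, steps = distributive_multiply(37, 24)
print("\n".join(steps))
# 37 * 20 = 740
# 37 * 4 = 148
# sum = 888
```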

The Berkeley team experimented with base models of different sizes for their DeepSeek R1-Zero reproduction. They started with one that had only 500 million parameters, which would simply guess a possible solution and then stop, whether or not it had found the correct answer. However, when they used a base model with 1.5 billion parameters, they started getting results where the model learned different techniques to achieve higher scores. Larger models (3 to 7 billion parameters) found the correct answer in fewer steps.

But what’s more impressive is that the Berkeley team claims it cost only around $30 to accomplish this. For comparison, OpenAI’s o1 API currently costs $15 per million input tokens, more than 27 times pricier than DeepSeek-R1’s $0.55 per million input tokens. Pan says the project aims to make emerging research on reinforcement learning scaling more accessible, especially given its low cost.
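A quick Python check of the price comparison, using only the two per-million-token input prices quoted in the article; the 10-million-token workload is a hypothetical example, and output-token pricing is ignored.

```python
# Per-million-input-token API prices quoted in the article (USD).
O1_PRICE_PER_M = 15.00
R1_PRICE_PER_M = 0.55

ratio = O1_PRICE_PER_M / R1_PRICE_PER_M
print(f"o1 costs {ratio:.2f}x as much as DeepSeek-R1 per input token")  # 27.27x

# Hypothetical workload, for illustration only: 10 million input tokens.
million_tokens = 10
print(f"o1: ${O1_PRICE_PER_M * million_tokens:.2f}")  # $150.00
print(f"DeepSeek-R1: ${R1_PRICE_PER_M * million_tokens:.2f}")  # $5.50
```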

However, machine learning expert Nathan Lambert disputes DeepSeek’s actual cost, saying that its reported $5 million cost for training its 671-billion-parameter LLM does not show the full picture. Other costs, such as research personnel, infrastructure, and electricity, don’t seem to be included in that figure, and Lambert estimates DeepSeek AI’s annual operating costs to be between $500 million and more than $1 billion. Nevertheless, this is still an achievement, especially as competing American AI companies are spending $10 billion annually on their AI efforts.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Jowi Morales is a tech enthusiast with years of experience working in the industry. He has been writing for several tech publications since 2021, with a focus on tech hardware and consumer electronics.

-

DerKeyser Well yes, but it’s not that it has any impact on the financial industry. Even though nVidia and others took a MASSIVE hit because of the possible savings this could lead to, the stock didn’t even drop to Oct 2024 levels. It will likely be back in a couple of days, and back to doubling every year soon, just like stocks in general have been on an exponential growth curve for the last twenty years because new money is now being generated at an unprecedented level, and it needs to be reinvested. The western world is turning into an oligarchy with a few owning everything.

Stomx The AI bubble with its 5-10x inflated hardware prices will crash some day. AI itself, of course, will stay forever.

phead128

DerKeyser said: "Even though nVidia and others took a MASSIVE hit because of the possible savings this could lead to, the stock didn’t even drop to Oct 2024 levels."

This is a very generous interpretation of the "biggest one-day loss in U.S. history". What are these arbitrary Oct 2024 levels? Intel is back at 1997 levels, yet you have fanboys thinking it can be resurrected.

LibertyWell

DerKeyser said: "Well yes, but it’s not that it has any impact on the financial industry. Even though nVidia and others took a MASSIVE hit because of the possible savings this could lead to, the stock didn’t even drop to Oct 2024 levels. It will likely be back in a couple of days, and back to doubling every year soon, just like stocks in general have been on an exponential growth curve for the last twenty years because new money is now being generated at an unprecedented level, and it needs to be reinvested. The western world is turning into an oligarchy with a few owning everything."

That's the whole idea: all wealth and property consolidated in the top 0.01% with a grand return to feudalism. If you want to know just how far advanced this wealth-siphoning process already is, this BlackRock video is essential viewing:

https://old.bitchute.com/video/ei6QD8ZPl6DU

All 401(k) investments in the hands of BlackRock, Vanguard, & State Street are being wielded heavily against you...