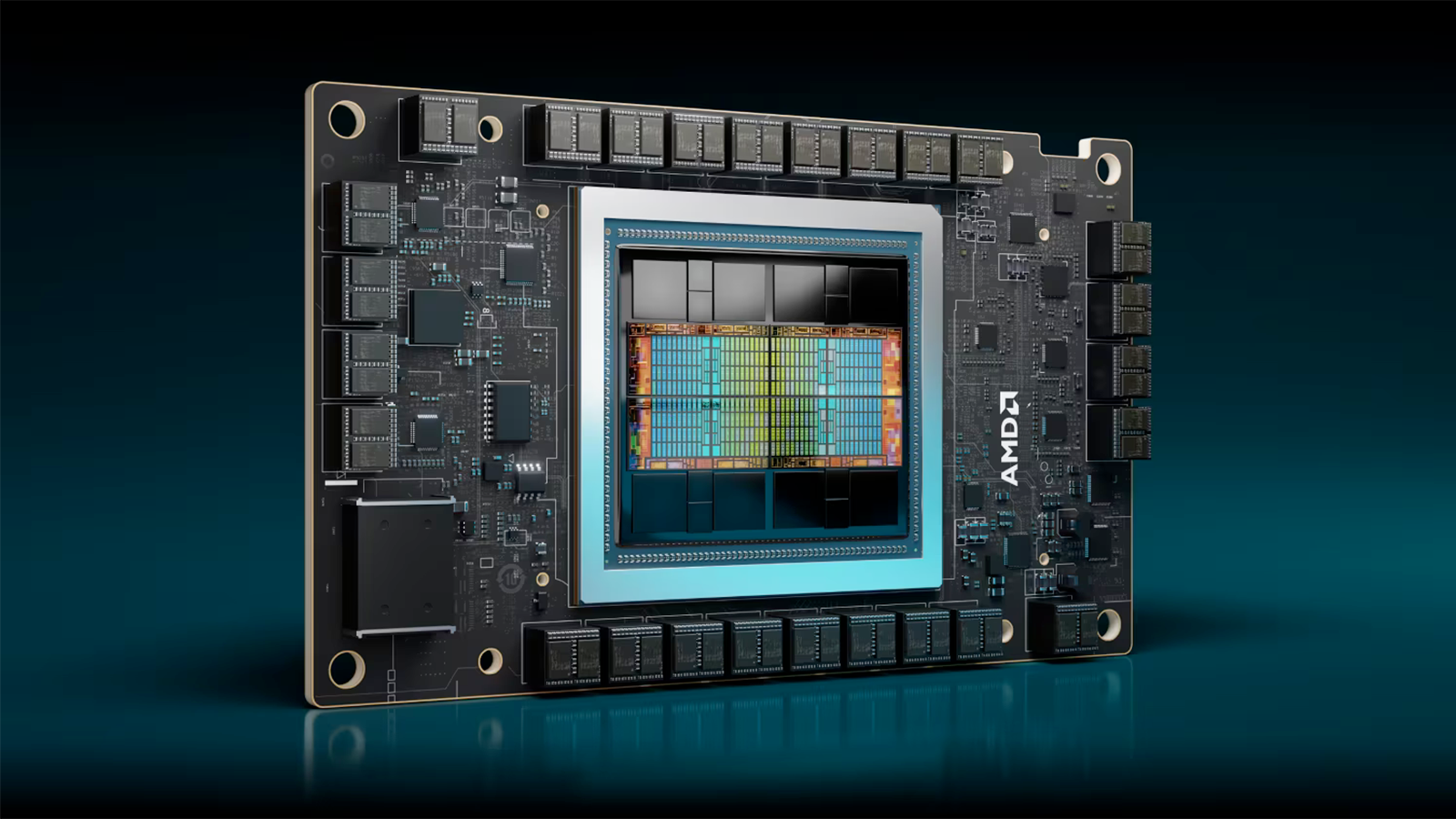

Lenovo says demand for AMD's Instinct MI300 is record high — plans to offer AI solutions from all important hardware vendors

Nobody likes Nvidia's dominance, it seems.

Demand for artificial intelligence servers will set records this year for hardware procurement, and while a significant portion of those machines will be based on Nvidia's processors, demand for systems running other hardware is also very high, according to Lenovo. Demand for AMD Instinct MI300-series-based machines is apparently setting records, at least at Lenovo.

"I think you could say in a very generic sense, demand is as high as we have ever seen for the product," said Ryan McCurdy, president of Lenovo North America, in an interview with CRN. "Then it comes down to getting the infrastructure launched, getting testing done, and getting workloads validated, and all that work is underway."

Okay, if we're being cynical, of course demand for this new product is as high as it's ever been. But perhaps that's not precisely what was meant. McCurdy could simply mean that demand for AMD server solutions equipped with CDNA accelerators is at an all-time high, or that demand for the new MI300-based systems is higher than anything Lenovo has previously seen.

For years, Nvidia's GPUs were largely unchallenged in AI workloads. To some degree, that's because Nvidia's software stack was ahead of everyone else's, but also because its hardware was superior. But with its Instinct MI300-series, AMD seems to finally have a chance thanks to some major improvements in both hardware and software.

Nvidia of course claims its GPUs are still faster than everything else, but with the right combination of price and performance, it looks like AMD and Intel can win some lucrative contracts. That's particularly true among enterprise clients that need to run inference workloads. Some analysts estimate that enterprise inference is a business worth tens of billions of dollars, so there's a lot to capitalize on.

Nvidia believes that the superior scalability of its Hopper-based systems, as well as the upcoming Blackwell-based machines, will enable it to retain its lead in the AI server market for at least another generation. But while Nvidia will probably keep commanding the very top of the market, Lenovo and other companies want other AI processor suppliers to thrive as well, so they're looking forward to working with AMD and Intel.

Lenovo does not want to limit itself, so it plans to offer customers the widest possible selection of AI hardware. This could strain its relationship with Nvidia in the short term, but in the long term there will be serious competition in the AI hardware market, and that's good for everyone.

"I think those partnerships will show itself in future launches with products that we are bringing out across our portfolio: Nvidia for sure, AMD for sure, Intel for sure, and Microsoft," McCurdy told CRN.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


DavidLejdar In particular AMD's MI300X doesn't seem to compare badly to Nvidia's H100 SXM5. The MI300X has 192 GB of VRAM (H100 has 80), and memory bus is 8192 bits (H100 has 5120), and some additional technical numbers. And e.g. for cinema, rendering large scenes seems to be working better with the MI300X (according to Blender GPU benchmarks).

For other workloads, the H100 may perhaps be doing better. But there still is some market left for AMD.

(Disclaimer: I hold some AMD stock. So, I may be biased. But I do hold AMD stock, because of technical details as mentioned, where AMD is not really lightyears behind Nvidia, and giving Intel a run for their money, in some segments.)

Steve Nord_ 200 cities' data scientists with other fabless AI hardware and 10 junior colleges each to seed some improvement in #include and libraries but 'only' holding stock via investment groupings: Oh yeah, nVidia dominance can be ok sometimes. 7 other cities with nVidia powered self-driving ferries: Pin compatibility would be great.

cusbrar2

It's worth noting that the B200 and MI300X have almost identical AI performance watt for watt. And since the MI300X costs half as much, you can buy twice as many... which means you can also run FP64 loads 4x faster for the same cost and less power than the B200....

I mean anyone looking to buy these should definitely run the numbers on what their needs are and how much value they can get out of these systems...

Also ZLUDA has pretty much proven there is nothing wrong with the HIP runtime either... since it can run CUDA applications on top of ROCm at expected native speeds.