OCZ Technology Reveals Indilinx Everest 2 Controller

OCZ Technology reveals its second generation Indilinx Everest 2 controller at CES 2012

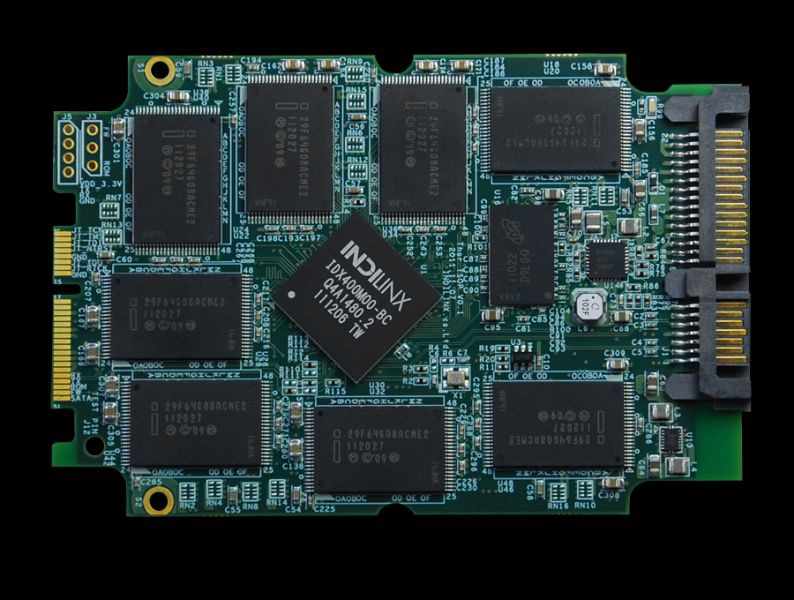

Everest 2 will be the third generation of Indilinx controller that OCZ has used. The company first used an Indilinx controller, the Barefoot, in its original OCZ Vertex drive. In 2011, OCZ purchased Indilinx and introduced its Octane series SSD, based on the new Everest controller with a SATA III interface. In 2012, OCZ is set to launch the third-generation Indilinx controller (the second under OCZ ownership), which is designed specifically for I/O-intensive workloads in a wide range of applications. The new controller (IDX400M00-BC, versus IDX300M00-BC for the first Everest) is rated for roughly 550MB/s sequential reads, 500MB/s sequential writes and 90,000 random write IOPS with the newest 2xnm flash. The Everest 2 platform supports up to 2TB of capacity in a compact 2.5-inch form factor and is expected to be released in Q2 2012. It could be the basis of OCZ's next Vertex performance line (a.k.a. Vertex 4) and the final sign of a move away from SandForce-based controllers.


Pyree Sounds promising (550/500 MBps read/write and 90000 IOPS). Looks like what I will be getting to replace my Vertex 2.

joytech22 (quoting evang: "can't wait to see how much that 2tb costs") You and me both.

PLEASE be under $2000! HAHA Just kidding, one can dream though..

jrharbort That is a MASSIVE performance increase over their second generation controller. Looks like SandForce will finally get a proper run for its money.

alidan If anyone knows the die size, or how big a gigabyte is at whatever nanometer, please tell me. I would love to do the math and figure out how much they mark up the price on SSDs, because right now I can't see them gouging us too much, but then again I don't know how big these chips are.

And by chips I don't mean the plastic case around the chips, I mean the actual chips themselves, the silicon.

about 21 TB per wafer

wafer costs $50,000

50,000 divided by 21

it comes to about $2380 per terabyte

if I knew the die size I could tell you exactly how much it would cost, the materials alone.
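The division above can be checked with a few lines of Python. This is only a sketch: both inputs are the commenter's own estimates for wafer cost and capacity per wafer, not verified industry figures, and the function names are made up for illustration.

```python
# Back-of-envelope silicon cost, using the figures quoted in the comment above.
# Both inputs are the commenter's estimates, not verified industry numbers.

WAFER_COST_USD = 50_000   # commenter's figure for one processed wafer
TB_PER_WAFER = 21         # commenter's figure for flash capacity per wafer
GB_PER_TB = 1000          # decimal units, as drive vendors use


def cost_per_tb(wafer_cost_usd: float, tb_per_wafer: float) -> float:
    """Cost divided by capacity: dollars of wafer per terabyte of flash."""
    return wafer_cost_usd / tb_per_wafer


def cost_per_gb(wafer_cost_usd: float, tb_per_wafer: float) -> float:
    """Same ratio expressed per gigabyte."""
    return cost_per_tb(wafer_cost_usd, tb_per_wafer) / GB_PER_TB


if __name__ == "__main__":
    print(f"${cost_per_tb(WAFER_COST_USD, TB_PER_WAFER):,.0f} per TB")
    print(f"${cost_per_gb(WAFER_COST_USD, TB_PER_WAFER):.2f} per GB")
```

With those inputs the result is roughly $2,381 per TB, or about $2.38 per GB, which covers the wafer alone and nothing else that goes into a finished drive.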

------------------

I used to just want them to lower the price of SSDs, but then I realized that they are made of silicon-based memory and a silicon wafer costs a crapton to make. Now I understand why they go for speed over cost.

back_by_demand The SSD market should have capitalised on the recent HDD flood debacle to have an industry wide price drop, ramp up production and uptake would have gone through the roof.

stingstang (replying to back_by_demand) That actually did happen. It was only just recently that some SSDs went at or below the $1/GB threshold.

back_by_demand (replying to stingstang) It was close to that level already. A natural decrease to that level isn't good enough; a hard cut, maybe to 75 cents per GB, would have been enough to tip people over the edge.

freggo It would be interesting to see a graph comparison of the throughput development of hard drives vs. SSDs.

I have a feeling that hard drives have reached a plateau where the sizes go up but the MB/sec are at their technical limit while SSDs seem to be getting still faster and faster.

There's got to be a point when PC makers start offering systems that come standard with an SSD boot drive and an HD for mass storage.

HP...Dell... anyone reading this ?


CaedenV (replying to freggo) HDDs can be quite fast on sequential throughput, especially when you bring cost and size into consideration. You can easily have a larger and 'faster' RAID array for the same price as many high-end SSDs. The problem is when you start doing anything non-linear. Random seek time for HDDs is somewhere around 4.5-7ms, while a full seek from center to edge is ~8.5-10ms, and those numbers largely have not changed since the turn of the century. It is in this random I/O throughput, with an effectively 0ms seek time, that SSDs become so powerful, and no amount of RAIDing HDDs can catch up because it is a physical limit for each and every drive within the RAID. Anywho, my point is that SSDs are not the best tool for every job, and it will be a really, really long time before they are considered a good replacement for general bulk storage of things like video and audio, which require a ton (relatively) of space but do not require much throughput at all.

As far as SSDs becoming standard, that is one of the requirements of Ultrabooks. Granted, the SSDs they will be getting will be slower, cheaper SSDs, not screamers like these.
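To put rough numbers on the seek-time argument in this comment, here is a minimal Python sketch. It assumes each random request costs one seek and nothing else (rotational latency and transfer time are ignored, so real drives would do worse), and it pretends random I/O scales perfectly across an array, which is optimistic. The 90,000 IOPS target is the Everest 2 random-write figure from the article. The function names are hypothetical.

```python
# Rough random-I/O ceiling for a hard drive, derived only from seek time.
# Assumption: one seek per request and no other delays, so this is an
# upper bound; actual drives also pay rotational latency.
import math

SSD_RANDOM_IOPS = 90_000  # Everest 2 random-write figure quoted in the article


def hdd_iops(seek_ms: float) -> float:
    """Upper bound on random operations per second for a single drive."""
    return 1000.0 / seek_ms


def drives_to_match(target_iops: float, seek_ms: float) -> int:
    """Drives needed if random I/O scaled perfectly across an array.

    Equivalent to target_iops / hdd_iops(seek_ms), rearranged to avoid
    floating-point rounding before the ceiling is taken.
    """
    return math.ceil(target_iops * seek_ms / 1000.0)


if __name__ == "__main__":
    for seek_ms in (4.5, 7.0):
        print(f"{seek_ms} ms seek: ~{hdd_iops(seek_ms):.0f} IOPS per drive, "
              f"{drives_to_match(SSD_RANDOM_IOPS, seek_ms)} drives to reach "
              f"{SSD_RANDOM_IOPS:,} IOPS")
```

Even under these generous assumptions a single drive tops out at a couple of hundred random operations per second, and an array only adds operations in parallel; the wait for each individual request stays at the seek time.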