Intel Pairs Integrated Graphics With Discrete AMD Card in Search of Higher Performance

Intel proof-of-concept combines Intel HD 530 integrated graphics with an AMD Radeon RX 480 graphics card.

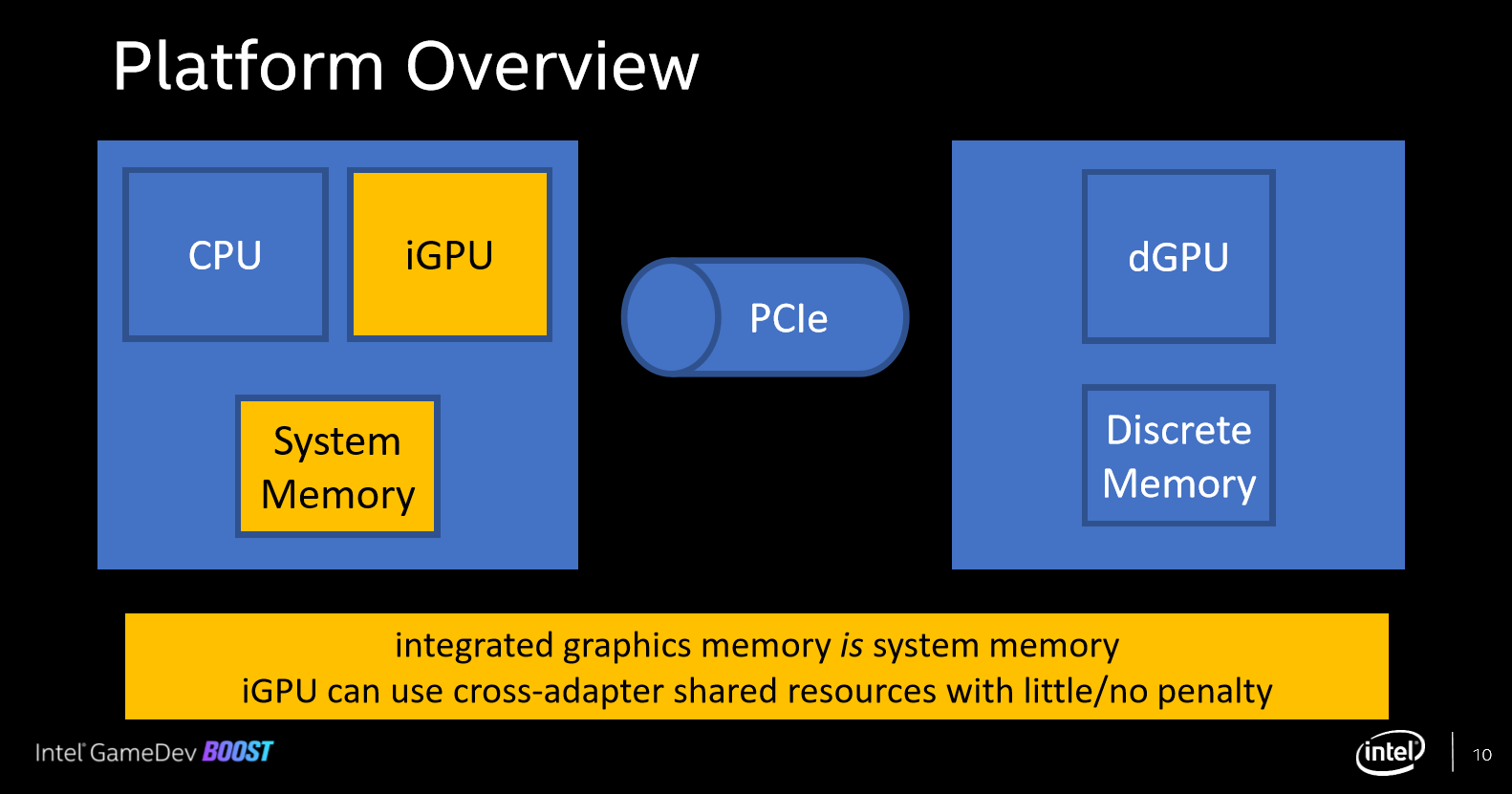

In a Game Developers Conference (GDC) 2020 presentation, Intel discussed offloading work from a discrete graphics card to a CPU's integrated graphics with Direct3D 12 (D3D12), the 3D graphics API in Microsoft's DirectX. Using an asynchronous multi-adapter workload, Intel ran a particle simulation on Intel HD 530 integrated graphics while AMD's Radeon RX 480 graphics card handled rendering.

With the decision to delay GDC 2020 until the summer, Intel hosted a virtual event and created a repository of its GDC 2020 content. Several presentations are already online.

Among those is one called ‘Multi-Adapter with Integrated and Discrete GPUs’. Intel noted that discrete graphics cards are often paired with CPUs with integrated graphics, and that it explored such “cases (async compute & post-processing) where the latest Intel integrated GPUs add significant performance” compared to only using the discrete graphics card.

This is accomplished by offloading some workloads to the integrated GPU. Intel’s methodology is to run the simulation (compute shader) on the integrated GPU, so the discrete GPU has more room to work on graphics.

Intel's proof of concept used Microsoft's D3D12 n-body particle simulation and simulated four million particles using Intel HD 530 graphics alongside AMD's Radeon RX 480 discrete GPU. Intel didn't say how the discrete GPU-only approach performed. Intel did, however, caution about PCIe bandwidth on a PCIe 3.0 x16 link: 4 million particles take up 64MB, so the PCIe bus will be saturated if that data is transferred 256 times per second (256 Hz).
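The 256 Hz figure is simple division: link bandwidth over bytes transferred per update. The sketch below reproduces it under two assumptions that are ours, not Intel's stated inputs: each particle occupies 16 bytes (for example one four-component float position), "4 million" means 4 × 2^20 particles, and a PCIe 3.0 x16 link moves a rounded 16 GiB/s. The function names are illustrative only.

```python
# Assumption: 16 bytes per particle (e.g. one float4 position).
BYTES_PER_PARTICLE = 16
# Assumption: a rounded ~16 GiB/s for a PCIe 3.0 x16 link.
PCIE3_X16_BYTES_PER_S = 16 * 1024**3


def buffer_bytes(num_particles, bytes_per_particle=BYTES_PER_PARTICLE):
    """Size of the particle buffer that must cross the bus each update."""
    return num_particles * bytes_per_particle


def saturation_rate_hz(num_particles,
                       link_bytes_per_s=PCIE3_X16_BYTES_PER_S,
                       bytes_per_particle=BYTES_PER_PARTICLE):
    """Update rate at which copying the whole buffer uses all link bandwidth."""
    return link_bytes_per_s / buffer_bytes(num_particles, bytes_per_particle)


particles = 4 * 1024 * 1024
print(buffer_bytes(particles) / 1024**2)  # 64.0 (MiB)
print(saturation_rate_hz(particles))      # 256.0 (Hz)
```

Halving the bytes per particle or the particle count doubles the headroom, which is why the data format matters as much as raw link speed.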

Intel said there are two ways to accomplish multi-adapter in D3D12.

The first is Linked Display Adapter (LDA). Here, the set-up appears as one adapter (D3D device) with multiple nodes, and resources are copied between nodes. Intel said this is typically symmetrical, which means that identical GPUs are used.


The second approach is explicit multi-adapter with shared resources, which is what Intel did.
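With explicit multi-adapter, the application itself enumerates the adapters and creates a separate D3D12 device for each one (in native code, through DXGI calls such as `IDXGIFactory1::EnumAdapters1` followed by `D3D12CreateDevice`), then decides which device does what. The Python sketch below only illustrates that role-assignment step. `AdapterInfo` and `assign_roles` are hypothetical names, the memory figures are made-up example values, and the rule "most dedicated video memory renders, least runs compute" is a simple heuristic of ours, not Intel's method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdapterInfo:
    # Hypothetical stand-in for the fields an adapter description exposes.
    name: str
    dedicated_video_memory: int  # bytes
    is_software: bool = False    # e.g. a software rasterizer, never used here


def assign_roles(adapters):
    """Return (render_adapter, compute_adapter) for an asymmetric pair.

    Heuristic: among hardware adapters, the one with the most dedicated
    video memory renders; the one with the least runs async compute.
    """
    hardware = [a for a in adapters if not a.is_software]
    if len(hardware) < 2:
        raise ValueError("explicit multi-adapter needs two hardware adapters")
    ranked = sorted(hardware, key=lambda a: a.dedicated_video_memory)
    return ranked[-1], ranked[0]


# Hypothetical example values, not measured figures.
adapters = [
    AdapterInfo("Intel HD 530", 128 * 1024**2),
    AdapterInfo("AMD Radeon RX 480", 8 * 1024**3),
    AdapterInfo("Microsoft Basic Render Driver", 0, is_software=True),
]
render, compute = assign_roles(adapters)
print(render.name, "/", compute.name)  # AMD Radeon RX 480 / Intel HD 530
```

A real application would also check that both devices support the cross-adapter sharing it needs before committing to this split.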

Intel also listed three possible uses for multi-adapter. One is to share rendering, such as alternating frames, but Intel said this is not suitable for asymmetric GPUs.

Another is to do post-processing on the integrated graphics, but this requires crossing the PCIe bus twice.
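Crossing the bus twice means every frame travels to the integrated GPU and back, so per-frame traffic doubles compared with a one-way copy. The arithmetic below assumes a 1920x1080 frame at 4 bytes per pixel, 60 frames per second, and a display driven by the discrete card; these are illustrative inputs, not figures from Intel's presentation, and the helper name is hypothetical.

```python
def pcie_bytes_per_second(bytes_per_frame, crossings_per_frame, fps):
    """Bus traffic when each frame's data crosses the link N times."""
    return bytes_per_frame * crossings_per_frame * fps


# Assumption: 1920x1080 frame, 4 bytes per pixel (e.g. RGBA8).
FRAME_BYTES = 1920 * 1080 * 4

# Post-processing on the integrated GPU: the frame goes over, then comes back.
post_process = pcie_bytes_per_second(FRAME_BYTES, crossings_per_frame=2, fps=60)
# The same data crossing only once, for comparison.
one_way = pcie_bytes_per_second(FRAME_BYTES, crossings_per_frame=1, fps=60)

print(post_process / 1e9, one_way / 1e9)  # 0.995328 0.497664 (GB/s)
```

Besides bandwidth, each crossing also adds a copy the rendered frame must wait on before it can be displayed.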

The third approach is to run "async compute" workloads, such as AI, physics, mesh deformation, particle simulation and shadows, on the integrated graphics. Intel found this approach to be the best because it follows a producer-consumer model in which the PCIe bus is crossed only once: one adapter produces content that the other consumes. Such tasks can also be offloaded completely. The approach works best when rendering doesn't have to wait on the compute results and when the compute work is allowed to take more than one frame.
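The producer-consumer pattern can be illustrated with a toy, CPU-only frame loop. This is not D3D12 code: in a real engine the hand-off would use cross-adapter shared resources and fences, while here the function `simulate_frames` and its parameters are hypothetical. The integrated GPU (producer) finishes a simulation step every few frames, and the discrete GPU (consumer) renders every frame with the newest completed step instead of waiting, so each result crosses the bus exactly once.

```python
def simulate_frames(num_frames, compute_frames_per_step):
    """Toy model of an async producer-consumer split between two GPUs.

    Producer (stand-in for the integrated GPU): completes a new simulation
    step every `compute_frames_per_step` frames, so work may span frames.
    Consumer (stand-in for the discrete GPU): renders every frame with the
    newest completed step and never stalls waiting for the producer.
    Returns (step rendered in each frame, number of one-way bus transfers).
    """
    if compute_frames_per_step < 1:
        raise ValueError("compute_frames_per_step must be >= 1")
    latest_completed = 0  # step 0: initial particle state, already on consumer
    next_step = 1
    progress = 0
    rendered = []
    transfers = 0
    for _ in range(num_frames):
        # Consumer: render with whatever finished data exists; no waiting.
        rendered.append(latest_completed)
        # Producer: advance asynchronously; publish when a step completes.
        progress += 1
        if progress == compute_frames_per_step:
            latest_completed = next_step  # result copied across the bus once
            transfers += 1
            next_step += 1
            progress = 0
    return rendered, transfers


print(simulate_frames(6, 2))  # ([0, 0, 1, 1, 2, 2], 3)
```

Rendering keeps its frame rate even when the simulation runs at half that rate; the cost is that the rendered particles lag the simulation by up to a couple of frames, which is why the approach suits work that tolerates multi-frame latency.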

Intel has published the sample code on GitHub.

Thoughts

We have previously reported on Intel adding multi-GPU support to its drivers in preparation for its discrete graphics, starting with DG1 this year.

Intel's GDC 2020 multi-adapter presentation seems narrower in scope but broader in potential adoption, as it details how Intel's integrated graphics could be paired with any discrete GPU to improve performance. Today, the integrated graphics would sit mostly idle during gaming.

This is not aimed at shared rendering, however; instead, it splits the workload and sends the asynchronous compute tasks to the integrated graphics. It also requires game developers to write code to support this model.

While this shows that Intel is working to make its integrated graphics more useful, and may foreshadow the multi-GPU support Intel plans for all-Intel discrete-plus-integrated set-ups, it remains to be seen whether Intel will also support the other approaches, shared rendering and post-processing, that it found less suitable for asymmetric set-ups.
