AMD's Radeon HD 5000-Series: Measuring Power Efficiency

Most of our graphics card reviews include power measurements at idle and load. But how do applications tax your GPU in between those two extremes? We line up several different programs and monitor power use across a handful of AMD's latest cards.

The Tests

Crysis serves as the baseline for our measurements, since gaming is the most popular workload for graphics cards. For this story, given the cards we were testing, we had to tone down the settings a bit. We chose the default CPU test under DirectX 9 (the High quality preset) at four resolutions (1024x768, 1280x720, 1680x1050, and 1920x1080). We picked the CPU test over the default GPU test for two reasons. First, its point of view is closer to the actual gaming experience. Second, the average frame rate from this test corresponds well to the performance you see throughout the single-player game. It is still sensitive enough to show differences between graphics cards and between settings and resolutions.

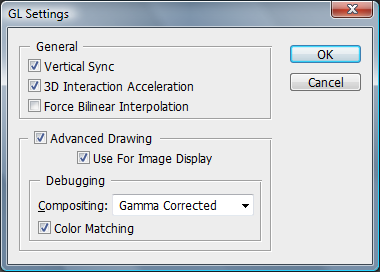

As our first test to compare against the Crysis baseline, we measured system power consumption while running Adobe Photoshop CS4 with GPU acceleration enabled via OpenGL. We zoomed in and out repeatedly using the Zoom tool, and then zoomed in quickly on selected parts of the image using the Bird's Eye tool. Finally, we rotated the image using the Rotate View tool. Since we had to do this manually, there might be some concerns about the accuracy and reproducibility of the workload from one system to the next. Thankfully, Adobe has done a very good job with Photoshop’s GPU acceleration. Power measurements were consistent from run to run.

We also ran the OpenGL viewport test in Cinebench R11. Here, we use peak system power consumption and compare it to the measurements taken in Photoshop. Since there's no way to accurately measure the performance of GPU-accelerated functions in Adobe’s software, we’re using the results from Cinebench as a measure of desktop application performance.
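For readers who want to reproduce this kind of measurement, here is a minimal sketch of how peak and average system power could be pulled from a series of power-meter readings. The function name `summarize_power` and the sample values are hypothetical placeholders for illustration, not our test data or tooling.

```python
from statistics import mean


def summarize_power(samples_w):
    """Return (peak, average) system power in watts from a list of readings."""
    if not samples_w:
        raise ValueError("need at least one power reading")
    return max(samples_w), mean(samples_w)


# Hypothetical wall-socket readings in watts, one per second (not measured data).
readings = [112.0, 118.5, 131.0, 127.5, 121.0]
peak_w, avg_w = summarize_power(readings)
print(f"peak: {peak_w:.1f} W, average: {avg_w:.1f} W")
```

Peak power is the figure to watch in a short benchmark like the viewport test, while the average says more about longer, steadier workloads.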

The third test is H.264-accelerated video playback in Cyberlink's PowerDVD 9.

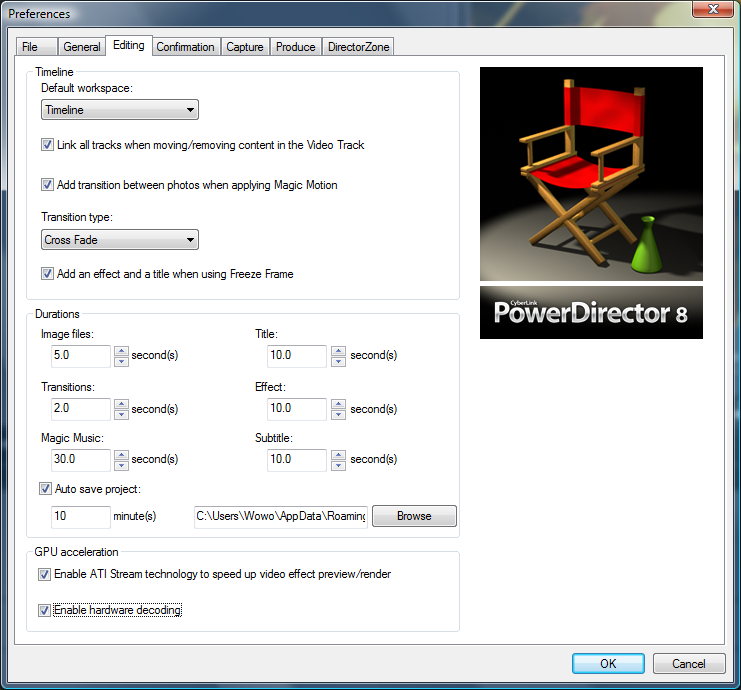

We've also conducted some tests with Cyberlink's PowerDirector 8, making sure GPU acceleration is active for accelerated video filters (these filters will work with both AMD’s Stream and Nvidia’s CUDA APIs). PowerDirector also supports video encoding with GPU acceleration.

Since PowerDirector 8 does not natively support hardware encoding on the GPU using AMD cards, we patched the application with the latest update (3022) and installed AMD’s Avivo transcoding tool. Disabling the hardware encoder and letting the processor handle encoding tasks changes the results significantly. The Radeon 2900 XT doesn't support hardware encoding and decoding, so only the filters will run on the GPU. Although the 790GX doesn't support hardware encoding, there is an option for hardware decoding, which we've left enabled.

For all of the Radeon HD 5000-series cards, we enabled hardware encoding, which is why you'll see such a large difference in performance.


As a side test, we've also included measurements taken with AMD’s Athlon II X2 250 with the graphics cards running GPU-accelerated filters and GPU encoding. This was done to gauge the value of using GPU-accelerated filters and encoding versus an all-CPU approach with the AMD Phenom II X4 955 BE. In addition to performance, we wanted to know how both setups differ in power consumption.
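Comparing two setups on both performance and power comes down to the energy each one uses to finish the same job: average power multiplied by the time taken. The sketch below illustrates that arithmetic. The helper `task_energy_wh`, the setup labels, and all of the numbers are assumptions made up for the example, not results from our test bench.

```python
def task_energy_wh(avg_power_w, duration_s):
    """Energy in watt-hours used by a task: average power (W) x time (h)."""
    return avg_power_w * duration_s / 3600.0


# Hypothetical (average system power in W, encode time in s) per setup.
# These are placeholder values, not measured results.
setups = {
    "dual-core CPU + GPU encoding": (150.0, 120.0),
    "quad-core CPU only": (180.0, 200.0),
}

energy_wh = {
    name: task_energy_wh(power_w, time_s)
    for name, (power_w, time_s) in setups.items()
}

for name, wh in energy_wh.items():
    print(f"{name}: {wh:.2f} Wh")
```

A setup that draws more power can still come out ahead if it finishes the job quickly enough, which is why we look at both numbers together.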


tony singh Very innovative article, Tom, keep it up!! A similar article covering various CPUs would be really useful.

spidey81 I know the FPS/watt wouldn't be as good, but what if the 5670 was crossfired. Would it still be a better alternative, efficiency wise, than say a 5850?

nforce4max Remember the R600 (2900xt) has an 80nm core while the 5870 has a 45nm core. Shrink the R600 and you will get the 3870 (55nm) that uses hardly any power.

rhino13 And now just for fun we should compare to Fermi.

Oh, wait, this just in:

There is a Fermi comparison chart that was available, but you needed to have two screens to display the bar graph for Fermi's power consumption and temperature. So the decision was made to provide readers with the single-screen-only version.


aevm I loved this part:

A mere 20 watts separate the Radeon HD 3300, HD 5670, HD 5770, and HD 5870 1 GB. So, in certain cases, the Radeon HD 5870 1 GB can still save enough power to close in on its more mainstream derivatives. Again, this is the case because the cards use a fixed-function video engine to assist in decoding acceleration, which is the same from one board to the next. Thus, even a high-end card behaves like a lower-end product in such a workload. This is very important, as you will see later on.

My next PC will be used mostly for movie DVDs and Diablo 3. Apparently if I get a 5870 1GB I get the best of both worlds - speed in Diablo and low power consumption when playing movies.

How about nVidia cards, would I get the same behavior with a GTX 480 for example?


Onus For those not needing the absolute maximum eye candy at high resolutions in their games, the HD5670 looks like a very nice choice for a do-it-all card that won't break the budget.

Next questions: First, where does the HD5750 fall in this? Second, if you do the same kinds of manual tweaking for power saving that you did in your Cool-n-Quiet analysis, how will that change the results? And finally, if you run a F@H client, what does that do to "idle" scores, when the GPU is actually quite busy processing a work unit?

eodeo Very interesting article indeed.

I'd love to see nvidia cards and beefier CPUs used as well. Normal non green hdds too. Just how big of a difference in speed/power do they make?

Thank you for sharing.

arnawa_widagda Hi guys,

Thanks for reading the article.

Next questions: First, where does the HD5750 fall in this? Second, if you do the same kinds of manual tweaking for power saving that you did in your Cool-n-Quiet analysis, how will that change the results? And finally, if you run a F@H client, what does that do to "idle" scores, when the GPU is actually quite busy processing a work unit?

We have no 5750 sample yet, but it should be relatively close to the 5770. For this article, we simply chose the best bin for each series (Redwood, Juniper and Cypress).

The second question, what will happen when you tweak the chip? Glad you asked!! I can't say much yet, but you'll be surprised what the 5870 1 GB can do.

As for NVIDIA cards, I'm hoping to have the chance to test GF100 and derivatives very soon.

Take care.