Can OpenGL And OpenCL Overhaul Your Photo Editing Experience?

In our continuing look at the heterogeneous computing ecosystem, it's time to turn our attention to the photo editing apps currently able to exploit OpenCL- and OpenGL-capable hardware. We interview experts, run benchmarks, and draw some conclusions.

Applications: Adobe Photoshop CS6

One common definition of heterogeneous computing is the integration of graphics capabilities on the same die as the CPU (central processing unit). This isn’t quite how Adobe uses the term. Rather, Adobe takes a more systemic view, looking for all the ways in which its software can leverage computing resources, and then discovering which areas of the system best provide those resources. As the company told us, heterogeneous computing is “multiple instruction sets and types of code in the same computer, and utilizing all those resources to give the users a better experience.”

The new Photoshop CS6 illustrates this very well. From its early days as a multi-threading pioneer to its newly added OpenCL support, Photoshop looks for ways to accelerate features wherever acceleration is feasible. The object of the game is to never delay the user’s experience. Ideally, filters should apply in real time, and a system should handle 30 layers on a 30-megapixel image with ease. Traditionally, we would have counted on CPU advances to make this dream a reality, but as we've seen from both AMD and Intel lately, expecting major leaps forward in processor performance will likely set you up for disappointment. Today, progress comes from integrating and using many different subsystems (including the GPU), faster pipes, more parallelism, and so on.

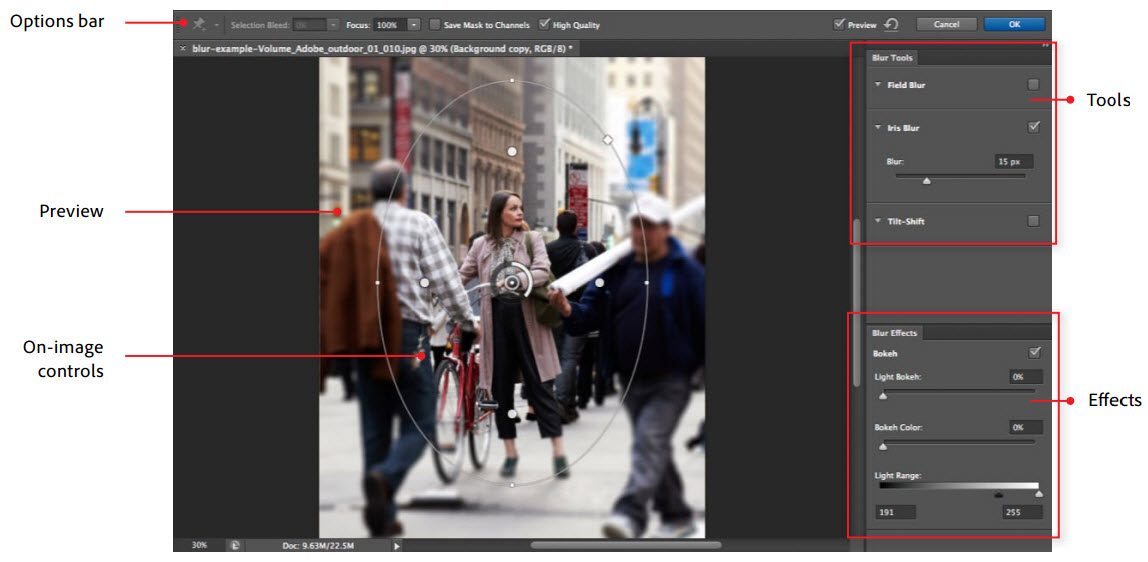

Because different software features and disparate workloads benefit from a variety of hardware design decisions, it’s impossible to have a complete discussion of GPU-based acceleration without examining the ways speed-ups are achieved. Photoshop CS6 introduces support for OpenCL in its Blur Gallery filter tools. Meanwhile, Photoshop continues to build on its OpenGL capabilities by accelerating many functions, including Liquify, adaptive wide angle, transform, warp, puppet warp, lighting effects, and pretty much every 3D feature (shadows, vanishing point, sketch rendering, etc.), except the ray tracer. Additionally, OpenGL powers the oil paint filter, while new features like background saving are threaded, which is really handy on very large jobs.

Despite Adobe being a large company and Photoshop being one of the industry’s most renowned applications, the Photoshop team is surprisingly small: fewer than 60 software and quality engineers. In our discussions, Adobe quipped at one point, “Throwing more bodies at a problem doesn't always make software better.” Especially given this relatively small head count, it’s doubly remarkable that Adobe has tightened its revision cycle from 18 to 24 months down to 12. Just keep in mind that with less development time per release, a given new technology will likely appear to be adopted more slowly from one version to the next.

Adobe provided us with two scripts for testing its latest OpenCL and OpenGL performance. In the first case, the choice was inevitable: Blur Gallery, the only family of OpenCL-accelerated filters in the application. We ran four variants of a general blur effect on a 60.2 MB, 5615 x 3744 PSD file. Each test used either RGB or CMYK color mode and a blur value of either 25 or 300, giving us four tests per blur group. The script applied the effect seven times and output a CSV file, from which we pulled the average time to complete the filter effect.
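The test matrix and averaging step described above can be sketched in a few lines of Python. This is a hypothetical illustration, not Adobe's script: we assume a CSV with a `test,run,seconds` header and one row per run, and the function names are our own. It enumerates the four color-mode and blur-value combinations and averages the seven runs recorded for each test.

```python
import csv
import io
import itertools
from collections import defaultdict
from statistics import mean

# Test matrix described in the text: two color modes x two blur values.
COLOR_MODES = ("RGB", "CMYK")
BLUR_VALUES = (25, 300)
RUNS_PER_TEST = 7  # the script applied each effect seven times


def build_test_matrix():
    """Return the four (color mode, blur value) combinations for one blur group."""
    return list(itertools.product(COLOR_MODES, BLUR_VALUES))


def average_times(csv_text, expected_runs=RUNS_PER_TEST):
    """Average the per-run times for each test in a results CSV.

    Assumes a hypothetical layout with a "test,run,seconds" header and one
    row per run; the real script's column names may differ.
    """
    runs = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        runs[row["test"]].append(float(row["seconds"]))
    averages = {}
    for test, times in runs.items():
        if len(times) != expected_runs:
            # A missing or extra run would skew the average, so flag it.
            raise ValueError(f"{test}: expected {expected_runs} runs, got {len(times)}")
        averages[test] = mean(times)
    return averages
```

Feeding `average_times` the contents of the script's CSV (file name and columns assumed) returns one average per test name. Checking the run count before averaging keeps a partial log from silently skewing a result.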

Adobe supplied a very similar script for its OpenGL-based Liquify filter, which had no varying parameters.

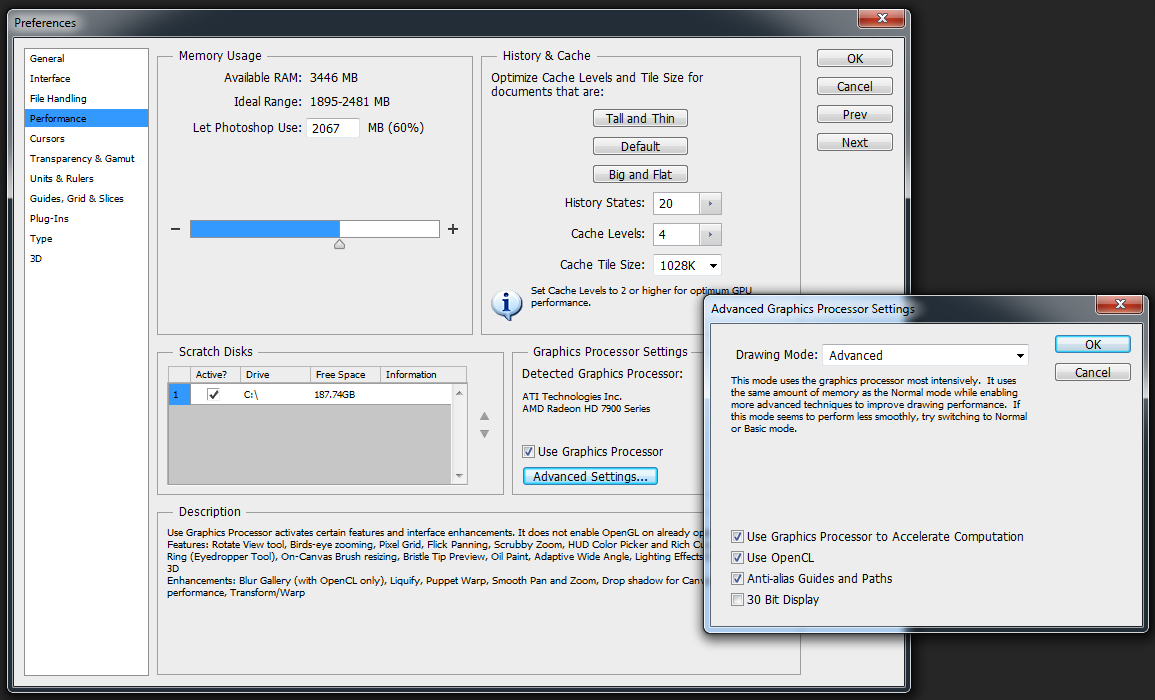

Note that we controlled GPU acceleration through Photoshop’s Preferences > Performance > Graphics Processor Settings options. In this area, unchecking the Use Graphics Processor box will disable all OpenGL and OpenCL acceleration, which turned out to be the only way to get accurate results for non-accelerated tests. The Advanced Settings button will spawn a pop-up with check boxes for OpenGL (unintuitively called Use Graphics Processor to Accelerate Computation) and OpenCL. Apparently, unchecking both of these still leaves OpenGL enabled.
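To make the baseline logic explicit, here is a hypothetical Python model of the checkbox behavior we observed. It is not Adobe's code; the field names are our own labels for the dialog's check boxes, and we assume the OpenCL box alone governs OpenCL whenever the master box is checked.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSettings:
    """Check boxes in Preferences > Performance > Graphics Processor Settings."""
    use_graphics_processor: bool  # master "Use Graphics Processor" box
    accelerate_computation: bool  # Advanced Settings box that controls OpenGL
    use_opencl: bool              # Advanced Settings OpenCL box


def active_paths(s: GpuSettings) -> set:
    """Return the acceleration paths we observed to be active (not Adobe's logic)."""
    if not s.use_graphics_processor:
        # The only state that gave us a true non-accelerated baseline.
        return set()
    # Observed: OpenGL stays on even when accelerate_computation is cleared,
    # so that field does not change the result in this model.
    paths = {"OpenGL"}
    if s.use_opencl:
        # Assumption: the OpenCL box alone governs OpenCL once the master box is on.
        paths.add("OpenCL")
    return paths
```

In this model, only a `GpuSettings` with `use_graphics_processor=False` returns an empty set, which is why clearing that box was the way to get non-accelerated results.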


alphaalphaalpha1: Tahiti is pretty darned fast for compute, especially for the price of the 7900 cards, and if many applications get proper OpenCL support, Nvidia might be left behind for a lot of professional GPGPU work unless it offers similar performance at a similar price point or some other incentive.

With the 7970 meeting or beating much of the far more expensive Quadro line, Nvidia will have to step up. Maybe a GK114 or a cut-down GK110 will be used to counter the 7900 series. I've already seen several forum threads saying the 7970 is incredible in Maya and some other programs, but since I'm not a GPGPU compute expert, I'm probably not in the best position to discuss this topic at a very advanced level. Would anyone care to comment on this (or correct me if I made a mistake)?

blazorthon (quoting A Bad Day): "How many CPUs would it take to match the tested GPUs?"

That would depend on the CPU.

de5_Roy: Can you test combinations like these:

- Core i5 + 7970
- Core i5 with HD 4000
- Trinity + 7970
- Trinity APU
- Core i7 + 7970
- Core i7 with HD 4000

and compare them against an FX-8150 (or Piledriver) + 7970?

It seemed to me as if the APU bottlenecks the 7970, and that the 7970 could work better with an Intel Core i5/i7 CPU on graphics processing workloads.

vitornob: Please test Nvidia cards. People need to know whether it's better/faster to go with OpenCL or CUDA.

bgaimur (quoting vitornob): "Please test Nvidia cards. People need to know whether it's better/faster to go with OpenCL or CUDA."

http://www.streamcomputing.eu/blog/2011-06-22/opencl-vs-cuda-misconceptions/

CUDA is a dying breed.