Can OpenGL And OpenCL Overhaul Your Photo Editing Experience?

In our continuing look at the heterogeneous computing ecosystem, it's time to turn our attention to the photo editing apps currently able to exploit OpenCL- and OpenGL-capable hardware. We interview experts, run benchmarks, and draw some conclusions.

Q&A: Under The Hood With AMD, Cont.

Tom's Hardware: How much enthusiasm have you found in the developer community? Are they coming to you saying, “Help us out—get us running with OpenCL”?

Alex Lyashevsky: Well, there is no resistance. Frankly, using something like OpenCL is not easy, but I try to break the psychological barrier. People start using OpenCL and often, because it is cumbersome, they won’t get the performance they expect from the marketing claims, so they assume it doesn’t work. Users think it’s a marketing ploy and they won’t use it. My goal is to break the barriers and show that there is a lot of benefit. How to do that is another matter. The problem is that it is not exactly C. It’s not that it’s quite different from C; it’s the problem of mindset. You have to understand that you are really dealing with massively parallel problems. It’s more a problem of people understanding how to run parallel 32 or 64 synchronous threads, and this prevents wider, easier adoption.

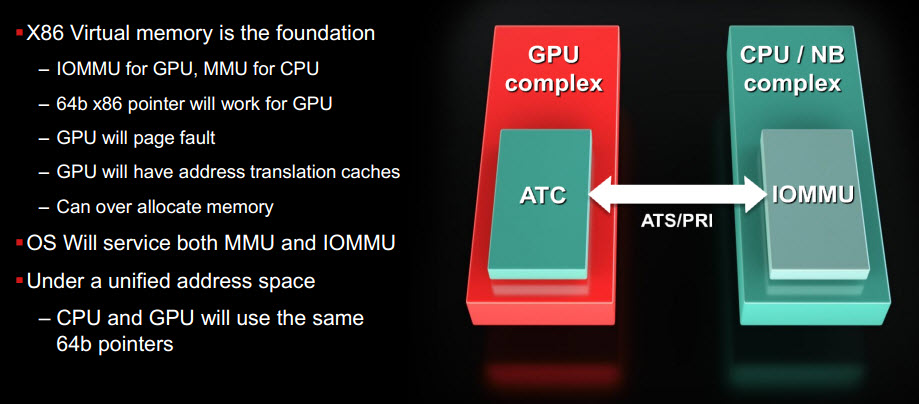

From there, you probably know there are architectural problems. There are system-wide performance concerns, because we need to move data from the CPU or system sides, or “system heaps” as we say, to the video heap to get the best performance. That movement, first of all, causes a problem and resistance. Second, on a system level, it decreases performance because you need to move the data. The question is how efficiently you move it. That’s what we explain how to do, even without optimal efficiency. The future will have a fully unified memory as part of HSA, and it’s physically unified on our APUs, but not unified on our discrete offerings. So, to get the best performance from our device, you have to use specialized memory or a specialized bus. This is another piece of information that people miss when they start developing with OpenCL. People come with some simplified assumption and feel that they cannot or should not differentiate CPU architectures from GPU architectures. And fortunately or unfortunately—it’s difficult to say—while OpenCL is guaranteed to work on both sides, there is no guarantee that CPU and GPU performance will be equal on both sides. You have to program knowing that you are coding for the GPU, not the CPU, to make the best use of OpenCL on the GPU.
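To put rough numbers on the data-movement point, here is a minimal back-of-the-envelope sketch in Python. It is not taken from AMD's material: the function name `effective_speedup`, the image size, the timings, and the 8 GB/s bus figure are all hypothetical values chosen for illustration, and treating unified memory as zero copy cost is an idealization.

```python
def effective_speedup(cpu_seconds, gpu_kernel_seconds, bytes_moved, bus_bytes_per_second):
    """Speedup of the GPU path over the CPU path once copy time is included."""
    transfer_seconds = bytes_moved / bus_bytes_per_second
    return cpu_seconds / (gpu_kernel_seconds + transfer_seconds)


# Hypothetical figures for one filter pass over a 16-megapixel RGBA float image.
image_bytes = 16_000_000 * 4 * 4      # pixels * channels * bytes per float
round_trip_bytes = 2 * image_bytes    # upload to the video heap, then read back
cpu_seconds = 0.400                   # assumed CPU time for the filter
gpu_kernel_seconds = 0.020            # assumed GPU kernel time for the same filter
bus_rate = 8e9                        # assumed bus throughput in bytes per second

# Speedup if only the kernel time is counted (the "marketing" number).
kernel_only = cpu_seconds / gpu_kernel_seconds

# Speedup once the round trip across the bus is charged to the GPU path.
with_copies = effective_speedup(cpu_seconds, gpu_kernel_seconds, round_trip_bytes, bus_rate)

# Idealized unified memory: no bytes cross a bus, so the copy term vanishes.
unified = effective_speedup(cpu_seconds, gpu_kernel_seconds, 0, bus_rate)

print(f"kernel only: {kernel_only:.1f}x, with copies: {with_copies:.1f}x, unified: {unified:.1f}x")
```

Under these assumed numbers the copies take longer than the kernel itself, which is one way a developer could see far less than the advertised gain without the GPU being at fault.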

Tom's Hardware: In a nutshell, what is your mission? What message must get through to developers?

Alex Lyashevsky: First of all, you must understand that you have to move data. Second, understand that your programming must massively parallelize the data. And third, almost the same as the second, you have to understand that optimization is achieved through parallel processing. You have to understand the architecture you are programming for. That’s the purpose of the help we are providing to developers, and it’s starting to really pay off. We have surveys of developers in multiple geographies showing that OpenCL is gaining a lot of momentum.
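The shift in mindset described here can be sketched in plain Python. This is a sequential emulation for illustration only, not OpenCL code and not AMD's method: `brighten_kernel`, `launch`, and the sample data are hypothetical names and values, and the group width of 64 is an assumption based on the "32 or 64 synchronous threads" mentioned earlier in the interview.

```python
WAVEFRONT_SIZE = 64  # assumed lockstep group width; the interview mentions 32 or 64


def brighten_kernel(global_id, src, dst, gain):
    """Body of one work-item: handles exactly one sample and contains no loop."""
    dst[global_id] = min(255, int(src[global_id] * gain))


def launch(kernel, global_size, *args):
    """Sequentially emulate a launch: one kernel call per work-item, batched
    into groups the way hardware would. Returns the number of groups issued."""
    groups = 0
    for start in range(0, global_size, WAVEFRONT_SIZE):
        groups += 1
        end = min(start + WAVEFRONT_SIZE, global_size)
        for global_id in range(start, end):
            kernel(global_id, *args)
    return groups


pixels = [0, 50, 100, 200, 255] * 40   # 200 hypothetical 8-bit samples
result = [0] * len(pixels)             # separate output buffer, as a kernel would use
groups = launch(brighten_kernel, len(pixels), pixels, result, 1.5)

print(groups, result[:5])
```

The point of the sketch is that the kernel is written from the perspective of a single element, and the loop lives in the launch machinery. On real hardware the inner loop would run in lockstep across the group rather than one item at a time.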

Tom's Hardware: Let’s talk about APU in the context of mobile computing and power. Have the rules of power-savings algorithms changed? Does an APU throttle differently depending on AC or battery power?

Alex Lyashevsky: On CPU, I’m afraid this is the same thing. The memory and power management system is very sophisticated, and sometimes it depends on the operating system. The operating system has most of the rights to decrease activity. But our GPU is pretty adaptive, and its activity sometimes even affects our performance management. If you don’t put reasonable load pressure on our hardware, it will try to be as low-power as possible. It basically slows itself down when it doesn’t have enough work to do. So yeah, it is adaptive. I couldn’t say that it’s absolutely great, but we try to make it as power-efficient as possible.


alphaalphaalpha1 Tahiti is pretty darned fast for compute, especially for the price of the 7900 cards, and if too many applications get proper OpenCL support, then Nvidia might be left behind for a lot of professional GPGPU work if they don't offer similar performance at a similar price point or some other incentive.

With the 7970 meeting or beating much of the far more expensive Quadro line, Nvidia will have to step up. Maybe a GK114 or a cut-down GK110 will be put into use to counter 7900. I've already seen several forum threads talking about the 7970 being incredible in Maya and some other programs, but since I'm not a GPGPU compute expert, I guess I'm not in the best position to consider this topic on a very advanced level. Would anyone care to comment (or correct me if I made a mistake) about this? -

blazorthon A Bad Day: How many CPUs would it take to match the tested GPUs?

That would depend on the CPU. -

de5_Roy can you test like these combos:

core i5 + 7970

core i5 hd4000

trinity + 7970

trinity apu

core i7 + 7970

and core i7 hd 4000, and compare against fx8150 (or piledriver) + 7970.

it seemed to me as if the apu bottlenecks the 7970 and the 7970 could work better with an intel i5/i7 cpu on the graphical processing workloads. -

vitornob Nvidia cards test please. People needs to know if it's better/faster to go OpenCL or CUDA. -

bgaimur vitornob: Nvidia cards test please. People needs to know if it's better/faster to go OpenCL or CUDA.

http://www.streamcomputing.eu/blog/2011-06-22/opencl-vs-cuda-misconceptions/

CUDA is a dying breed.