SIPEW 2008: All About Benchmarking

Scheduling for Individual Servers

This session was one of the keynotes of the SIPEW 2008, and it dealt with results of stochastic analyses on server farms. More specifically, Professor Mor Harchol-Balter, who is the head of graduate education at the Carnegie Mellon University’s department of computer science, discussed scheduling in server farms as opposed to single servers.

Her presentation went into detail on various approaches to deal with scheduling while considering short response times—different techniques of routing and dispatching workloads to different servers can lead to greatly varying performance. A good starting point is a quick look back at how Windows 3.1 implemented multitasking versus to modern operating systems such as Windows 2003/XP or Vista, as distributing workloads across servers is very much a multitasking approach as well. At the time of Windows 3.1, cooperative multitasking relied on individual processes with no prioritization, which means that a thread often did not return to the operating system until it finished. This meant that while it was not necessary to synchronize various threads, individual processes could stall the entire system.

Task Dispatching Matters

Modern operating systems are based on preemptive multitasking, where the operating system kernel manages assigning threads to available processor resources. This can be done according to simple distributions such as a round robin approach, or based on other prioritization policies. While the performance results are predictable for systems with a single processor, task scheduling gets trickier as soon as multiple cores become available. Latency is added once interfaces have to be used, which is why professional operating systems are node-aware, meaning that they distribute threads according to the available processor sockets and cores in an effort to avoid unnecessary traffic. Obviously, job assignment in server farms, where a multitude of systems is involved, offers more options for how to handle task routing and how to adjust dispatching policies to reach maximum performance.

Task Scheduling Policies for Individual Servers

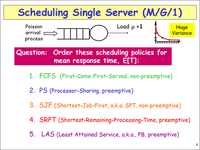

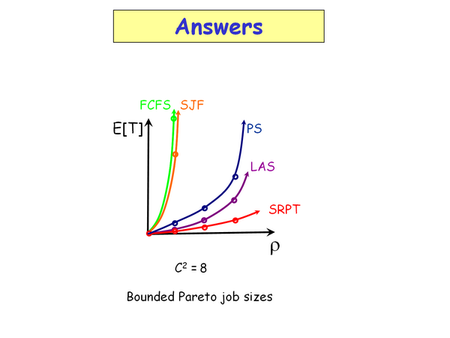

Mor Harchol-Balter talked about different scheduling policies for individual servers first:

- FCFS (First-Come-First-Served, non-preemptive)In this case, incoming jobs are distributed on a first come, first served basis, assigning workloads to available processing resources.

- PS (Processor-Sharing, preemptive)Processor sharing is very much what we know of as preemptive multitasking. All incoming workloads are distributed evenly across available processor resources.

- SJF (Shortest-Job-First, a.k.a. SPT, non-preemptive)This scheduling approach always first executes those workloads that can be completed fastest. However, tasks will always be processed until they’re completed.

- SRPT (Shortest-Remaining-Processing-Time, preemptive)In this approach, short workloads are prioritized, assigning them to available processor resources. As in processor sharing, workloads may be switched.

- LAS (Least Attained Service, preemptive)This method distributed workloads evenly to processing resources to create a balanced utilization.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Current page: Scheduling for Individual Servers

Prev Page SIPEW 2008 Next Page Scheduling in Server Farms