Soft Machines' 'Virtual Cores' Promise 2-4x Performance/Watt Advantage Over Competing CPUs

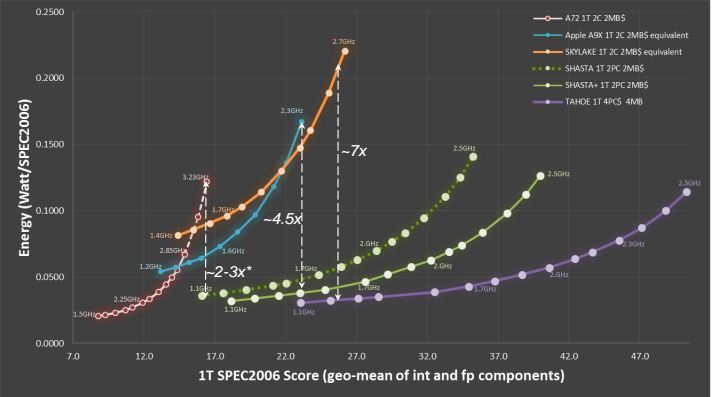

Soft Machines, a well-funded startup ($175 million to date) that came out of stealth last year, announced its "Virtual Instruction Set Computing" (VISC) architecture, which promises 2-4x higher performance/Watt compared to existing CPU designs.

Current CPU architectures scale performance by using wider designs and out-of-order execution to improve instruction-level parallelism (ILP) and by adding cores to improve thread-level parallelism (TLP). These techniques are limited by Amdahl’s law, however, and they lead to larger, more power-hungry processors. The challenges of multi-threaded programming, which is necessary to extract the full benefit of multiple CPU cores, also place limits on achieving high levels of TLP.
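To make the Amdahl's law limit concrete, here is a minimal sketch of the standard formula, speedup = 1 / ((1 - p) + p / n), where p is the fraction of the work that can run in parallel and n is the number of cores. The function name and the 75% figure are illustrative assumptions, not numbers from Soft Machines.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup under Amdahl's law for a given parallel fraction and core count."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part never gets faster; only the parallel part is divided across cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workload: 75% of the work parallelizes.
# Doubling the core count repeatedly gives smaller and smaller gains,
# and the speedup can never reach 1 / 0.25 = 4x.
speedups = {n: amdahl_speedup(0.75, n) for n in (2, 4, 8, 16)}
```

With these assumed numbers, going from 2 to 16 cores only roughly doubles the speedup, which is the diminishing-returns problem the paragraph above describes.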

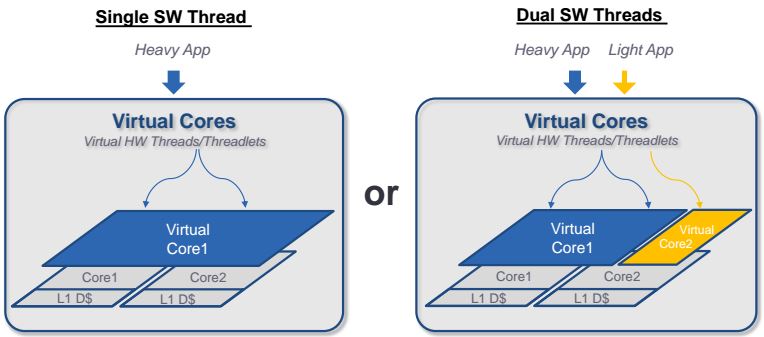

To improve performance/Watt scaling, Soft Machines is taking a different approach. Its architecture uses “virtual cores” (VC) that shift the burden of thread scheduling and synchronization from the software programmer and operating system to the hardware itself. With VISC, a single thread is not restricted to a single core, as it is in traditional multiprocessor designs. Instead, the VCs break it down into smaller threadlets that execute on multiple underlying physical cores (PC). By using the available execution units more efficiently, the VISC architecture can, in theory, maintain high performance even when using smaller, simpler physical cores, which reduces power consumption. Another advantage of this technique is that single-threaded applications can execute on multiple physical cores.
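The article does not describe how VISC hardware actually forms or dispatches threadlets, so the following is only a toy model of the general idea: operations from one thread can be spread across several cores when their inputs are ready, but a chain of dependent operations cannot. The `schedule` function, the greedy policy, and both example workloads are hypothetical and are not Soft Machines' mechanism.

```python
from typing import Dict, List


def schedule(deps: Dict[str, List[str]], cores: int) -> List[List[str]]:
    """Greedy toy scheduler: each cycle, issue up to `cores` ops whose inputs are done.

    `deps` maps each op to the ops it depends on, listed in program order.
    Returns the ops issued in each cycle.
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    done = set()
    remaining = list(deps)  # dicts preserve insertion (program) order
    cycles: List[List[str]] = []
    while remaining:
        ready = [op for op in remaining if all(d in done for d in deps[op])]
        if not ready:
            raise ValueError("cyclic or unknown dependency")
        issued = ready[:cores]  # one op per core per cycle
        cycles.append(issued)
        done.update(issued)
        remaining = [op for op in remaining if op not in done]
    return cycles


# Hypothetical single thread with some independent work: c needs a and b, f needs c and e.
mixed = {"a": [], "b": [], "c": ["a", "b"], "d": [], "e": ["d"], "f": ["c", "e"]}
# Hypothetical fully sequential thread: every op needs the previous one.
chain = {"a": [], "b": ["a"], "c": ["b"], "d": ["c"]}

mixed_cycles = {n: len(schedule(mixed, n)) for n in (1, 2)}
chain_cycles = {n: len(schedule(chain, n)) for n in (1, 4)}
```

In this model the mixed workload finishes in fewer cycles on two cores than on one, while the dependent chain takes the same number of cycles no matter how many cores are available. Real hardware would also have to account for communication between cores, which this sketch ignores.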

Soft Machines claimed that its virtual cores can either deliver 2-4x the performance (and thus performance/Watt) at the same power consumption level, or decrease power consumption by 4x at the same performance level, relative to existing designs.

Unlike ARM, which licenses its core design IP, or Intel, which manufactures its own cores and SoCs, Soft Machines will partner with other companies to create custom processors and SoCs.

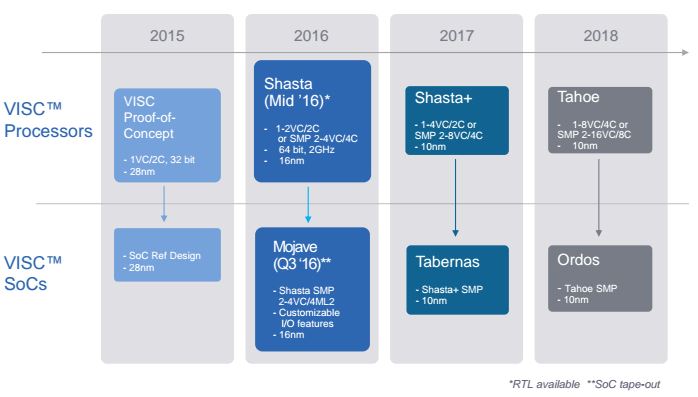

The company said that the first processor, called “Shasta,” will tape out on TSMC’s 16FF+ process node in mid-2016. That means we probably won’t see a shipping product until at least a year later.

Shasta will contain two physical cores running at up to 2 GHz each, with 1 MB of cache per core and a high-speed 256-bit read/write system interface unit. Shasta will use a custom 64-bit ISA but will support guest ISAs as well. The corresponding SoC, which will contain up to two Shasta processors and 2-4 virtual cores, is called “Mojave” and will tape out in Q3 2016. The chips are designed to scale from mobile up to server markets.

In 2017, the company’s roadmap includes the “Shasta+” processor with the corresponding “Tabernas” SoC. Shasta+ will have 1-4 virtual cores for 2 physical cores, or 2-8 virtual cores for 4 physical cores, and it will include additional architectural enhancements. The processor will be made on a 10nm process node.

For 2018, the company will work on the “Tahoe” processor with 1-8 virtual cores and 4 physical cores, as well as a 2-16VC/8C variant. The corresponding SoC will be called “Ordos.”

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security. You can follow him at @lucian_armasu.

Puiucs There is a reason why single-threaded instructions aren't already using multiple cores. While I think their design can work, I feel like they are omitting a lot of details about real performance and limitations.

What I'm more interested in is the dynamic balancing of the cores in the case of multiple threads. This is something that can really improve performance by a lot if done right.

CRITICALThinker Something tells me this design would pair really well with the ideas behind Bulldozer and its multithreading capabilities.

Mahdi_B Ok but I don't seem to understand exactly what we're talking about. Is this an x86 desktop processor or an ARM or perhaps a new IS called VISC? (In which case I don't really see how it will work considering existing legacy apps and operating systems.)

Epsilon_0EVP (quoting Mahdi_B) "Ok but I don't seem to understand exactly what we're talking about, is this an x86 desktop processor or an ARM or perhaps a new IS called VISC? (in which case I don't really see how it will work considering existing legacy apps and operating systems)"

It's a new IS, yes. It uses a "custom 64-bit ISA," but the article also says they might use other architectures.

Backwards compatibility isn't an issue, since this is mostly designed for servers. Since most servers run Linux and open-source software, recompiling the necessary code isn't particularly difficult once a proper compiler is set up for the architecture. However, these chips will indeed take much longer to arrive in consumer PCs (if ever).

bourgeoisdude I wonder if this would be helpful with console/system emulation as well. If this were licensed to Microsoft or Sony, for example, it could make it easier to emulate software from prior consoles.

ammaross It will be interesting to see how they manage. The big thing preventing single-threaded apps from being well-threaded is sequential dependence that prevents out-of-order execution (can't do C until A+B is calculated or done). However, many things in an app CAN be threaded, but lazy programming usually leads to heap access (shared memory), and managing that makes threading difficult. They must feel their tech can outperform branch prediction and such, though, or else they'd likely not be trying to bring it to market.