Can The Flash-Based ioDrive Redefine Storage Performance?

Thoughts, Reliability, First Results

We already mentioned the manufacturer's reliability statements: Fusion-io promises 24 years of reliability for the 80 GB entry-level model at a 40% duty cycle and 5 TB of writes or erases per day. However, if drive activity is more intensive than expected, 5 TB can actually be written within a couple of hours. You can run about two hours of full-speed write operations per day and still stay within Fusion-io's forecast, but be careful beyond that. As with other storage devices, backup should be a top priority, and it isn’t difficult to perform given the relatively small total capacities of the ioDrive.
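To put the daily write budget into perspective, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, the 5 TB figure is treated as decimal terabytes, and the 500 MB/s rate in the usage lines is an assumed value, not a measured result.

```python
# Rough arithmetic for a daily write budget (illustrative sketch only).
# Assumption: "5 TB per day" means 5 * 10**12 bytes (decimal units).
DAILY_WRITE_BUDGET_BYTES = 5 * 10**12


def seconds_to_exhaust_budget(write_rate_mb_s: float) -> float:
    """Seconds of sustained writing at the given rate (MB/s) until the budget is used up."""
    return DAILY_WRITE_BUDGET_BYTES / (write_rate_mb_s * 10**6)


def max_rate_for_duration(hours: float) -> float:
    """Highest sustained write rate (MB/s) that stays within the budget for the given duration."""
    return DAILY_WRITE_BUDGET_BYTES / (hours * 3600) / 10**6


# Hypothetical sustained write rate of 500 MB/s (an assumption for this example).
print(seconds_to_exhaust_budget(500.0) / 3600)  # hours until 5 TB is written
print(max_rate_for_duration(2.0))               # MB/s that fits into two hours
```

Under these assumptions, two hours of writing per day stays within the budget as long as the sustained rate is below roughly 700 MB/s.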

Write Performance

We tried all three performance settings: maximum capacity, improved write performance at 50% of total capacity, and maximum write performance with 30% of capacity remaining. We found that the improved write performance mode actually doubled write throughput in some benchmarks. However, the maximum write performance mode added little further benefit. If you aren’t sure which setting to use, make some test runs on a development system comparable to your production machine.

I/O Versus Throughput

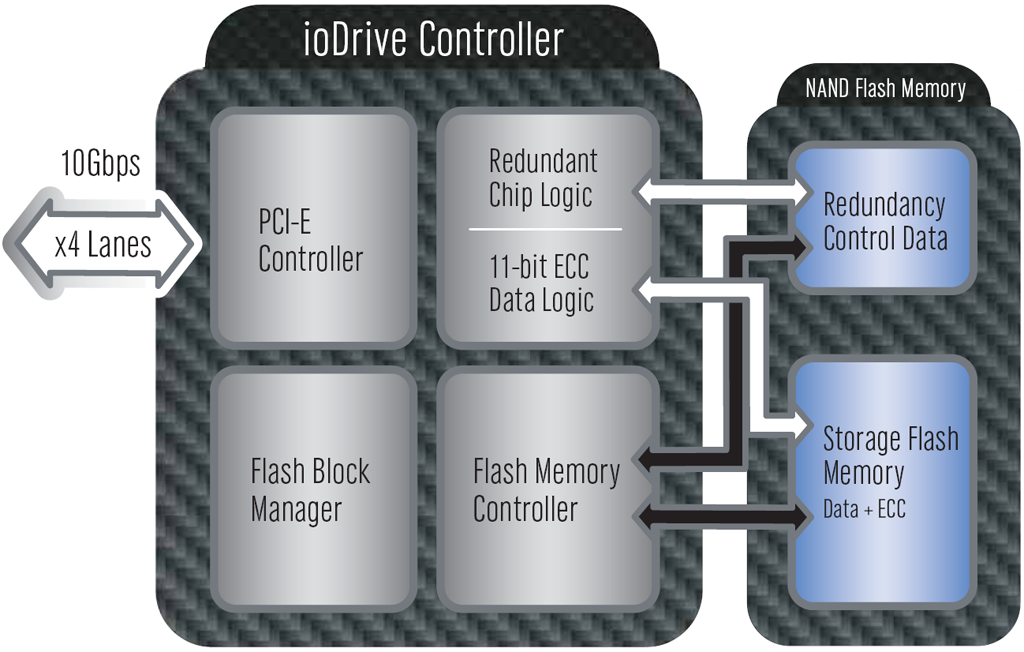

Flash memory performance depends a lot on the flash memory controller, which has to consider the characteristics of SLC and MLC flash memory. Smart controllers reduce the number of physical write operations and thereby limit so-called write amplification. Because flash SSDs are organized in blocks of data, the block size defines the minimum amount of data that can be written to a flash SSD. Writing only 2 KB of data may trigger a 128 KB write, although it is not logically needed.
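The 2 KB versus 128 KB example can be expressed as a simple ratio. The following sketch uses a deliberately simplified model in which every write is rounded up to whole blocks; real controllers buffer and combine writes, so this is a worst-case illustration with made-up function names, not a description of how the ioDrive works internally.

```python
# Simplified write amplification model (illustrative sketch only).
# Assumption: each logical write is rounded up to whole blocks and no
# buffering or write combining takes place.

def physical_write_bytes(logical_bytes: int, block_bytes: int) -> int:
    """Bytes actually written when a request is rounded up to whole blocks."""
    blocks = -(-logical_bytes // block_bytes)  # ceiling division
    return blocks * block_bytes


def write_amplification(logical_bytes: int, block_bytes: int) -> float:
    """Ratio of physically written bytes to logically requested bytes."""
    return physical_write_bytes(logical_bytes, block_bytes) / logical_bytes


KB = 1024
print(write_amplification(2 * KB, 128 * KB))    # the 2 KB write from the text
print(write_amplification(128 * KB, 128 * KB))  # a write that fills a whole block
```

In this model the 2 KB write is amplified by a factor of 64, while a write that fills a complete block has a factor of 1.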

Most flash memory controllers adjust to the workload they have to take on, which means that performance may change drastically if you switch from intensive, small, random I/O operations to sequential reads or writes. We also looked at this, and found that there is a negative impact on throughput right after an intensive I/O workload; Fusion-io manages to readjust performance quickly (although not instantly). At the same time we have to underscore that no one would buy an ioDrive for sequential storage operations: hard drives are much cheaper and even faster in that mode.
