Report: Why The GeForce RTX 3080's GDDR6X Memory Is Clocked at 19 Gbps

German publication Igor's Lab has launched an investigation into why Nvidia chose 19 Gbps GDDR6X memory for the GeForce RTX 3080 and not the faster 21 Gbps variant. There are various claims, but it's not entirely clear how exactly some of the testing was conducted, or where the peak temperature came from.

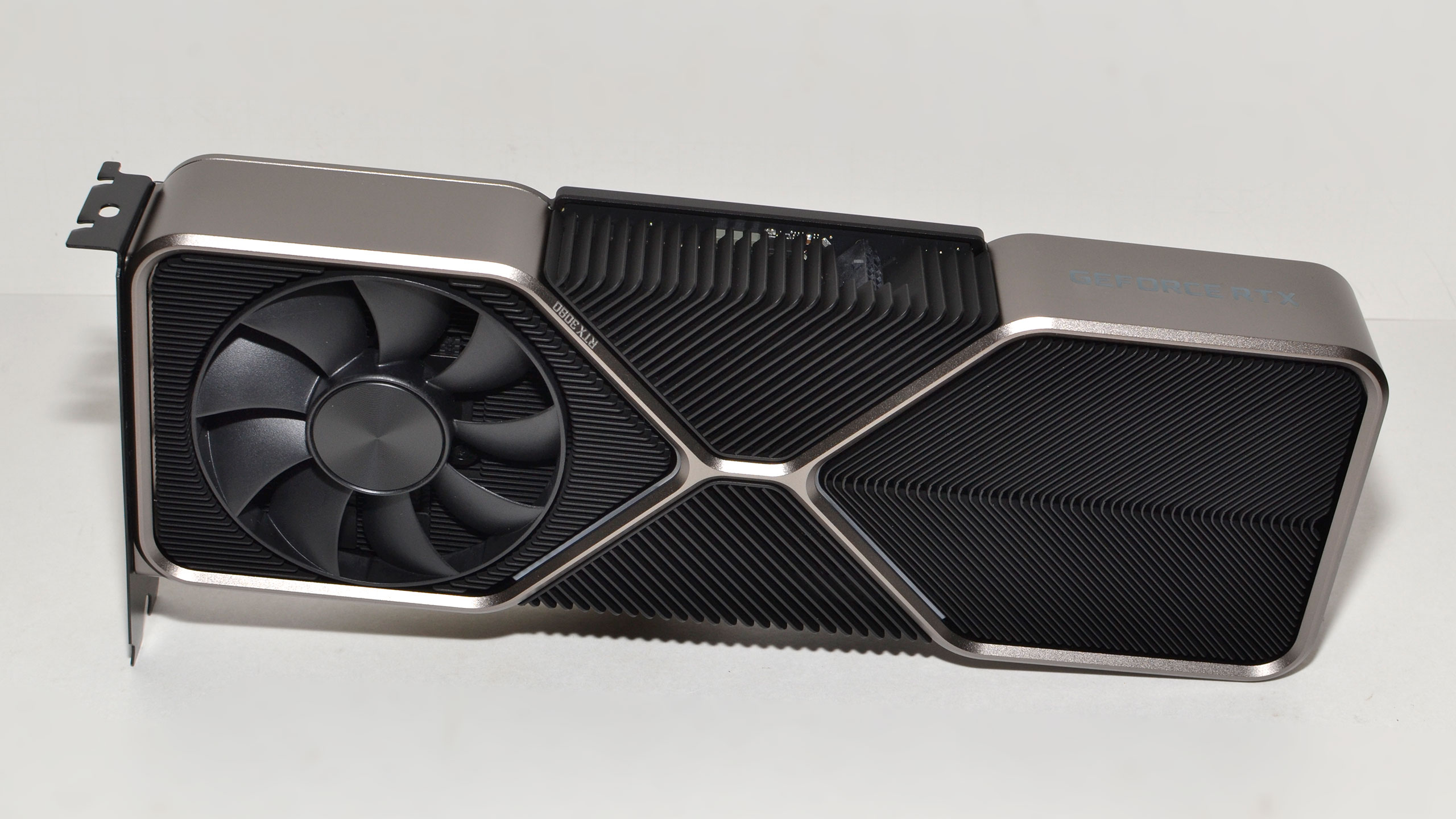

The GeForce RTX 3080, the newly anointed king of gaming graphics cards, comes equipped with 10GB of GDDR6X memory. Unsurprisingly, its 10 memory chips come from Micron, since the U.S. chipmaker is the only company that produces GDDR6X memory. Some might find it intriguing, however, that Nvidia opted for the 19 Gbps variant when Micron also offers 21 Gbps chips. Since Nvidia is Micron's only GDDR6X customer, supply shouldn't be a problem.
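
To put the 19 Gbps versus 21 Gbps choice in perspective, peak memory bandwidth is the per-pin data rate multiplied by the bus width. The sketch below assumes each of the 10 GDDR6X chips contributes a 32-bit interface, which gives the RTX 3080's 320-bit bus; the function name is illustrative only.

```python
CHIP_COUNT = 10      # GDDR6X chips on the RTX 3080, per the article
BITS_PER_CHIP = 32   # assumption: each GDDR6X chip exposes a 32-bit interface


def peak_bandwidth_gbs(data_rate_gbps: float,
                       chips: int = CHIP_COUNT,
                       bits_per_chip: int = BITS_PER_CHIP) -> float:
    """Peak bandwidth in GB/s: per-pin rate (Gb/s) x bus width (bits) / 8 bits per byte."""
    bus_width_bits = chips * bits_per_chip
    return data_rate_gbps * bus_width_bits / 8


for rate in (19.0, 21.0):
    print(f"{rate:.0f} Gbps -> {peak_bandwidth_gbs(rate):.0f} GB/s")
```

Under these assumptions, the 21 Gbps parts would add about 80 GB/s, or roughly 10.5%, of peak bandwidth over the 19 Gbps chips Nvidia chose.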

The research from Igor's Lab suggests that Nvidia's choice was driven by thermal issues, which could also explain why attempts to push the memory beyond 20 Gbps rarely succeed.

For reference, Micron rates its GDDR5, GDDR5X and GDDR6 memory chips with a Maximum Junction Temperature (TJ Max or Tjunction Max) of 100C (degrees Celsius), with a recommended operating range of 0C to 95C. Micron's published material on GDDR6X doesn't reveal the TJ Max for the new memory chips, so there's still a bit of mystery to the topic. According to Igor, the general consensus is that GDDR6X chips can reach around 120C before they suffer damage. That would put the Tjunction limit somewhere between 105C and 110C.

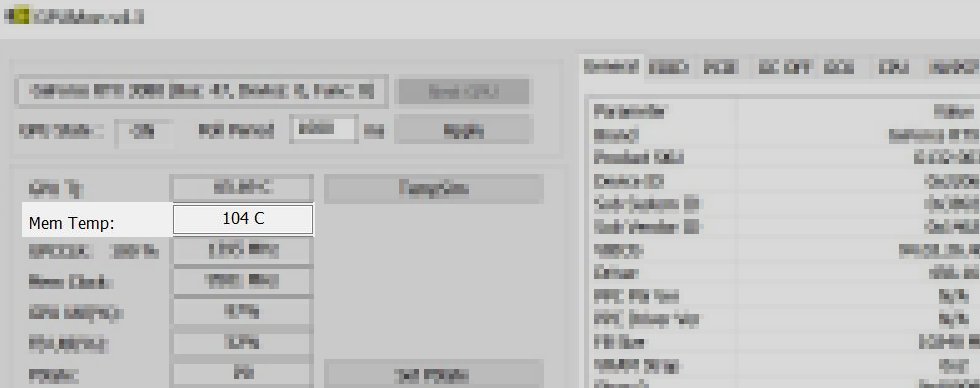

To test his theory, Igor coated the back of his GeForce RTX 3080 with a transparent substance that has an emissivity of roughly 0.95 (a coating with known, high emissivity lets a thermal camera convert its infrared readings into accurate surface temperatures). He didn't name the material, but stated that it's widely used in the industry to protect components against environmental hazards such as high air humidity. He kept the ambient temperature at 22C and ran The Witcher 3 at 4K for 30 minutes.

He then took readings of the board with his Optris PI640 thermal camera and special software that's only available for internal use. The result is the image above, which is blurred out except for the memory temperature field.

According to the results, the hottest GDDR6X memory chip reached a Tjunction temperature of 104C, around 20C hotter than the bottom of the board. Another interesting discovery is that adding a thermal pad between the backplate and the board lowers the board temperature by up to 4C and the Tjunction temperature by around 1C to 2C.


Again, it's not clear exactly how all of the testing was conducted, and reading memory temperatures from underneath a heatsink is tricky in the best of situations. The takeaway is that the GDDR6X memory chips run hotter on the inside of the card than on the outside (under the backplate) because of surrounding components such as the voltage regulators. Even if the Tjunction limit were raised to 110C, the extra 6C over the measured 104C doesn't leave much headroom before throttling kicks in. That's yet another reason to ensure your PC has sufficient cooling if you plan on running an RTX 3080 with a TGP of 320W or higher.
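
The headroom arithmetic in the paragraph above can be spelled out using only the figures reported in this article. This is a minimal sketch: the 120C damage point is Igor's "general consensus" figure rather than a Micron specification, and the board-bottom temperature is derived from the roughly 20C delta instead of being reported directly.

```python
# Figures reported by Igor's Lab; none of these are Micron specifications.
MEASURED_TJ_C = 104.0                # hottest GDDR6X chip after 30 min of The Witcher 3 at 4K
CHIP_TO_BOARD_BOTTOM_DELTA_C = 20.0  # approximate chip-to-board-bottom delta
DAMAGE_CONSENSUS_C = 120.0           # "general consensus" damage point, per Igor


def headroom_c(limit_c: float, measured_c: float = MEASURED_TJ_C) -> float:
    """Degrees Celsius left before the given temperature limit is reached."""
    return limit_c - measured_c


# Derived, not measured directly: chip temperature minus the reported delta.
implied_board_bottom_c = MEASURED_TJ_C - CHIP_TO_BOARD_BOTTOM_DELTA_C

for limit in (105.0, 110.0):  # plausible Tjunction limits discussed above
    print(f"limit {limit:.0f}C: {headroom_c(limit):.0f}C of headroom")
print(f"implied board-bottom temperature: ~{implied_board_bottom_c:.0f}C")
print(f"margin to reported damage point: {headroom_c(DAMAGE_CONSENSUS_C):.0f}C")
```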

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

---

gggplaya: I'm definitely going to wait for Big Navi. If it can come close to or outperform the Nvidia RTX 3080, it wins IMO. Why bother with all the extra heat and inefficiency for a little extra performance? It doesn't matter if they made a new cooler to be quiet; your computer room will still heat up like crazy.

JTWrenn:

> gggplaya said: I'm definitely going to wait for Big Navi. If it can come close to or outperform the Nvidia RTX 3080, it wins IMO. Why bother with all the extra heat and inefficiency for a little extra performance? It doesn't matter if they made a new cooler to be quiet; your computer room will still heat up like crazy.

I feel the same way. I feel like Nvidia cranked up clock rates to get their cards' numbers up instead of designing them for this from the start. The perf/watt increases are just abysmal.

spongiemaster:

> JTWrenn said: I feel the same way. I feel like Nvidia cranked up clock rates to get their cards' numbers up instead of designing them for this from the start. The perf/watt increases are just abysmal.

Or it could be that the Samsung 8nm process isn't that great and Nvidia had to try and make lemonade from a lemon.

gggplaya:

> spongiemaster said: Or it could be that the Samsung 8nm process isn't that great and Nvidia had to try and make lemonade from a lemon.

Adored TV made a great video explaining Nvidia's decision on 8nm and why the performance/watt is so bad.

Video: https://www.youtube.com/watch?v=tXb-8feWoOE

Basically, as you know, the power curve is not linear, so pushing higher clock speeds requires exponentially more power once you go past the efficient part of the curve. Nvidia knew 8nm clock speeds were never going to be good; 1,400 MHz base and 1,700 MHz boost is really the best they could expect for a mass-produced card. So instead, since 8nm wafers are cheaper, they decided to make huge dies and rely on a higher transistor count to deliver more performance.
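
The non-linear power curve described in that comment is commonly approximated with the CMOS dynamic-power relation P ≈ C·V²·f: because voltage has to rise to sustain higher clocks, power grows much faster than clock speed. This is a minimal sketch; the 1,400 MHz starting point comes from the comment, but the voltage curve (0.8 V plus 0.4 mV per MHz) is a purely hypothetical assumption, not Ampere or Samsung 8nm data.

```python
BASE_CLOCK_MHZ = 1400.0  # base clock cited in the comment
BASE_VOLTAGE_V = 0.8     # hypothetical voltage at the base clock
VOLTS_PER_MHZ = 0.0004   # hypothetical slope of the voltage/frequency curve


def voltage_at(clock_mhz: float) -> float:
    """Hypothetical V/f curve: voltage rises linearly above the base clock."""
    return BASE_VOLTAGE_V + max(0.0, clock_mhz - BASE_CLOCK_MHZ) * VOLTS_PER_MHZ


def relative_power(clock_mhz: float) -> float:
    """Dynamic power P ~ C * V^2 * f, normalized to the base clock (C cancels out)."""
    base = BASE_VOLTAGE_V ** 2 * BASE_CLOCK_MHZ
    return voltage_at(clock_mhz) ** 2 * clock_mhz / base


for clock in (1400.0, 1700.0, 2000.0):
    print(f"{clock:.0f} MHz: clock x{clock / BASE_CLOCK_MHZ:.2f}, "
          f"power x{relative_power(clock):.2f}")
```

With these made-up numbers, a 21% clock increase costs roughly 61% more power. The figures only illustrate the shape of the argument, not a measurement of any real card.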

cryoburner:

> gggplaya said: I'm definitely going to wait for Big Navi. If it can come close to or outperform the Nvidia RTX 3080, it wins IMO. Why bother with all the extra heat and inefficiency for a little extra performance? It doesn't matter if they made a new cooler to be quiet; your computer room will still heat up like crazy.

I suspect Big Navi's efficiency may be relatively similar, though a lot might depend on how hard they push clock rates. A 5700 XT can draw around 220 watts or so while gaming. If AMD's 1.5x efficiency claims for RDNA2 hold true, then a card with performance similar to a 5700 XT might draw only around 150 watts under load. A 3080 is roughly twice as fast, and AMD is rumored to be doubling core counts for its top-end card, so matching that card's gaming performance might be within reach. However, if that's the case, then doubling the power draw would similarly put us at around 300 watts.
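
The estimate in that comment can be reproduced with a one-line model. This is a minimal sketch that assumes, as the commenter implicitly does, that power scales linearly with performance once the claimed efficiency gain is applied; the function name is hypothetical.

```python
def projected_power_w(baseline_w: float, efficiency_gain: float,
                      perf_multiplier: float) -> float:
    """Estimate board power for a new card.

    baseline_w: measured power of the reference card (e.g. a 5700 XT while gaming)
    efficiency_gain: claimed performance-per-watt improvement (1.5 means +50%)
    perf_multiplier: target performance relative to the reference card
    Assumes power scales linearly with performance at the new efficiency.
    """
    return baseline_w / efficiency_gain * perf_multiplier


same_perf = projected_power_w(220.0, 1.5, 1.0)    # 5700 XT-class performance on RDNA2
double_perf = projected_power_w(220.0, 1.5, 2.0)  # roughly RTX 3080-class performance
print(f"{same_perf:.0f} W, {double_perf:.0f} W")
```

The linear-scaling assumption is the weak point: if AMD reached that performance partly by raising clocks rather than only adding cores, power would grow faster than this model predicts.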

nofanneeded:

> gggplaya said: I'm definitely going to wait for Big Navi. If it can come close to or outperform the Nvidia RTX 3080, it wins IMO. Why bother with all the extra heat and inefficiency for a little extra performance? It doesn't matter if they made a new cooler to be quiet; your computer room will still heat up like crazy.

Big Navi can compete against the RTX 3080 but will need more power, something near 430 watts.

AMD claimed their performance per watt increased 50%...

This means around 15 Tflops will need 215 watts, and 30 Tflops (RTX 3080) will need 430 watts.

I think their card will land between the RTX 3070 and RTX 3080 at around 350 watts and some 25 Tflops.

watzupken:

> nofanneeded said: Big Navi can compete against the RTX 3080 but will need more power, something near 430 watts.
>
> AMD claimed their performance per watt increased 50%...
>
> This means around 15 Tflops will need 215 watts, and 30 Tflops (RTX 3080) will need 430 watts.
>
> I think their card will land between the RTX 3070 and RTX 3080 at around 350 watts and some 25 Tflops.

It is too early to say how much power RDNA2 will need for the top-end card. I am not expecting power consumption to be low for a top-end card, and since Nvidia has started the race with 320/350W cards, I think that will become the norm.

lorfa: This doesn't shed any light on why Nvidia chose the 19.5 Gbps chips, because all Igor's Lab is testing is how they handle the heat when pushed beyond their limits. We'd need thermals on the actual 21 Gbps chips to make a meaningful comparison, and, well, there aren't any at this time.

It could be a simple matter of binning, or there could be more to it; we don't know. Just because Nvidia is Micron's only customer doesn't mean there aren't, for example, yield issues. We don't know that either. All the tests from Igor's Lab reveal is that the chips get too hot very quickly when you push them and there isn't much headroom, but we already knew that from basic tests.

watzupken:

> gggplaya said: Adored TV made a great video explaining Nvidia's decision on 8nm and why the performance/watt is so bad.
>
> Video: https://www.youtube.com/watch?v=tXb-8feWoOE
>
> Basically, as you know, the power curve is not linear, so pushing higher clock speeds requires exponentially more power once you go past the efficient part of the curve. Nvidia knew 8nm clock speeds were never going to be good; 1,400 MHz base and 1,700 MHz boost is really the best they could expect for a mass-produced card. So instead, since 8nm wafers are cheaper, they decided to make huge dies and rely on a higher transistor count to deliver more performance.

While the video said that 8nm is Nvidia's dumbest decision, the fact is that the cards are still selling like hot cakes. Nvidia is well aware of their popularity, and I strongly believe they are aware of the shortcomings of Samsung's 8nm, but they made that decision to cut their own costs. The saving grace here is that Nvidia's GPU architecture still holds a solid lead over AMD's RDNA. I'm not sure what RDNA 2 will bring to the table at this point, so I'm keeping my comparison to just RDNA for now.

cryoburner:

> nofanneeded said: Big Navi can compete against the RTX 3080 but will need more power, something near 430 watts.
>
> AMD claimed their performance per watt increased 50%...
>
> This means around 15 Tflops will need 215 watts, and 30 Tflops (RTX 3080) will need 430 watts.
>
> I think their card will land between the RTX 3070 and RTX 3080 at around 350 watts and some 25 Tflops.

You can't use FP32 compute performance as a good way of estimating gaming performance, as Nvidia made significant changes to the way FP32 works on their cards this generation. The 3080 might be able to reach those Tflops in a pure FP32 compute workload, but not in games. While not exactly the same, think of it a bit like how AMD's Bulldozer CPU architecture shared a floating-point pipeline for every two integer pipelines. While Bulldozer offered competitive performance in integer workloads, its floating-point performance was significantly lower.

Similarly, while the RTX 3080 might be able to get close to 30 Tflops of compute performance in FP32 workloads, in today's games it delivers far less performance than that figure would imply. As a result, the 3080 performs roughly like an 18-19 Tflop Turing card would. Even at 4K, it's not much more than 30% faster than a 13.5 Tflop 2080 Ti, and it is certainly nowhere near 120% faster, as the Tflops might suggest.
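
The gap between the paper speedup and the observed one can be made concrete with the comment's own numbers. This is a minimal sketch; the 30 Tflops, 13.5 Tflops, and 30% figures are the commenter's approximations, and the helper names are hypothetical.

```python
RTX_3080_TFLOPS = 30.0      # approximate peak FP32 figure used in the comment
RTX_2080_TI_TFLOPS = 13.5   # peak FP32 figure used in the comment
MEASURED_4K_SPEEDUP = 0.30  # "not much more than 30% faster" at 4K, per the comment


def implied_speedup(new_tflops: float, old_tflops: float) -> float:
    """Speedup suggested by peak FP32 throughput alone (0.5 means 50% faster)."""
    return new_tflops / old_tflops - 1.0


def turing_equivalent_tflops(measured_speedup: float, turing_tflops: float) -> float:
    """Tflops a Turing card would need to match the measured gaming speedup."""
    return turing_tflops * (1.0 + measured_speedup)


paper = implied_speedup(RTX_3080_TFLOPS, RTX_2080_TI_TFLOPS)
equivalent = turing_equivalent_tflops(MEASURED_4K_SPEEDUP, RTX_2080_TI_TFLOPS)
print(f"paper speedup: {paper:.0%}")
print(f"Turing-equivalent throughput: {equivalent:.2f} Tflops")
```

A flat 30% lead corresponds to about 17.6 Tflops of Turing-class throughput; a somewhat larger lead moves that toward the 18-19 Tflops the comment cites, still far below the roughly 122% that peak FP32 alone would suggest.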

Or, to look at it another way, the 3080's real-world gaming performance is about twice that of a 5700 XT. So if AMD doubles its core counts as rumored, and its performance per watt actually increases by around 50%, then it has the potential to match the 3080's performance while drawing around 300 watts. Of course, we have no idea how RDNA2's ray tracing will perform yet, so that part is still a big unknown.