The 30 Year History of AMD Graphics, In Pictures

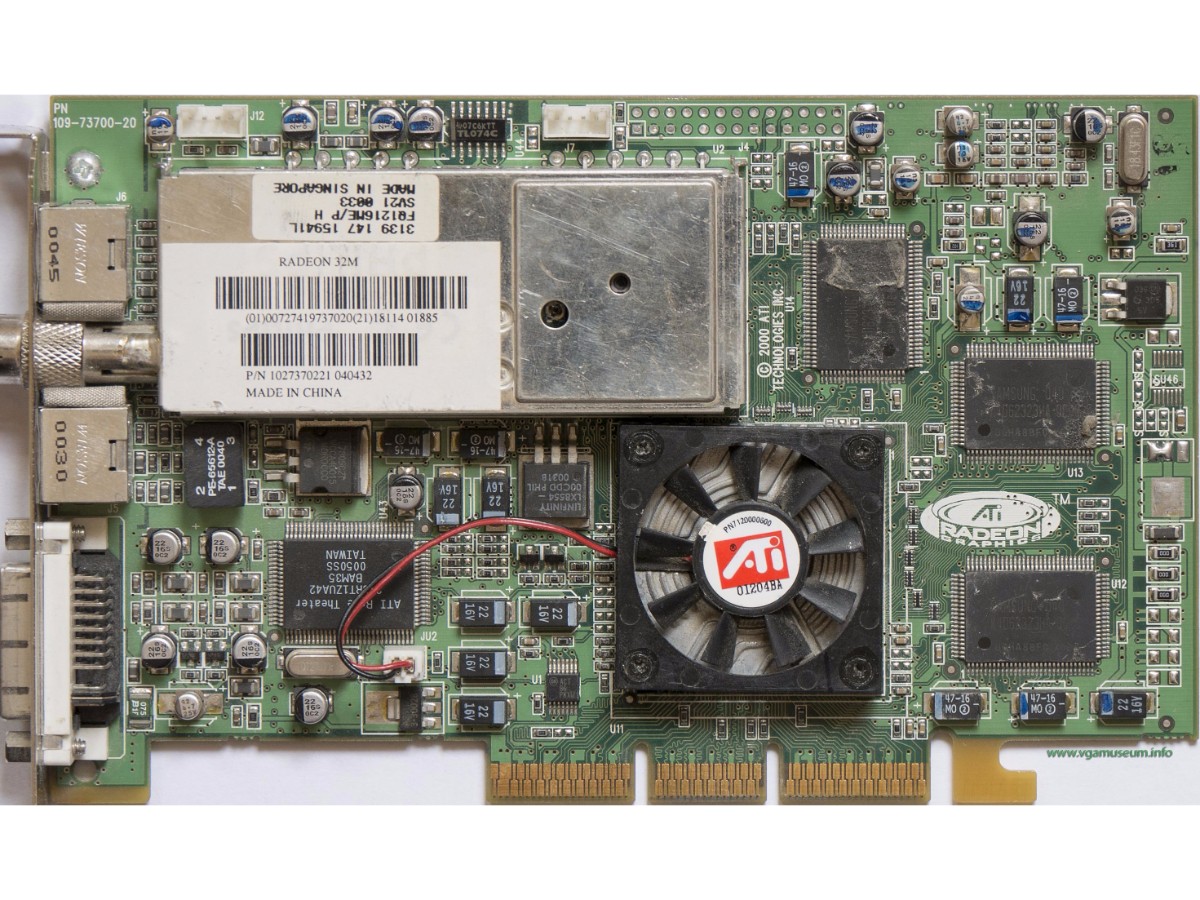

ATI All-In-Wonder Radeon 7500 (2001)

In 2001, ATI transitioned to 150 nm manufacturing. The first GPU to benefit from the new node was RV200, used in the All-In-Wonder Radeon 7500 (along with the Radeon 7500 and 7500 LE). Architecturally, RV200 was identical to R100, but the finer process allowed ATI to push core frequency much higher.

The All-in-Wonder board incorporated ATI's Rage Theater chip, along with a TV tuner on-board, giving it serious multimedia chops that Nvidia was never able to counter effectively.

Image Credit: VGAMuseum.info

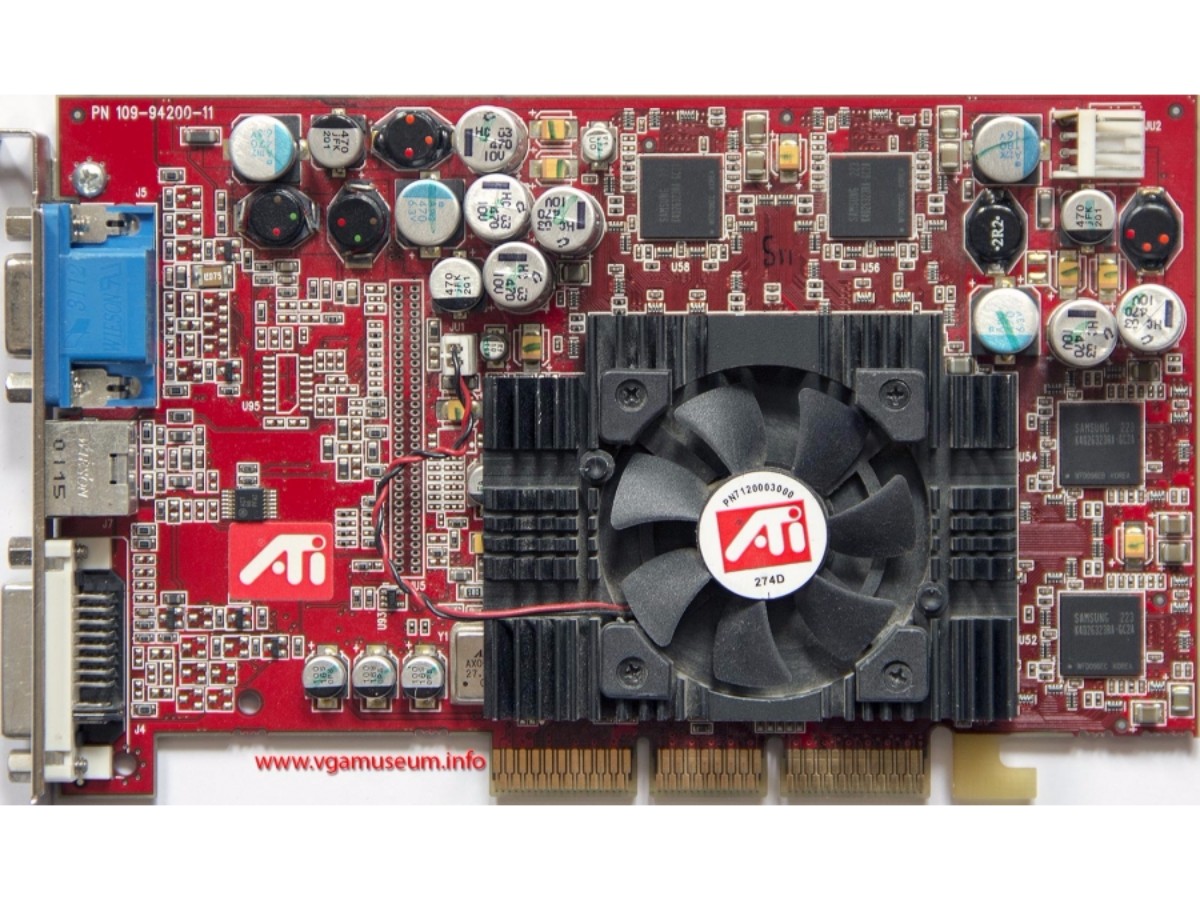

ATI All-In-Wonder Radeon 8500 (2001)

The All-in-Wonder Radeon 8500 used ATI's R200 GPU with four pixel pipelines and two TMUs per pipe, along with a pair of vertex shaders. Through its implementation, ATI supported Microsoft's Pixel Shader 1.4 spec. The company also rolled out HyperZ II with R200, improving the technology's efficiency.

A core clock rate of 260 MHz and 128 MB of 550 MHz DDR memory put the All-in-Wonder 8500 close to the performance of ATI's standard Radeon 8500, which was fast enough to compete against Nvidia's GeForce 3.

ATI Radeon 9700 Pro (2002)

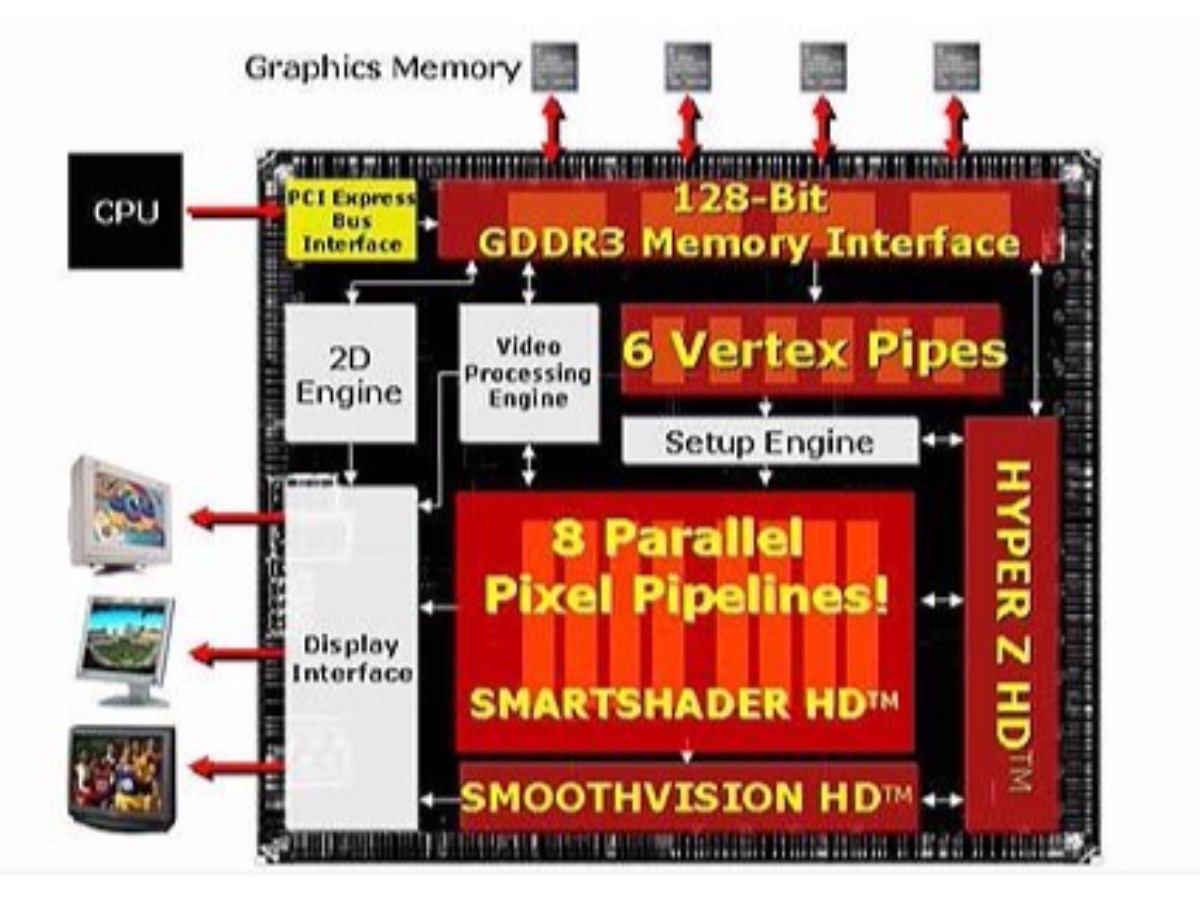

For the Radeon 9700 Pro, ATI used a completely new architecture. Its R300 GPU employed eight pixel pipelines with one texture unit each, along with four vertex shaders, dramatically increasing geometry processing and textured fillrate. The big 110 million-transistor chip was manufactured using a 150 nm process, just like R200, but it enjoyed higher clock rates thanks to flip-chip packaging.

The design also implemented the Pixel Shader 2.0 specification, and was the first GPU to support Microsoft's DirectX 9 API. To keep the eight-pipe design fed, ATI connected it to 128 MB of DDR memory on a 256-bit interface, yielding just under 20 GB/s of throughput. Third-generation HyperZ helped maximize what ATI could get from the memory subsystem, and derivative cards were able to get away with half as much RAM on a 128-bit bus.
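As a rough check on that bandwidth figure, peak throughput is the bus width in bytes multiplied by the effective transfer rate. The sketch below assumes a 620 MT/s effective data rate (310 MHz DDR), a number the article does not state; the function name and constants are illustrative only.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, effective_rate_mt_s: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * effective_rate_mt_s * 1e6 / 1e9


# ASSUMPTION: 620 MT/s effective (310 MHz DDR); not given in the article.
ASSUMED_RATE_MT_S = 620.0

full_bus = peak_bandwidth_gb_s(256, ASSUMED_RATE_MT_S)  # 256-bit flagship bus
half_bus = peak_bandwidth_gb_s(128, ASSUMED_RATE_MT_S)  # 128-bit derivative bus

print(f"256-bit bus: {full_bus:.2f} GB/s")
print(f"128-bit bus: {half_bus:.2f} GB/s")
```

Under that assumed data rate, the 256-bit result lands just under 20 GB/s, and halving the bus width halves the theoretical peak.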

With the Radeon 9700 Pro clocked at 325 MHz, ATI beat Nvidia's flagship GeForce4 Ti 4600 to claim the performance crown. ATI later released a slightly slower version called the Radeon 9700, which was just a lower-clocked Radeon 9700 Pro.
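To put the pipeline counts in perspective, theoretical fill rate is roughly the number of pixel pipelines times the core clock, with texel rate scaled by the texture units per pipe. This sketch uses only the figures quoted above for the All-in-Wonder Radeon 8500 and the Radeon 9700 Pro; the helper name is hypothetical, and real-world throughput depends on far more than these peaks.

```python
def fill_rates(pipelines: int, tmus_per_pipe: int, core_mhz: float) -> tuple[float, float]:
    """Return (pixel fill rate in Gpixels/s, texel fill rate in Gtexels/s)."""
    pixel_rate = pipelines * core_mhz / 1000.0
    texel_rate = pixel_rate * tmus_per_pipe
    return pixel_rate, texel_rate


# Figures as quoted in the article text.
r200_pixel, r200_texel = fill_rates(pipelines=4, tmus_per_pipe=2, core_mhz=260)  # AIW Radeon 8500
r300_pixel, r300_texel = fill_rates(pipelines=8, tmus_per_pipe=1, core_mhz=325)  # Radeon 9700 Pro

print(f"R200: {r200_pixel:.2f} Gpixels/s, {r200_texel:.2f} Gtexels/s")
print(f"R300: {r300_pixel:.2f} Gpixels/s, {r300_texel:.2f} Gtexels/s")
```

On these numbers the R300's theoretical pixel rate is 2.5 times that of the R200, while the texel-rate gain is smaller because R200 carried two texture units per pipe.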

Image Credit: VGAMuseum.info

ATI Radeon 9800 Pro (2003)

In an attempt to combat the Radeon 9700 Pro, Nvidia launched its GeForce FX 5800 Ultra. This narrowed the performance gap, but was not enough to overtake the Radeon 9700 Pro. To cement its position, ATI introduced a subtle update called the Radeon 9800 Pro that utilized an R350 GPU at higher clock rates. ATI later followed up with a model sporting 256 MB of memory.

The Radeon 9800 Pro extended ATI's performance lead, but would later fall behind when Nvidia released the GeForce FX 5900. Although the Radeon 9800 Pro subsequently gave up its crown, it was still less expensive, more compact, and loaded with other advantages that kept it desirable.

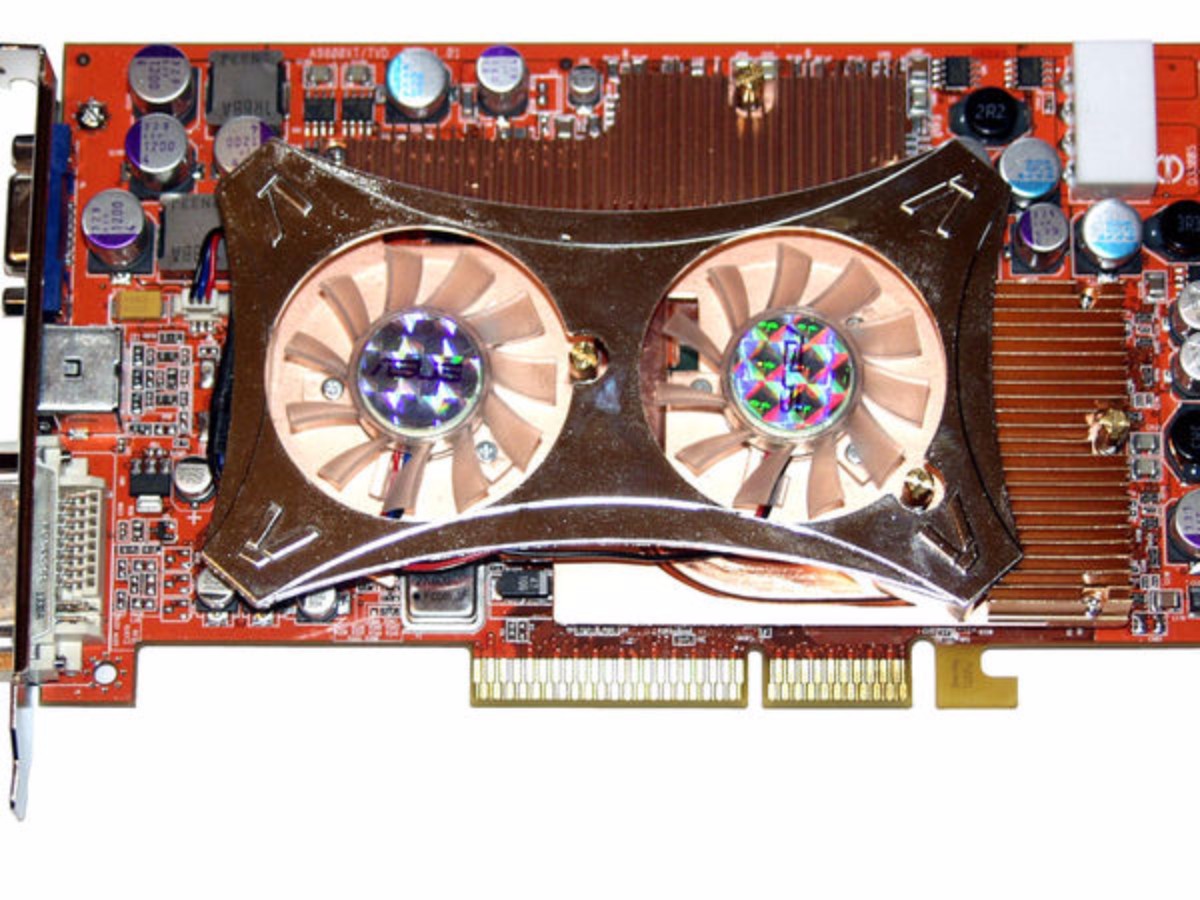

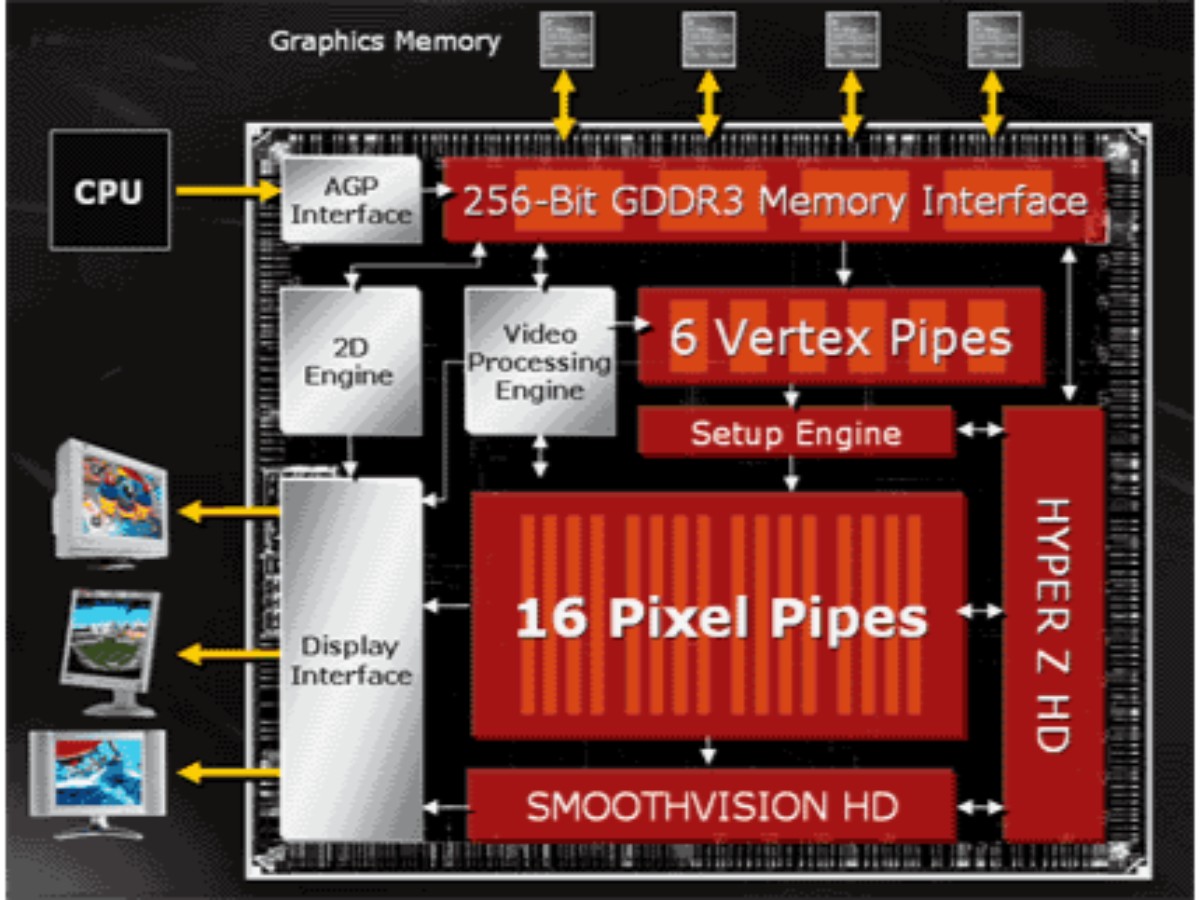

ATI Radeon X800 XT (2004)

The rivalry between ATI and Nvidia continued as Nvidia launched its GeForce 6800 GPU and reclaimed its technology and performance lead in the graphics card market. ATI fired back with its X800 XT. The card's R420 GPU had 16 pixel pipelines organized into groups of four, along with 16 TMUs, 16 ROPs, and six vertex shaders. Compatibility was limited to Shader Model 2.0b, however, at a time when Nvidia's NV40 had SM 3.0 support. R420 connected to 256 MB of GDDR3 over a 256-bit bus and introduced a new texture compression technique called 3Dc, designed for normal maps, which helped make more efficient use of available bandwidth.

Competition between the X800 XT and the GeForce 6800 Ultra was fierce, but in the end, Nvidia held on to its performance crown.

It is also worth noting that the X800 series was the first generation of graphics cards from ATI to feature CrossFire support.

ATI Radeon X700 XT (2004)

Not long after its X800 XT launch, ATI introduced the Radeon X700 XT powered by its RV410 GPU. The RV410 was essentially half of an R420, with eight pixel pipelines, eight TMUs, eight ROPs, and a 128-bit memory bus. RV410 had the same number of vertex shaders as R420, though, and was manufactured using a 110 nm process. Clocked similarly to the X800 XT, the X700 XT was competitive against other mid-range graphics cards like Nvidia's GeForce 6600.

ATI Radeon X850 XT PE (2004)

Late in 2004, ATI launched a new flagship called the Radeon X850 XT PE. This card used an R480 core built with 130 nm transistors. It was essentially a refined version of the R420 rather than a new design, but operated at somewhat higher clock rates. This resulted in a modest performance boost, and the X850 XT PE was more competitive against Nvidia's GeForce 6800 Ultra.

ATI Radeon X1800 XT (2005)

ATI's next graphics core, R520, powered the original X1000-series flagship. The new design allowed ATI to support the Shader Model 3.0 specification, and the company also shifted to TSMC's 90 nm manufacturing process.

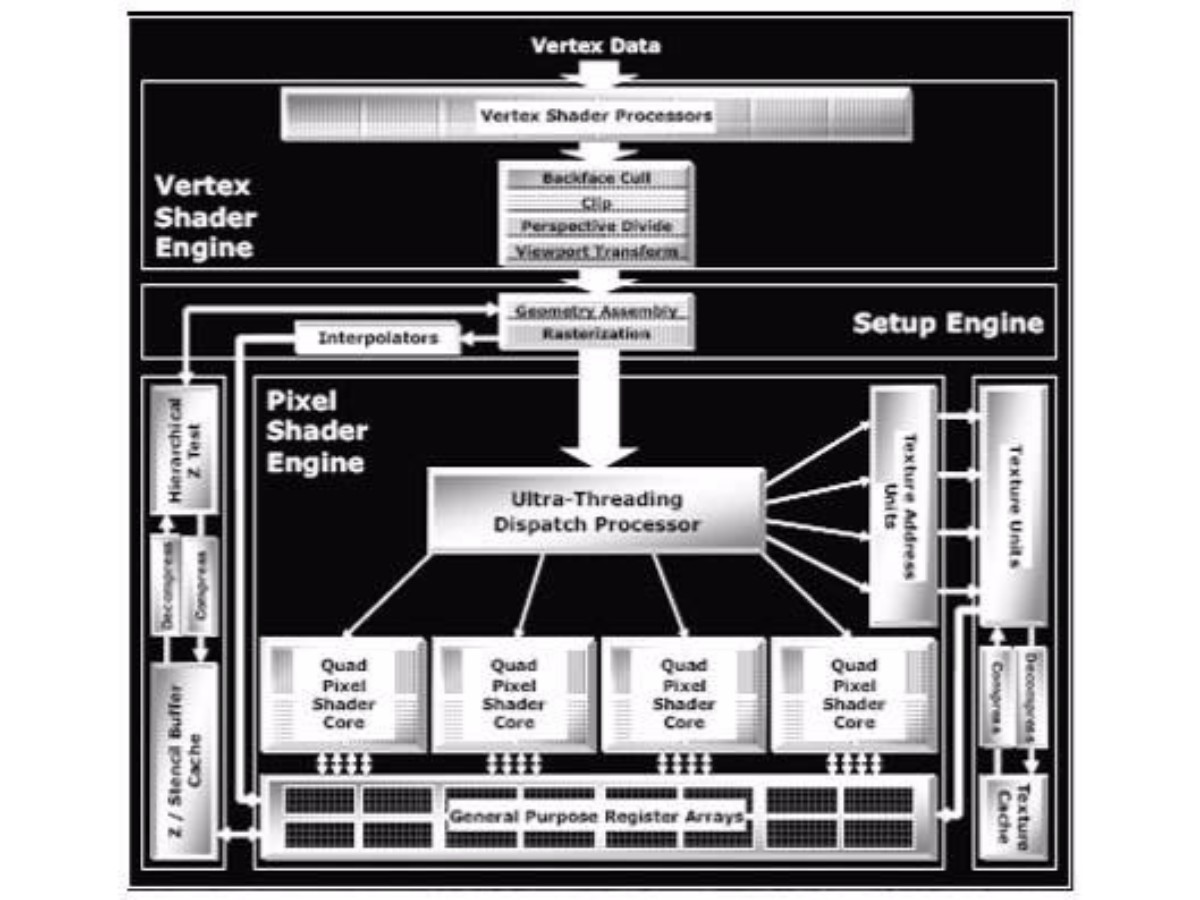

The R520 was designed around an Ultra-Threading Dispatch processor, which broke shader data down into as many as 512 parallel threads that were then executed by a graphics quad pixel shader core. As the name suggested, each quad was made up of four pixel pipelines.

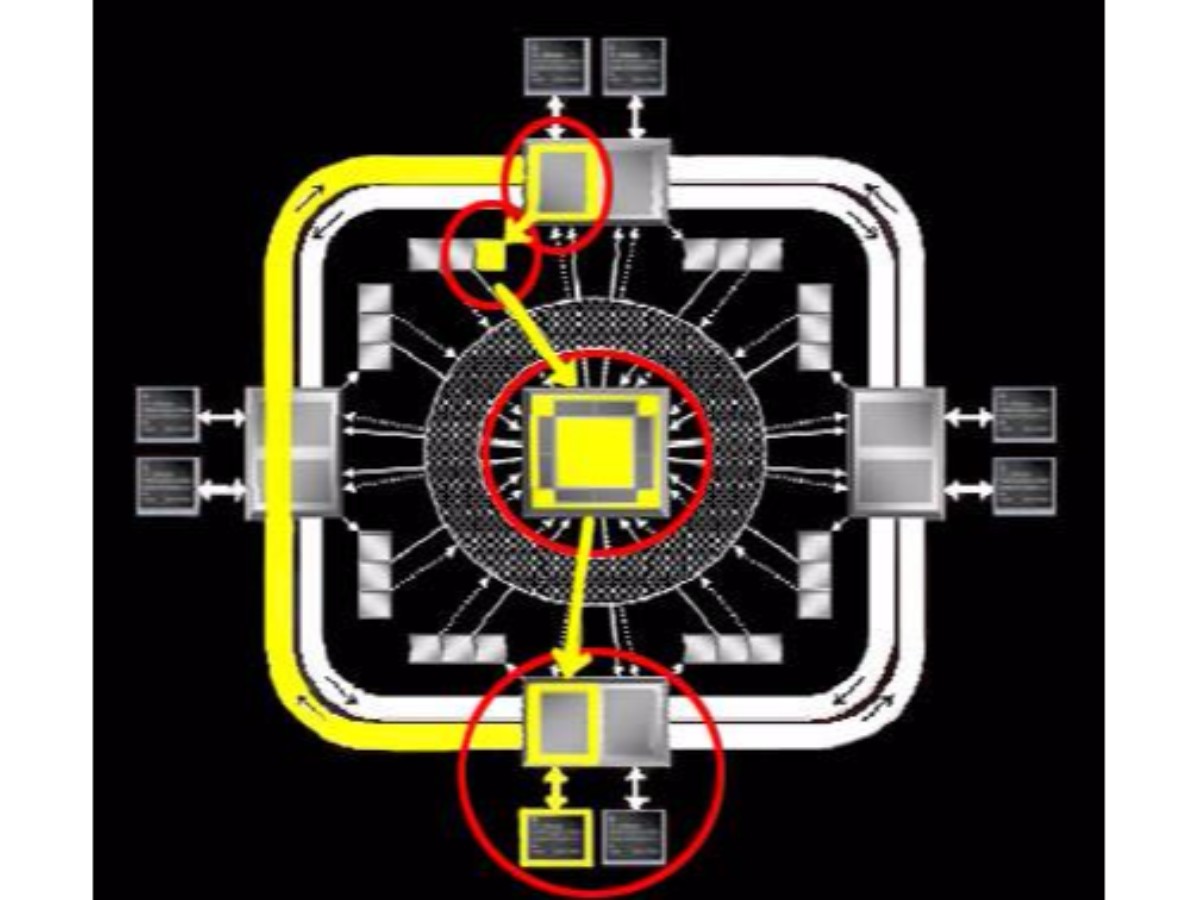

The original X1000-series flagship was the Radeon X1800 XT. It came armed with four quads (16 pixel pipelines), eight vertex shaders, 16 TMUs, and 16 ROPs. The core used a unique memory interface that consisted of two 128-bit buses operating in a ring. Data moved on and off the ring-bus at four different points. This effectively increased memory latency, but reduced memory congestion due to the unique way the dispatch processor handled workloads.

The Radeon X1800 XT shipped with either 256 or 512 MB of GDDR3. Clocked at 625 MHz, the Radeon X1800 XT was highly competitive against Nvidia's GeForce 7800 GTX.

ATI Radeon X1900 XTX (2006)

The following year, ATI launched the R580 GPU, which powered its Radeon X1900 XTX. The key difference between the Radeon X1800 XT and Radeon X1900 XTX was that the Radeon X1900 XTX had three times as many pixel pipelines (48) and quads (12). The rest of the core's resources were unchanged. The results were mixed: in some cases the X1900 XTX could be nearly three times as fast as the X1800 XT, while in other games it performed nearly identically to the older card.

ATI Radeon X1950 XTX (2006)

ATI later shifted the Radeon X1900 XTX design to an 80 nm process. This resulted in the R580+ core inside of its Radeon X1950 XTX. The core was otherwise unchanged, but managed to achieve higher clock rates. Further, ATI paired it with GDDR4 memory. The combined effect enabled higher performance.

The X1950 XTX was intended to hold the line while ATI finished up with the Radeon HD 2000 series, and it was relatively short-lived.

-

adamovera Archived comments are found here: http://www.tomshardware.com/forum/id-3207492/year-history-amd-graphics-pictures.html

-

XaveT I still have a 5870 in production. Great card. And six miniDP ports on one card is great for the workstation it's in. I'm sad to see no current flagships with the same configuration, I may have to do two graphics cards or get an unneeded professional series card at upgrade time.

-

Nuckles_56 This sentence doesn't make a lot of sense

"All-in-Wonder 8500 close to the performance of AMD's Radeon 8500, which was enough to compete against Nvidia's GeForce 3."

-

cub_fanatic I have a couple of those 8500 all-in-wonder cards with the remote, receiver for the remote, the giant dongle, the software and all. A friend had them sitting in his garage and was going to chuck them so I took them home. I believe they were used in a doctor's office or something so that they could have a single display in the room and switch to TV while the patient waited and then back to a desktop to show them x-rays or whatever. I have one hooked up to a Pentium 4 Dell running XP but can't figure out much of a use for it in 2017. Even the tuner is almost useless unless you want to capture a video off of a VCR since they can only handle analog.

-

Martell1977 My first ATI discreet GPU was a Radeon 9250, and it was pretty good at the time considering the resolutions of the CRT monitors. My second was a Radeon 3850 AGP, while my brother went with a nVidia card. I sold the 3850 with the other parts, working, on eBay mid last year....my brothers nVidia card barely outlasted the warranty.

Next came my 6870, which I got in 2010 and tried crossfire in 2015, but ended up upgrading to a R9 390 as the 6870's ran into memory issues.

I know a lot of people complain about AMD/ATI drivers, but I've had more issues with nVidia driver (especially recently) than any AMD. Both my laptops have been nVidia GPU's (8600M GT and 1060 3gb).

Awaiting to see what Vega brings, I'm surprised it didn't at least get a tease at the end of the article, but hey, maybe this is a lead up to a Vega release soon?

-

I look forward to the next 30 years.

P.S. Maybe eventually heterogeneous compute will work. I doubt it. In any case, they seem back in formation, and charging forward.

-

blackmagnum I hate reading this page format on IOS; lots of problems and hangs with page scrolling.

-

CRO5513Y You can definitely see the change in Aesthetics, what ever happened to all the fancy details and characters on the cards? :P

(I mean to be fair not much room to fit them on cards these days since most have non-blower style coolers).

-

LaminarFlow Hard to believe that I've been an ATI user for almost 15 years now. Always had a somewhat limited budget, I picked up a 9600XT in 2003, then X1900 XT in 2006, HD 4850 in 2008 (for $125, that was the best computer part purchased I ever made), and R9 280X in 2013.