The 30 Year History of AMD Graphics, In Pictures

Introduction

8/19/2017 Update: added an entry for AMD Radeon Vega RX on the last slide.

ATI entered the graphics card market in 1986, and it continued to operate independently until 2006. Even after its acquisition by AMD, products were sold under the ATI brand for several more years.

In the face of many ups and downs, ATI/AMD is clearly determined to make its mark on graphics. Over the last three decades, most of the competition rose and fell. And over the next several pages, we'll look at the best boards ATI (and, later, AMD) created to keep it in a very unforgiving game.

We're primarily focusing on flagship GPUs. We touch on some lower-end models, but there are dozens of other notable GPUs we don't cover. There's not much talk of dual-GPU solutions, since the purpose of our story is an examination of ATI/AMD's architectural march forward.

MORE: Best Graphics Cards

MORE: Desktop GPU Performance Hierarchy Table

MORE: The History Of Nvidia GPUs

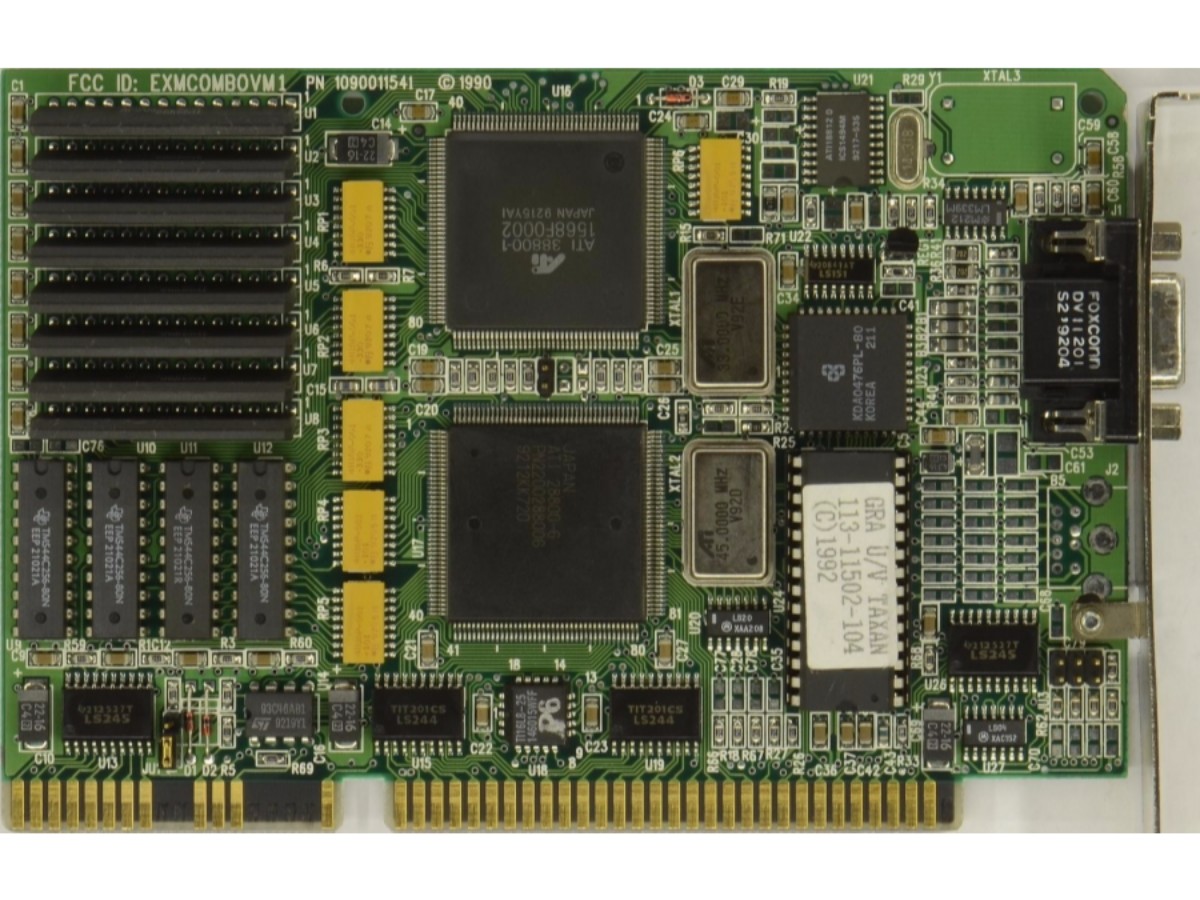

ATI Wonder (1986)

ATI produced several models in its Wonder family between 1986 and the early 1990s. All of them were extremely simple, designed to handle text and rudimentary 2D images.

One notable entry in the ATI Wonder line was the VGA Stereo-F/X, which combined a 2D graphics accelerator and a Creative Sound Blaster audio processor on a single add-on card.


ATI Mach 8 (1990)

ATI continued to improve its display technology, leading to the Mach series of 2D graphics accelerators. The first implementation was the Mach 8, which introduced more advanced 2D features.

Image Credit: VGAMuseum.info

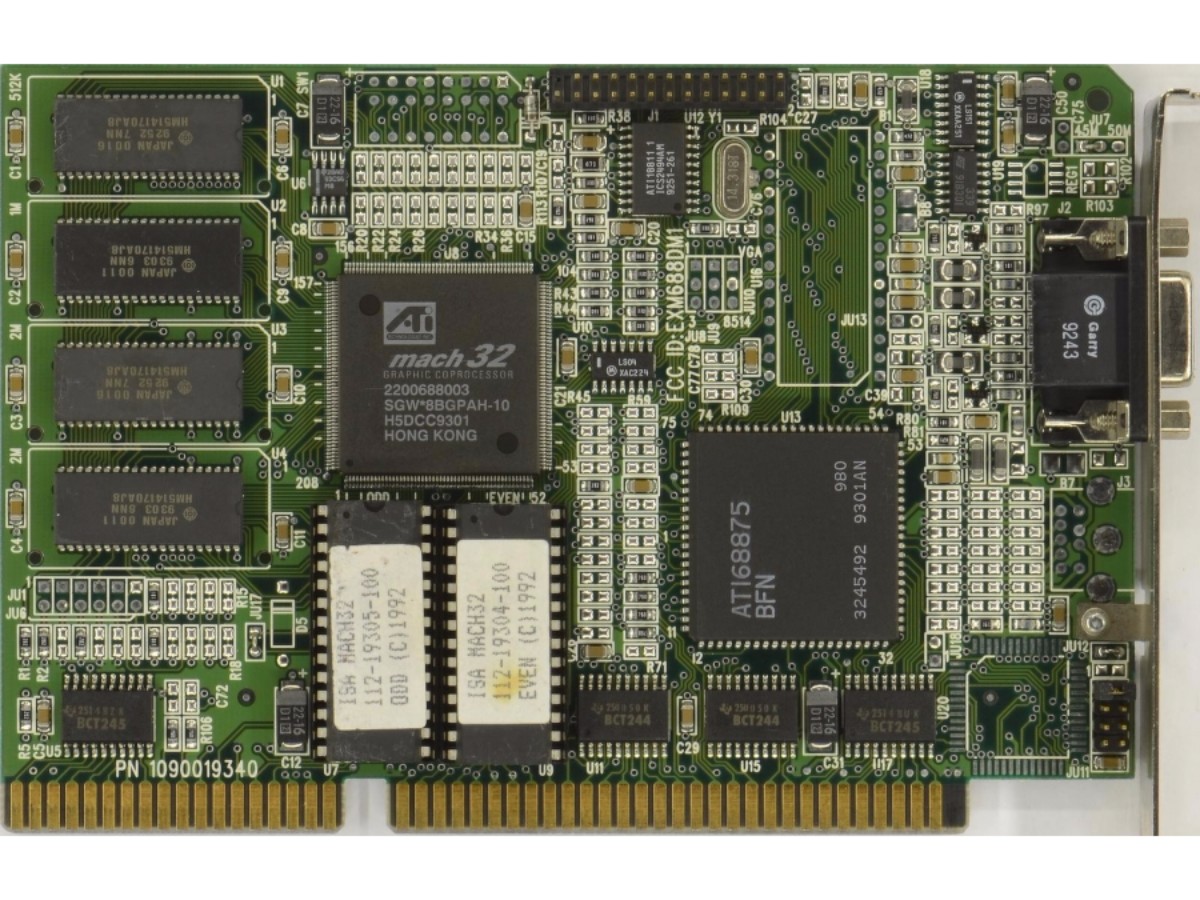

ATI Mach 32 (1992)

ATI later incorporated the functions of both its Wonder and Mach product lines into a single card, which became known as the Mach 32.

Image Credit: VGAMuseum.info

ATI Mach 64 (1994)

The Mach 32 was succeeded by the Mach 64, which accelerated 2D graphics like the rest of the family. Later, ATI added 3D graphics processing capabilities. This marked ATI's first entry into the 3D gaming market, and the end of the Mach line-up.

ATI 3D Rage (1995)

ATI sold its first 2D/3D graphics accelerators under two brand names. We already mentioned the Mach 64. Now we need to discuss its successor: 3D Rage. The first 3D Rage-based cards were identical in every way to the 3D-capable Mach 64s. They even used a Mach 64 2D graphics core.

A later revision of the original 3D Rage, named "3D Rage II," improved 3D performance significantly while also adding new multimedia and CAD features. It was used on several motherboards as an integrated solution. The 3D Rage II core was typically clocked at 60 MHz, and graphics cards shipped with 4 to 8MB of on-board memory.

Image Credit: VGAMuseum.info

ATI 3D Rage Pro (1997)

The 3D Rage Pro incorporated several improvements over the 3D Rage II. For instance, it was designed to work with Intel's Accelerated Graphics Port. ATI also added support for several new features like fog and transparent images, specular lighting, and DVD playback. It also upgraded the triangle setup engine and made numerous tweaks to the core to boost performance. The 3D Rage Pro operated at 75 MHz, or 15 MHz higher than the 3D Rage II. Maximum memory jumped to 16MB of SGRAM. But while performance did increase compared to ATI's own 3D Rage II, the 3D Rage Pro failed to distinguish itself against Nvidia's Riva 128 and 3dfx's Voodoo.

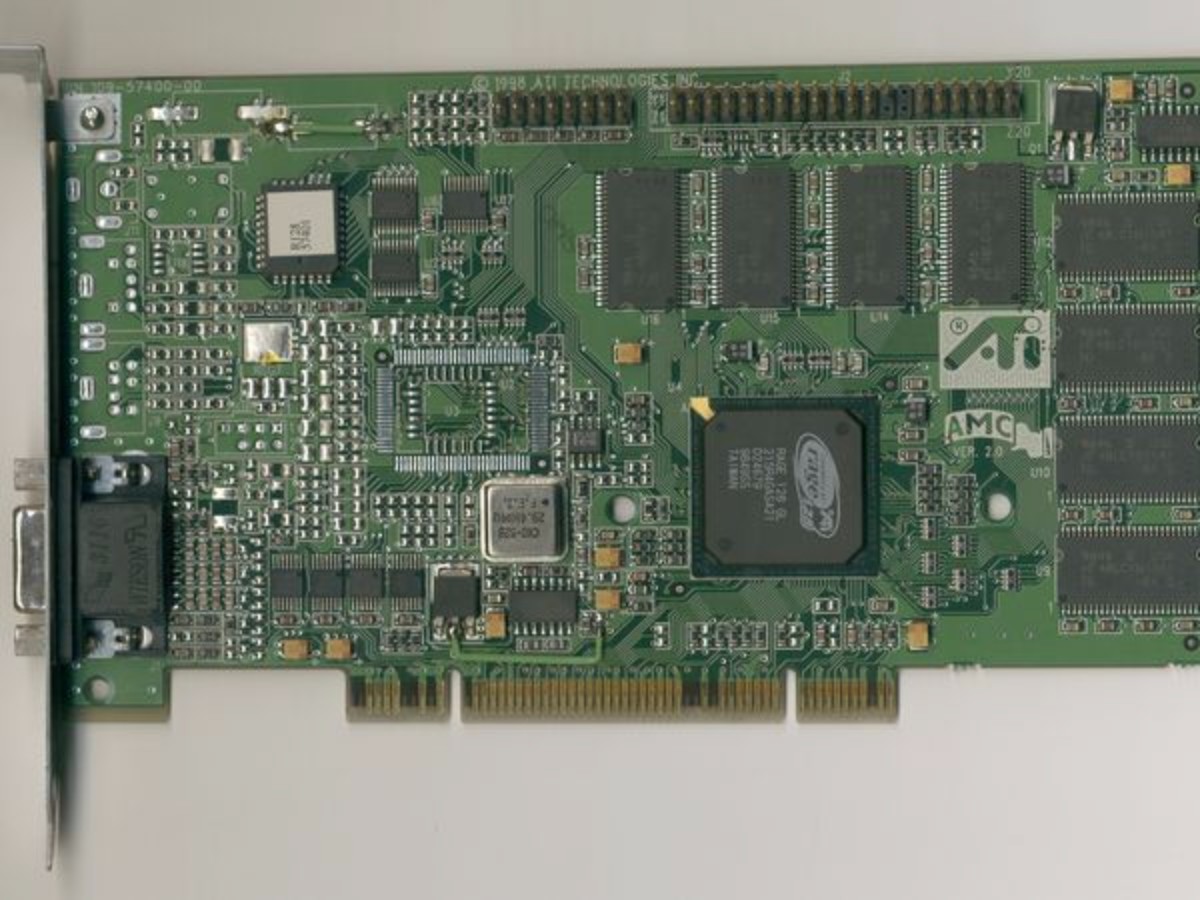

ATI Rage 128 (1998)

ATI's next project was much more ambitious. The company incorporated support for 32-bit color and a second pixel pipeline, which allowed the Rage 128 to output two pixels per clock instead of just one. It fed the architecture with a 128-bit memory interface, too. As a means to further improve performance, the Rage 128 leveraged what ATI called its Twin Cache Architecture consisting of an 8KB pixel cache and an 8KB buffer for already-textured pixels.

The Rage Fury, ATI's Rage 128-based card, was highly competitive for its time. It wasn't quite as fast as the Voodoo3, which beat it in 16-bit mode, but the Rage 128 chip outperformed Matrox's G400 and Nvidia's Riva TNT when 32-bit color was used (the Voodoo3 didn't support 32-bit color at all).

ATI Rage 128 Pro (1999)

The Rage 128 Pro supported DirectX 6.0 and the AGP 4x interface. ATI also updated aspects of the triangle setup engine, increasing theoretical geometry throughput to eight million triangles/second. These enhancements landed the Rage Fury Pro around the same performance as 3dfx's Voodoo3 2000 and Nvidia's Riva TNT2.

ATI complemented the multimedia features of its Rage 128 Pro with an on-board chip called the Rage Theater. This allowed the Rage Fury Pro to output video through composite and S-video connectors.

Image Credit: VGAMuseum.info

ATI Rage Fury MAXX (1999)

Unable to beat the competition with its Rage 128 Pro, ATI took a page out of 3dfx's book and created a single graphics card with two Rage 128 Pro processors. That card came to be known as the Rage Fury MAXX, and it employed Alternate Frame Rendering technology. Using AFR, each graphics chip rendered all of the odd or all of the even frames, which were then displayed one after the other. This differed from 3dfx's SLI (Scan-Line Interleave) technology, which split each frame into even and odd scan lines. AFR is still in use today.
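To make the difference between the two schemes concrete, here is a toy model, assuming two chips and a tiny frame only four scan lines tall. The function names `afr_schedule` and `sli_schedule` are hypothetical; this only illustrates how the work is divided and is not ATI's or 3dfx's actual driver logic.

```python
# Toy model of how two graphics chips split rendering work.
# Each schedule entry is (frame index, chip index, scan lines that chip renders).

def afr_schedule(num_frames, frame_height, num_chips=2):
    # Alternate Frame Rendering: chips take turns rendering complete frames,
    # so with two chips one handles the even frames and the other the odd ones.
    return [(frame, frame % num_chips, list(range(frame_height)))
            for frame in range(num_frames)]

def sli_schedule(num_frames, frame_height, num_chips=2):
    # Scan-Line Interleave (3dfx-style): every frame is shared, and the chips
    # alternate rows within it (even lines on one chip, odd lines on the other).
    return [(frame, chip, list(range(chip, frame_height, num_chips)))
            for frame in range(num_frames)
            for chip in range(num_chips)]

if __name__ == "__main__":
    print(afr_schedule(2, 4))  # [(0, 0, [0, 1, 2, 3]), (1, 1, [0, 1, 2, 3])]
    print(sli_schedule(2, 4))  # [(0, 0, [0, 2]), (0, 1, [1, 3]), (1, 0, [0, 2]), (1, 1, [1, 3])]
```

With AFR, each chip renders complete frames that are shown in sequence; with scan-line interleaving, both chips contribute rows to every frame.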

Although the Rage Fury MAXX outperformed most of its competition (except Nvidia's GeForce 256 DDR), it was doomed to a short life. Microsoft's Windows 2000 and Windows XP operating systems did not support two GPUs on the AGP bus, so under those operating systems the card used only one of its chips, behaving like a single Rage Fury Pro. The MAXX was best paired with Windows 98 and ME.


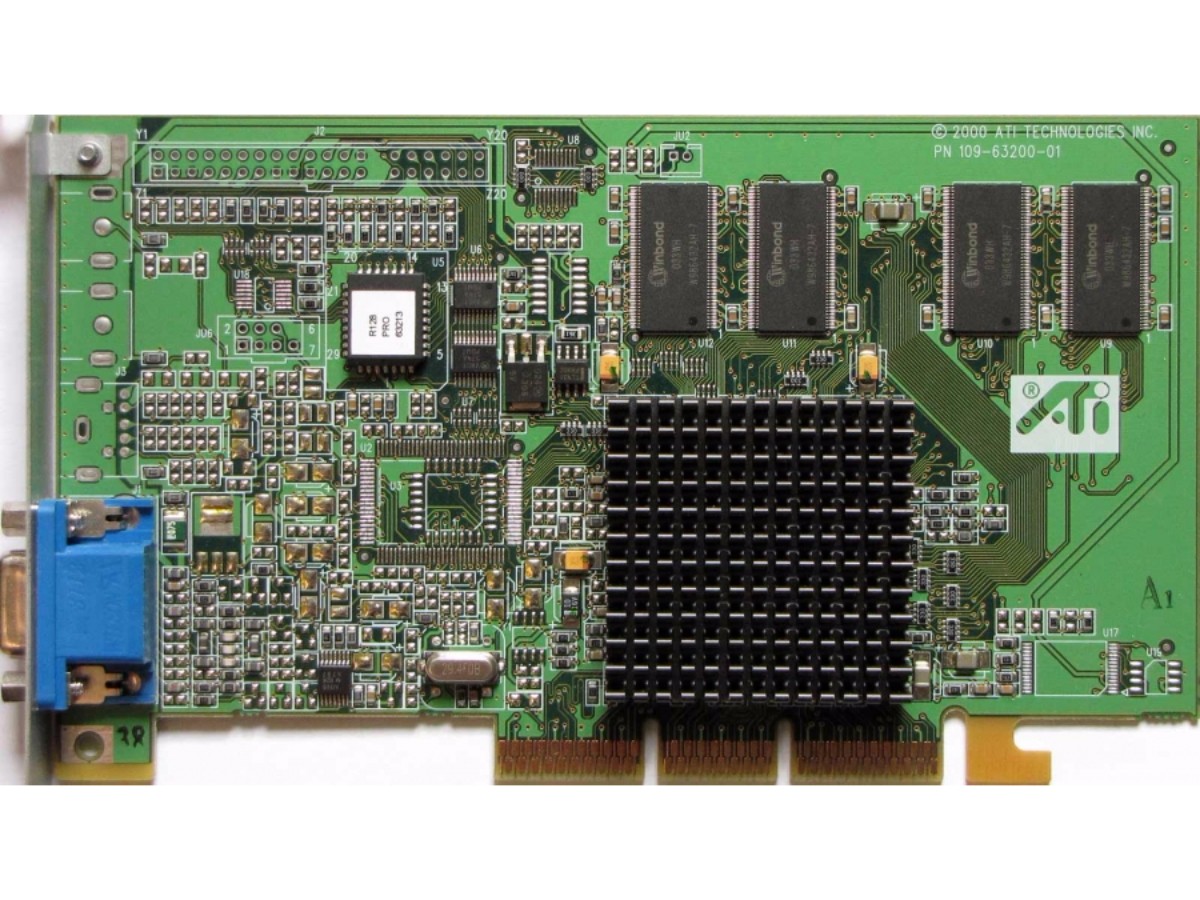

ATI Radeon DDR (2000)

In 2000, ATI switched to the Radeon branding still in use today. The first member of that family was simply called the Radeon DDR, and it was based on the R100 GPU. The R100 was an evolution of the Rage 128 Pro, but it featured a hardware Transform and Lighting (T&L) engine. It also had two pixel pipelines, each with three TMUs. ATI added a feature called HyperZ consisting of three technologies: Z compression, Fast Z clear, and a Hierarchical Z-buffer, which combined to conserve memory bandwidth and improve rendering efficiency.

The Radeon DDR also marked ATI's transition to a new 180 nm fabrication process, which helped the company push clock rates as high as 183 MHz on the 64 MB version (a 32 MB card was also available with a 166 MHz GPU).

Image Credit: VGAMuseum.info
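As a rough illustration of what the Radeon DDR's clock rates mean, theoretical peak pixel fill rate can be estimated as pipelines × pixels per pipeline per clock × clock rate. The sketch below assumes each of the R100's two pixel pipelines outputs one pixel per clock (in line with the Rage 128's two pipelines producing two pixels per clock) and ignores memory bandwidth limits; `pixel_fill_rate_mpix` is a hypothetical helper.

```python
def pixel_fill_rate_mpix(pipelines, clock_mhz, pixels_per_pipe_per_clock=1):
    # Theoretical peak pixel fill rate in megapixels per second.
    # Assumes every pipeline outputs its pixels on every clock with no stalls.
    return pipelines * pixels_per_pipe_per_clock * clock_mhz

if __name__ == "__main__":
    print(pixel_fill_rate_mpix(2, 183))  # 366 (64 MB Radeon DDR, 183 MHz)
    print(pixel_fill_rate_mpix(2, 166))  # 332 (32 MB Radeon DDR, 166 MHz)
```

These are theoretical peaks; delivered throughput in games depends on memory bandwidth, which is exactly what HyperZ was designed to conserve.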


adamovera Archived comments are found here: http://www.tomshardware.com/forum/id-3207492/year-history-amd-graphics-pictures.html

XaveT I still have a 5870 in production. Great card. And having six miniDP ports on one card is great for the workstation it's in. I'm sad to see no current flagships with the same configuration; I may have to run two graphics cards or get an unneeded professional series card at upgrade time.

Nuckles_56 This sentence doesn't make a lot of sense:

"All-in-Wonder 8500 close to the performance of AMD's Radeon 8500, which was enough to compete against Nvidia's GeForce 3."

cub_fanatic I have a couple of those 8500 all-in-wonder cards with the remote, receiver for the remote, the giant dongle, the software and all. A friend had them sitting in his garage and was going to chuck them so I took them home. I believe they were used in a doctor's office or something so that they could have a single display in the room and switch to TV while the patient waited and then back to a desktop to show them x-rays or whatever. I have one hooked up to a Pentium 4 Dell running XP but can't figure out much of a use for it in 2017. Even the tuner is almost useless unless you want to capture a video off of a VCR since they can only handle analog.

Martell1977 My first ATI discrete GPU was a Radeon 9250, and it was pretty good at the time considering the resolutions of the CRT monitors. My second was a Radeon 3850 AGP, while my brother went with an nVidia card. I sold the 3850 with the other parts, working, on eBay mid last year... my brother's nVidia card barely outlasted the warranty.

Next came my 6870, which I got in 2010 and tried in CrossFire in 2015, but I ended up upgrading to an R9 390 as the 6870s ran into memory issues.

I know a lot of people complain about AMD/ATI drivers, but I've had more issues with nVidia drivers (especially recently) than any AMD ones. Both my laptops have had nVidia GPUs (8600M GT and 1060 3GB).

Waiting to see what Vega brings. I'm surprised it didn't at least get a tease at the end of the article, but hey, maybe this is a lead-up to a Vega release soon?

I look forward to the next 30 years.

P.S. Maybe eventually heterogeneous compute will work. I doubt it. In any case, they seem back in formation, and charging forward.

blackmagnum I hate reading this page format on iOS; lots of problems and hangs with page scrolling.

CRO5513Y You can definitely see the change in aesthetics. Whatever happened to all the fancy details and characters on the cards? :P

(I mean, to be fair, there's not much room to fit them on cards these days since most have non-blower style coolers.)

LaminarFlow Hard to believe that I've been an ATI user for almost 15 years now. I've always had a somewhat limited budget: I picked up a 9600XT in 2003, then an X1900 XT in 2006, an HD 4850 in 2008 (for $125, that was the best computer part purchase I ever made), and an R9 280X in 2013.