AMD Radeon VII 16GB Review: A Surprise Attack on GeForce RTX 2080

AMD is first to market with a 7nm gaming GPU. The company complements its Vega 20 processor with 16GB of HBM2 on a 4,096-bit bus, packing it all into a 300W Radeon VII graphics card. Should those numbers impress you? Yeah, actually, they should.


Power Consumption

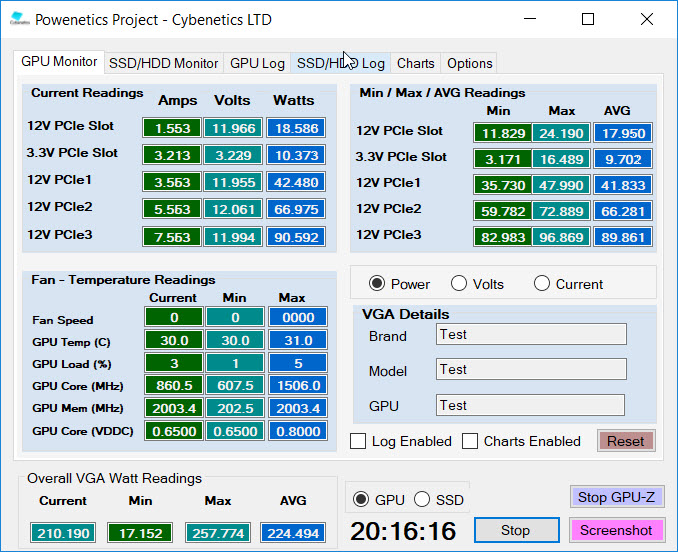

Slowly but surely, we’re spinning up multiple Tom’s Hardware labs with Cybenetics’ Powenetics hardware/software solution for accurately measuring power consumption.

Powenetics, In Depth

For a closer look at our U.S. lab’s power consumption measurement platform, check out Powenetics: A Better Way To Measure Power Draw for CPUs, GPUs & Storage.

In brief, Powenetics utilizes Tinkerforge Master Bricks, to which Voltage/Current bricklets are attached. The bricklets are installed between the load and power supply, and they monitor consumption through each of the modified PSU’s auxiliary power connectors and through the PCIe slot by way of a PCIe riser. Custom software logs the readings, allowing us to dial in a sampling rate, pull that data into Excel, and very accurately chart everything from average power across a benchmark run to instantaneous spikes.
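The sketch below shows the arithmetic behind that kind of logging. It is a minimal Python example that assumes each logged sample reports a voltage and a current for every monitored rail. The rail names, the sample format, and the numbers are hypothetical illustrations. They are not Powenetics' actual data format or readings from this review.

```python
# Minimal sketch: turning per-rail voltage/current readings into board power.
# Assumption: each sample maps a (hypothetical) rail name to a (volts, amps) pair.
Sample = dict[str, tuple[float, float]]


def sample_power(sample: Sample) -> float:
    """Total board power for one sample: sum of P = V * I over every rail."""
    return sum(volts * amps for volts, amps in sample.values())


def summarize(samples: list[Sample]) -> tuple[float, float]:
    """Return (average, peak) board power in watts across a benchmark run."""
    powers = [sample_power(s) for s in samples]
    return sum(powers) / len(powers), max(powers)


# Two made-up samples: PCIe slot plus two eight-pin connectors, all at 12V.
run = [
    {"slot": (12.0, 2.0), "eight_pin_1": (12.0, 10.0), "eight_pin_2": (12.0, 10.0)},
    {"slot": (12.0, 3.0), "eight_pin_1": (12.0, 12.0), "eight_pin_2": (12.0, 12.0)},
]
average, peak = summarize(run)
print(f"average {average:.0f}W, peak {peak:.0f}W")  # average 294W, peak 324W
```

Averaging every sample is one straightforward way to get a run-long figure, and `max()` catches the instantaneous spikes. A higher sampling rate just feeds more samples per second into the same two calculations.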

The software is set up to log the power consumption of graphics cards, storage devices, and CPUs. However, we’re only using the bricklets relevant to graphics card testing. AMD's Radeon VII gets all of its power from the PCIe slot and a pair of eight-pin PCIe connectors. Should third-party Vega 20-based boards materialize at some point in the future with three auxiliary power connectors, we can support them, too.
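For context on that slot-plus-two-connectors layout, the PCI Express specification's nominal limits are 75W for an x16 graphics slot and 150W per eight-pin auxiliary connector. The short sketch below works through that arithmetic. The constants are the nominal spec figures. The function name is our own illustration and does not come from AMD or Cybenetics.

```python
# Nominal PCI Express power limits, in watts.
SLOT_W = 75         # x16 graphics card slot
EIGHT_PIN_W = 150   # each eight-pin auxiliary connector


def in_spec_budget(eight_pin_connectors: int) -> int:
    """Maximum in-spec board power for a card fed by the slot plus N eight-pin plugs."""
    return SLOT_W + eight_pin_connectors * EIGHT_PIN_W


radeon_vii = in_spec_budget(2)   # slot + two eight-pin connectors
print(radeon_vii)                # 375
print(radeon_vii - 300)          # 75W of margin over the 300W board power
print(in_spec_budget(3))         # 525, a hypothetical three-connector board
```

This margin only describes what the connectors are allowed to carry. The card's actual ceiling is the power limit AMD sets for the board.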

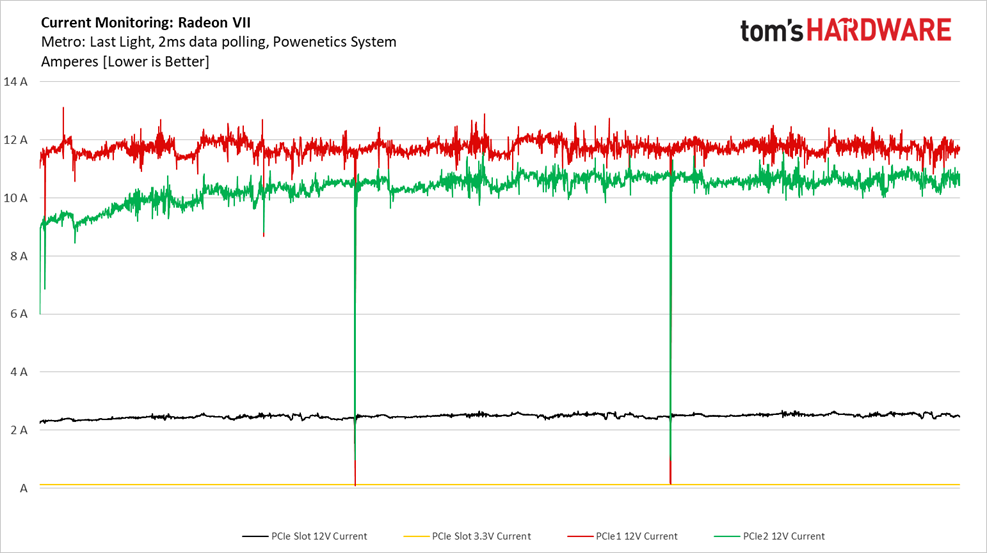

Gaming: Metro: Last Light

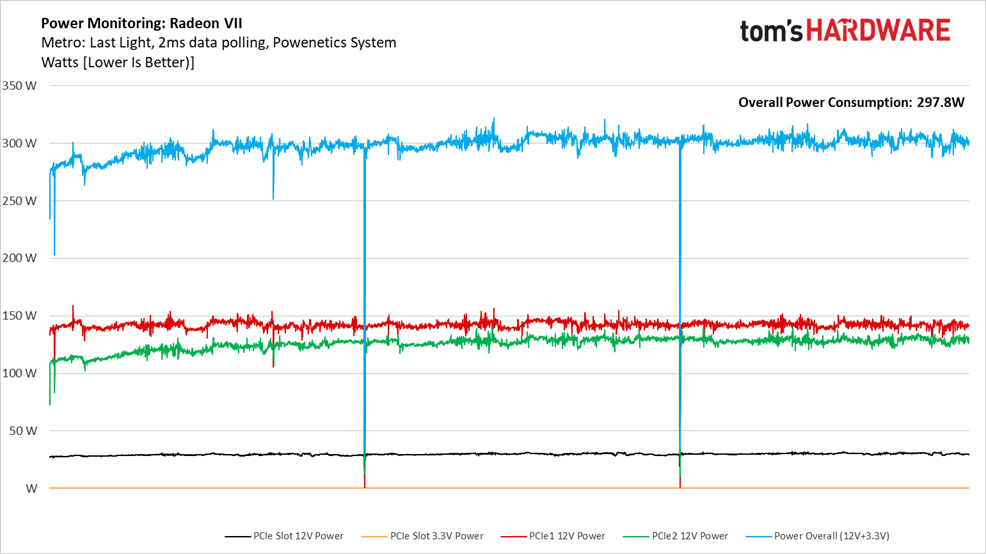

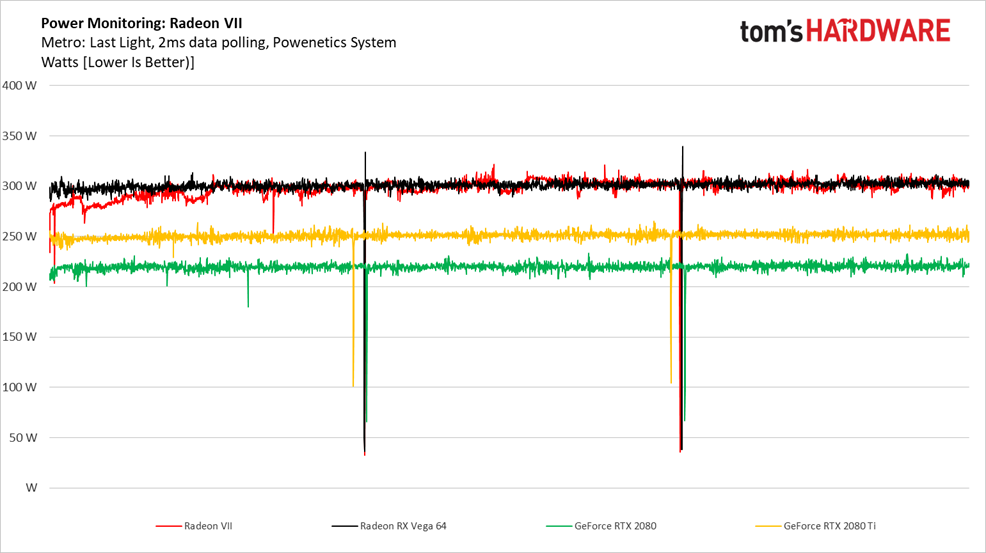

Three runs of the Metro: Last Light benchmark give us consistent, repeatable results, which makes it easier to compare the power consumption of graphics cards.

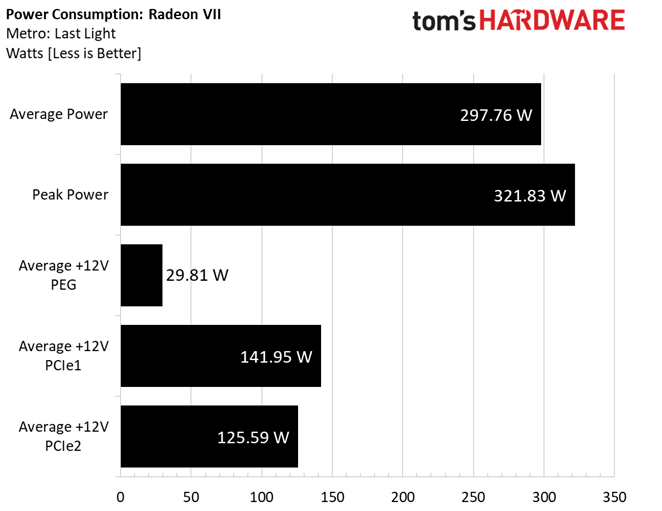

AMD extracts as much performance out of Radeon VII's power budget as possible. Through our three-run recording, the card averages almost 298W with spikes that approach 322W.


Very little power is delivered over the PCI Express slot. Rather, the load is split fairly evenly between the two eight-pin auxiliary connectors.

The blue overall power consumption line, representing the sum of all other lines, mostly obeys AMD's 300W limit.

Less impressive is the fact that Radeon VII does battle against a card rated for 75W less, manufactured on a 12nm node. Even GeForce RTX 2080 Ti, which is significantly faster, uses quite a bit less power.

At least AMD isn't in unprecedented territory. Despite a supposed 295W power limit, its Radeon RX Vega 64 demonstrated a power profile similar to Radeon VII's.

Current over the PCIe slot stays just over 2A. Clearly, those eight-pin connectors do most of the heavy lifting here.

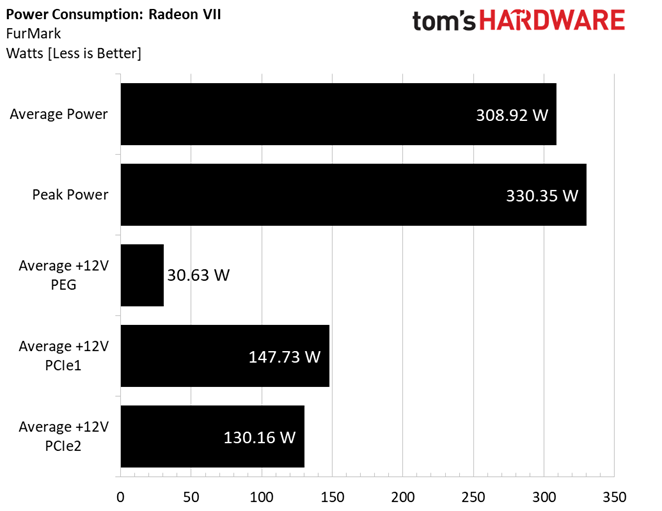

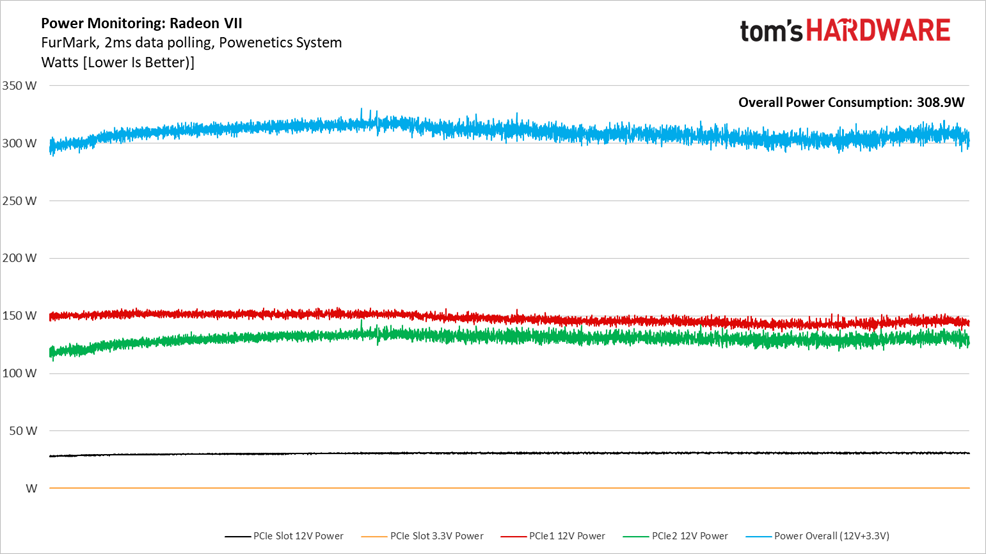

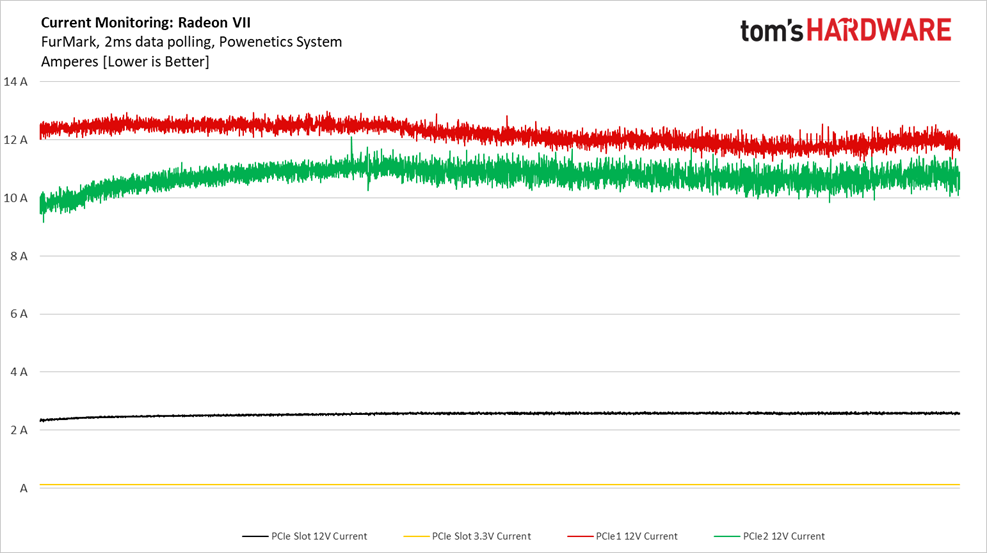

FurMark

FurMark is a steadier workload, resulting in less variation across our test run. Average power does rise slightly to 309W with spikes as high as 330W.

The much more consistent workload makes it easier to compare draw over each rail. Again, AMD achieves good balance between its two eight-pin auxiliary connectors, while the PCIe slot averages 30W.
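One way to put a number on "good balance" is to express each rail as a share of the total and to compare the two eight-pin connectors directly. The sketch below assumes you already have per-rail average power in watts. The split it uses is made up for illustration and is not the measured FurMark breakdown. The function names are hypothetical.

```python
def rail_shares(avg_watts: dict[str, float]) -> dict[str, float]:
    """Fraction of total board power delivered by each rail."""
    total = sum(avg_watts.values())
    return {rail: watts / total for rail, watts in avg_watts.items()}


def connector_imbalance(a_watts: float, b_watts: float) -> float:
    """Relative gap between two connectors: 0.0 means a perfectly even split."""
    return abs(a_watts - b_watts) / max(a_watts, b_watts)


# Hypothetical averages in watts; not measurements from this review.
averages = {"slot": 30.0, "eight_pin_1": 135.0, "eight_pin_2": 135.0}
shares = rail_shares(averages)
print({rail: round(share, 2) for rail, share in shares.items()})
# {'slot': 0.1, 'eight_pin_1': 0.45, 'eight_pin_2': 0.45}
print(connector_imbalance(averages["eight_pin_1"], averages["eight_pin_2"]))  # 0.0
```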

GeForce RTX 2080 and 2080 Ti are well-behaved. They operate within a tight power range and generally obey Nvidia's limits.

Radeon RX Vega 64 has a harder time keeping up with the demands of FurMark. It starts off strong, quickly heats up, and then oscillates within a ~15W range to avoid violating one of AMD's upper bounds.
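A range like that is the peak-to-peak spread of the power trace after the card settles. The minimal sketch below assumes a list of power readings in watts and a hypothetical count of warm-up samples to discard before steady state. The trace values are invented for illustration.

```python
def peak_to_peak(watts: list[float], warmup_samples: int = 0) -> float:
    """Spread (max - min) of power readings after discarding the warm-up period."""
    steady = watts[warmup_samples:]
    if not steady:
        raise ValueError("no samples left after skipping the warm-up period")
    return max(steady) - min(steady)


# Made-up trace: a strong start, then oscillation once the card heats up.
trace = [300.0, 296.0, 283.0, 276.0, 288.0, 277.0, 287.0]
print(peak_to_peak(trace))                    # 24.0 (includes the initial burst)
print(peak_to_peak(trace, warmup_samples=3))  # 12.0 (steady-state oscillation only)
```

Skipping the warm-up matters here. Including the strong opening seconds would overstate how much the card swings once its limits take over.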

Radeon VII doesn't have the same issue. Its power consumption line chart isn't as tightly grouped. But the card still maintains its performance under that full-length heat sink and trio of axial fans.

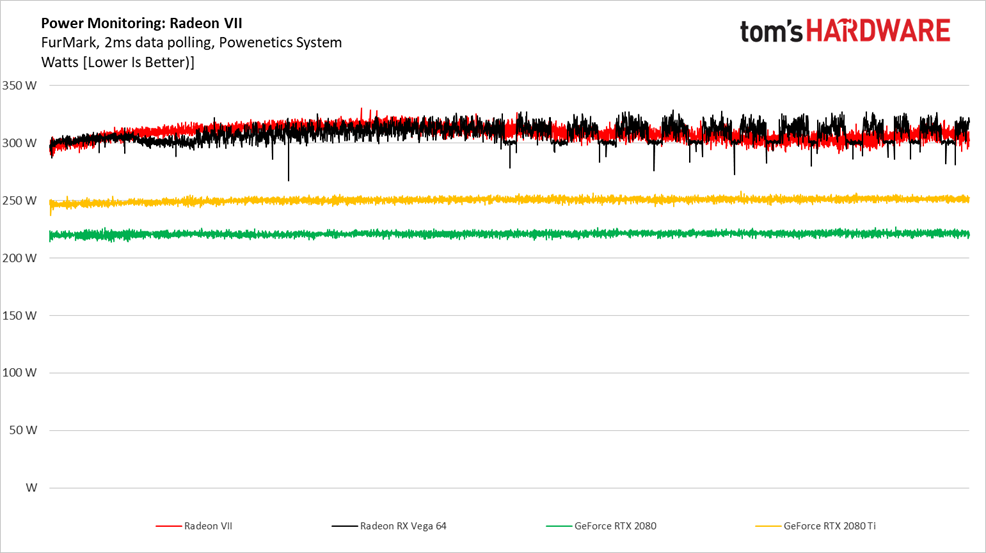

Current draw over the PCIe slot hovers between 2A and 3A, leaving lots of headroom under the 5.5A ceiling.
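You can convert slot current to power with P = V × I. This assumes a nominal 12.0V rail (real readings drift slightly) and considers only the slot's 12V pins. On that basis, 2A to 3A works out to roughly 24W to 36W, which lines up with the ~30W FurMark slot average noted earlier, and the 5.5A ceiling corresponds to 66W. The function names below are illustrative.

```python
SLOT_12V_LIMIT_A = 5.5  # PCIe limit on the slot's 12V pins
NOMINAL_RAIL_V = 12.0   # assumption: ideal 12V; measured voltage varies a little


def slot_watts(amps: float, volts: float = NOMINAL_RAIL_V) -> float:
    """Power drawn through the slot's 12V pins, P = V * I."""
    return volts * amps


def headroom(amps: float) -> float:
    """Amps remaining before the slot's 12V current limit is reached."""
    return SLOT_12V_LIMIT_A - amps


for amps in (2.0, 2.5, 3.0):
    print(f"{amps}A -> {slot_watts(amps):.0f}W, {headroom(amps)}A headroom")
print(f"ceiling: {slot_watts(SLOT_12V_LIMIT_A):.0f}W")
```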


cknobman: I have to say (as an AMD fan) that I am a little disappointed with this card, especially given the price point.

velocityg4: This might be an attack on the RTX 2080 if the price were closer to that of an RTX 2070. Looking at the results in the article, it only approaches RTX 2080 performance in games that tend to favor AMD cards, and even in those it barely surpasses the RTX 2080, while the RTX 2080 soundly beats it in Nvidia-optimized titles. I'd say it would be an RTX 2075 if such a thing existed.

Don't get me wrong. It is still a better card than an RTX 2070. But its performance doesn't justify RTX 2080 pricing. Based on current PCPartPicker pricing for the 2070 and 2080, $600 USD would be a better-suited price.

Compute is a different matter. Depending on your specific work requirements, you can get some great bang for your buck.

Still, it would be nice if AMD could blow out pricing in the GPU segment as it does in the CPU segment, although its strategy may be more of an attack on the compute segment, given the large amount of memory and FP64 performance.

21749331 said: Under the hood, AMD’s Vega 20 graphics processor looks a lot like the Vega 10 powering Radeon RX Vega 64. But a shift from 14nm manufacturing at GlobalFoundries to TSMC’s 7nm node makes it possible for AMD to operate Vega 20 at much higher clock rates than its processor.

Did you mean predecessor?

richardvday: They can't lower the price without losing money on each card. That memory costs so much that it's half of the BOM.

King_V: 21749475 said: they cant lower the price without losing money on each card that memory cost so much its half of the BOM

I know this has been mentioned, but do we have any hard data where we know this for certain?

I remember asking someone before, and they posted a link, but even that seemed to be a he-said-she-said kind of thing.

I do have to agree, though, overall, with a vague disappointment. Given its performance, value-wise, it seems this is worthwhile only if you really want at least two of the games in the bundle.

I hadn't thought about what AMD's motivation was, but the thought that even AMD was caught a little by surprise at Nvidia's somewhat arrogant pricing for the RTX 2070/2080/2080Ti, and "smelled blood" as it were, is somewhat plausible.

redgarl: Something is wrong with your Tomb Raider bench; TechSpot's results give the RVII the victory.

timtiminhouston: I am an AMD fan, but AMD needs to stop letting sales gurus dictate everything. The fact is AMD is WAY behind Nvidia at this point, and there is absolutely no way they should have disabled anything from the datacenter card to make up for not having Tensor OR Ray Tracing; they should only have had less memory. I understand reviewers trying not to savage the card, but let's be honest: if I won this card, I would probably sell it unopened. I am all about GPGPU, and with the HD 7970 I at least had massive compute compared to the competition (125k Pyrit hashes/sec), even though Nvidia was still better in most games. Not only is this card not better in gaming, it is way behind in GPGPU technology. I look forward to buying a used RTX card in a year or two to see what else the Tensor cores can be used for on Linux, but not so much this card. If I saw it for $300 on Craigslist, I might be tempted, but GPU and memory prices are still way overinflated due to the cryptoscam boom. Luckily, it looks like they are running short of suckers. This is a hard pass, and there's no way I can recommend it. A used GTX 1070 or Vega 56 for $200 is far better bang for the buck. Hold your money for a year or so.

edjetorsy: I am not impressed by this AMD graphics card; for almost the same price, an RTX 2080 offers more performance and lower power consumption.

The Ryzen CPUs, however, I am interested in: the 2700X is a good deal, and I'm waiting for the third-generation Ryzen to appear to see what this baby can do compared to Intel's high end. But no, I own a GTX 1070, and I think I'll pass on this whole RTX and Radeon VII generation; there is not much to gain for the price at this time.

shapoor12: I can't understand the 331mm² die size on Radeon VII when the RTX 2080 has 545mm². AMD could smash Nvidia by making bigger cards. Why are you doing this to yourself, AMD? WHY? WHY?

Olle P: At least it's the first 7nm consumer-marketed GPU on the market.

The price cut from ~$5,000 (vanilla MI50) to $700 doesn't hurt.

Some undervolting and underclocking should do wonders for noise and heat.

Time to sit back and wait for Navi...

Fulgurant: "Nvidia’s Turing-based cards proved this by serving up solid performance, but simultaneously turning many gamers off with steep prices. It was only when the company worked its way down to GeForce RTX 2060 and had to compete against Radeon RX Vega 56/64 that it got serious about telling a more compelling value story."

I don't see how the 2060 is a compelling value story. Sure, it's more cost-efficient than its high-end cousins, but the 2060 offers very little in the way of a performance increase to people who were in the same price bracket previously (1070 owners), and it offers an enormous price and power premium to people who own 1060s.

Spent ~$370 almost 3 years ago on an Nvidia card? Well, now you can plop down roughly the same money for about a 15% performance increase and a 2 GB loss of VRAM. That tech journalists actually tout this as great progress mystifies me.

Unfortunately, this newest release from AMD doesn't look like it presages significant price pressure to bring Nvidia back down to earth. Things might get better as AMD drives down toward the midrange segment, but who knows? Here's hoping.