AMD Radeon VII 16GB Review: A Surprise Attack on GeForce RTX 2080

AMD is first to market with a 7nm gaming GPU. The company complements its Vega 20 processor with 16GB of HBM2 on a 4,096-bit bus, packing it all into a 300W Radeon VII graphics card. Should those numbers impress you? Yeah, actually, they should.

Performance Results: LuxMark, SPECviewperf, Cinema4D and Blender

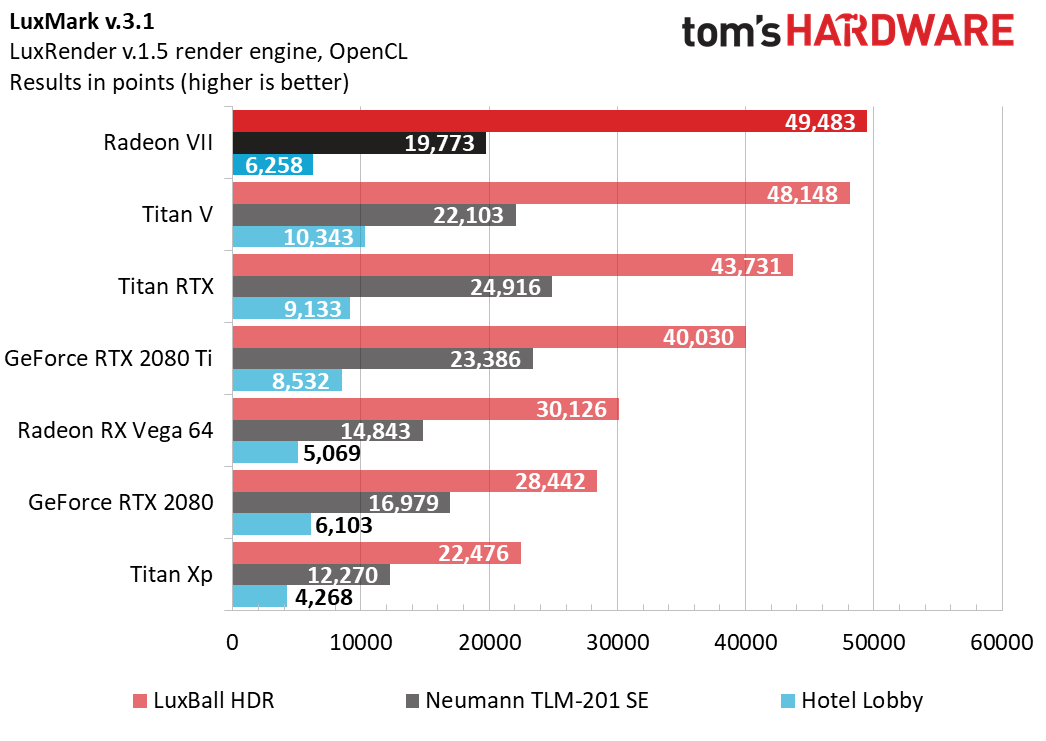

LuxMark v.3.1

The latest version of LuxMark is based on an updated LuxRender 1.5 render engine, which specifically incorporates OpenCL optimizations that invalidate comparisons to previous versions of the benchmark.

We tested all three scenes available in the 64-bit benchmark: LuxBall HDR (with 217,000 triangles), Neumann TLM-102 SE (with 1,769,000 triangles), and Hotel Lobby (with 4,973,000 triangles).

Radeon VII is the first-place finisher in LuxMark's LuxBall HDR workload, beating the Turing-based Titan RTX and Volta-based Titan V thanks to a substantial memory bandwidth advantage. It doesn't fare quite as well in the subsequent tests, which are more compute-intensive.

With that said, Radeon VII also scores better than GeForce RTX 2080, its primary competition, in the Neumann TLM-102 SE and Hotel Lobby tests.

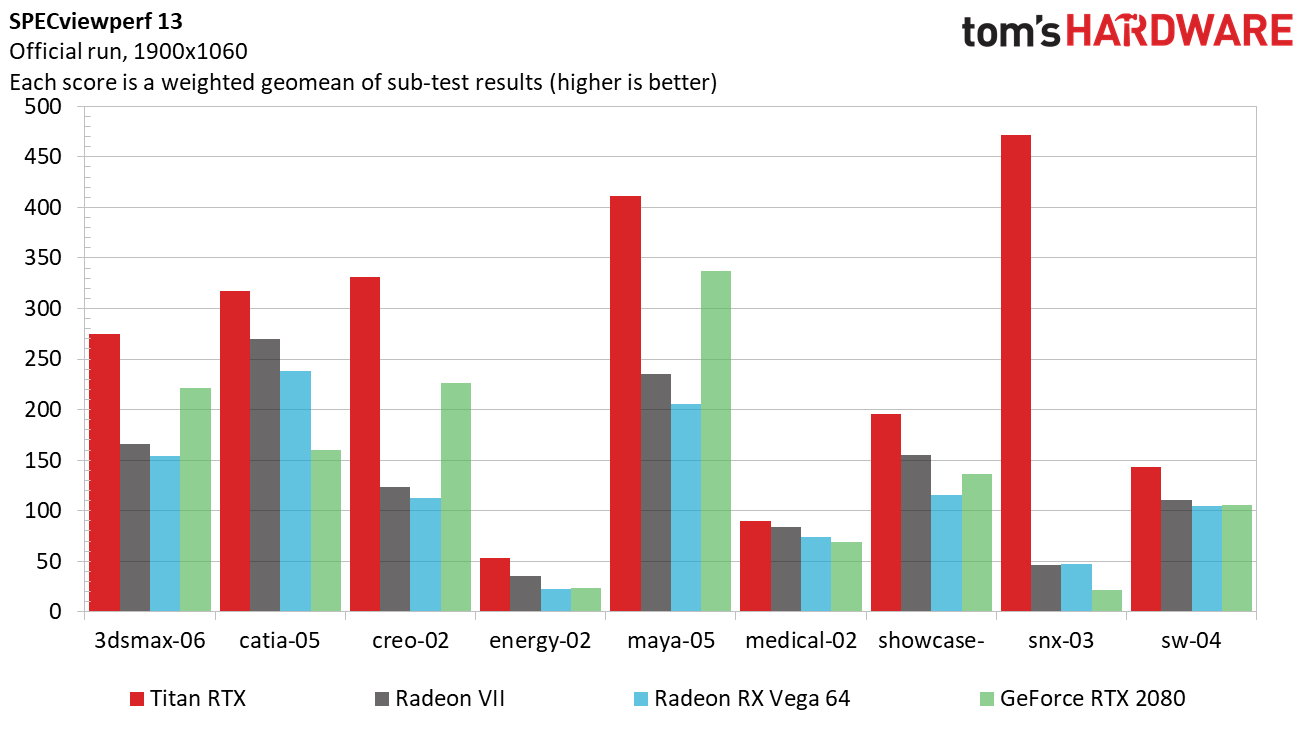

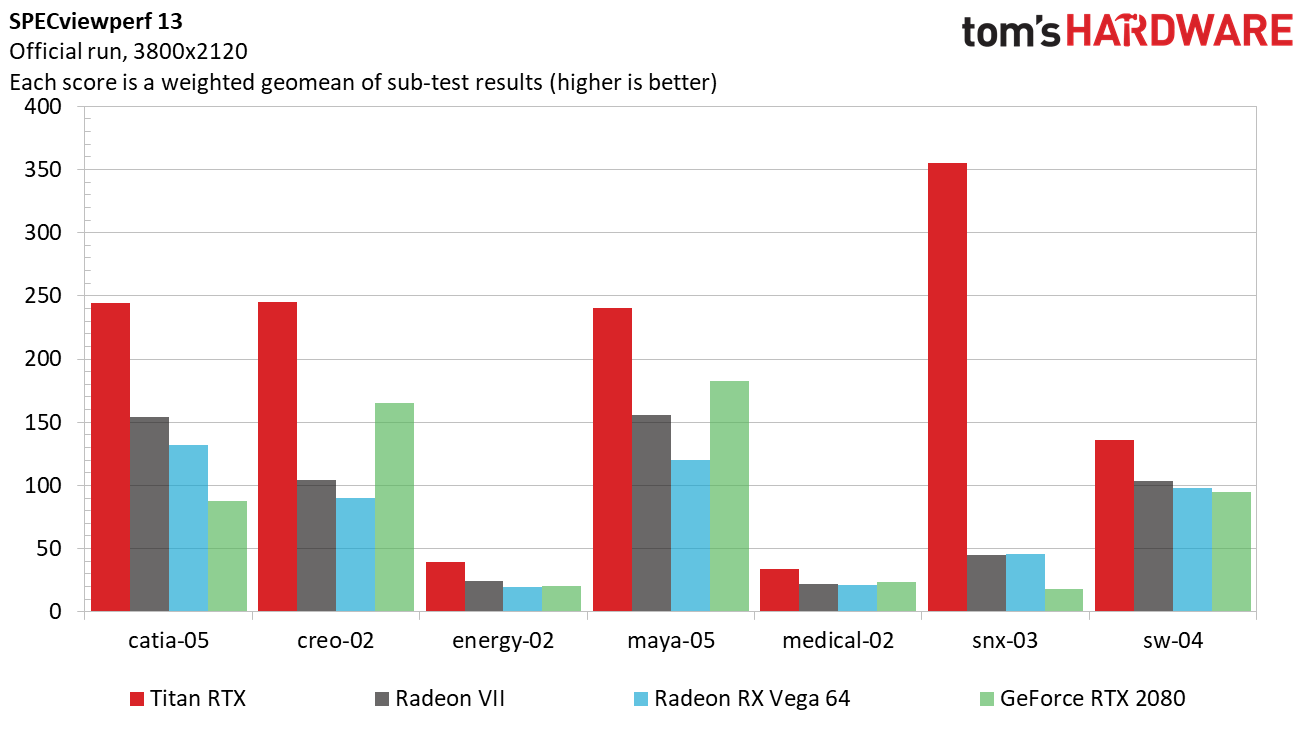

SPECviewperf 13

The most recent version of SPECviewperf employs traces from Autodesk 3ds Max, Dassault Systemes Catia, PTC Creo, Autodesk Maya, Autodesk Showcase, Siemens NX, and Dassault Systemes SolidWorks. Two additional tests, Energy and Medical, aren’t based on a specific application, but rather on datasets typical of those industries.

Oil and gas workloads tend to involve very large datasets, which play into Radeon VII's 16GB of HBM2 at 1 TB/s. The same goes for certain medical applications. And in those tests, AMD's flagship is faster than GeForce RTX 2080.
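As a rough sanity check on that 1 TB/s figure, theoretical peak bandwidth is the bus width multiplied by the per-pin data rate. The sketch below uses the 4,096-bit bus quoted for Radeon VII and assumes a 2.0 Gb/s per-pin HBM2 data rate, which this page does not state; the function name is purely illustrative.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: total memory bus width in bits (4,096 for Radeon VII).
    pin_rate_gbps:  per-pin data rate in Gb/s (2.0 is an assumption here).
    """
    # Each pin moves pin_rate_gbps gigabits per second; divide by 8 for bytes.
    return bus_width_bits * pin_rate_gbps / 8


radeon_vii = peak_bandwidth_gb_s(4096, 2.0)
print(f"{radeon_vii:.0f} GB/s")  # prints "1024 GB/s"
```

This is a theoretical ceiling only; sustained throughput in real workloads will be lower.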

Catia and NX, specifically, respond well to the professional driver optimizations that benefit Nvidia's Titan cards. AMD's Radeons are quite a bit slower in both benchmarks. However, the Radeon VII and Radeon RX Vega 64 make easy work of GeForce RTX 2080.

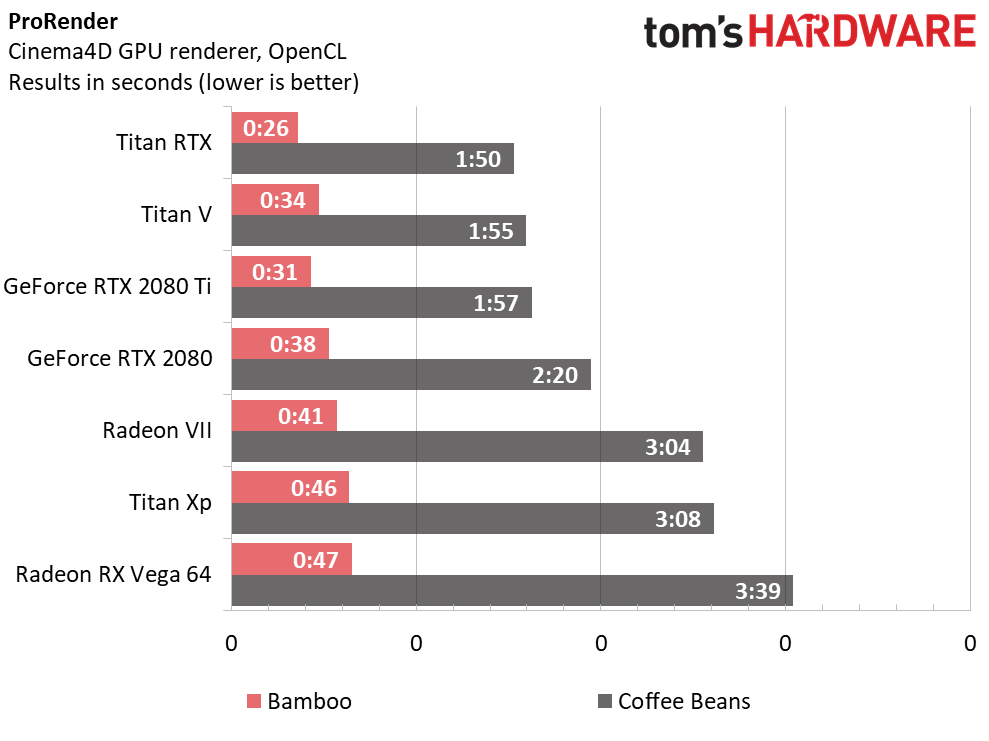

Cinema4D

ProRender is a physically-based GPU render engine. Unlike Arion Render, which we tested in our Titan RTX review, it utilizes OpenCL instead of CUDA.

Blender

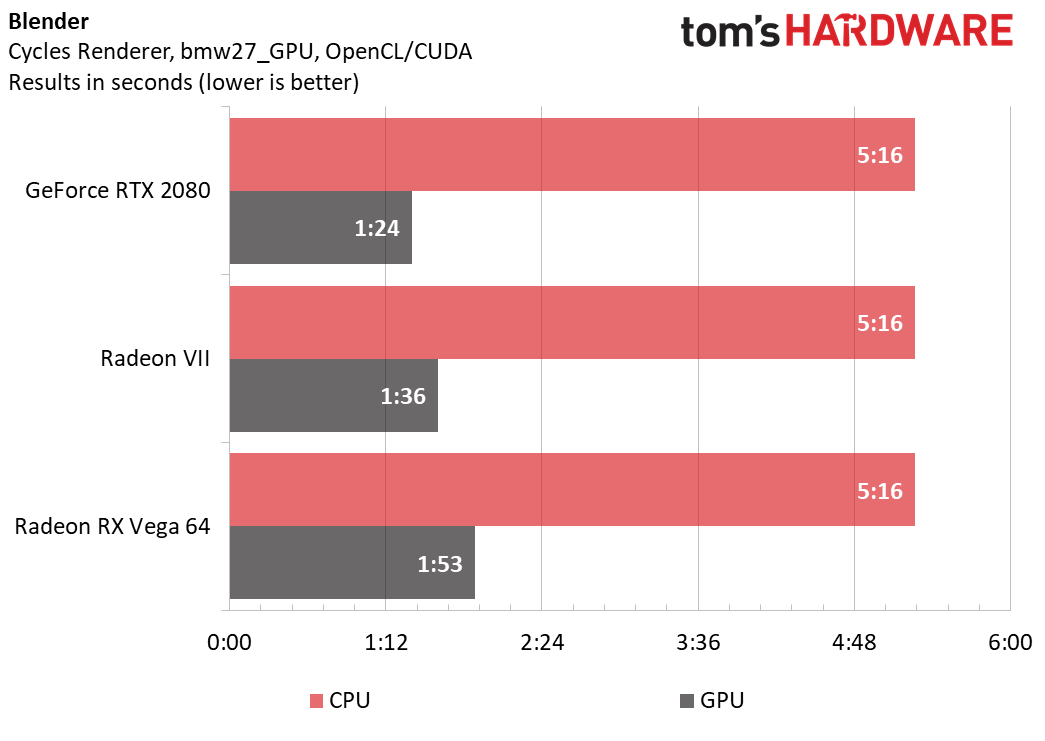

Per AMD's recommendation, we tested Radeon VII and Radeon RX Vega 64 using Blender v.2.79b. In order to get CUDA acceleration from GeForce RTX 2080, we had to use v.2.80.

Rendering the bmw27_GPU test file using our Core i7-8700K took 5:16, regardless of the graphics card we had installed. Switching over to GPU acceleration through OpenCL or CUDA brought those times down significantly. Although Radeon VII trails GeForce RTX 2080, it definitely improves upon Radeon RX Vega 64's performance.


cknobman I have to say (as an AMD fan) that I am a little disappointed with this card, especially given the price point.

velocityg4 This might be an attack on the RTX 2080 if the price was closer to that of an RTX 2070. Looking at the results in the article, it only approaches RTX 2080 performance in games which tend to favor AMD cards. Yet even in those it barely surpasses the RTX 2080, while the RTX 2080 soundly beats it in Nvidia-optimized titles. I'd say it would be an RTX 2075 if such a thing existed.

Don't get me wrong. It is still a better card than an RTX 2070. But its performance doesn't justify RTX 2080 pricing. Based on current pricing on PCPartPicker of the 2070 and 2080, $600 USD would be a price better suited for it.

Compute is a different matter. Depending on your specific work requirements. You can get some great bang for your buck.

Still it would be nice if AMD could blow out the pricing in the GPU segment as it does in the CPU segment. Although their strategy may be more of an attack on the compute segment. Given the large amount of memory and FP64 performance.

21749331 said: Under the hood, AMD’s Vega 20 graphics processor looks a lot like the Vega 10 powering Radeon RX Vega 64. But a shift from 14nm manufacturing at GlobalFoundries to TSMC’s 7nm node makes it possible for AMD to operate Vega 20 at much higher clock rates than its processor.

Did you mean predecessor?

richardvday they cant lower the price without losing money on each card that memory cost so much its half of the BOM

King_V 21749475 said: they cant lower the price without losing money on each card that memory cost so much its half of the BOM

I know this has been mentioned, but do we have any hard data where we know this for certain?

I remember asking someone before, and they posted a link, but even that seemed to be a he-said-she-said kind of thing.

I do have to agree, though, overall, with a vague disappointment. Given its performance, value-wise, it seems this is worthwhile only if you really want at least two of the games in the bundle.

I hadn't thought about what AMD's motivation was, but the thought that even AMD was caught a little by surprise at Nvidia's somewhat arrogant pricing for the RTX 2070/2080/2080Ti, and "smelled blood" as it were, is somewhat plausible.

redgarl Something is wrong with your Tomb Raider bench, TechSpot results are giving the RVII the victory.

timtiminhouston I am an AMD fan but AMD needs to stop letting sales gurus dictate everything. The fact is AMD is WAY behind Nvidia at this point, and there is absolutely no way they should have disabled anything from the datacenter card to make up for not having Tensor OR Ray Tracing; they should have only had less memory. I understand reviewers trying not to savage the card, but let's be honest, if I won this card I would probably sell it unopened. I am all about GPGPU, and in the HD7970 at least I had massive compute compared to the competition (125k Pyrit hashes/sec), even though Nvidia was still better in most games. Not only is this card not better in gaming, it is way behind in GPGPU technology. I look forward to buying a used RTX card in a year or two to see what else the Tensor cores can be used for on Linux, but not so much this card. If I saw it for $300 on Craigslist I might be tempted, but GPU and memory prices are still way over inflated due to the cryptoscam boom. Luckily it looks like they are running short of suckers. This is a hard pass, and no way I can recommend it. A used GTX 1070 or Vega 56 for 200 is far better bang for buck. Hold your money for a year or so.

edjetorsy I am not impressed by this AMD graphical card at almost the same price for a RTX2080 more performance and lower power consumption.

The Ryzen CPU however I am interested in, the 2700X is a good deal and waiting for the Ryzen 3e generation to appear and see what this baby can do compare to Intel high end . But no I own a GTX1070 il think I pass this whole RTX and Radeon VII generation, there is not so much to gain for the price at this time.

shapoor12 can't understand the 331mm2 die size on Radeon VII while rtx 2080 have 545mm2 , amd could smash Nvidia by making bigger cards. why u doing this to yourself AMD? WHY?WHY?

Olle P At least it's the first 7nm consumer-marketed GPU to the market.

The price cut from ~$5,000 (vanilla MI50) to $700 doesn't hurt.

Some undervolting and -clocking should do wonders to noise and heat.

Time to sit back and wait for Navi...

Fulgurant "Nvidia’s Turing-based cards proved this by serving up solid performance, but simultaneously turning many gamers off with steep prices. It was only when the company worked its way down to GeForce RTX 2060 and had to compete against Radeon RX Vega 56/64 that it got serious about telling a more compelling value story."

I don't see how the 2060 is a compelling value story. Sure, it's more cost-efficient than its high-end cousins, but the 2060 offers very little in the way of a performance increase to people who were in the same price bracket previously (1070 owners), and it offers an enormous price and power premium to people who own 1060s.

Spent ~$370 almost 3 years ago for an Nvidia card? Well now you can plop down roughly the same money for about a 15% performance increase and a 2 GB loss of VRAM. That tech-journalists actually tout this as great progress mystifies me.

Unfortunately this newest release from AMD doesn't look like it presages significant price pressure to bring Nvidia back down to earth. Things might get better as AMD drives down towards the midrange segment, but who knows? Here's hoping.