Micron 9100 Max NVMe 2.4TB SSD Review


OLTP & Email Server Workloads

To read more about our test methodology, visit How We Test Enterprise SSDs, which explains how to interpret our charts. The most crucial step in ensuring accurate and repeatable tests is a solid preconditioning methodology, which is covered on page three. We cover workload performance measurements on page six, latency metrics on page seven, and QoS testing and the QoS domino effect on page nine.

The 9100 has taken a bit of flak for its low performance and higher latency during light, simple random read and write workloads, but the server workloads shift gears to mixed read/write activity. These mixed workloads are the bread and butter of daily application performance, and the Micron 9100 delivers where it counts.

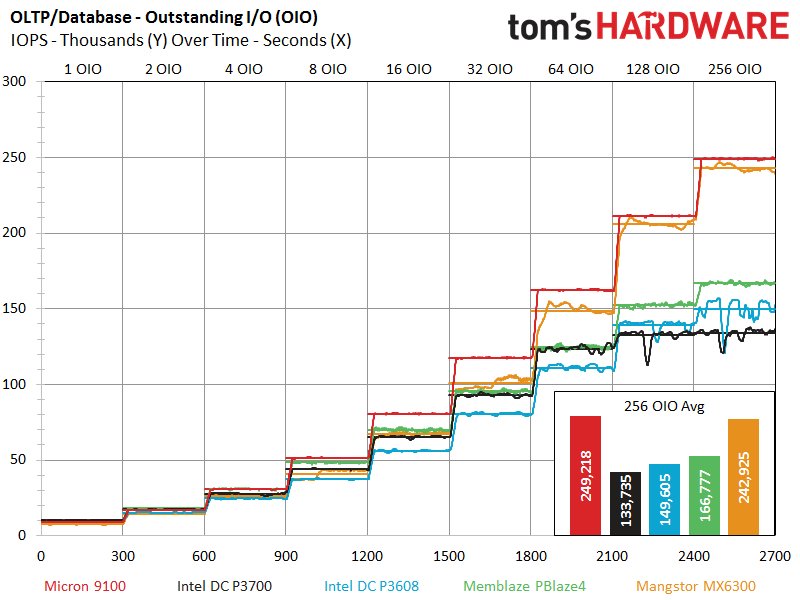

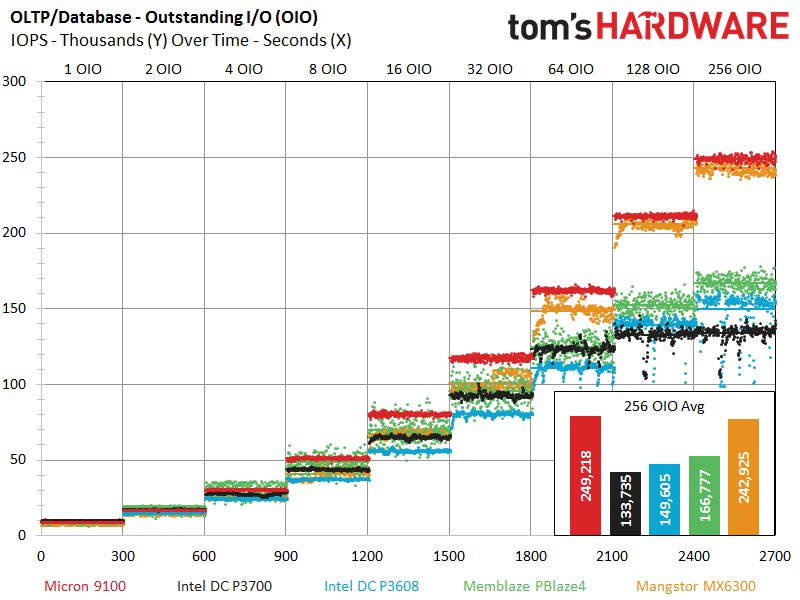

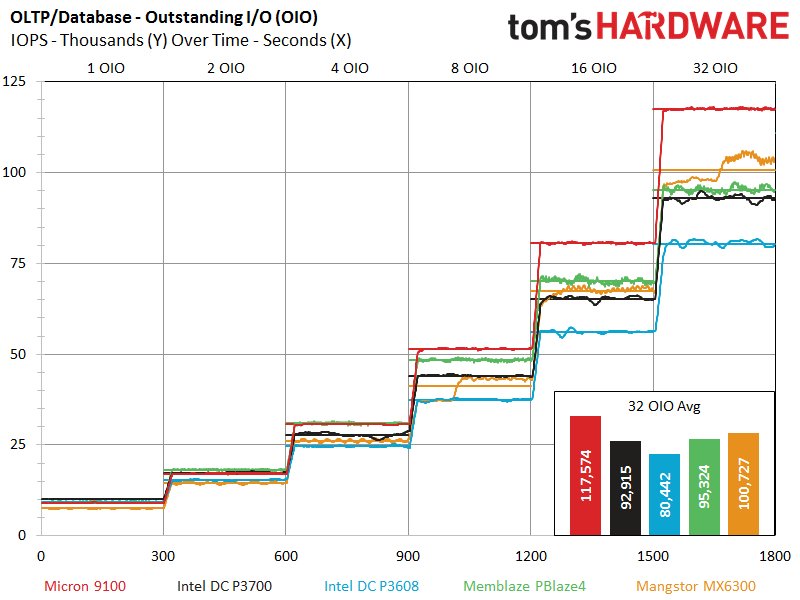

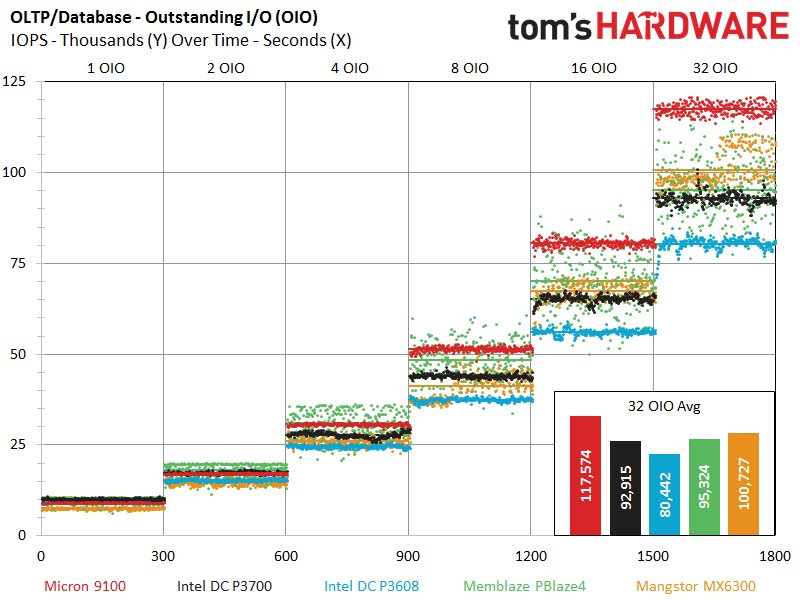

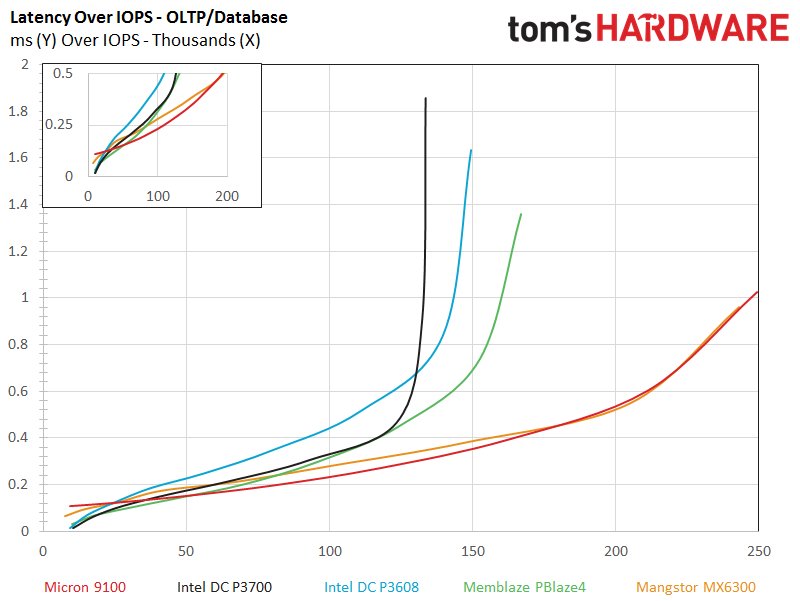

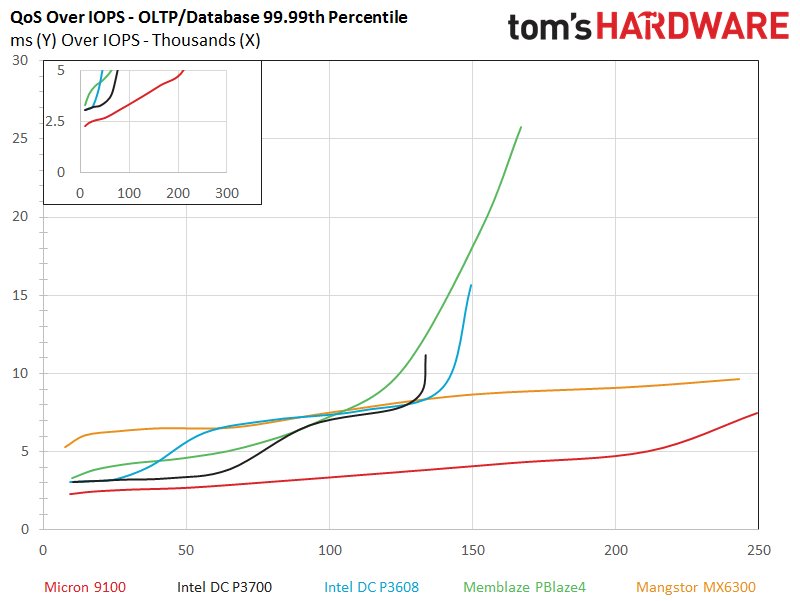

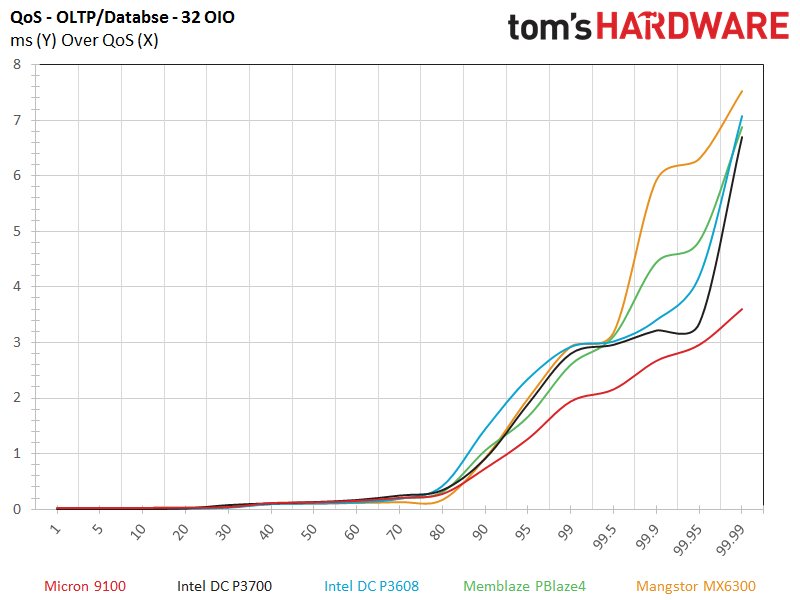

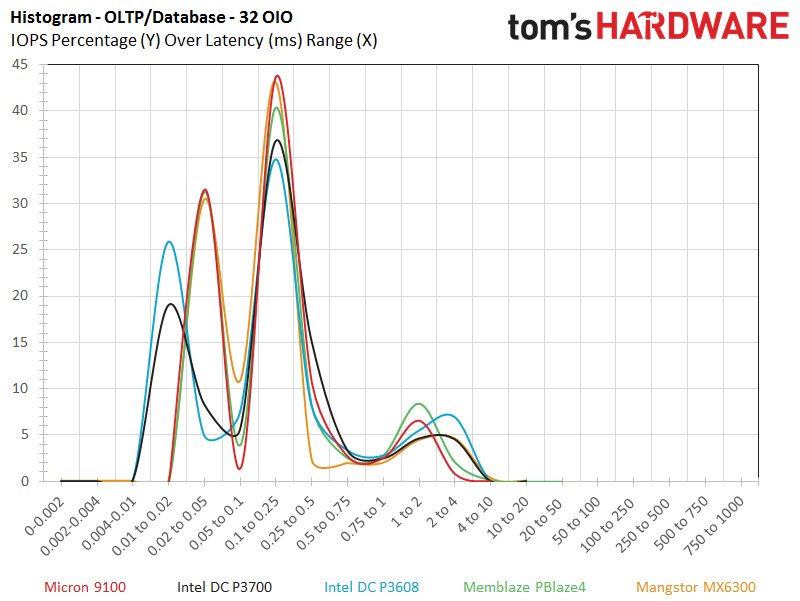

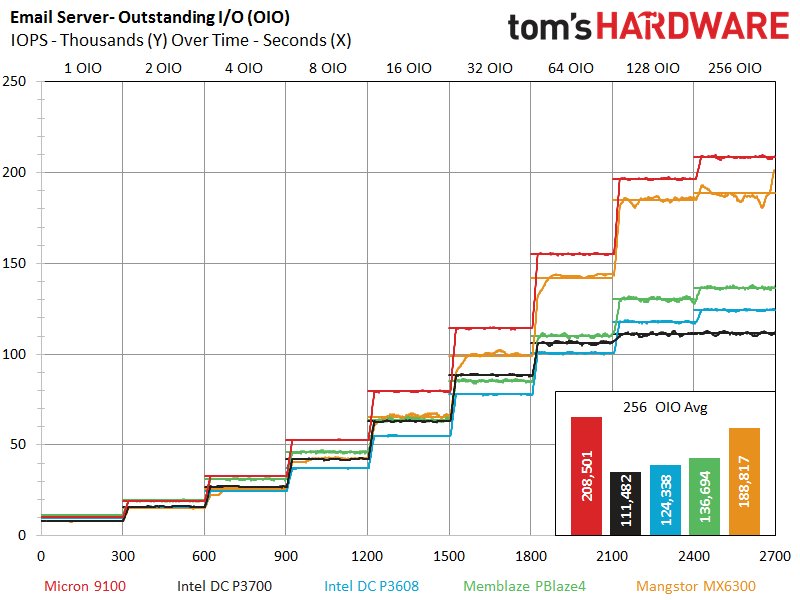

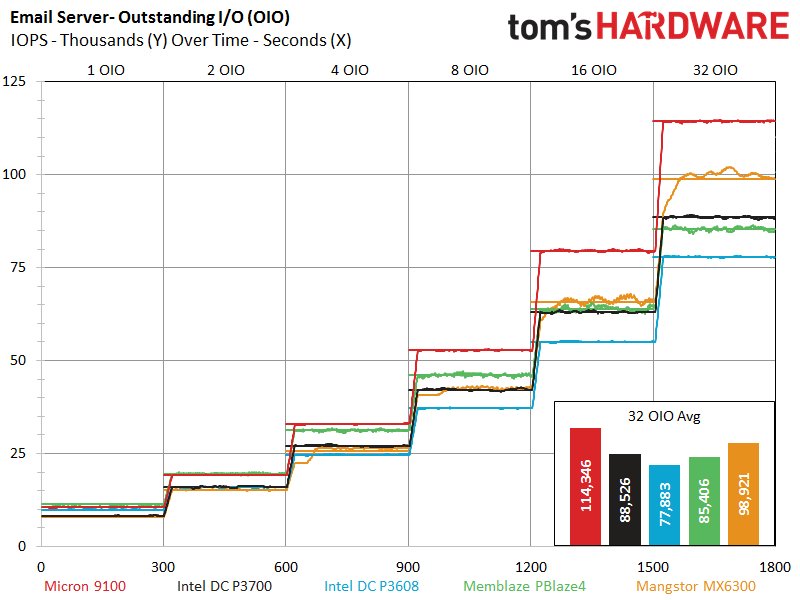

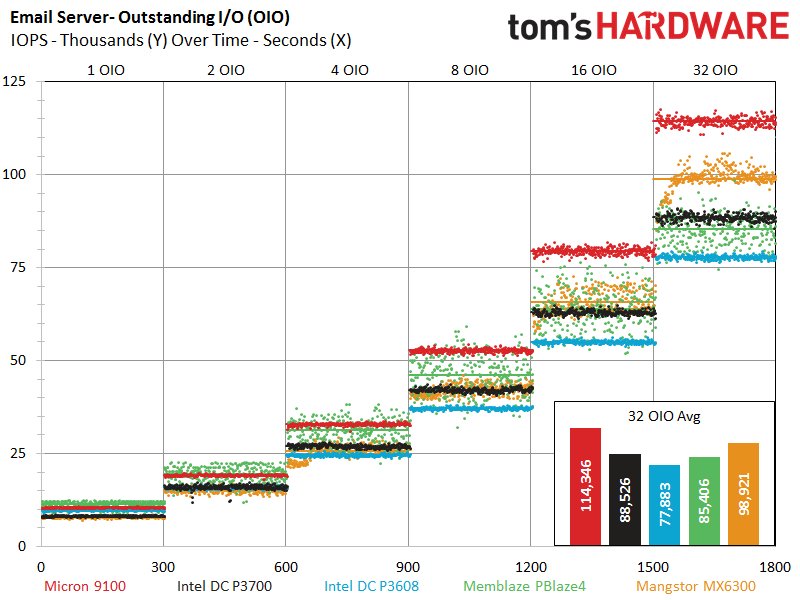

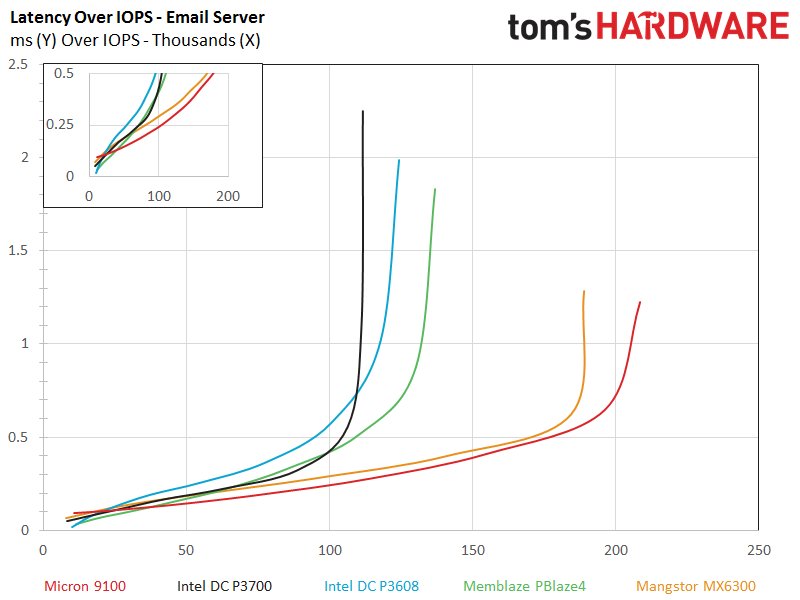

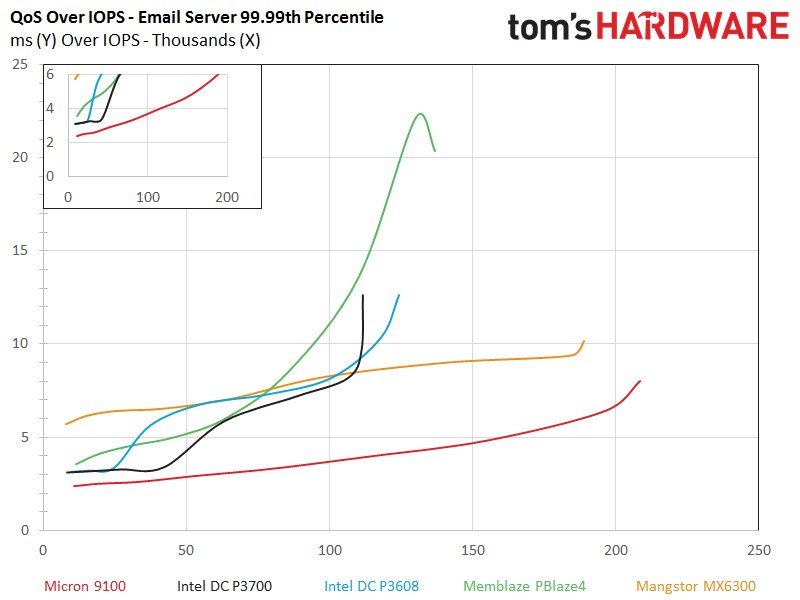

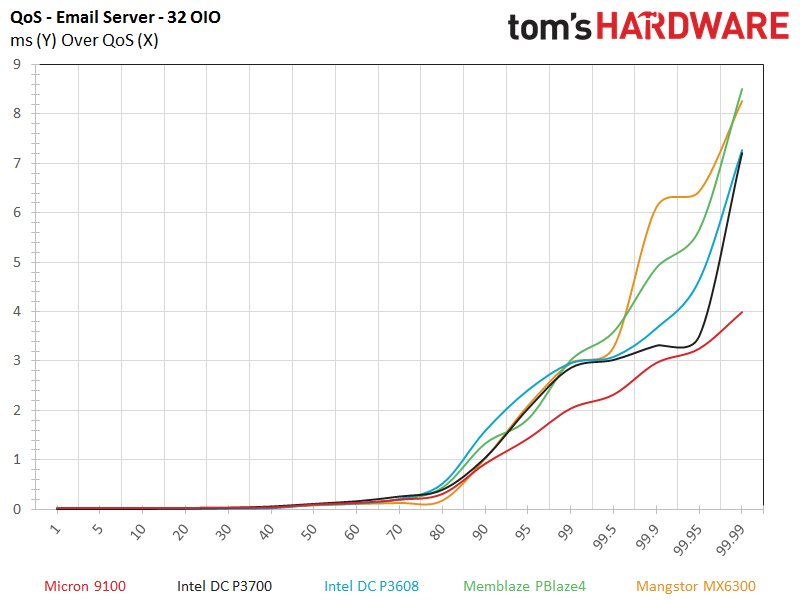

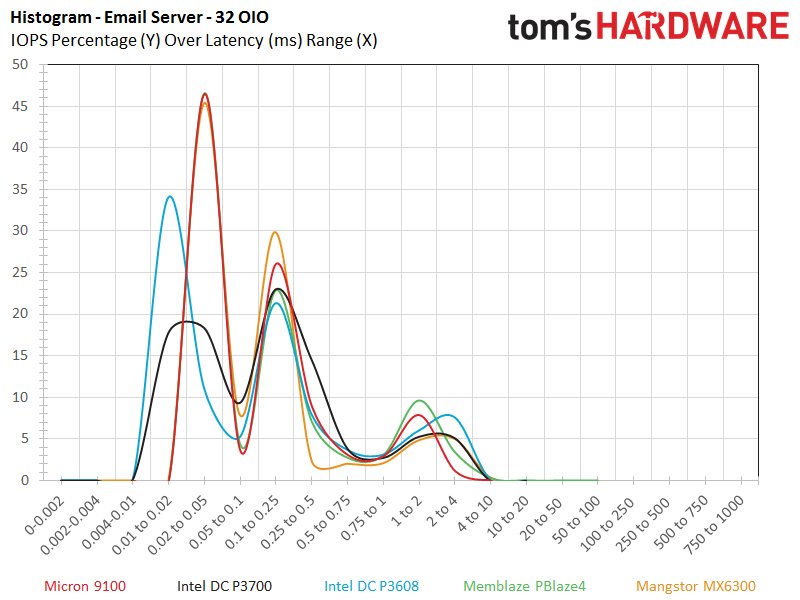

The improved light-workload performance during the server workloads isn't entirely surprising; our 8K mixed workloads foreshadowed the turnaround. The Micron 9100 Max is in the mix with the rest of the test pool at 1 and 2 OIO, although the DC P3700 takes a slight lead at 1 OIO and the PBlaze4 edges it out at 2 OIO. The 9100 takes off at 4 OIO and handily trounces the competition for the remainder of the test. The MX6300 bears down with its 100-core controller but still can't keep pace with the 9100, which beats the rest of the single-ASIC test pool by more than 80,000 IOPS. The 9100 also offers the most refined performance distribution of the test pool, and although we note a slightly higher latency at 1 OIO in the latency-over-IOPS chart, it provides an impressive (and untouchable) profile in the QoS metrics.
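For readers relating the outstanding I/O (OIO) counts above to the latency-over-IOPS charts, Little's Law gives a rough steady-state link between the two: average latency is approximately the number of outstanding I/Os divided by the IOPS. The sketch below is a minimal Python illustration of that relationship. The function name and the sample figures are hypothetical and are not measurements from this review.

```python
def average_latency_us(outstanding_io: int, iops: float) -> float:
    """Estimate mean latency in microseconds via Little's Law (L = lambda * W)."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    # W = L / lambda, converted from seconds to microseconds.
    return outstanding_io / iops * 1_000_000


# Hypothetical figures for illustration only, not results from the charts.
for oio, iops in [(1, 10_000), (4, 40_000), (64, 320_000)]:
    latency = average_latency_us(oio, iops)
    print(f"{oio:>3} OIO at {iops:>7,} IOPS -> {latency:.0f} us")
```

The practical reading is that a drive which keeps scaling IOPS as OIO rises holds its average latency roughly flat, while a drive whose IOPS plateau sees latency climb in proportion to queue depth.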

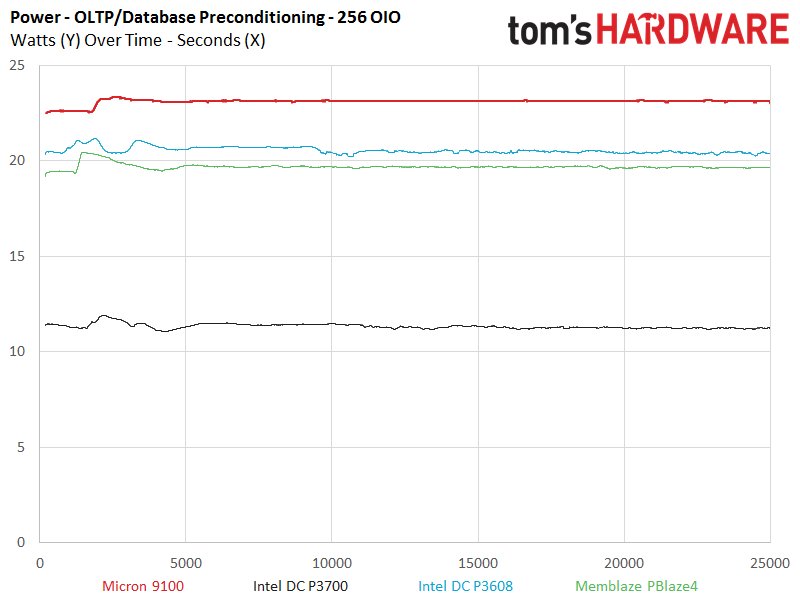

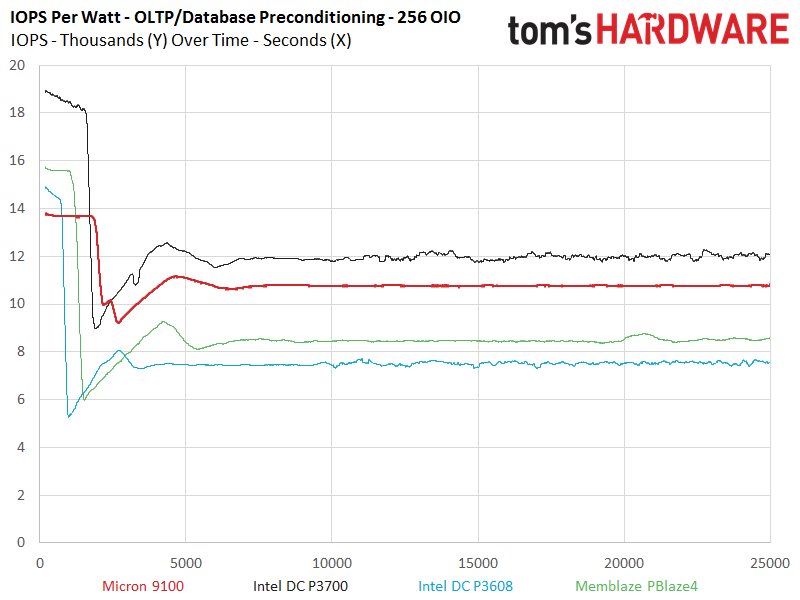

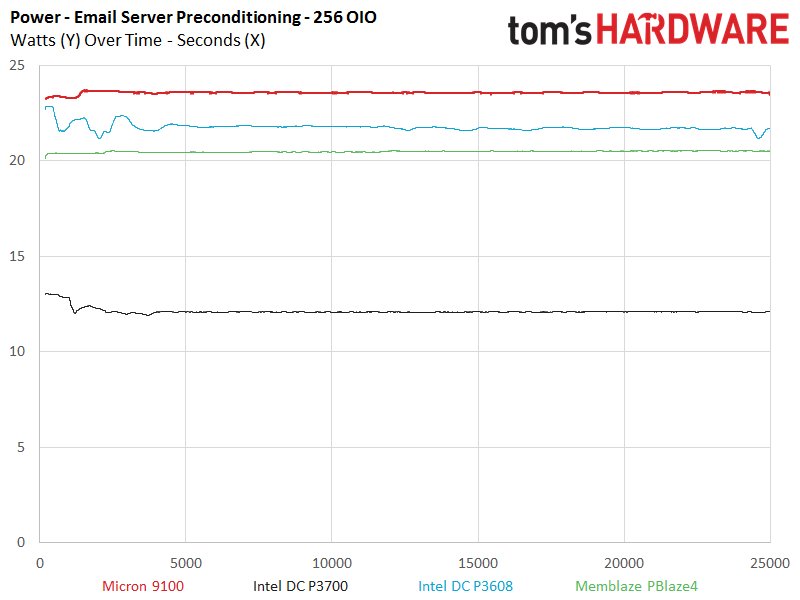

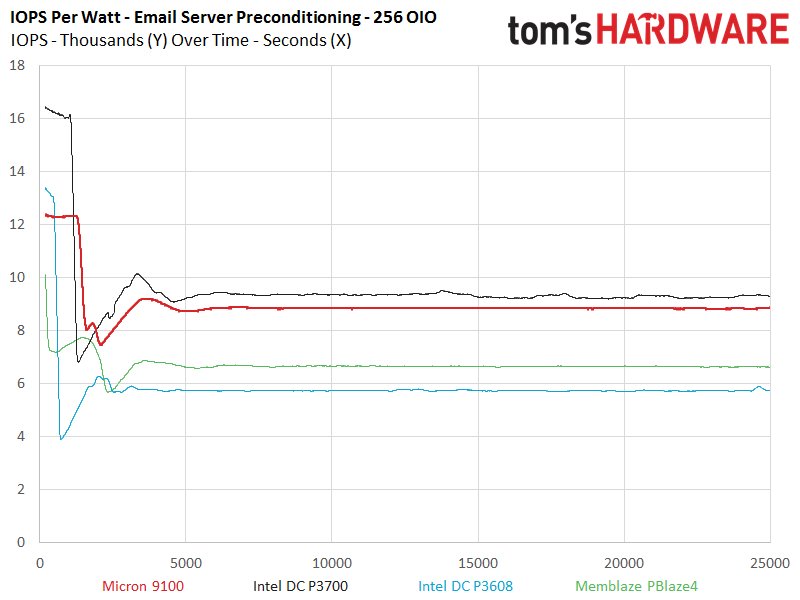

The DC P3700 is a power-sipping workhorse, and it takes the lead in efficiency metrics again, but the 9100 isn't far behind.
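As a rough illustration of how a drive can lead in efficiency without leading in raw throughput, the sketch below assumes efficiency is expressed as IOPS per watt of average power draw. The function name, drive labels, and numbers are hypothetical and are not taken from the charts.

```python
def iops_per_watt(iops: float, average_power_w: float) -> float:
    """Return throughput per watt; assumes power is the average draw during the test."""
    if average_power_w <= 0:
        raise ValueError("average_power_w must be positive")
    return iops / average_power_w


# Hypothetical drives: the faster one draws more power and ends up less efficient.
drives = {
    "faster drive": (200_000, 20.0),
    "power-sipping drive": (150_000, 12.0),
}
for name, (iops, watts) in drives.items():
    print(f"{name}: {iops_per_watt(iops, watts):,.0f} IOPS per watt")
```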

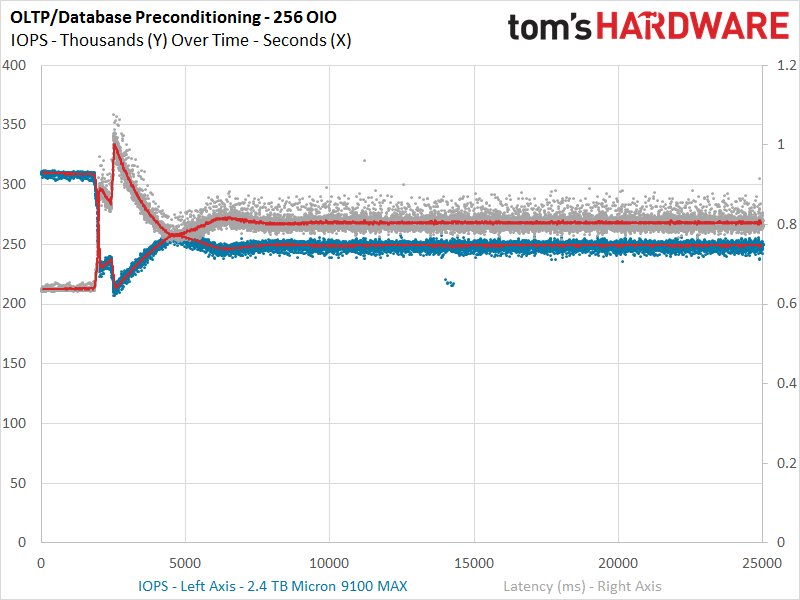

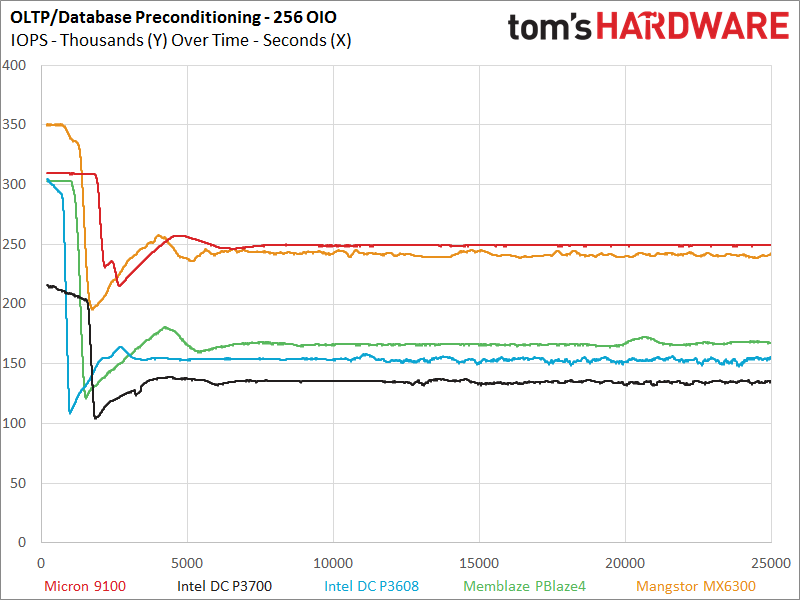

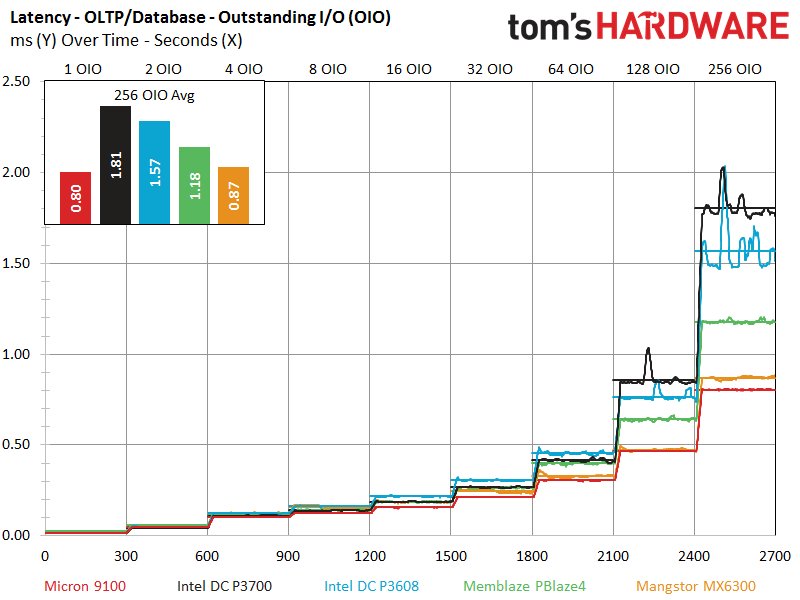

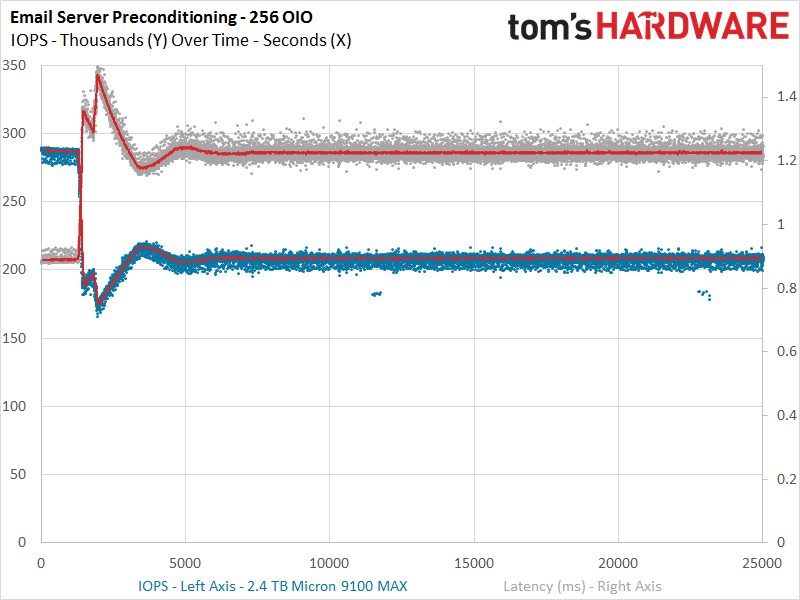

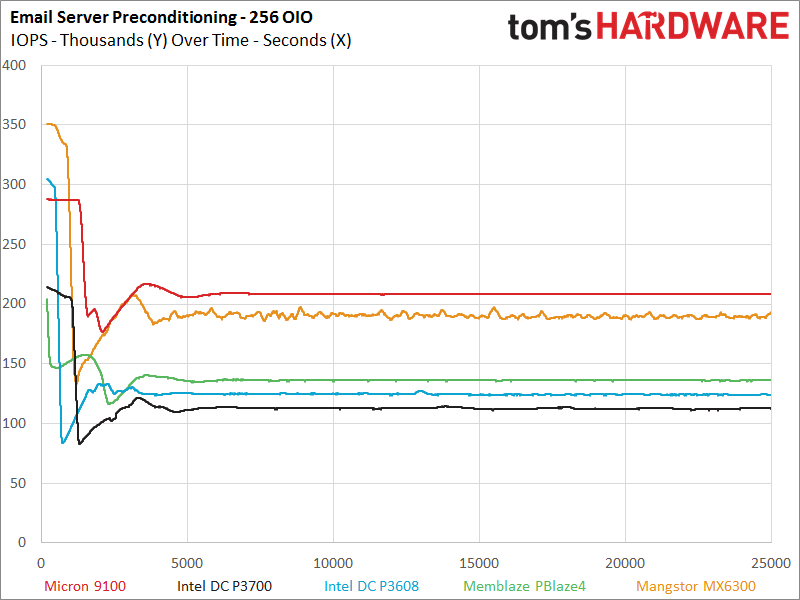

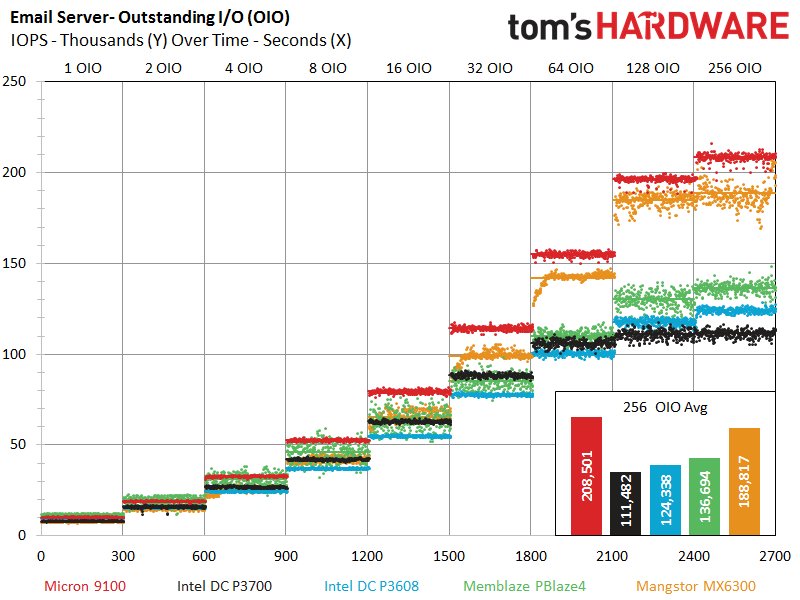

The combined preconditioning chart gives us an indication that the 9100 is about to take off again, and the gap widens as it outpaces the MX6300 convincingly at 256 OIO. Notice the performance chasm between the 9100 and the competition from 8 to 64 OIO, which is an excellent mid-range scaling characteristic. The light workload performance is well in hand, and the QoS metrics are well beyond the capabilities of the other challengers. Even the PBlaze4 is unable to keep pace, and it is a solid contender in the QoS realm.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

Flying-Q: If the flash packages are producing so much heat that they need such a massive heatsink, why is there only one? Surely the flash on the rear of the card would need heatsinking too, even just a flat plate would suffice?

Unolocogringo: It appears the heatsink is more for the voltage converters and controller chip to me.

Paul Alcorn (replying to Flying-Q's question about the single heatsink):

This is a standard configuration, though there are a few SSDs that have rear plates. Thermal pads were more common with larger-lithography NAND (20nm, 25nm, etc.) because it generated more heat. Newer, smaller NAND, such as the 16nm here, draws less power and generates less heat. In fact, it was very common for old client SSDs to have a thermal pad on the NAND, whereas now they are relatively rare. I think that they may be relying upon reducing the heat enough on one side to help wick heat from the other side, but with the latest SSDs the heatsink is primarily for the controller and DRAM. Also, it may just be convenient to add additional thermal pads to the NAND to keep the spacing for the heatsink even across the board.