Nvidia Titan RTX Review: Gaming, Training, Inferencing, Pro Viz, Oh My!


Performance Results: Deep Learning

The introduction of Turing saw Nvidia’s Tensor cores make their way from the data center-focused Volta architecture to a more general-purpose design with its beginnings in gaming PCs. However, the company’s quick follow-up with Turing-based Quadro cards and the inferencing-oriented Tesla T4 GPU made it pretty clear that DLSS wasn’t the only purpose of those cores.

Now that we have Titan RTX—a card with lots of memory—it’s possible to leverage TU102’s training and inferencing capabilities in a non-gaming context.

Before we get to the benchmarks, it’s important to understand how Turing, Volta, and Pascal size up in theory.

Titan Xp’s GP102 processor is more like GP104 than the larger GP100 in that it supports general-purpose IEEE 754 FP16 arithmetic at a fraction of its FP32 rate (1/64), rather than 2x. GP102 does not support mixed precision (FP16 inputs with FP32 accumulates) for training, though. This becomes an important distinction in our Titan Xp benchmarks.

Volta greatly improved on Pascal’s ability to accelerate deep learning workloads with Nvidia’s first-generation Tensor cores. These specialized cores performed fused multiply adds exclusively, multiplying a pair of FP16 matrices and adding their result to an FP16 or FP32 matrix. Titan V’s Tensor performance can be as high as 119.2 TFLOPS for FP16 inputs with FP32 accumulates, making it an adept option for training neural networks.
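To see why the accumulator's precision matters, here is a minimal sketch that emulates a dot product with FP16 inputs and either an FP16 or an FP32 accumulator. It uses Python's `struct` module to round values to half and single precision; the helper names (`to_fp16`, `to_fp32`, `dot`) and the all-ones test vectors are illustrative assumptions, not anything taken from Nvidia's hardware or libraries.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def dot(a, b, round_acc):
    """Dot product of FP16 inputs; round_acc sets the accumulator precision."""
    acc = 0.0
    for x, y in zip(a, b):
        prod = to_fp16(x) * to_fp16(y)  # multiply two FP16 inputs
        acc = round_acc(acc + prod)     # round the running sum after each add
    return acc

n = 4096
a = [1.0] * n
b = [1.0] * n

fp16_acc = dot(a, b, to_fp16)  # FP16 inputs, FP16 accumulate
fp32_acc = dot(a, b, to_fp32)  # FP16 inputs, FP32 accumulate (mixed precision)

print("FP16 accumulate:", fp16_acc)
print("FP32 accumulate:", fp32_acc)
```

With an FP16 accumulator the running sum stalls at 2048, because 2049 is not representable in half precision and rounds back down. The FP32 accumulator reaches the exact answer of 4096. Real training workloads are less contrived, but the same rounding behavior is why an FP32 accumulate is valuable.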

Although TU102 hosts fewer Tensor cores than Titan V’s GV100, a higher GPU Boost clock rate facilitates a theoretically better 130.5 TFLOPS of Tensor performance on Titan RTX. GeForce RTX 2080 Ti Founders Edition should be able to muster 113.8 TFLOPS. However, Nvidia artificially limits the desktop card’s FP16 with FP32 accumulates to half-rate.
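The peak Tensor figures quoted above can be reproduced with simple arithmetic. The sketch below assumes each Tensor core performs 64 fused multiply-adds per clock (two floating-point operations apiece) and uses Tensor core counts and GPU Boost clocks from Nvidia's public spec sheets; those inputs are assumptions that do not appear in this article, and the function name is hypothetical.

```python
def tensor_tflops(tensor_cores: int, boost_mhz: float) -> float:
    """Theoretical peak Tensor throughput in TFLOPS."""
    fma_per_core_per_clock = 64  # assumed: one 4x4x4 matrix FMA per clock
    flops_per_fma = 2            # one multiply plus one add
    flops = tensor_cores * fma_per_core_per_clock * flops_per_fma * boost_mhz * 1e6
    return flops / 1e12

# (Tensor cores, GPU Boost clock in MHz), assumed from public spec sheets
cards = {
    "Titan V": (640, 1455),
    "Titan RTX": (576, 1770),
    "GeForce RTX 2080 Ti FE": (544, 1635),
}

peaks = {name: tensor_tflops(*spec) for name, spec in cards.items()}
for name, tflops in peaks.items():
    print(f"{name}: {tflops:.1f} TFLOPS")

# Half-rate FP16 with FP32 accumulates on the GeForce card, per the text above
rtx_2080_ti_half_rate = peaks["GeForce RTX 2080 Ti FE"] / 2
print(f"GeForce RTX 2080 Ti FE, FP32 accumulate: {rtx_2080_ti_half_rate:.1f} TFLOPS")
```

Under these assumptions, the results round to 119.2, 130.5, and 113.8 TFLOPS, matching the numbers in the text. Halving the GeForce figure gives roughly 56.9 TFLOPS for FP16 with FP32 accumulates.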

Training Performance

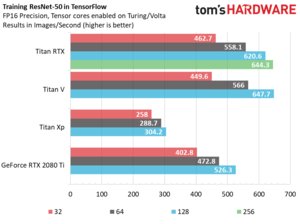

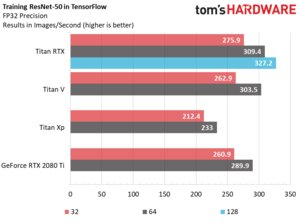

Our first set of benchmarks utilizes Nvidia’s TensorFlow Docker container to train a ResNet-50 convolutional neural network using ImageNet. We separately charted training performance using FP32 and FP16 (mixed) precision.


The numbers in each chart’s legend represent batch sizes. In brief, batch size determines the number of input images fed to the network concurrently. The larger the batch, the faster you’re able to get through all of ImageNet’s 14+ million images, given ample GPU performance, GPU memory, and system memory.
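As a rough illustration of how batch size relates to the work in one pass over the dataset, the sketch below counts training steps per epoch. The 14-million image count is the rounded figure from the paragraph above, and the batch sizes are illustrative values rather than the ones in the chart legends.

```python
import math

DATASET_IMAGES = 14_000_000  # rounded "14+ million" figure from the text

def steps_per_epoch(num_images: int, batch_size: int) -> int:
    """Number of batches needed to see every image once."""
    return math.ceil(num_images / batch_size)

# Illustrative batch sizes (assumed, not read from the charts)
for batch_size in (64, 128, 256):
    steps = steps_per_epoch(DATASET_IMAGES, batch_size)
    print(f"batch size {batch_size}: {steps:,} steps per epoch")
```

Doubling the batch size halves the number of steps, but each step only stays fast if the GPU has the compute and memory to process the larger batch.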

In both charts, Titan RTX can handle larger batches than the other cards thanks to its 24GB of GDDR6, a sizeable advantage over cards with less on-board memory. Of course, Titan V remains a formidable graphics card, and it’s able to trade blows with Titan RTX using FP16 and FP32.

GeForce RTX 2080 Ti’s half-rate mixed-precision mode causes it to shed quite a bit of performance compared to Titan RTX. Why isn’t the difference greater? The FP32 accumulate operation is only a small part of the training computation. Most of the matrix multiplication pipeline is the same on Titan RTX and GeForce RTX 2080 Ti, creating a closer contest than the theoretical specs would suggest. Switching to FP32 mode erases some of the discrepancy between Nvidia’s two TU102-based boards.

It’s also worth mentioning the mixed precision results for Titan Xp. Remember, GP102 doesn’t support FP16 inputs with FP32 accumulates, so it operates in native FP16 mode. The benchmarks do run successfully, but their accuracy is inferior to what Volta and Turing enable. To compare generational improvements at a like level of accuracy, you’d need to pit Titan RTX with mixed precision (644 images/sec) against Titan Xp in FP32 mode (233 images/sec).
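The like-for-like comparison works out as follows. This sketch uses only the two throughput figures quoted above, plus the rounded 14-million image count mentioned earlier to put the gap in terms of hours per pass; the variable names are illustrative.

```python
TITAN_RTX_MIXED_IMG_PER_SEC = 644.0  # Titan RTX, mixed precision
TITAN_XP_FP32_IMG_PER_SEC = 233.0    # Titan Xp, FP32
DATASET_IMAGES = 14_000_000          # rounded figure from the article

speedup = TITAN_RTX_MIXED_IMG_PER_SEC / TITAN_XP_FP32_IMG_PER_SEC

def hours_per_pass(images_per_sec: float) -> float:
    """Hours needed to process every image once at a steady rate."""
    return DATASET_IMAGES / images_per_sec / 3600

print(f"Generational speed-up: {speedup:.2f}x")
print(f"Titan RTX: {hours_per_pass(TITAN_RTX_MIXED_IMG_PER_SEC):.1f} hours per pass")
print(f"Titan Xp:  {hours_per_pass(TITAN_XP_FP32_IMG_PER_SEC):.1f} hours per pass")
```

That is a speed-up of roughly 2.8x, assuming both cards sustain their measured rates.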

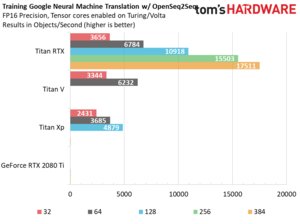

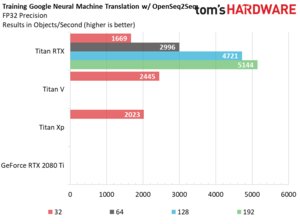

Next, we train the Google Neural Machine Translation recurrent neural network using the OpenSeq2Seq toolkit, again in TensorFlow.

Specifying FP32 precision again lets Titan RTX show off the advantage of 24GB of memory. Titan V and Xp started displaying out-of-memory errors after successful runs with batch sizes of 32.

Enabling FP16 precision in the dataset’s training parameter script allows the 12GB cards to accommodate larger batches. However, they’re both overwhelmed by Titan RTX as we push batches of 384 through its TU102.

Inferencing Performance

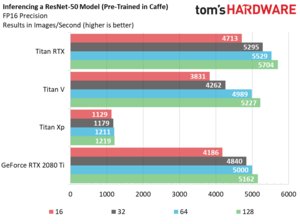

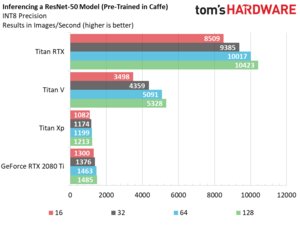

Before we isolate the performance of Turing’s new INT8 mode, we take a pass at inferencing on a trained ResNet-50 model with TensorFlow.

Across the board, Titan RTX outperforms Titan V (as does GeForce RTX 2080 Ti).

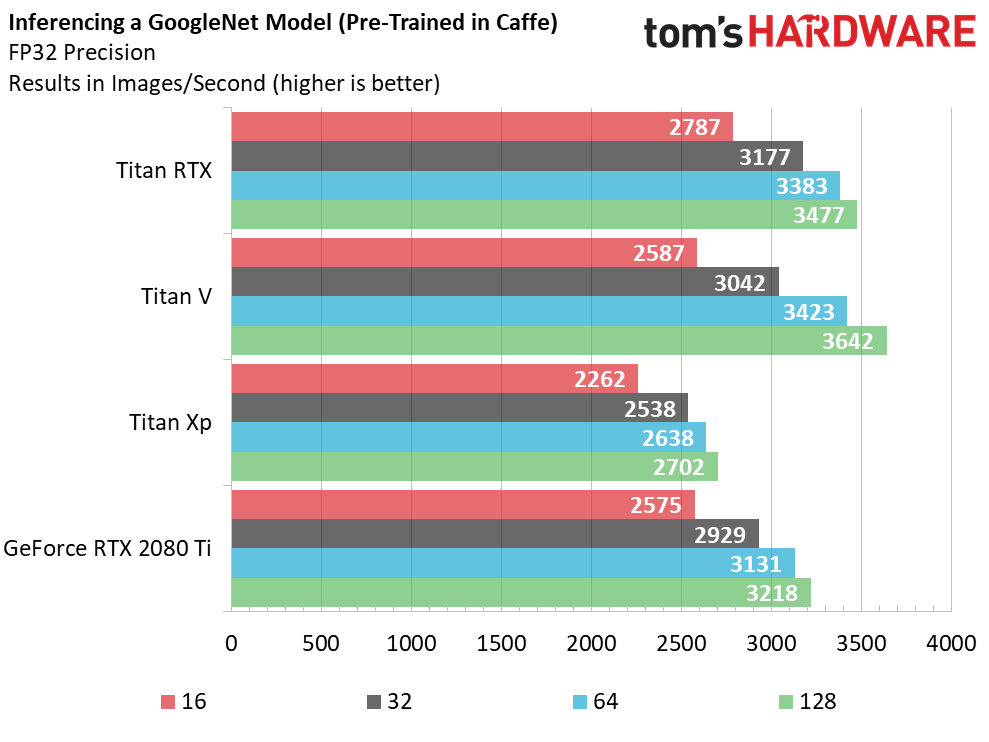

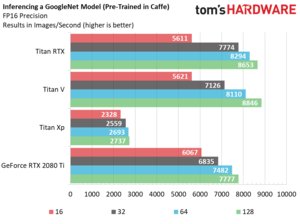

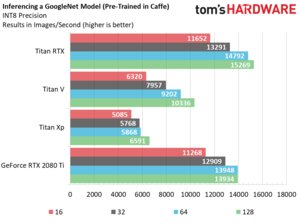

Nvidia recommends inferencing in TensorRT, though, which supports Turing GPUs, CUDA 10, and the Ubuntu 18.04 environment we’re testing under. Our first set of results comes from inferencing a GoogleNet model pre-trained in Caffe.

With the FP32 numbers serving as a baseline, Titan RTX’s speed-ups thanks to FP16 and INT8 modes are significant. GeForce RTX 2080 Ti benefits similarly.

Titan V’s INT8 rate is ½ of its peak FP16 throughput, so although the card does see improvements versus FP16 mode, they’re not as pronounced.

Titan Xp’s 48.8 TOPS of INT8 performance proves useful in inferencing workloads. With an FP16 rate that’s 1/64 of FP32 throughput, we’re not surprised to see FP16 precision only barely faster than the FP32 result.
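The theoretical ratios behind these observations can be sketched from the figures already quoted. One assumption is added and labeled in the code: GP102's INT8 rate is taken to be four times its FP32 rate, which is how the FP32 and FP16 numbers for Titan Xp are derived here. These are peak rates only, not measured results.

```python
# Titan V: figures from the article
TITAN_V_FP16_TENSOR_TFLOPS = 119.2
titan_v_int8_tops = TITAN_V_FP16_TENSOR_TFLOPS * 0.5  # INT8 is half of peak FP16

# Titan Xp: INT8 figure from the article
TITAN_XP_INT8_TOPS = 48.8
titan_xp_fp32_tflops = TITAN_XP_INT8_TOPS / 4    # assumption: INT8 = 4x FP32 on GP102
titan_xp_fp16_tflops = titan_xp_fp32_tflops / 64  # FP16 is 1/64 of FP32

titan_v_int8_vs_fp16 = titan_v_int8_tops / TITAN_V_FP16_TENSOR_TFLOPS
titan_xp_int8_vs_fp16 = TITAN_XP_INT8_TOPS / titan_xp_fp16_tflops

print(f"Titan V  INT8: {titan_v_int8_tops:.1f} TOPS ({titan_v_int8_vs_fp16:.1f}x its FP16 peak)")
print(f"Titan Xp FP32: {titan_xp_fp32_tflops:.1f} TFLOPS (derived)")
print(f"Titan Xp FP16: {titan_xp_fp16_tflops:.2f} TFLOPS (derived)")
print(f"Titan Xp INT8: {TITAN_XP_INT8_TOPS:.1f} TOPS ({titan_xp_int8_vs_fp16:.0f}x its FP16 peak)")
```

On paper, INT8 gives Titan Xp a far larger step up from its native FP16 rate than it gives Titan V, whose FP16 Tensor throughput is already high.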

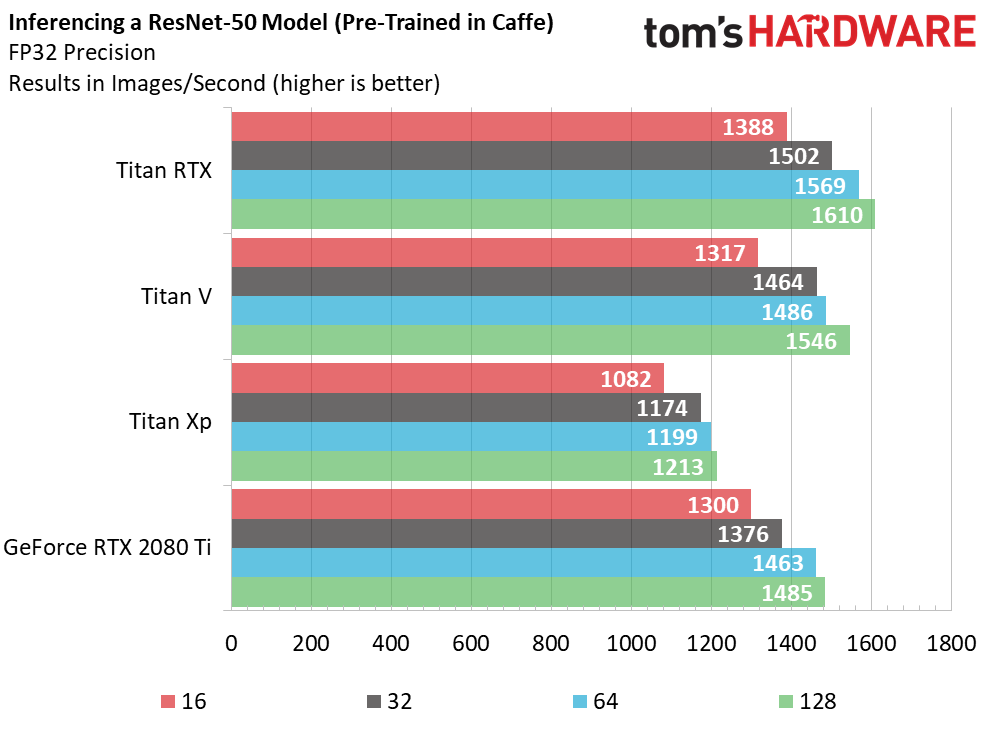

Inferencing a ResNet-50 model trained in Caffe demonstrates similar trends. That is, Titan V doesn’t scale as well using INT8 precision, while Titan Xp enjoys a big speed-up from INT8 compared to its FP16 performance.

TU102, however, dominates across the board. Titan RTX turns in the best benchmark numbers, followed by GeForce RTX 2080 Ti. The desktop card hangs tight with Titan RTX, achieving more than 90% of its performance through each change in precision.


AgentLozen This is a horrible video card for gaming at this price point but when you paint this card in the light of workstation graphics, the price starts to become more understandable.

Nvidia should have given this a Quadro designation so that there is no confusion what this thing is meant for.

bloodroses 21719532 said: This is a horrible video card for gaming at this price point but when you paint this card in the light of workstation graphics, the price starts to become more understandable.

Nvidia should have given this a Quadro designation so that there is no confusion what this thing is meant for.

True, but the 'Titan' designation was more so designated for super computing, not gaming. They just happen to game well. Quadro is designed for CAD uses, with ECC VRAM and driver support being the big difference over a Titan. There is quite a bit of crossover that does happen each generation though, to where you can sometimes 'hack' a Quadro driver onto a Titan.

https://www.reddit.com/r/nvidia/comments/a2vxb9/differences_between_the_titan_rtx_and_quadro_rtx/

madks Is it possible to put more training benchmarks? Especially for Recurrent Neural Networks (RNN). There are many forecasting models for weather, stock market etc. And they usually fit in less than 4GB of vram.

Inference is less important, because a model could be deployed on a machine without a GPU or even an embedded device.

mdd1963 21720515 said: Just buy it!!!

Would not buy it at half of its cost either, so...

:)

The Tom's summary sounds like Nvidia paid for their trip to Bangkok and gave them 4 cards to review....plus gave $4k 'expense money' :)

alextheblue So the Titan RTX has roughly half the FP64 performance of the Vega VII. The same Vega VII that Tom's had a news article (that was NEVER CORRECTED) that bashed it for "shipping without double precision" and then later erroneously listed the FP64 rate at half the actual rate? Nice to know.

https://www.tomshardware.com/news/amd-radeon-vii-double-precision-disabled,38437.html

There's a link to the bad news article, for posterity.