Nvidia Titan RTX Review: Gaming, Training, Inferencing, Pro Viz, Oh My!


Conclusion

Reviewing Titan RTX is daunting for the scope of its capabilities, and yet the testing is largely an exercise in exhibition. After all, if you can afford a $2,500 graphics card, you already know what you’re going to do with it. In some cases, that lofty price tag might actually represent savings to customers who were previously looking at a much more expensive Quadro card.

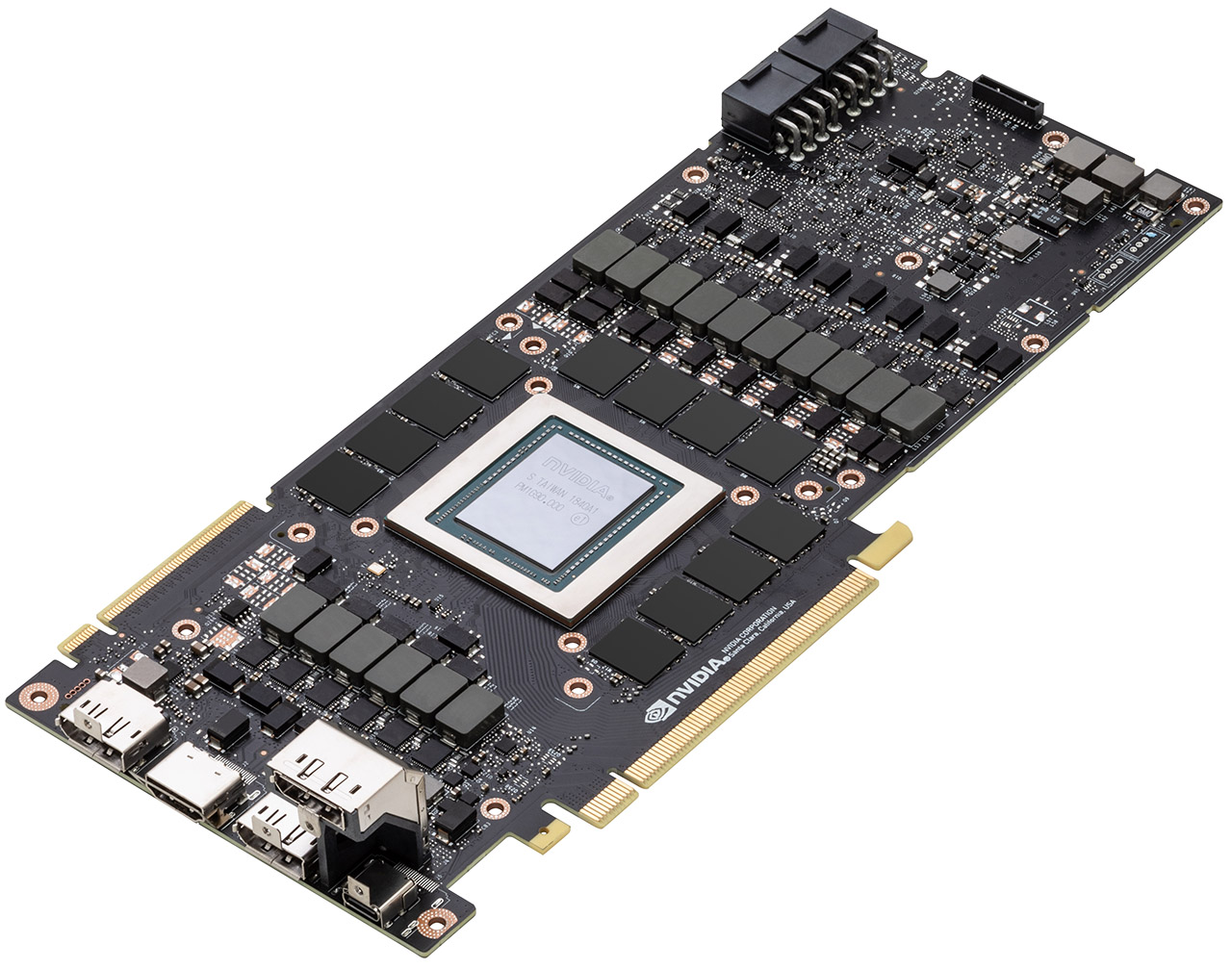

Physically, Titan RTX is almost identical to GeForce RTX 2080 Ti. Its soft golden color is unique, of course. And the model designator between its two axial fans correctly identifies the card. But its dimensions, weight, and even PCB are the same. Nvidia takes advantage of the overbuilt board design and cooler to enable a fully functional TU102 processor and a twelfth memory IC, pushing Titan RTX’s TDP to 280W. That extra bit of headroom allows this card to behave exceptionally well, even under the duress of FurMark. Whereas Titan V shows the limitations of its blower-style cooler under a 250W ceiling, Titan RTX didn’t stumble once with peaks as high as 300W.

Titan RTX also exhibited its prowess in projects able to overwhelm the 11GB and 12GB of memory on cards like GeForce RTX 2080 Ti and Titan Xp/V. The first large-geometry OctaneRender 4 scene that Nvidia sent over for testing simply failed on the older Titans. It had to be pared down before our comparison hardware could complete the render task. Further, stepping up to 24GB of GDDR6 allowed Titan RTX to train networks using larger batches than the cards with less memory, speeding up the time it takes to work through inputs.
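To make the batch-size point concrete, here is a rough back-of-the-envelope sketch in Python. It assumes training memory grows linearly with batch size, which is a simplification. The fixed and per-sample memory figures are hypothetical placeholders, not measurements from our testing; only the 11GB, 12GB, and 24GB capacities come from the cards discussed above.

```python
def max_batch_size(vram_gb, fixed_gb, per_sample_gb):
    """Largest batch that fits, assuming memory = fixed + batch * per_sample."""
    free_gb = vram_gb - fixed_gb
    if free_gb <= 0:
        return 0
    return int(free_gb // per_sample_gb)

# Hypothetical footprint: weights, optimizer state, and framework overhead.
FIXED_GB = 3.0
# Hypothetical activation memory needed per training sample.
PER_SAMPLE_GB = 0.125

# Memory capacities of the cards mentioned in the text.
CARDS = {"GeForce RTX 2080 Ti": 11, "Titan Xp/V": 12, "Titan RTX": 24}

batches = {
    name: max_batch_size(gb, FIXED_GB, PER_SAMPLE_GB)
    for name, gb in CARDS.items()
}

for name, batch in batches.items():
    print(f"{name}: up to {batch} samples per batch")
```

Under these made-up numbers, doubling the memory more than doubles the usable batch size, because the fixed overhead is paid only once. Real networks will behave differently depending on the framework and model.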

New support for INT8 and INT4 precision gives Titan RTX a big advantage in inferencing workloads able to leverage its hardware functionality, too. There, the card blows right past Nvidia’s still-potent (and more expensive) Titan V in our benchmarks. Where Titan RTX falls short is FP64 performance. In situations where double precision matters, Titan V remains the best FP64 card out there.
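For readers unfamiliar with reduced-precision inferencing, the following Python sketch shows the general idea behind INT8 quantization: floating-point values are mapped onto a small integer range using a scale factor. This is a generic symmetric scheme written for illustration only. The function names and sample weights are hypothetical, and it does not represent how Titan RTX's Tensor cores or any Nvidia library implements quantization.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero input, where any scale works.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the integers and the scale factor."""
    return [q * scale for q in quantized]

# Hypothetical weights chosen for illustration.
weights = [0.5, -1.27, 0.25, 0.0]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per value is at most half a quantization step.
errors = [abs(w - r) for w, r in zip(weights, restored)]
print(q, scale, max(errors))
```

Each value now occupies one byte instead of four, at the cost of a small rounding error. Hardware that operates on these integers natively can process more values per clock, which is the advantage the benchmarks reflect.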

But Titan V can’t hold a candle to Titan RTX when it comes to after-hours entertainment. Because Titan RTX is based on the same TU102 processor as GeForce RTX 2080 Ti, it benefits from the work Nvidia put in to optimizing for games. As a result, it’s the fastest gaming graphics card you can buy. Now, you’ll have to decide for yourself if a few frames per second, on average, are worth what you’d pay for a pair of GeForce RTX 2080 Tis joined by NVLink.

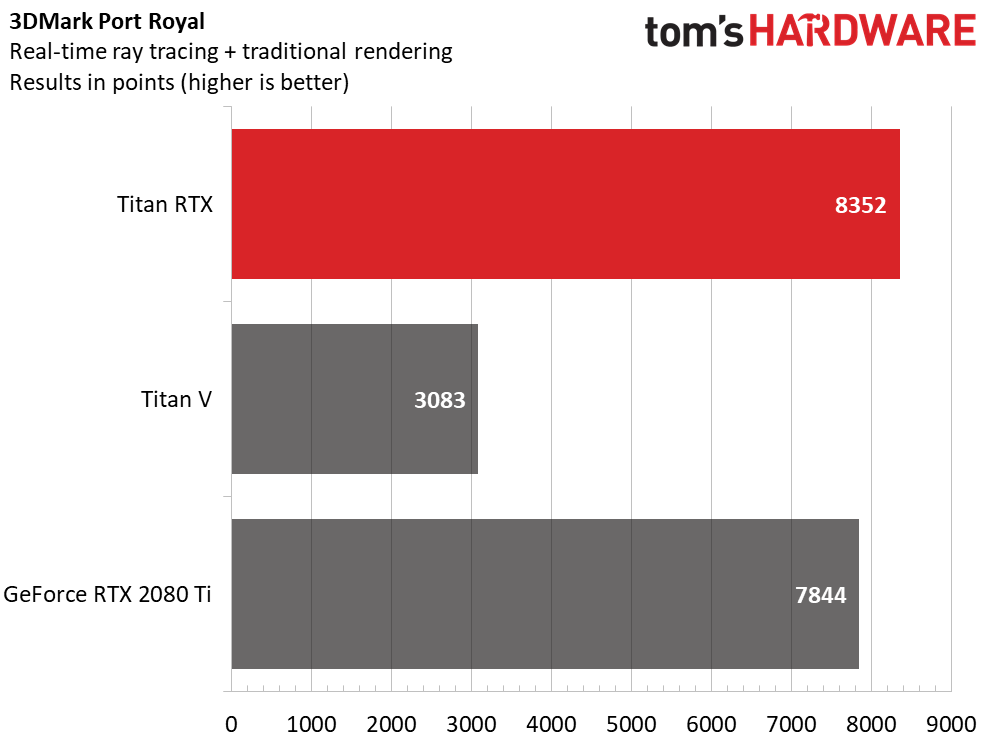

We didn’t even touch on Titan RTX’s RT cores, which accelerate BVH traversal and ray casting functions. Mostly, there isn’t anything to test outside of Battlefield V and 3DMark's Port Royal. That latter synthetic shows Titan RTX about 6% ahead of GeForce RTX 2080 Ti, with both Turing-based cards way ahead of Titan V. Nevertheless, Nvidia says it’s working with rendering software partners to exploit ray tracing acceleration through Microsoft DXR and OptiX, citing pre-release versions of Chaos Group’s Project Lavina, OTOY’s OctaneRender, and Autodesk’s Arnold GPU renderer.

It’ll be interesting to see how Nvidia enables Turing’s highest-profile fixed-function feature. In the meantime, a complete TU102 processor is an absolute monster for professional applications able to utilize its improved Tensor cores or massive 24GB of GDDR6 memory, including deep learning and professional visualization workloads. Affluent gamers are better off with an overclocked GeForce RTX 2080 Ti from one of Nvidia’s board partners. The Gigabyte Aorus GeForce RTX 2080 Ti Xtreme 11G we tested offered more than 95% of Titan RTX’s average frame rate. But if money is truly no object, we couldn’t fault you for chasing that extra 5%.


-

AgentLozen This is a horrible video card for gaming at this price point but when you paint this card in the light of workstation graphics, the price starts to become more understandable.

Nvidia should have given this a Quadro designation so that there is no confusion what this thing is meant for. -

bloodroses 21719532 said: This is a horrible video card for gaming at this price point but when you paint this card in the light of workstation graphics, the price starts to become more understandable.

Nvidia should have given this a Quadro designation so that there is no confusion what this thing is meant for.

True, but the 'Titan' designation was meant more for supercomputing, not gaming. The cards just happen to game well. Quadro is designed for CAD uses, with ECC VRAM and driver support being the big differences over a Titan. There is quite a bit of crossover each generation, though, to the point where you can sometimes 'hack' a Quadro driver onto a Titan.

https://www.reddit.com/r/nvidia/comments/a2vxb9/differences_between_the_titan_rtx_and_quadro_rtx/ -

madks Is it possible to put more training benchmarks? Especially for Recurrent Neural Networks (RNN). There are many forecasting models for weather, stock market etc. And they usually fit in less than 4GB of VRAM.

Inference is less important, because a model could be deployed on a machine without a GPU or even an embedded device. -

mdd1963 21720515 said: Just buy it!!!

Would not buy it at half of its cost either, so...

:)

The Tom's summary sounds like Nvidia paid for their trip to Bangkok and gave them 4 cards to review... plus gave $4k 'expense money' :)

-

alextheblue So the Titan RTX has roughly half the FP64 performance of the Vega VII. The same Vega VII that Tom's had a news article (that was NEVER CORRECTED) that bashed it for "shipping without double precision" and then later erroneously listed the FP64 rate at half the actual rate? Nice to know.

https://www.tomshardware.com/news/amd-radeon-vii-double-precision-disabled,38437.html

There's a link to the bad news article, for posterity.