Nvidia Titan RTX Review: Gaming, Training, Inferencing, Pro Viz, Oh My!


Temperatures and Fan Speeds

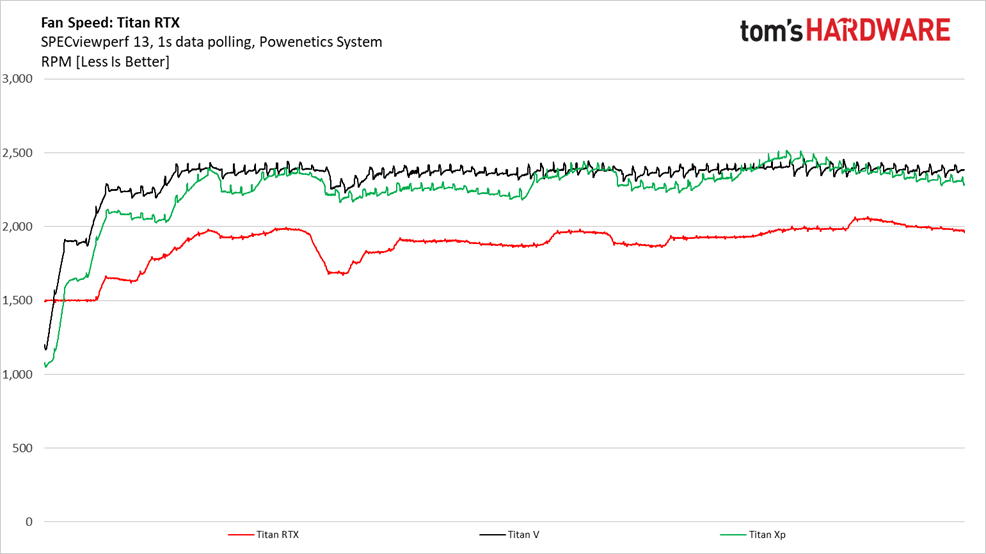

SPECviewperf 13

The SPECviewperf 13 benchmark doesn’t apply constant stress to Titan RTX, so fan speeds rise and fall throughout the run in response to thermal load. It’s clear, however, that Nvidia’s axial fans spin much slower than its blower-style coolers, even on a higher-power card.

In comparison, Titan V ramps up quickly to keep up with heat generated by this workstation-class benchmark. Titan Xp chases close behind.

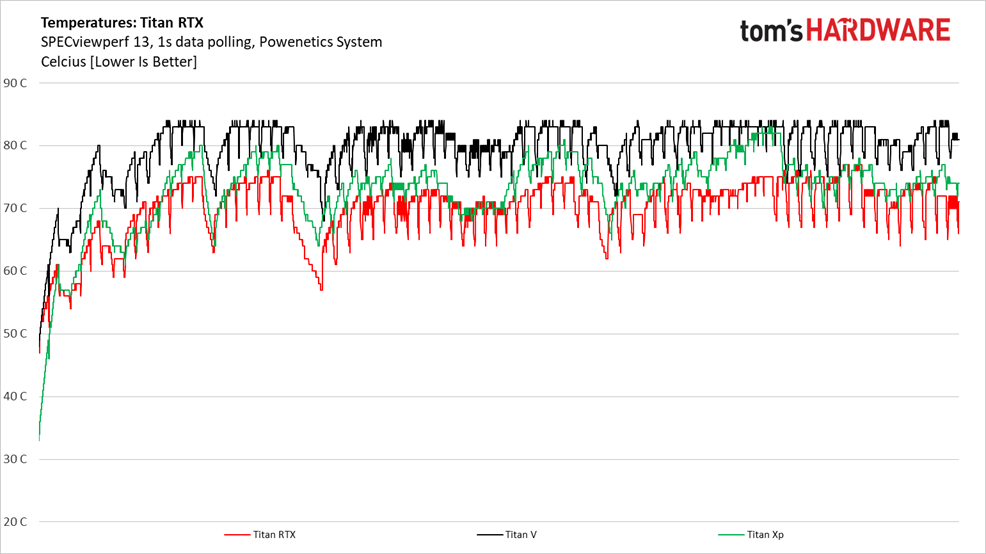

Temperatures fluctuate as SPECviewperf spins each dataset up and down. But as a general trend, the massive GV100 processor runs hottest, followed by Titan Xp’s GP102. TU102, despite its size, is kept relatively cool by dual axial fans and a beefy vapor chamber cooler.

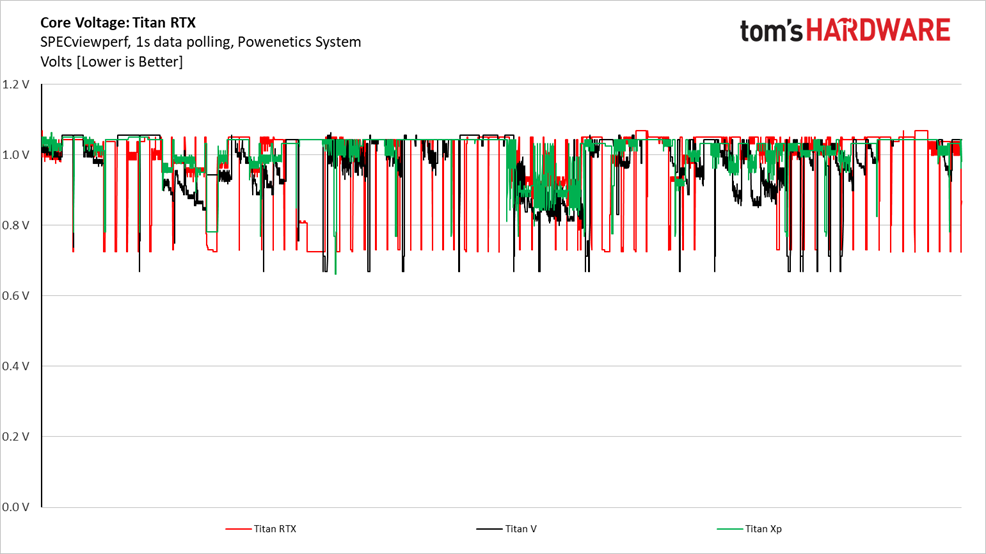

More so than Titan V or Titan Xp, Titan RTX spends a lot of time jumping between power states, depending on its load.

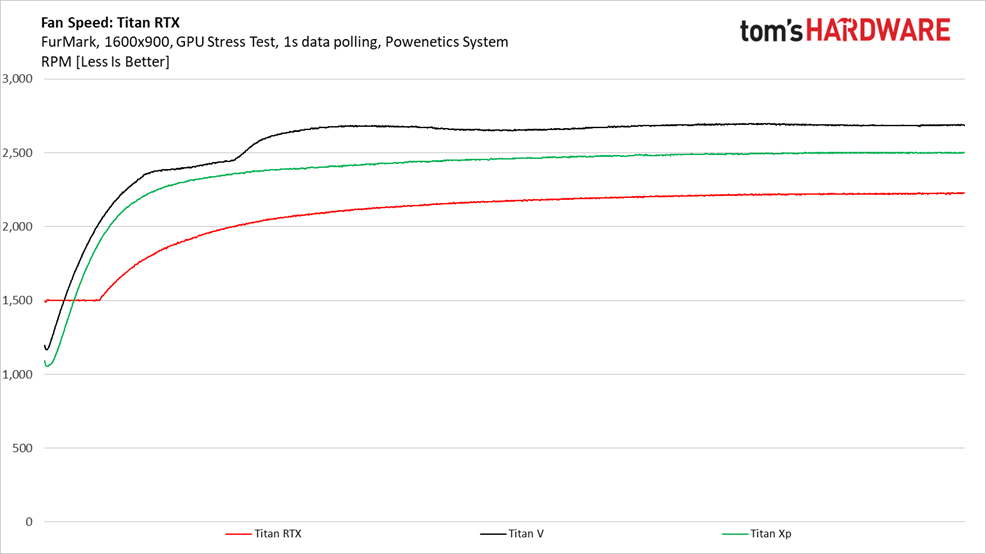

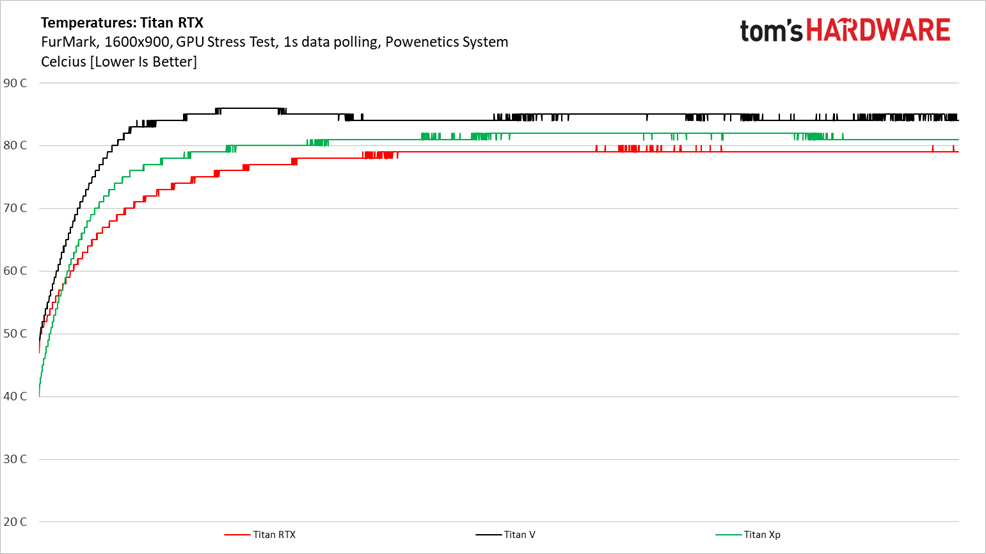

FurMark

FurMark’s intensity doesn’t faze Titan RTX. A smooth ramp up to about 2,250 RPM is much more graceful than Titan Xp’s sharper rise to 2,500 RPM. Titan V settles around 2,700 RPM as it struggles to keep GV100 cool enough.

Although Titan V never hits its 91°C thermal threshold, it still runs hotter than either GP102 or TU102. GP102's maximum GPU temperature is 94°C, while TU102 is rated at up to 89°C. With that said, Titan RTX peaks at a mere 80°C.

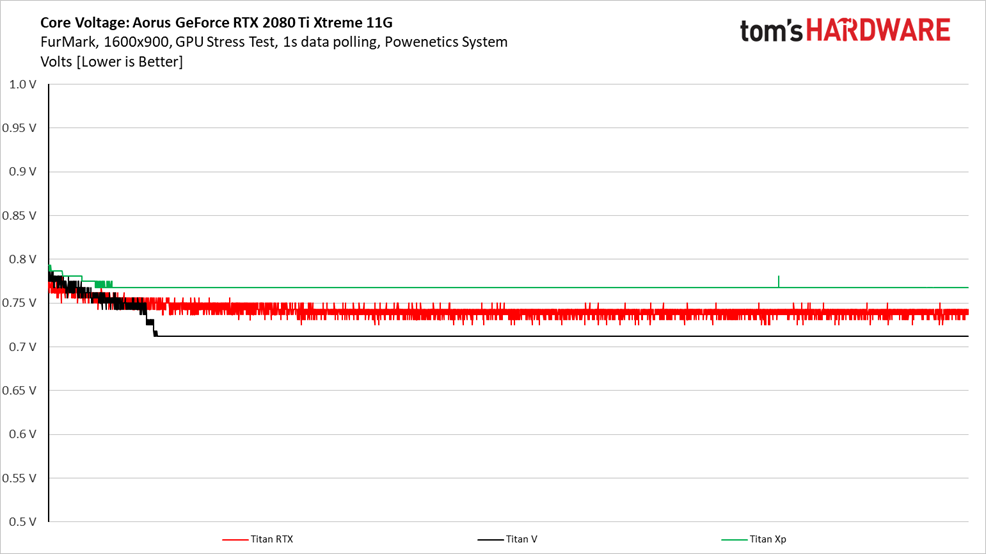

Titan V maintains a steady 1,200 MHz at its 0.712V, obeying its rated base frequency.


Meanwhile, Titan RTX oscillates between 1,365 and 1,380 MHz at roughly 0.737V, keeping its nose just above Nvidia’s 1,350 MHz base clock rate.

Titan Xp bounces around as well, but generally hovers in the 1,300 MHz range at 0.768V. That makes it the only Titan card to violate Nvidia’s base frequency specification (it shouldn’t drop below 1,404 MHz).
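The base-clock comparison across the three cards comes down to simple subtraction, sketched below. The base clocks are the ones quoted in the text. The observed values are approximations taken from the prose: Titan RTX's lower bound of 1,365 MHz, Titan V's steady 1,200 MHz, and an assumed 1,300 MHz for Titan Xp, since the text only says it hovers "in the 1,300 MHz range". The function names are illustrative and not part of any tool used in the review.

```python
# Base clocks (MHz) as quoted in the review text.
BASE_CLOCK_MHZ = {"Titan V": 1200, "Titan RTX": 1350, "Titan Xp": 1404}

# Approximate lowest sustained FurMark clocks (MHz) from the prose.
# Titan Xp's value is an assumption standing in for "the 1,300 MHz range".
OBSERVED_MIN_MHZ = {"Titan V": 1200, "Titan RTX": 1365, "Titan Xp": 1300}


def base_clock_margin(card: str) -> int:
    """Return observed clock minus base clock; negative means below base."""
    return OBSERVED_MIN_MHZ[card] - BASE_CLOCK_MHZ[card]


def cards_below_base() -> list[str]:
    """List the cards whose observed clock falls under the rated base clock."""
    return [card for card in BASE_CLOCK_MHZ if base_clock_margin(card) < 0]


if __name__ == "__main__":
    for card in BASE_CLOCK_MHZ:
        print(f"{card}: {base_clock_margin(card):+d} MHz relative to base")
    print("Below base:", cards_below_base())
```

With these inputs, Titan V sits exactly at its base clock, Titan RTX holds a small positive margin, and Titan Xp is the only card with a negative one.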


-

AgentLozen This is a horrible video card for gaming at this price point but when you paint this card in the light of workstation graphics, the price starts to become more understandable.

Nvidia should have given this a Quadro designation so that there is no confusion what this thing is meant for.

-

bloodroses 21719532 said: This is a horrible video card for gaming at this price point but when you paint this card in the light of workstation graphics, the price starts to become more understandable.

Nvidia should have given this a Quadro designation so that there is no confusion what this thing is meant for.

True, but the 'Titan' designation was intended more for supercomputing, not gaming. The cards just happen to game well. Quadro is designed for CAD uses, with ECC VRAM and driver support being the big differences over a Titan. There is quite a bit of crossover each generation though, to the point where you can sometimes 'hack' a Quadro driver onto a Titan.

https://www.reddit.com/r/nvidia/comments/a2vxb9/differences_between_the_titan_rtx_and_quadro_rtx/

-

madks Is it possible to put more training benchmarks? Especially for Recurrent Neural Networks (RNN). There are many forecasting models for weather, stock market etc. And they usually fit in less than 4GB of VRAM.

Inference is less important, because a model could be deployed on a machine without a GPU or even an embedded device.

-

mdd1963 21720515 said: Just buy it!!!

Would not buy it at half of its cost either, so...

:)

The Tom's summary sounds like Nvidia paid for their trip to Bangkok and gave them 4 cards to review....plus gave $4k 'expense money' :)

-

alextheblue So the Titan RTX has roughly half the FP64 performance of the Vega VII. The same Vega VII that Tom's had a news article (that was NEVER CORRECTED) that bashed it for "shipping without double precision" and then later erroneously listed the FP64 rate at half the actual rate? Nice to know.

https://www.tomshardware.com/news/amd-radeon-vii-double-precision-disabled,38437.html

There's a link to the bad news article, for posterity.