Update: Radeon R9 295X2 8 GB In CrossFire: Gaming At 4K

We spent our weekend benchmarking the sharp-looking iBuyPower Erebus loaded with a pair of Radeon R9 295X2 graphics cards. Do the new boards fare better than the quad-GPU configurations we've tested before, or should you stick to fewer cards in CrossFire?

Results: Grid 2

Aside from Battlefield 4, Grid 2 is the other game that previously benchmarked poorly, demonstrating negative scaling. The original diagnosis was that this typically platform-bound title was maxed out by a pair of Hawaii GPUs, and that the overhead of two more hurt performance. Again, a spiky frame-rate-over-time graph seemed to corroborate that.

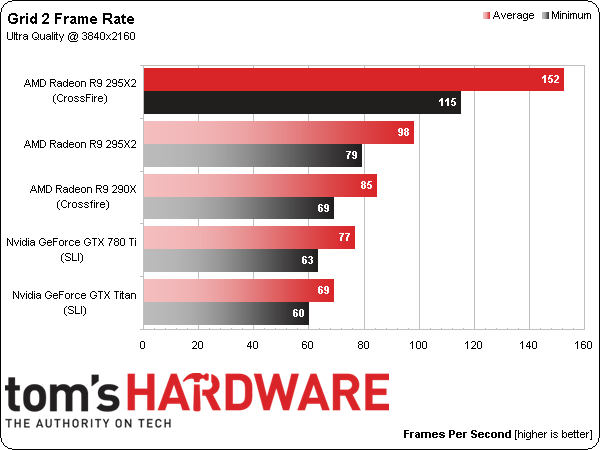

The same troubleshooting that helped knock the Battlefield 4 numbers into line works here as well. FCAT shows the in-game benchmark averaging 152 FPS, while Fraps reports 156. Fraps counts frames that never make it to the display, and there are dropped frames observable in the FCAT output, so the gap checks out. Still, we end up with 55% scaling over two GPUs, and that’s not bad for a title often held back by processor and system memory performance.

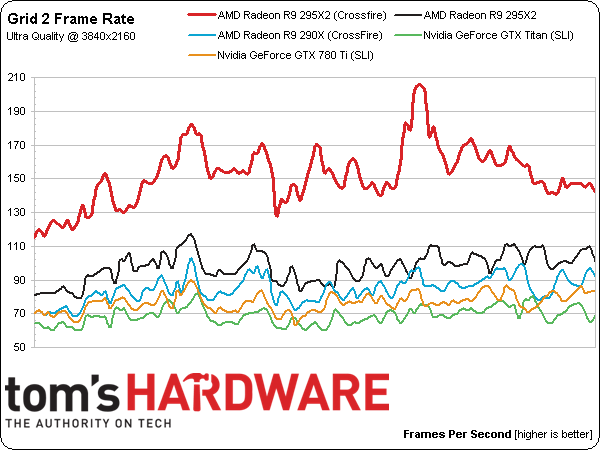

Even though frame rates peak above 200 FPS and dip under 120, Grid 2 runs smoothly at 3840x2160. This isn’t one of the games we’d worry about with regard to stuttering. Unfortunately, the second Radeon R9 295X2 also isn’t needed for an enjoyable experience, even with the Ultra preset applied. A pair of Hawaii GPUs is already capable of 98 FPS on average, after all.
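The 55% scaling figure follows from the two averages quoted in this section: 98 FPS for a pair of Hawaii GPUs and 152 FPS (the FCAT number) for four. A minimal sketch of that arithmetic is below; the function and variable names are ours, and we assume both averages come from the same FCAT-based measurement.

```python
def scaling_percent(baseline_fps: float, scaled_fps: float) -> float:
    """Percentage gain of scaled_fps over baseline_fps."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (scaled_fps / baseline_fps - 1.0) * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Averages quoted in the text (assumed to be measured the same way).
two_gpu_avg_fps = 98.0    # one Radeon R9 295X2 (two Hawaii GPUs)
four_gpu_avg_fps = 152.0  # two Radeon R9 295X2s, FCAT result

gain = scaling_percent(two_gpu_avg_fps, four_gpu_avg_fps)
print(f"Scaling from two to four GPUs: {gain:.0f}%")
print(
    f"Average frame time: {frame_time_ms(two_gpu_avg_fps):.1f} ms "
    f"-> {frame_time_ms(four_gpu_avg_fps):.1f} ms"
)
```

Plugging in the Fraps figure of 156 FPS instead would give a slightly higher percentage, which is why it matters which tool's average is used on both sides of the comparison.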

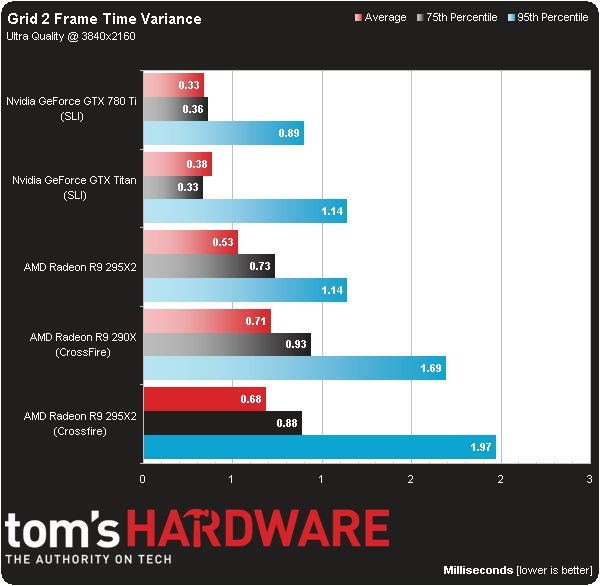

The performance of all five configurations is so high that even a last-place showing in the frame time variance chart is perfectly acceptable for two Radeon R9 295X2s. At worst, you’re looking at a 95th percentile figure under 2 ms.

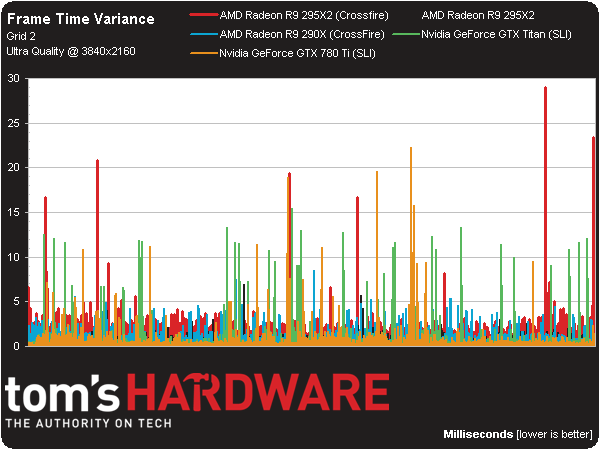

This is what that looks like on a line over time. There are some clear examples where the time between two frames spikes, but every combination of cards experiences that on occasion. The higher average variance comes from the underlying trend, which you can see as the red line consistently peeking up over the other colors along the bottom.
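For readers who want to reproduce a 95th percentile figure from their own captures, here is a rough sketch. It assumes frame time variance means the absolute change in frame time between consecutive frames, and it uses a simple nearest-rank percentile; the exact method behind the charts may differ. The sample frame times are made up for illustration and are not measurements from this test.

```python
import math


def frame_time_deltas(frame_times_ms):
    """Absolute change in frame time between consecutive frames, in ms."""
    return [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample that has at least
    pct percent of all samples at or below it."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical frame times in ms (illustrative only, not test data).
sample_frame_times = [6.5, 6.7, 6.4, 6.6, 8.1, 6.5, 6.6, 6.4, 6.7, 6.5]

deltas = frame_time_deltas(sample_frame_times)
p95 = percentile(deltas, 95)
print(f"95th percentile frame time variance: {p95:.2f} ms")
```

A single spike, like the 8.1 ms frame in the sample, dominates the high percentiles of a short capture. Over a full benchmark run, occasional spikes matter less than the underlying trend described above.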


redgarl I always said it, more than two cards takes too much resources to manage. Drivers are not there either. You are getting better results with simple Crossfire. Still, the way AMD corner Nvidia as the sole maker able to push 4k right now is amazing.

BigMack70 I personally don't think we'll see a day that 3+ GPU setups become even a tiny bit economical.

For that to happen, IMO, the time from one GPU release to the next would have to be so long that users needed more than 2x high end GPUs to handle games in the mean time.

As it is, there's really no gaming setup that can't be reasonably managed by a pair of high end graphics cards (Crysis back in 2007 is the only example I can think of when that wasn't the case). 3 or 4 cards will always just be for people chasing crazy benchmark scores.

frozentundra123456 I am not a great fan of mantle because of the low number of games that use it and its specificity to GCN hardware, but this would have been one of the best case scenarios for testing it with BF4.

I can't believe the reviewer just shrugged off the fact that the games obviously look cpu limited by just saying "well, we had the fastest cpu you can get" when they could have used mantle in BF4 to lessen cpu usage.

Matthew Posey The first non-bold paragraph says "even-thousand." Guessing that should be "eleven-thousand."

Haravikk How does a dual dual-GPU setup even operate under Crossfire? As I understand it the two GPUs on each board are essentially operating in Crossfire already, so is there then a second Crossfire layer combining the two cards on top of that, or has AMD tweaked Crossfire to be able to manage them as four separate GPUs? Either way it seems like a nightmare to manage, and not even close to being worth the $3,000 price tag, especially when I'm not really convinced that even a single of those $1,500 cards is really worth it to begin with; drool worthy, but even if I had a ton of disposable income I couldn't picture myself ever buying one.