Update: Radeon R9 295X2 8 GB In CrossFire: Gaming At 4K

We spent our weekend benchmarking the sharp-looking iBuyPower Erebus loaded with a pair of Radeon R9 295X2 graphics cards. Do the new boards fare better than the quad-GPU configurations we've tested before, or should you stick to fewer cards in CrossFire?

Results: Thief

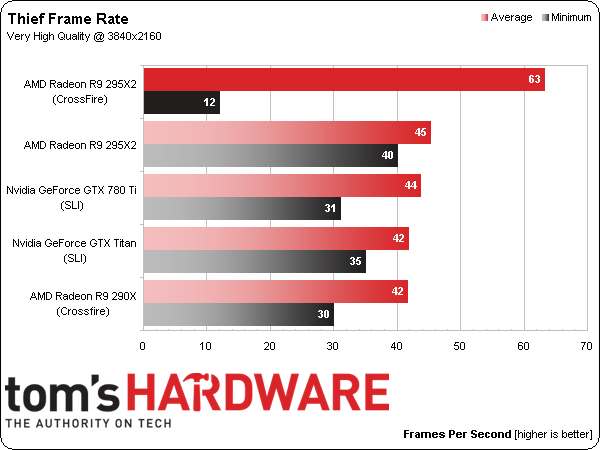

For the first time, we observe a significant difference between the FCAT- and Fraps-reported benchmark results in Thief's built-in test. FCAT reports a 62 FPS average, while Fraps spits back 77 FPS. But Fraps also tries to convince us that the game dips as low as 6 FPS and shoots as high as 1174 FPS, which surely throws the average out of whack. FCAT's numbers are far more believable: a minimum of 16 FPS and a peak under 90 FPS. That's scaling in the 38% range, which is not great.
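How each figure is computed explains why the extremes can look so odd. An average over a run is total frames divided by total elapsed time, while a minimum or maximum can hinge on very few frames; taken from a single frame, 1174 FPS would correspond to a frame time of roughly 0.85 ms. The minimal sketch below assumes a per-frame log of frame times in milliseconds. Real tools may instead derive their minimum and maximum from per-second frame counts, and `fps_summary` and `scaling_percent` are hypothetical helpers, not part of Fraps or FCAT.

```python
from typing import Sequence


def fps_summary(frame_times_ms: Sequence[float]) -> dict[str, float]:
    """Summarize a per-frame log given in milliseconds.

    Assumes every frame time is positive; min/max here are per-frame
    (instantaneous) rates, which is an assumption, not any tool's method.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame")
    total_s = sum(frame_times_ms) / 1000.0
    return {
        # Average FPS: frames rendered divided by total elapsed seconds.
        "avg_fps": len(frame_times_ms) / total_s,
        # Instantaneous rates come from single frames, so one outlier sets each.
        "min_fps": 1000.0 / max(frame_times_ms),
        "max_fps": 1000.0 / min(frame_times_ms),
    }


def scaling_percent(multi_gpu_fps: float, baseline_fps: float) -> float:
    """Percentage gain of a multi-GPU result over a baseline result."""
    return (multi_gpu_fps / baseline_fps - 1.0) * 100.0
```

For example, a hypothetical log of two 20 ms frames and one 0.5 ms frame averages about 74 FPS, yet its reported maximum jumps to 2000 FPS because of that single short frame.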

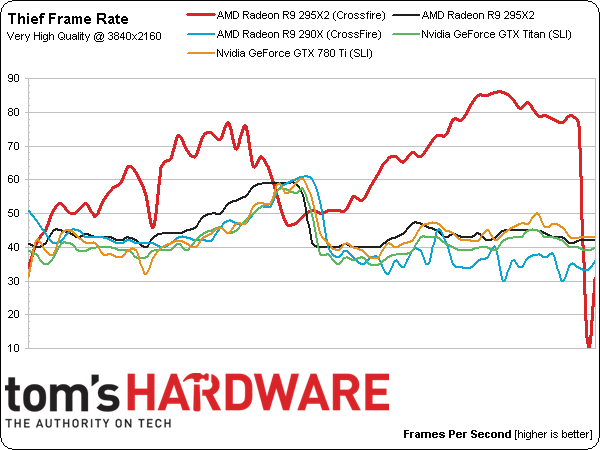

What's up with the big difference between FCAT and Fraps in this title? Using four GPUs, the Thief benchmark behaves strangely: it starts, chops through a few seconds, and then spits out rendered frames faster than real time until it catches up with where the action is supposed to be. AMD suggested to us that this could be caused by the game compiling thousands of shaders up front, which affects performance. If you play through the game for several minutes, the frame rate does even out a bit.
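Because Fraps timestamps frames as the game hands them off for display, a catch-up burst like this one should show up in its log as a run of unusually short frame times. Purely as an illustration, the sketch below scans a per-frame log for such runs; `find_bursts`, the 2 ms cutoff, and the five-frame minimum are hypothetical choices for this example, not values from our testing.

```python
from typing import Optional, Sequence


def find_bursts(frame_times_ms: Sequence[float], max_ms: float = 2.0,
                min_len: int = 5) -> list[tuple[int, int]]:
    """Return (start, end) index ranges, end exclusive, of runs of at least
    `min_len` consecutive frames that each took less than `max_ms`.

    The default thresholds are illustrative assumptions, not measured values.
    """
    bursts: list[tuple[int, int]] = []
    start: Optional[int] = None
    for i, t in enumerate(frame_times_ms):
        if t < max_ms:
            # Open a new run at the first short frame.
            if start is None:
                start = i
        else:
            # A normal-length frame ends any open run; keep it if long enough.
            if start is not None and i - start >= min_len:
                bursts.append((start, i))
            start = None
    # Close a run that lasts until the end of the log.
    if start is not None and len(frame_times_ms) - start >= min_len:
        bursts.append((start, len(frame_times_ms)))
    return bursts
```

Frames inside such a run raise an average measured where the game presents them, even though few of them may reach the screen in full, which may help explain why FCAT, working from captured display output, reports a lower figure here.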

The big drop in performance happens at the end of the test for no clear reason.

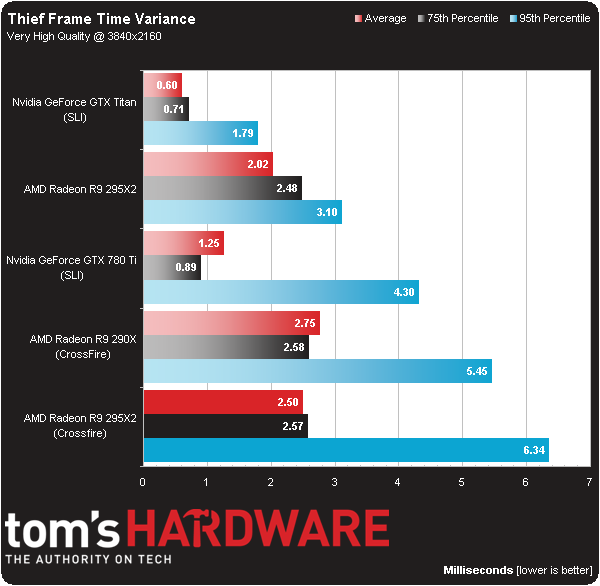

Two Radeon R9 295X2s in CrossFire again yield the highest worst-case frame time variance, though I don't normally consider the 6 ms range problematic. We do know, however, that results in the 5 ms range can be distinguished in blind testing, depending on the title.
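This section doesn't spell out how frame time variance is calculated. As an assumption for illustration only, the sketch below treats it as the absolute difference between each frame's time and the mean of the few frames before it, then reads a "worst case" off the high end of those deviations. The window size and the helper names `frame_time_deviations` and `percentile` are hypothetical.

```python
import math
from statistics import mean
from typing import Sequence


def frame_time_deviations(frame_times_ms: Sequence[float],
                          window: int = 4) -> list[float]:
    """Absolute difference between each frame and the mean of the `window`
    frames before it (an assumed definition of frame time variance)."""
    deviations = []
    for i in range(window, len(frame_times_ms)):
        recent = frame_times_ms[i - window:i]
        deviations.append(abs(frame_times_ms[i] - mean(recent)))
    return deviations


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]
```

With a log in hand, `max(frame_time_deviations(log))` or `percentile(deviations, 95)` gives a single worst-case figure that can be compared against the 5 ms range mentioned above.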

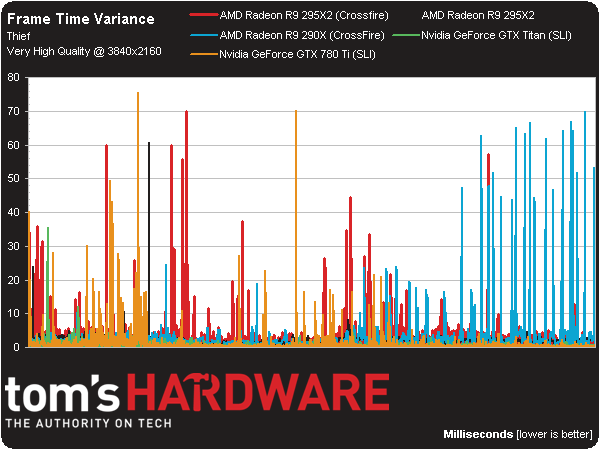

Big frame time variance spikes point to our biggest problem with the Thief benchmark: severe stuttering. As with Battlefield 4, the experience in Thief simply isn't acceptable. We confirmed the issue by playing the actual game, where we encountered the same stuttering.


redgarl I've always said it: more than two cards take too many resources to manage. The drivers aren't there either. You get better results with simple CrossFire. Still, the way AMD corners Nvidia as the sole maker able to push 4K right now is amazing.

BigMack70 I personally don't think we'll see a day when 3+ GPU setups become even a tiny bit economical.

For that to happen, IMO, the time from one GPU release to the next would have to be so long that users needed more than 2x high-end GPUs to handle games in the meantime.

As it is, there's really no gaming setup that can't be reasonably managed by a pair of high-end graphics cards (Crysis back in 2007 is the only example I can think of when that wasn't the case). 3 or 4 cards will always just be for people chasing crazy benchmark scores.

frozentundra123456 I am not a great fan of Mantle because of the low number of games that use it and its specificity to GCN hardware, but this would have been one of the best-case scenarios for testing it with BF4.

I can't believe the reviewer just shrugged off the fact that the games obviously look CPU-limited by saying "well, we had the fastest CPU you can get," when they could have used Mantle in BF4 to lessen CPU usage.

Matthew Posey The first non-bold paragraph says "even-thousand." Guessing that should be "eleven-thousand."

Haravikk How does a dual dual-GPU setup even operate under CrossFire? As I understand it, the two GPUs on each board are essentially operating in CrossFire already, so is there a second CrossFire layer combining the two cards on top of that, or has AMD tweaked CrossFire to manage them as four separate GPUs? Either way, it seems like a nightmare to manage, and not even close to being worth the $3,000 price tag. I'm not really convinced that even a single one of those $1,500 cards is worth it to begin with; drool-worthy, but even if I had a ton of disposable income, I couldn't picture myself ever buying one.