Intel Xeon E5-2600 v2: More Cores, Cache, And Better Efficiency

Intel recently launched its Xeon E5-2600 v2 CPU, based on the Ivy Bridge-EP architecture. We got a couple of workstation-specific -2687W v2 processors with eight cores and 25 MB of L3 cache each, and are comparing them to previous-generation -2687Ws.

All About Intel's Ivy Bridge-EP-Based Xeon CPUs

Intel has a lot of irons in its proverbial fire. The company is merrily hammering away on the mobile space with its Haswell and Silvermont architectures. It’s targeting everything from tablets to notebooks with very powerful integrated graphics, and doing a really good job, in my opinion. We have Bay Trail-based tablets in the lab that look promising, and I have a bunch of data from the Iris Pro 5200 graphics engine that hasn’t even been published yet.

However, the desktop portfolio is cooling off to the side after more than two years of fairly modest evolution. Because the Tom’s Hardware team spends so much time at work and play on stationary workstations, our disappointment with Intel’s efforts in this space is woven through much of the site’s content, even if Intel does sell the fastest CPUs. Without AMD competing at a high enough level, we’ve had very little reason to recommend upgrading your host processor since the Sandy Bridge days.

Not so in the server and workstation space. There, Intel leverages its manufacturing strength to build CPUs that deftly cut through professional applications and carve up our efficiency measurements. A few months back, we previewed the Xeon E5-2697 v2 in Intel's 12-Core Xeon With 30 MB Of L3: The New Mac Pro's CPU? and determined the chip to be more efficient than either the eight-core Xeon E5-2687W or Core i7-3970X.

And now I have a pair of Xeon E5-2687W v2 processors based on the same Ivy Bridge-EP design. Although they’re 150 W CPUs (the -2697 v2 is a 130 W part), the workstation-specific chips sport the same eight cores as the first-gen -2687W. Intel added shared L3 cache, though. It also raised the base and maximum Turbo Boost frequencies at a lower peak VID. And although both generations bear the same TDP, the newer chips simply draw less power.

Meet Ivy Bridge-EP

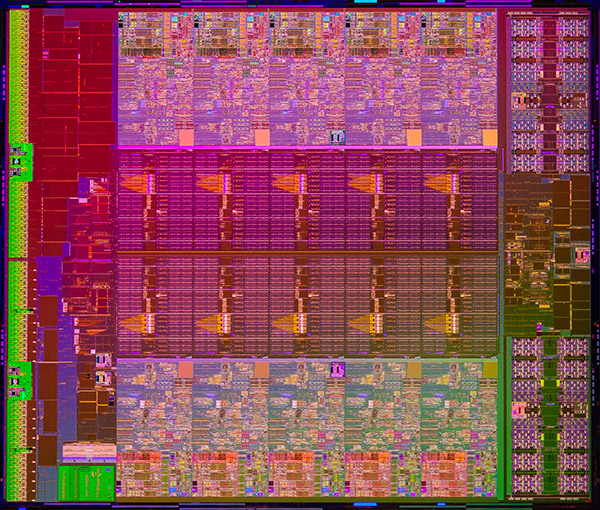

After today, we’ll have benchmarked the 12- and eight-core Xeon E5-2600-series CPUs. But Intel also sells four-, six-, and 10-core models. In fact, there are 18 total SKUs up and down the v2 stack. The diverse line-up is derived from three physical dies sporting six, 10, and 12 cores. As you can imagine, each set of resources is intentionally modular to help simplify the creation of these different designs.

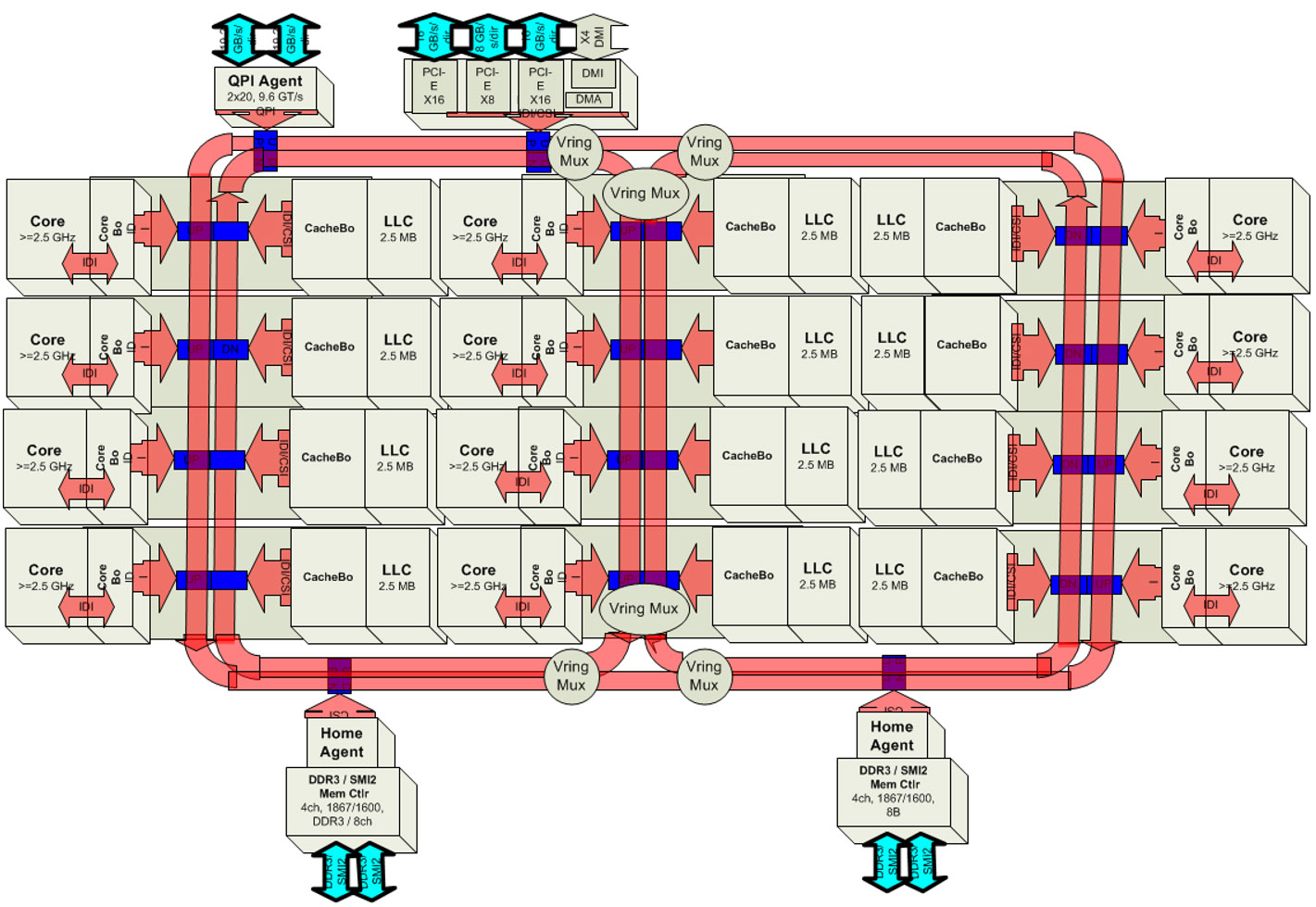

The most complex version, which is what we previewed in the Xeon E5-2697 story, employs three columns of building blocks, consisting of cores and 2.5 MB last-level cache slices, and four rows of those resources. Multiple ring buses facilitate communication across the die, and multiplexers ensure information gets to the stop where it’s needed. There’s a single QPI agent communicating at up to 9.6 GT/s (though existing models are capped at 8 GT/s), and 40 lanes of third-gen PCI Express connectivity split into two x16 links and an additional eight-lane link. The 12-core die utilizes two memory controllers, each responsible for two channels of up to DDR3-1866.

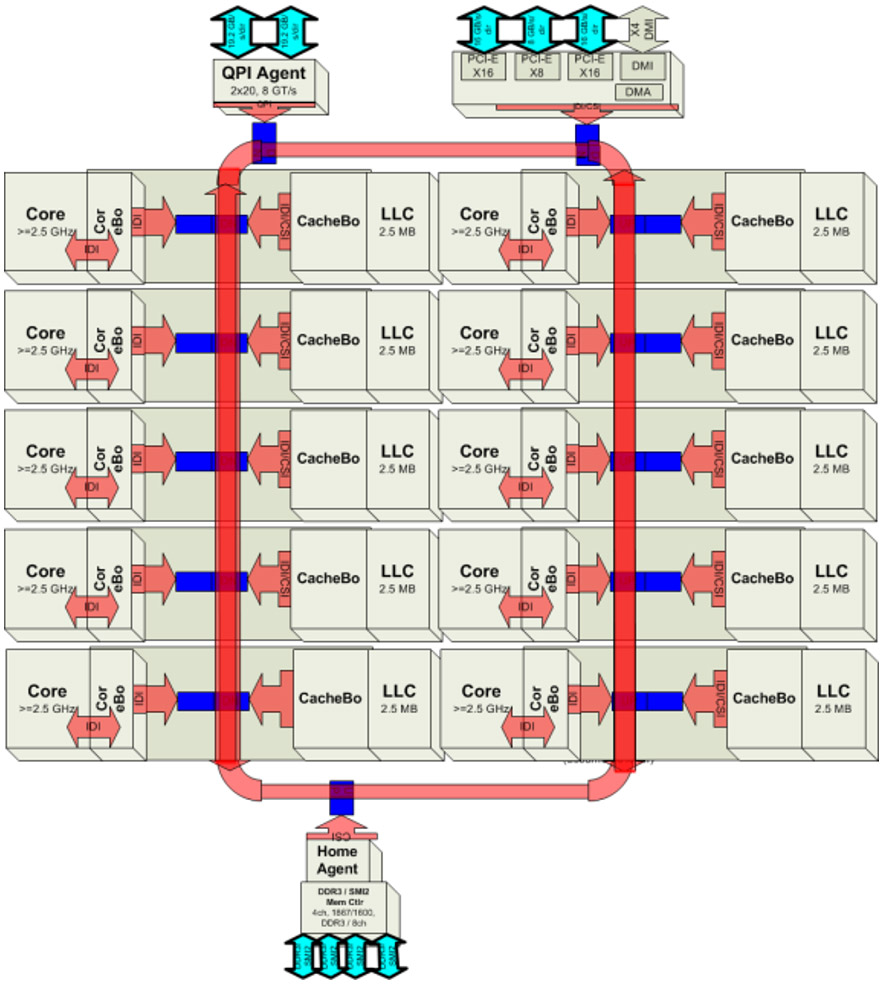

Stepping back to 10 cores reduces complexity quite a bit. The configuration shrinks to two columns, but is now five rows long. The QPI agent remains intact, but maxes out at 8 GT/s, while the PCI Express controller doesn’t change at all. Intel’s 10-core configuration sports a single memory controller that hosts all four channels. And there’s just one ring bus to shuttle data between the various stops, too.


Eight-core Xeon E5s are sourced from this same die, so a couple of the core/cache slices are disabled, leaving everything else functional. Incidentally, that’s how the Xeon E5-2687W v2 we’re testing today can include eight cores but also a 25 MB shared L3 cache—two cores are disabled, but the corresponding L3 remains active.

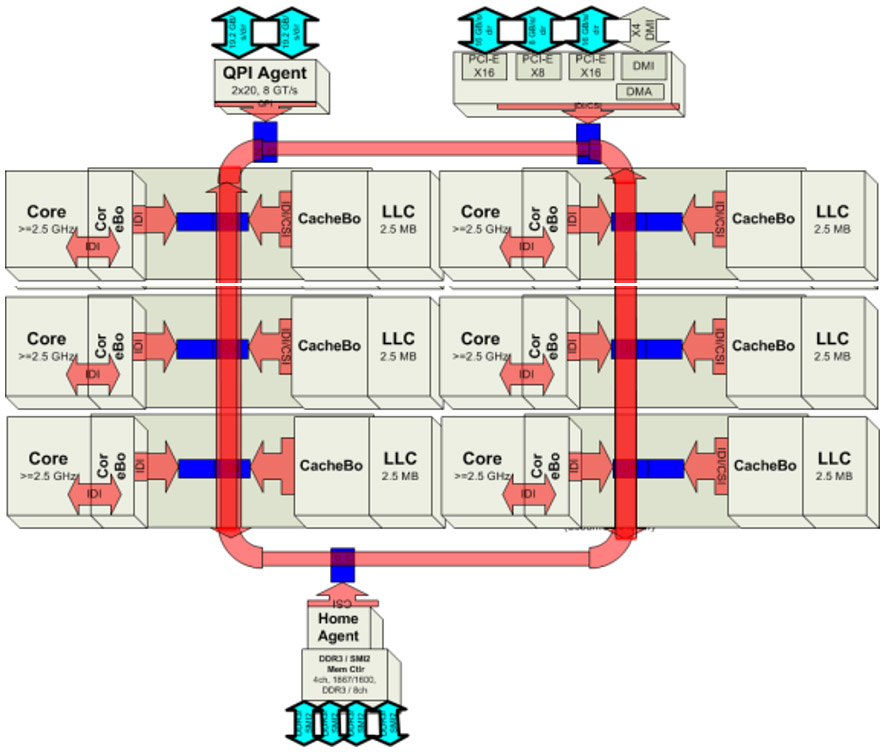

Once you drop to six cores, it’s cheaper to create a third die configuration than to create higher-volume parts from the pricey 10-core arrangement. Also a two-column part, the six-core CPU is three rows long with an 8 GT/s-capable QPI agent and the same PCI Express connectivity. Again, one memory controller is responsible for all four 64-bit DDR3 channels.
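The modular layout lends itself to a quick back-of-the-envelope check. The Python sketch below is mine, not Intel's. It assumes every die pairs each core with the same 2.5 MB LLC slice described for the 12-core die, and the names `die_resources` and `harvested_part` are purely illustrative. It multiplies columns by rows to get the slice count, then models a harvested part by disabling cores while optionally keeping their cache active.

```python
# LLC slice size quoted for the 12-core die; applying it to all dies is an assumption.
LLC_SLICE_MB = 2.5

# (columns, rows) of core/cache building blocks per physical die, as described in the text.
DIE_LAYOUTS = {
    "12-core": (3, 4),
    "10-core": (2, 5),
    "6-core": (2, 3),
}


def die_resources(columns, rows):
    """Return (cores, llc_mb) for a die built from a grid of core/LLC slices."""
    slices = columns * rows
    return slices, slices * LLC_SLICE_MB


def harvested_part(columns, rows, disabled_cores, disabled_llc_slices=0):
    """Return (cores, llc_mb) after fusing off some cores and, optionally, some cache slices."""
    cores, llc_mb = die_resources(columns, rows)
    return cores - disabled_cores, llc_mb - disabled_llc_slices * LLC_SLICE_MB


if __name__ == "__main__":
    for name, (columns, rows) in DIE_LAYOUTS.items():
        cores, llc_mb = die_resources(columns, rows)
        print(f"{name} die: {cores} cores, {llc_mb:g} MB LLC")

    # Eight cores with all ten cache slices left active (the -2687W v2 case).
    print(harvested_part(2, 5, disabled_cores=2))
    # Eight cores with the matching two cache slices disabled as well.
    print(harvested_part(2, 5, disabled_cores=2, disabled_llc_slices=2))
```

Under those assumptions, the 10-core die yields both flavors of eight-core part: one with the full 25 MB of L3 and one with 20 MB.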

Intel uses those three dies to create a stack broken up into Advanced, Standard, Basic, and Segment-Optimized models ranging from 60 up to 150 W, and base clock rates from 1.7 up to 3.5 GHz. From the top to the bottom, the entire portfolio is compatible with the same LGA 2011 (Socket R) interface as before. That means upgrading an existing server or workstation is as easy as updating the platform’s firmware. On our Intel W2600CR2 motherboard, we simply updated the firmware with the Sandy Bridge-EP-based Xeon E5-2687Ws still installed.

| Model | Cores | LLC | QPI | Memory | Base Clock | TDP | Price |
|---|---|---|---|---|---|---|---|
| Advanced | | | | | | | |
| Xeon E5-2690 v2 | 10 | 25 MB | 8 GT/s | DDR3-1866 | 3.0 GHz | 130 W | $2057 |
| Xeon E5-2680 v2 | 10 | 25 MB | 8 GT/s | DDR3-1866 | 2.8 GHz | 115 W | $1723 |
| Xeon E5-2670 v2 | 10 | 25 MB | 8 GT/s | DDR3-1866 | 2.5 GHz | 115 W | $1552 |
| Xeon E5-2660 v2 | 10 | 25 MB | 8 GT/s | DDR3-1866 | 2.2 GHz | 95 W | $1389 |
| Xeon E5-2650 v2 | 8 | 20 MB | 8 GT/s | DDR3-1866 | 2.6 GHz | 95 W | $1166 |
| Standard | | | | | | | |
| Xeon E5-2640 v2 | 8 | 20 MB | 7.2 GT/s | DDR3-1600 | 2.0 GHz | 95 W | $885 |
| Xeon E5-2630 v2 | 6 | 15 MB | 7.2 GT/s | DDR3-1600 | 2.6 GHz | 80 W | $612 |
| Xeon E5-2620 v2 | 6 | 15 MB | 7.2 GT/s | DDR3-1600 | 2.1 GHz | 80 W | $406 |
| Basic | | | | | | | |
| Xeon E5-2609 v2 | 4 | 10 MB | 6.4 GT/s | DDR3-1333 | 2.5 GHz | 80 W | $294 |
| Xeon E5-2603 v2 | 4 | 10 MB | 6.4 GT/s | DDR3-1333 | 1.8 GHz | 80 W | $202 |
| Segment-Optimized | | | | | | | |
| Xeon E5-2697 v2 | 12 | 30 MB | 8 GT/s | DDR3-1866 | 2.7 GHz | 130 W | $2614 |
| Xeon E5-2695 v2 | 12 | 30 MB | 8 GT/s | DDR3-1866 | 2.4 GHz | 115 W | $2336 |
| Xeon E5-2687W v2 | 8 | 25 MB | 8 GT/s | DDR3-1866 | 3.4 GHz | 150 W | $2108 |
| Xeon E5-2667 v2 | 8 | 25 MB | 8 GT/s | DDR3-1866 | 3.3 GHz | 130 W | $2057 |
| Xeon E5-2643 v2 | 6 | 25 MB | 8 GT/s | DDR3-1866 | 3.5 GHz | 130 W | $1552 |
| Xeon E5-2637 v2 | 4 | 15 MB | 8 GT/s | DDR3-1866 | 3.5 GHz | 130 W | $996 |
| Xeon E5-2650L v2 | 10 | 25 MB | 8 GT/s | DDR3-1600 | 1.7 GHz | 70 W | $1219 |
| Xeon E5-2630L v2 | 6 | 15 MB | 7.2 GT/s | DDR3-1600 | 2.4 GHz | 60 W | $612 |

CPUs in the Advanced bin are mostly 10-core models with 25 MB of L3, though there’s an eight-core CPU with 20 MB in there as well. They all feature 8 GT/s QPI links, Hyper-Threading and Turbo Boost support, and a memory controller capable of 1866 MT/s transfer rates.

The Standard stack is smaller, with one eight-core SKU boasting 20 MB of LLC and two six-core chips complemented by 15 MB. Intel’s QuickPath Interconnect is deliberately slowed to 7.2 GT/s, as is the quad-channel memory controller’s maximum speed (all three processors accommodate up to DDR3-1600 modules). Hyper-Threading and Turbo Boost are both retained, though.

Both members of the Basic segment are quad-core CPUs with 10 MB of shared L3. QPI performance is pared back to 6.4 GT/s, while the memory controller tops out at DDR3-1333. That’s still arguably plenty for the lower-power applications those processors will find themselves in, though Intel does deactivate Hyper-Threading and Turbo Boost, unfortunately.
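For anyone scripting a platform comparison, the three mainstream bins reduce to a small lookup table. This is a rough Python sketch of how I'd encode them. The `Segment` class and `logical_processors` helper are made-up names for illustration, and the only outside fact it leans on is that Hyper-Threading exposes two logical processors per physical core.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """Feature set shared by every SKU in one of the three mainstream bins."""
    qpi_gts: float          # QPI link speed in GT/s
    memory: str             # fastest supported memory data rate
    hyper_threading: bool
    turbo_boost: bool


# Values taken from the segment descriptions in the text.
SEGMENTS = {
    "Advanced": Segment(8.0, "DDR3-1866", True, True),
    "Standard": Segment(7.2, "DDR3-1600", True, True),
    "Basic": Segment(6.4, "DDR3-1333", False, False),
}


def logical_processors(cores, segment_name):
    """Threads the OS sees per socket: doubled only where Hyper-Threading is enabled."""
    segment = SEGMENTS[segment_name]
    return cores * (2 if segment.hyper_threading else 1)


if __name__ == "__main__":
    print(logical_processors(10, "Advanced"))  # 10-core Advanced part
    print(logical_processors(6, "Standard"))   # six-core Standard part
    print(logical_processors(4, "Basic"))      # quad-core Basic part, no Hyper-Threading
```

The Segment-Optimized parts are left out on purpose, since their features vary from model to model.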

Xeon E5-2687W v2: Bringing Out The Big Guns

Of course, Intel’s Xeon E5-2687W v2 doesn’t fit into any of those three categories. It’s a workstation-specific member of the Segment-Optimized line-up, purpose-built for roomy pedestal/4U enclosures where dissipating 2 x 150 W isn’t a problem, and a balance between parallelism and clock rate takes precedence over a larger number of lower-frequency cores.

Like the Xeon E5-2687W before it, -2687W v2 is an eight-core part. Its base clock rate increases from 3.1 GHz the generation prior up to 3.4 GHz, and the maximum Turbo Boost frequency similarly jumps from 3.8 to 4 GHz. An extra 5 MB of shared L3 cache typically won’t confer significant gains. However, you will see benchmark situations where it makes a difference.

Each of the -2687W v2’s QPI links operates at a full 8 GT/s. And the processor’s quad-channel memory controller supports 1866 MT/s data rates. In theory, that’s up to 59.7 GB/s per processor, though real-world throughput is always going to be lower.
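That 59.7 GB/s figure is easy to reproduce. Here is a minimal Python sketch, assuming four 64-bit DDR3 channels and counting a gigabyte as 10^9 bytes. The function name `peak_memory_bandwidth_gbs` is my own.

```python
def peak_memory_bandwidth_gbs(transfers_mts, channels=4, bus_width_bits=64):
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes) for one processor.

    transfers_mts  -- memory data rate in megatransfers per second (e.g. 1866)
    channels       -- number of memory channels (four on these Xeon E5s)
    bus_width_bits -- width of each channel (64 bits for DDR3)
    """
    bytes_per_transfer = bus_width_bits // 8
    return transfers_mts * 1e6 * bytes_per_transfer * channels / 1e9


if __name__ == "__main__":
    # Data rates for the Advanced, Standard, and Basic bins respectively.
    for rate in (1866, 1600, 1333):
        print(f"DDR3-{rate}: {peak_memory_bandwidth_gbs(rate):.1f} GB/s")
```

The same arithmetic puts the DDR3-1600 and DDR3-1333 parts at roughly 51.2 and 42.7 GB/s, which is the ceiling before any real-world overhead.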

Of course, the second-gen Xeon E5 is built using Intel’s Ivy Bridge architecture, so it gets the subtle tweaks introduced back in April 2012 alongside the company’s desktop Core CPUs, including a handful of adjustments to the core, cache, and memory controller that improve IPC throughput by a few percent compared to Sandy Bridge.

When you combine the architectural evolution, higher clock rates, and more shared L3 cache, you know what to expect going from Xeon E5-2687W to -2687W v2. But that’s not the whole story. When Intel made the switch from Sandy to Ivy Bridge, its emphasis was on transitioning from 32 to 22 nm manufacturing. The company does successfully push the Xeon E5 family’s performance story forward. However, it also cuts power consumption. That combination is great for boosting efficiency. So, we’re going to start by digging into the benchmarks, fold in power consumption, and then wrap with an energy comparison.
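To make the efficiency idea concrete: the energy a workstation burns on a job is its average power multiplied by the time the job takes. The Python sketch below shows that arithmetic. The wattages and runtimes in it are hypothetical placeholders I picked for illustration, not measurements from our testing, and `energy_wh` is an illustrative helper name.

```python
def energy_wh(avg_power_w, runtime_s):
    """Energy used by a workload in watt-hours: average power (W) x time (s) / 3600."""
    return avg_power_w * runtime_s / 3600.0


if __name__ == "__main__":
    # HYPOTHETICAL figures, chosen only to show the calculation.
    older_platform = energy_wh(avg_power_w=400, runtime_s=600)
    newer_platform = energy_wh(avg_power_w=360, runtime_s=540)

    print(f"Older platform: {older_platform:.1f} Wh")
    print(f"Newer platform: {newer_platform:.1f} Wh")
    print(f"Energy saved:   {1 - newer_platform / older_platform:.0%}")
```

A chip that finishes sooner and draws less power while doing it wins twice, which is why the energy totals can diverge more than either the performance or the power numbers alone.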


GL1zdA1 Does this mean, that the 12-core variant with 2 memory controllers will be a NUMA CPU, with cores having different latencies when accessing memory depending on which MC is near them?

Draven35 The Maya playblast test, as far as I can tell, is very single-threaded, just like the other 3d application preview tests I (we) use. This means it favors clock speed over memory bandwidth.

The Maya render test seems to be missing O.o

Cryio Thank you Tom's for this Intel Server CPU. I sure hope you'll make a review of AMD's upcoming 16 core Steamroller server CPU

voltagetoe If you've got 3ds max, why don't you use something more serious/advanced like Mental Ray ? The default renderer tech represent distant past like year 1995.

lockhrt999 "Our playblast animation in Maya 2014 confounds us." @canjelini : Apart from rendering, most of tools in Maya are single threaded (most of the functionality has stayed same for this two decades old software). So benchmarking maya playblast is as identical as itunes encode benchmarking.

daglesj I love Xeon machines. As they are not mainstream you can usually pick up crazy spec Xeon workstations for next to nothing just a few years after they were going for $3000. They make damn good workhorses.

InvalidError @GL1zdA1: the ring-bus already means every core has different latency accessing any given memory controller. Memory controller latency is not as much of a problem with massively threaded applications on a multi-threaded CPU since there is still plenty of other work that can be done while a few threads are stalled on IO/data. Games and most mainstream applications have 1-2 performance-critical threads and the remainder of their 30-150 other threads are mostly non-critical automatic threading from libraries, application frameworks and various background or housekeeping stuff.

mapesdhs Small note, one can of course manually add the Quadro FX 1800 to the relevant file (raytracer_supported_cards.txt) in the appropriate Adobe folder and it will work just fine for CUDA, though of course it's not a card anyone who wants decent CUDA performance with Adobe apps should use (one or more GTX 580 3GB or 780Ti is best).

Also, hate to say it but showing results for using the card with OpenCL but not showing what happens to the relevant test times when the 1800 is used for CUDA is a bit odd...

Ian.

PS. I see the messed-up forum posting problems are back again (text all squashed up, have to edit on the UK site to fix the layout). Really, it's been months now, is anyone working on it?