Intel Xeon E5-2600 v2: More Cores, Cache, And Better Efficiency

Intel recently launched its Xeon E5-2600 v2 CPU family, based on the Ivy Bridge-EP architecture. We got a pair of workstation-specific Xeon E5-2687W v2 processors with eight cores and 25 MB of L3 cache each, and are comparing them to the previous-generation Xeon E5-2687W.

Results: Rendering

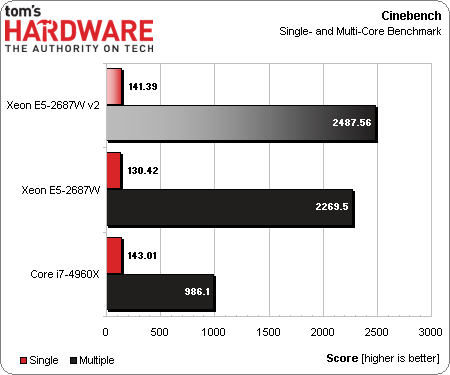

Maxon’s Cinebench R15 release (based on the company's Cinema 4D product) is a bit different from past versions of the benchmark. It’s able to utilize up to 256 cores (physical or logical) to render a scene with around 2000 objects made up of more than 300,000 polygons. Maxon altered the scale significantly so that results from previous versions can’t be compared—that’s why the numbers are so much higher than Cinebench results we’ve presented in the past.

The single-core numbers reflect the difference between Intel’s Sandy and Ivy Bridge architectures. Meanwhile, the multi-core component illustrates the difference between six cores (the single Core i7) and 16 (the dual-Xeon workstations). Moreover, the Ivy Bridge-EP-based Xeon E5-2687W v2 enjoys an extra advantage due to its tuned architecture and higher operating frequency.

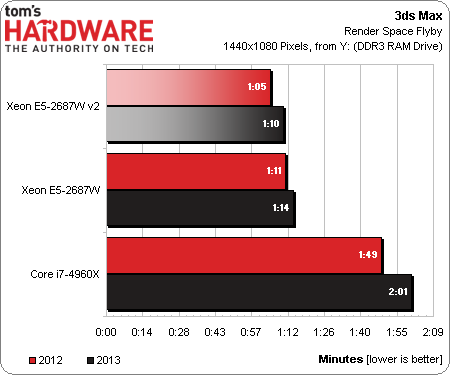

Our 3ds Max workload is real-world, so you don’t get the same sort of scaling that comes from a synthetic designed to extract maximum performance. With that said, we see a massive speed-up going from the single Core i7 to the dual-processor workstations. Ivy Bridge-EP is marginally faster than Sandy Bridge-EP, but that’s what we would have expected given a comparable core count and small clock rate increases. Where we’re really hoping for big gains is the efficiency measurement, where performance and power get factored together.

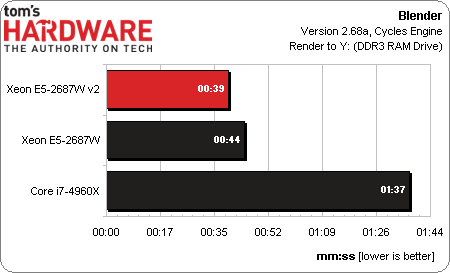

Or maybe it really is possible to maximize performance using a real-world workload. Our Blender test makes a clear distinction between the fastest desktop processor you can buy and Intel’s workstation-oriented Xeon E5s in dual-processor configs.

Again, comparing the Xeon E5-2687W and -2687W v2 reveals relatively minor performance differences, as expected. Power is where these two should stand apart.

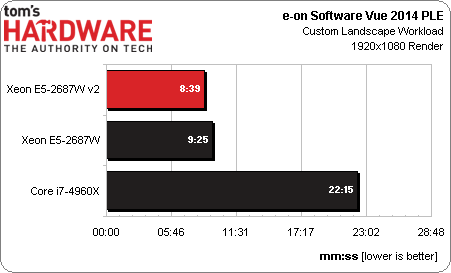

e-on Software’s Vue 2014 gives us another stark comparison between the very best you can do on the desktop-oriented LGA 2011 platform and what becomes possible as you step into the realm of Xeon-powered workstations. Our custom landscape test takes more than 22 minutes to render on the Core i7. Stepping up to a pair of Xeon E5-2687Ws cuts that to under 10 minutes. And the newer -2687W v2s finish in under nine.
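To put those render times in perspective, here is a minimal Python sketch that turns the rounded figures quoted above into lower bounds on speed-up. The helper name `min_speedup` and the constants are our own illustration, and the inputs are the bounds stated in the text ("more than 22 minutes", "under 10", "under nine"), not the exact measurements from the chart.

```python
def min_speedup(slow_minutes_at_least: float, fast_minutes_at_most: float) -> float:
    """Lower bound on speed-up when the slow run took at least
    `slow_minutes_at_least` and the fast run took at most
    `fast_minutes_at_most` minutes."""
    if slow_minutes_at_least <= 0 or fast_minutes_at_most <= 0:
        raise ValueError("render times must be positive")
    return slow_minutes_at_least / fast_minutes_at_most


# Bounds taken from the text above (assumed rounded, not exact chart values).
CORE_I7_MINUTES_AT_LEAST = 22.0   # "more than 22 minutes"
XEON_V1_MINUTES_AT_MOST = 10.0    # "under 10 minutes"
XEON_V2_MINUTES_AT_MOST = 9.0     # "under nine"

v1_vs_i7 = min_speedup(CORE_I7_MINUTES_AT_LEAST, XEON_V1_MINUTES_AT_MOST)
v2_vs_i7 = min_speedup(CORE_I7_MINUTES_AT_LEAST, XEON_V2_MINUTES_AT_MOST)

print(f"2x Xeon E5-2687W vs. Core i7:    at least {v1_vs_i7:.2f}x")
print(f"2x Xeon E5-2687W v2 vs. Core i7: at least {v2_vs_i7:.2f}x")
```

Because both Xeon results are only given as upper bounds, these figures can't be used to bound the gap between the two Xeon generations; that comparison needs the exact times from the chart.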

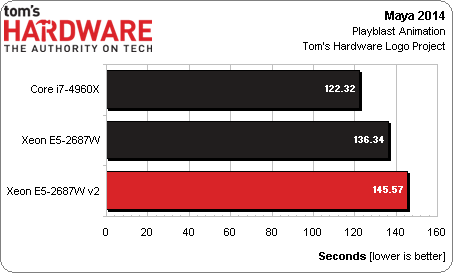

Our playblast animation in Maya 2014 confounds us. Our best theory is that the same GPU utilization issue that keeps OpenCL-accelerated titles like Vegas and Photoshop from favoring the dual-CPU workstations is in effect here as well, giving Intel’s Core i7 the lead.


GL1zdA1 Does this mean that the 12-core variant with 2 memory controllers will be a NUMA CPU, with cores having different latencies when accessing memory depending on which MC is near them?

Draven35 The Maya playblast test, as far as I can tell, is very single-threaded, just like the other 3D application preview tests I (we) use. This means it favors clock speed over memory bandwidth.

The Maya render test seems to be missing O.o


Cryio Thank you, Tom's, for this Intel server CPU review. I sure hope you'll review AMD's upcoming 16-core Steamroller server CPU.

voltagetoe If you've got 3ds Max, why don't you use something more serious/advanced like Mental Ray? The default renderer's tech represents the distant past, like the year 1995.

lockhrt999 "Our playblast animation in Maya 2014 confounds us." @canjelini: Apart from rendering, most of the tools in Maya are single-threaded (most of the functionality has stayed the same in this two-decade-old software). So benchmarking a Maya playblast is about the same as benchmarking an iTunes encode.

daglesj I love Xeon machines. As they are not mainstream, you can usually pick up crazy-spec Xeon workstations for next to nothing just a few years after they were going for $3000. They make damn good workhorses.

InvalidError @GL1zdA1: the ring-bus already means every core has different latency accessing any given memory controller. Memory controller latency is not as much of a problem with massively threaded applications on a multi-threaded CPU since there is still plenty of other work that can be done while a few threads are stalled on IO/data. Games and most mainstream applications have 1-2 performance-critical threads and the remainder of their 30-150 other threads are mostly non-critical automatic threading from libraries, application frameworks and various background or housekeeping stuff.

mapesdhs Small note, one can of course manually add the Quadro FX 1800 to the relevant file (raytracer_supported_cards.txt) in the appropriate Adobe folder and it will work just fine for CUDA, though of course it's not a card anyone who wants decent CUDA performance with Adobe apps should use (one or more GTX 580 3GB or 780Ti is best).

Also, hate to say it but showing results for using the card with OpenCL but not showing what happens to the relevant test times when the 1800 is used for CUDA is a bit odd...

Ian.

PS. I see the messed-up forum posting problems are back again (text all squashed up, have to edit on the UK site to fix the layout). Really, it's been months now, is anyone working on it?