Korean researchers power-shame Nvidia with new neural AI chip — claim 625 times less power draw, 41 times smaller

Claim Samsung-fabbed chip is the first ultra-low power LLM processor.

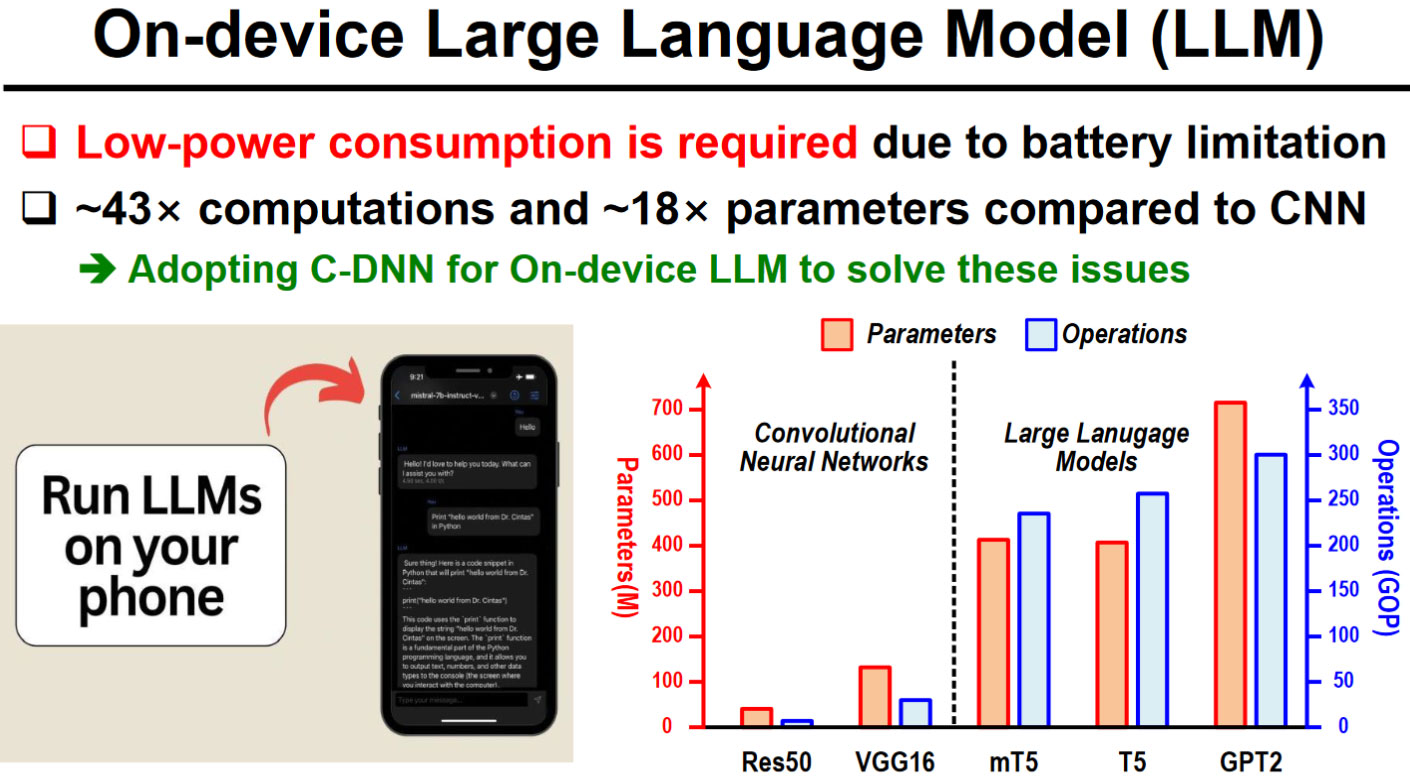

A team of scientists from the Korea Advanced Institute of Science and Technology (KAIST) detailed their 'Complementary-Transformer' AI chip during the recent 2024 International Solid-State Circuits Conference (ISSCC). The new C-Transformer chip is claimed to be the world's first ultra-low power AI accelerator chip capable of large language model (LLM) processing.

In a press release, the researchers power-shame Nvidia, claiming that the C-Transformer uses 625 times less power and is 41x smaller than the green team's A100 Tensor Core GPU. The release also reveals that the Samsung-fabbed chip's achievements largely stem from refined neuromorphic computing technology.

Though we are told that the KAIST C-Transformer chip can do the same LLM processing tasks as one of Nvidia's beefy A100 GPUs, neither the press materials nor the conference materials we have seen provide any direct comparative performance metrics. That's a significant statistic, conspicuous by its absence, and the cynical would probably surmise that a performance comparison doesn't do the C-Transformer any favors.

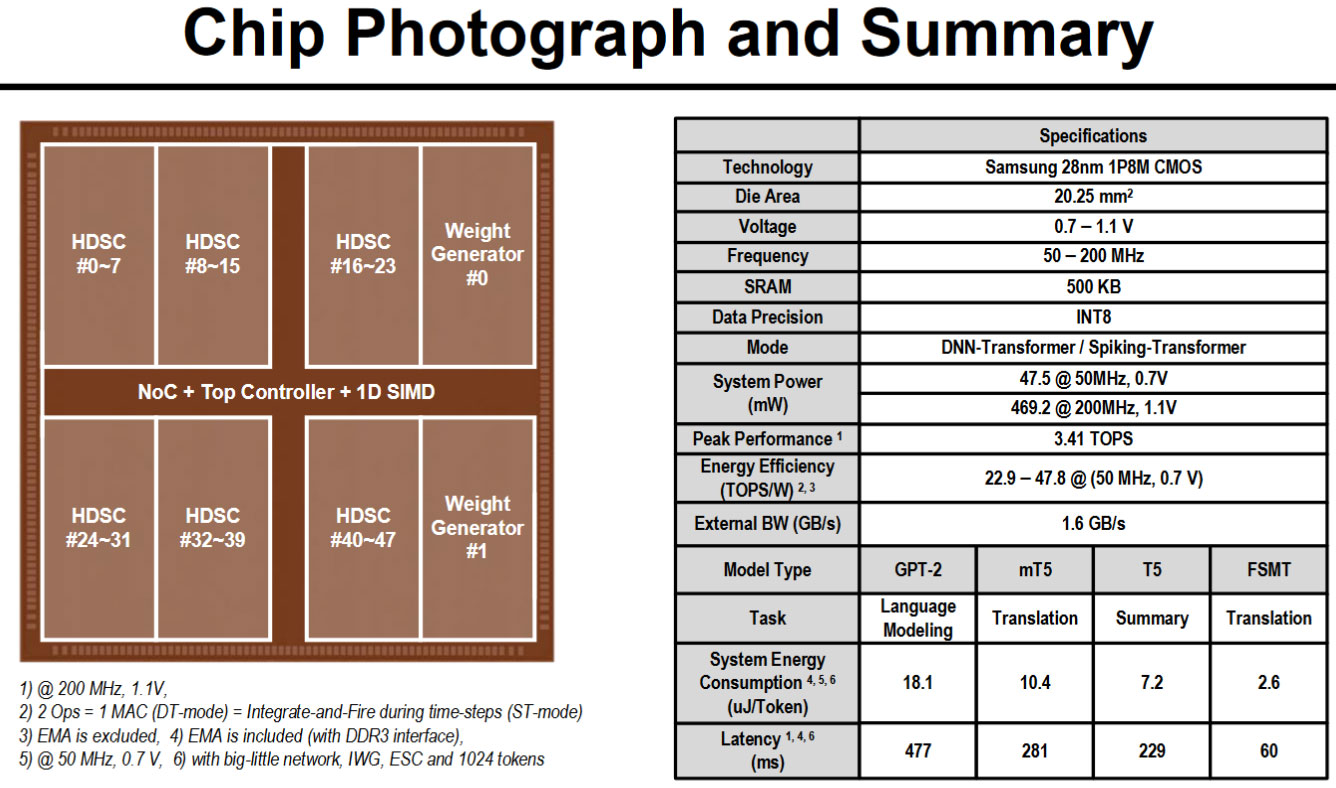

The images above include a 'chip photograph' and a summary of the processor's specs. You can see that the C-Transformer is currently fabbed on Samsung's 28nm process and has a die area of 20.25 mm². It runs at a maximum frequency of 200 MHz, consuming under 500 mW. At best, it can achieve 3.41 TOPS. At face value, that's 183x slower than the claimed 624 TOPS of the Nvidia A100 PCIe card (but the KAIST chip is claimed to use 625x less power). However, we'd prefer some kind of benchmark performance comparison rather than a look at each platform's claimed TOPS.
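To put the headline numbers on a common footing, here is a rough back-of-the-envelope sketch in Python. It uses only the claimed figures quoted above (3.41 TOPS, 624 TOPS, 625x less power, 41x smaller) and is not a benchmark; the function and variable names are ours, chosen for illustration.

```python
# Claimed figures quoted in the article; none are independently measured.
KAIST_TOPS = 3.41      # peak TOPS claimed for the C-Transformer
A100_TOPS = 624.0      # peak TOPS claimed for the Nvidia A100 PCIe card
POWER_RATIO = 625.0    # claimed: A100 power draw / C-Transformer power draw
AREA_RATIO = 41.0      # claimed: A100 die area / C-Transformer die area


def normalized_ratios(kaist_tops, a100_tops, power_ratio, area_ratio):
    """Derive performance-normalized ratios from the claimed peak figures."""
    # How many times more peak throughput the A100 claims.
    speed_ratio = a100_tops / kaist_tops
    # C-Transformer TOPS per watt relative to the A100 (>1 favors KAIST).
    tops_per_watt_gain = power_ratio / speed_ratio
    # C-Transformer TOPS per unit die area relative to the A100 (<1 favors Nvidia).
    tops_per_area_gain = area_ratio / speed_ratio
    # C-Transformer watts per unit die area relative to the A100.
    power_density = area_ratio / power_ratio
    return {
        "speed_ratio": speed_ratio,
        "tops_per_watt_gain": tops_per_watt_gain,
        "tops_per_area_gain": tops_per_area_gain,
        "power_density": power_density,
    }


ratios = normalized_ratios(KAIST_TOPS, A100_TOPS, POWER_RATIO, AREA_RATIO)
for name, value in ratios.items():
    print(f"{name}: {value:.3f}")
```

On these claimed peak numbers alone, the KAIST chip would come out roughly 3.4x ahead in TOPS per watt and roughly 4.5x behind in TOPS per unit of die area, with about 6.6% of the A100's power density. Peak TOPS figures may not be measured at the same precision or under the same conditions, so treat the output as a sanity check rather than a performance comparison.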

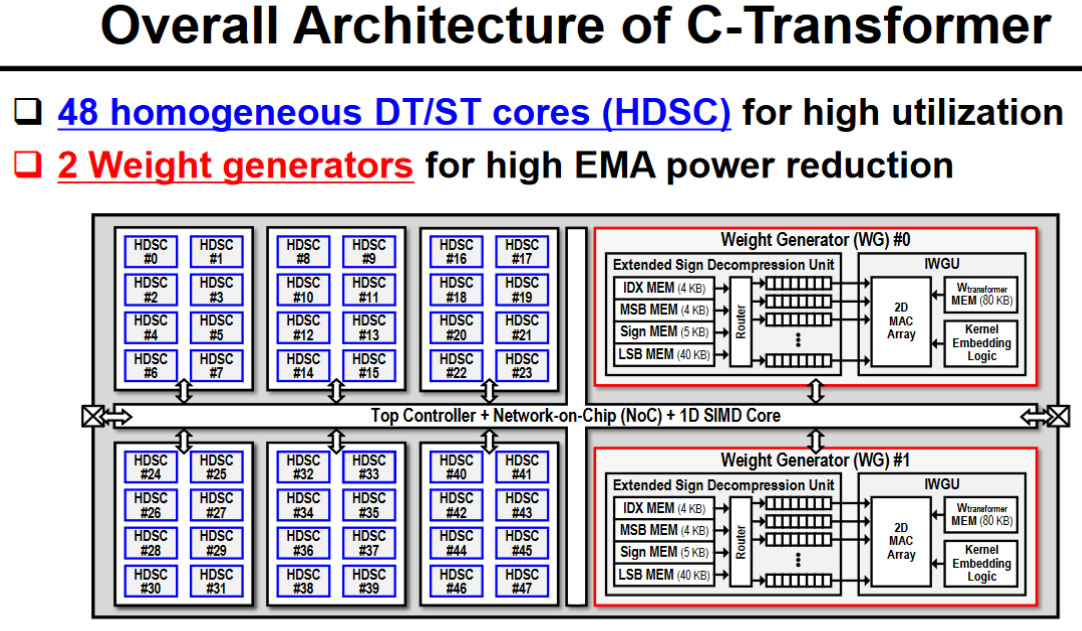

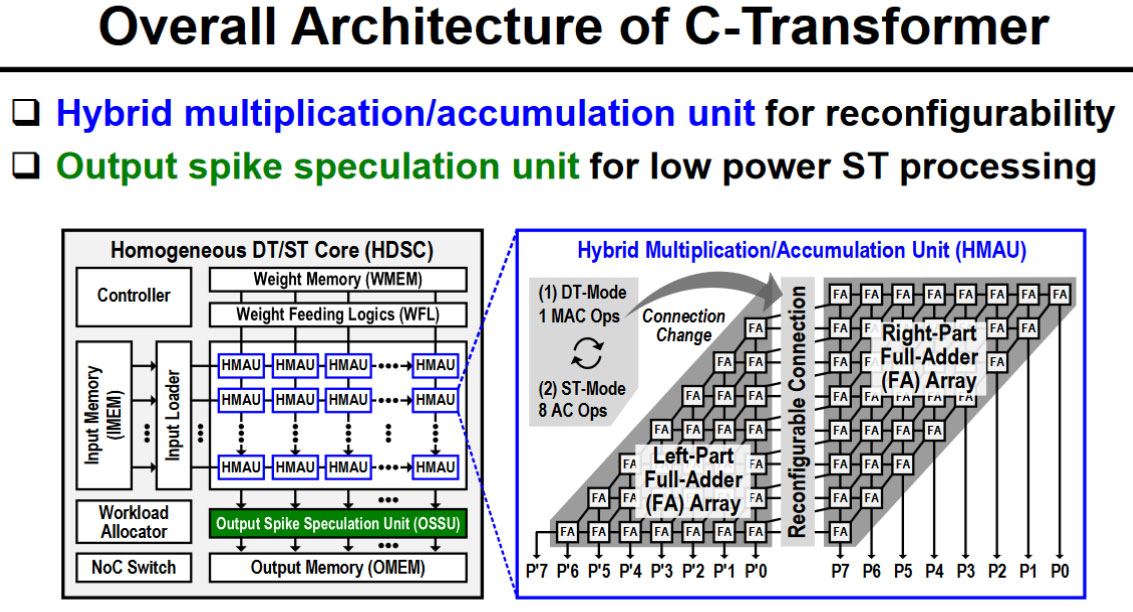

The architecture of the C-Transformer chip is interesting to look at and is characterized by three main functional feature blocks. Firstly, there is a Homogeneous DNN-Transformer / Spiking-transformer Core (HDSC) with a Hybrid Multiplication-Accumulation Unit (HMAU) to efficiently process the dynamically changing distribution energy. Secondly, we have an Output Spike Speculation Unit (OSSU) to reduce the latency and computations of spike domain processing. Thirdly, the researchers implemented an Implicit Weight Generation Unit (IWGU) with Extended Sign Compression (ESC) to reduce External Memory Access (EMA) energy consumption.

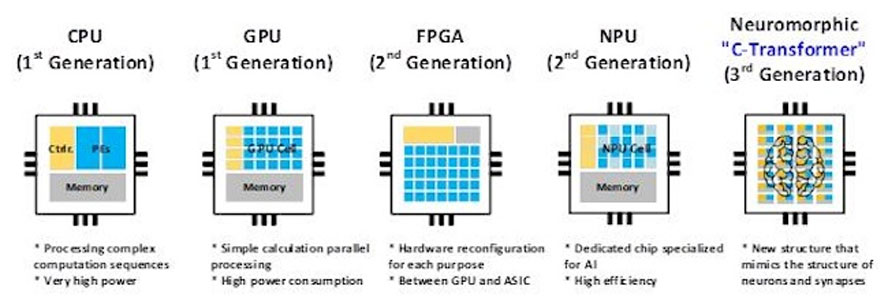

It is explained that the C-Transformer chip doesn't just add some off-the-shelf neuromorphic processing as its 'special sauce' to compress the large parameters of LLMs. Previously, neuromorphic computing technology wasn't accurate enough for use with LLMs, says the KAIST press release. However, the research team says it "succeeded in improving the accuracy of the technology to match that of [deep neural networks] DNNs."

Though there are uncertainties about the performance of this first C-Transformer chip due to no direct comparisons with industry-standard AI accelerators, it is hard to dispute claims that it will be an attractive option for mobile computing. It is also encouraging that the researchers have got this far with a Samsung test chip and extensive GPT-2 testing.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

ekio

Nvidia is the most overvalued company currently. They are rich because they lead temporarily in a very demanding market. Their solution is based on graphics processors doing an alternative job they were not fully intended for, full greedy premium price. Can’t wait to see competition bringing them back down to earth.

derekullo

ekio said: Nvidia is the most overvalued company currently. They are rich because they lead temporarily in a very demanding market. Their solution is based on graphics processors doing an alternative job they were not fully intended for, full greedy premium price. Can’t wait to see competition bringing them back down to earth.

GPUs were initially developed for rendering graphics in video games but have become instrumental in AI due to their highly parallel structure. They can perform thousands of operations simultaneously. This aligns perfectly with the tasks required by neural networks, the critical technology in generative AI.

GPUs were never perfect for rendering graphics either ... that's why Nvidia releases newer more efficient models every other year.

Unolocogringo

ekio said: Nvidia is the most overvalued company currently. They are rich because they lead temporarily in a very demanding market. Their solution is based on graphics processors doing an alternative job they were not fully intended for, full greedy premium price. Can’t wait to see competition bringing them back down to earth.

Maybe you do not understand the underlying technology of CUDA cores (NVIDIA)/SHADERS (AMD)?

https://www.techcenturion.com/nvidia-cuda-cores/

https://www.amd.com/system/files/documents/rdna-whitepaper.pdf

There are more; these are quick search primers.

They were already rich with their 80% (plus or minus a few points) gaming and professional graphics card market share.

It just so happens these highly parallel compute units work well for AI also.

They also work very well for Folding @ Home. Trying to solve humanity's diseases/afflictions.

edzieba

"claiming that the C-Transformer uses 625 times less power and is 41x smaller than the green team's A100 Tensor Core GPU"

Or in other words, their chip is only ~6.5% of the power density of the A100. Since almost any even moderately high performing chip is power limited these days (CPUs, GPUs, even mobile SoCs now) their chip is either downclocked to hell for this particular my-number-is-biggest bullshotting exercise, or is just plain inefficient in terms of die usage - and with die area correlating to fab cost and fab cost dominating total cost, don't expect price/perf to be great.

ekio

The problem with Nvidia is that their tech is relying on a single generalist GPU architecture rather than an extremely optimized GPU for graphics and an extremely optimized AI processor line.

When specialized processors are emerging, they will beat Nvidia like crazy.

Nvidia tripled their value thanks to mining then AI, they are just relying on trend where they are the easy solution, but they were never the best for long.

DougMcC

ekio said: Nvidia is the most overvalued company currently. They are rich because they lead temporarily in a very demanding market. Their solution is based on graphics processors doing an alternative job they were not fully intended for, full greedy premium price. Can’t wait to see competition bringing them back down to earth.

Have you seen Tesla's valuation? They're valued as if they are not only the most valuable car company on earth, but also as if none of their competition exists.

Tesla: 500B valuation on <2M auto sales

GM: 50B valuation on > 6M auto sales.

dlheliski

edzieba said: Or in other words, their chip is only ~6.5% of the power density of the A100. Since almost any even moderately high performing chip is power limited these days (CPUs, GPUs, even mobile SoCs now) their chip is either downclocked to hell for this particular my-number-is-biggest bullshotting exercise, or is just plain inefficient in terms of die usage - and with die area correlating to fab cost and fab cost dominating total cost, don't expect price/perf to be great.

Exactly. This article is just hot garbage. 625 times less power, 41 times smaller, ignoring the fact that it is 183 times slower. So TOPS/W is about 3X better, and TOPS/area is about 4X worse. No surprise, reduce Vdd and use more silicon. An idiot can do this on a bad day.

hotaru251

Amdlova said: Maybe now nvidia comes to gammer market with sorry indeed on mind.

why?

They have no reason to. There are not enough AI chips in the world to feed demand.

It sucks, but Nvidia has stated they aren't a graphics card company anymore. Gaming will never become their focus again. There is sadly more $ in other stuff.

bit_user

"At face value, that's 183x slower than the claimed 624 TOPS of the Nvidia A100 PCIe card (but the KAIST chip is claimed to use 625x less power)."

So, it's only 3.4 times as efficient as their previous generation GPU, which is less than 6 months away from being a two-generation old GPU???

Stopped reading right there. This is the epitome of a click-bait headline. It suggests equivalent performance at the stated size & efficiency. You should've either used metrics which normalize for the performance disparity or just gone ahead and actually included the performance data.

"Korean researchers power-shame Nvidia with new neural AI chip — claim 625 times less power draw, 41 times smaller"

Worse, the thing which makes A100 and H100 GPUs so potent is their training ability, which is something these Korean chips can't do. The article doesn't even mention the words "training" or "inference".

This "journalism" is really disappointing, guys.