Xilinx Pairs AMD's EPYC With Four FPGAs At Supercomputing 2017

AMD made quite a splash at Supercomputing 2017 with a range of EPYC server solutions on display, both at its own booth and at those of several third-party vendors. We found one of the most interesting demos at Xilinx's booth, where the company paired a single-socket EPYC server platform with four of its beefy FPGAs.

The demonstration featured four Xilinx cards that each power up to 21 TOPs of 8-bit integer throughput. Each card features 64GB of onboard DDR4 memory and a VU9P Virtex UltraScale+ FPGA with close to 2.5 million configurable logic cells. Xilinx claims a single dual-slot full-length full-height card can deliver 10-100x more performance than a standard CPU while pulling 225W. Cram four of them in a 2U server chassis and you have a processing beast.
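As a back-of-the-envelope check, the per-card figures above can be scaled to the four-card system. This is only a sketch: it assumes ideal linear scaling and that every card reaches its quoted peak at the same time, neither of which the demo confirms. The variable names are illustrative.

```python
# Per-card peak figures quoted in the article.
TOPS_PER_CARD = 21      # peak 8-bit integer throughput, in TOPS
WATTS_PER_CARD = 225    # quoted board power per card, in watts
NUM_CARDS = 4           # cards in the 2U demo system

# Assumption: ideal linear scaling across all four cards.
total_tops = TOPS_PER_CARD * NUM_CARDS
total_watts = WATTS_PER_CARD * NUM_CARDS
tops_per_watt = total_tops / total_watts

print(f"Aggregate peak: {total_tops} TOPS at {total_watts} W")
print(f"Peak efficiency: {tops_per_watt:.3f} TOPS/W")
```

Real workloads will land below these peak numbers, so treat the result as an upper bound rather than a measurement.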

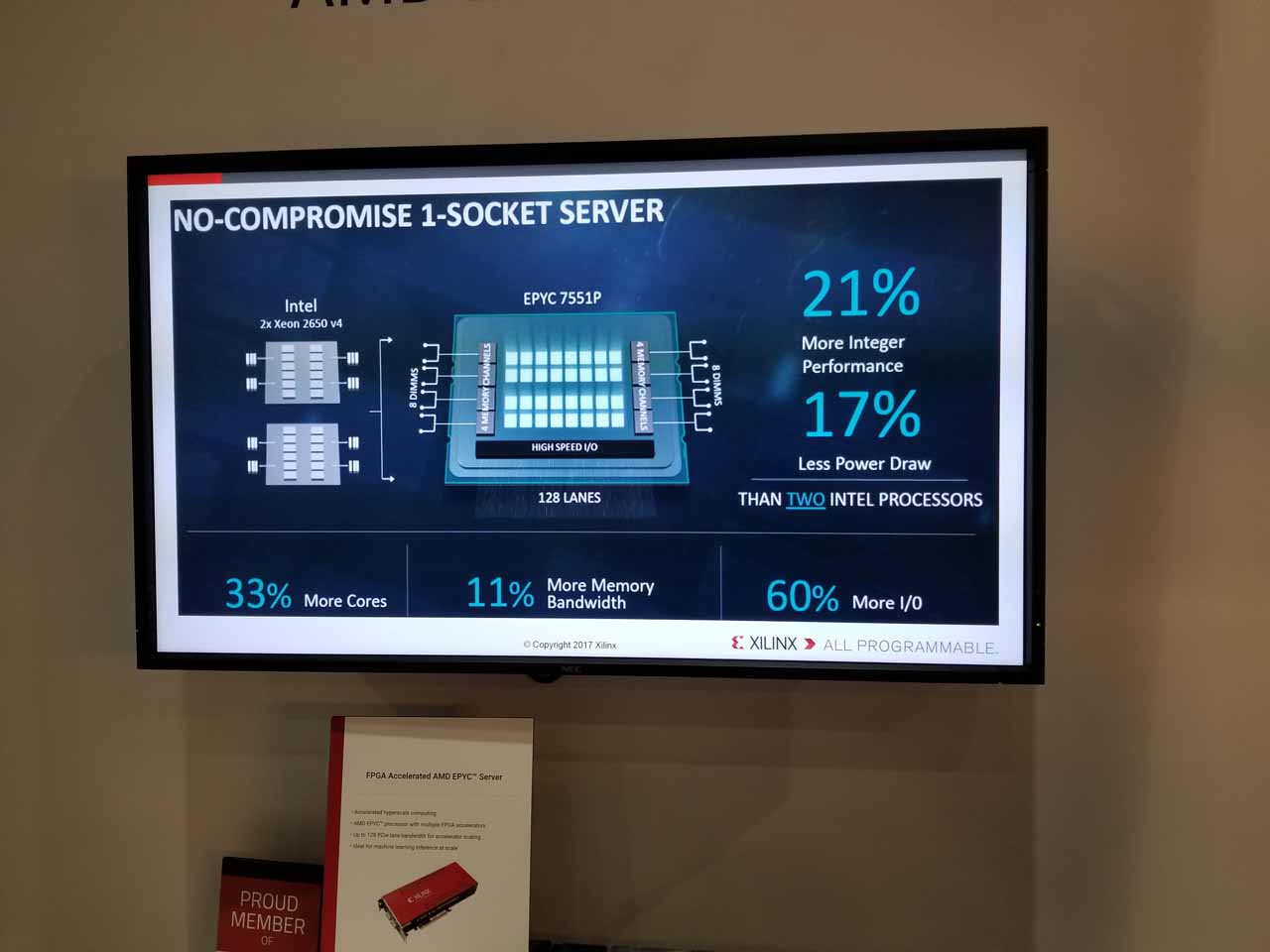

The single-socket server space is pining for an alternative that delivers copious connectivity, and EPYC's 128 PCIe 3.0 lanes pair nicely with platforms that feature multiple PCIe-connected accelerators, be they FPGAs or GPUs. Each type of accelerator has its own advantages and disadvantages. FPGAs can be reprogrammed on the fly and strip out many of the unnecessary functions found on GPUs, which reduces latency and boosts performance. GPUs tend to handle the heavy lifting for many workloads because FPGAs are limited to integer inference or to lower floating-point performance than GPUs offer.

But FPGAs also tend to deliver superior performance-per-watt, which is a crucial consideration in large deployments, like data centers. These systems are designed for machine learning, data analytics, genomics and live video transcoding workloads.

Scalability is a key requirement for many applications, so networking becomes another important aspect. AMD's EPYC provides enough lanes to host these four PCIe 3.0 x16 FPGAs and still have an additional 64 PCIe lanes available for other add-in devices, such as networking adapters.
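The lane arithmetic behind that claim is simple enough to sketch. The helper name `remaining_lanes` is hypothetical, and the calculation assumes every one of the 128 lanes is free for add-in devices, which a given motherboard may not allow.

```python
TOTAL_LANES = 128     # PCIe 3.0 lanes on a single-socket EPYC (from the article)
LANES_PER_CARD = 16   # each FPGA card sits on an x16 link
NUM_CARDS = 4


def remaining_lanes(total: int, per_device: int, count: int) -> int:
    """Return the lanes left after attaching `count` devices.

    Raises ValueError if the devices need more lanes than are available.
    """
    used = per_device * count
    if used > total:
        raise ValueError(f"{used} lanes requested, only {total} available")
    return total - used


spare = remaining_lanes(TOTAL_LANES, LANES_PER_CARD, NUM_CARDS)
print(f"Lanes left for networking and storage: {spare}")
```

By the same arithmetic, eight x16 cards would use up all 128 lanes and leave none for networking.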

Memory capacity and performance are also limiting factors for many workloads, so EPYC's 145GBps of bandwidth, eight DDR4 channels and 2TB of memory capacity in a single-socket server are a good fit for many diverse workloads. Of course, AMD also offers lower pricing than Intel's equivalents with similar core counts, which aren't capable of delivering the same amount of connectivity in a single-socket solution. Throwing in up to 32 Zen cores and 64 threads also provides plenty of CPU processing power for parallelized workloads.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


redgarl AMD knew that PCIe lanes were a big thing for data centers using GPUs or other calculating processors. I wonder if AMD could get a good part of the market for these systems over Intel.

berezini.2013 Redgarl, Intel solved the networking issue and has a multitude of solutions on that front that differ from AMD's lane tactics.

bit_user

> Xilinx cards that each power up to 21 TOPs of 8-bit integer throughput ... while pulling 225W.

Okay, just a bit less than a single GTX 1070, while pulling only 150W. Seriously.

crazygerbil Bit_User, Yeah, I don't know what the heck they are doing there. The chip itself draws <15W maximum, so either the on-board peripherals draw way too much power, or (more likely) they massively over-specified the power supply for it. 225W is actually the maximum power that can be drawn through the power connectors it has (75W from the PCIe slot and 150W from the 8-pin PCIe power connector).

alextheblue

> 20383156 said:
>
> > Xilinx cards that each power up to 21 TOPs of 8-bit integer throughput ... while pulling 225W.
>
> Okay, just a bit less than a single GTX 1070, while pulling only 150W. Seriously.

First, you're comparing TDP of a reference card to peak board power. Second, latency. There's a reason they're using DDR4 and not GDDR.

More importantly, it's not fair to compare a chip with fully programmable logic vs a GPU. If GPUs are well suited to your workload, fantastic. If they're not, the only competition FPGAs have left would be CPUs. It's sort of like comparing a semi truck to a train for purposes of hauling goods. If you ignore the fact that freight trains are bound to rails, they seem like a clear winner. Why would anyone ship goods via a truck?

Slightly off topic: If you're limited to fewer lanes per CPU, you have to buy more CPUs to support your accelerator cards. That simultaneously boosts CPU sales and the relative competitiveness of CPUs against said accelerator cards. Unfortunately for Intel, AMD has spoiled their fun a little bit.

bit_user

> 20386903 said: First, you're comparing TDP of a reference card to peak board power. Second, latency. There's a reason they're using DDR4 and not GDDR.

Wow, looks like I touched a nerve.

Dude, I didn't say this product makes no sense. I was just putting it in perspective, which I felt was relevant after Paul went on about how FPGAs are so much faster per W.

alextheblue

> 20389963 said:
>
> > 20386903 said: First, you're comparing TDP of a reference card to peak board power. Second, latency. There's a reason they're using DDR4 and not GDDR.
>
> Wow, looks like I touched a nerve.
>
> Dude, I didn't say this product makes no sense. I was just putting it in perspective, which I felt was relevant after Paul went on about how FPGAs are so much faster per W.

You didn't touch a nerve and I really have no stake here. I am not defending Paul's statement - though I actually assumed he was referring to their power consumption in workloads where GPUs are not ideal (thus pitting them against less efficient CPUs).

I just felt it wasn't a fair comparison.

bit_user

> 20390720 said: I just felt it wasn't a fair comparison.

Okay, go ahead and down-vote Paul - he made the comparison. I just posted facts, of which you contested none.

I think your down-vote is mostly to do with the fact that this concerns an AMD-based system and I quoted figures about a Nvidia GPU.

alextheblue

> 20391104 said:
>
> > 20390720 said: I just felt it wasn't a fair comparison.
>
> Okay, go ahead and down-vote Paul - he made the comparison. I just posted facts, of which you contested none.

I contest the validity of the comparison itself. FPGAs are better than GPUs for some workloads. GPUs are better than FPGAs for some workloads. Making best use of FPGAs is more challenging. A generalized comparison is very difficult. Paul should have been clearer about what exactly he meant. Also, I can't downvote him.

> 20391104 said: I think your down-vote is mostly to do with the fact that this concerns an AMD-based system and I quoted figures about a Nvidia GPU.

Now you've touched a nerve, as you've ascribed false motive.

Nvidia has the most powerful and efficient graphics cards. Period. Your choice of graphics card brand wasn't an issue. Here's another one: right now Intel makes the most powerful, best overclocking consumer chips for desktops. Full stop.