Exclusive Interview: Nvidia's Ian Buck Talks GPGPU

The Evolution Of GPGPU Computing

Tell me about CUDA, the "architecture," versus the "CUDA for C" compiler.

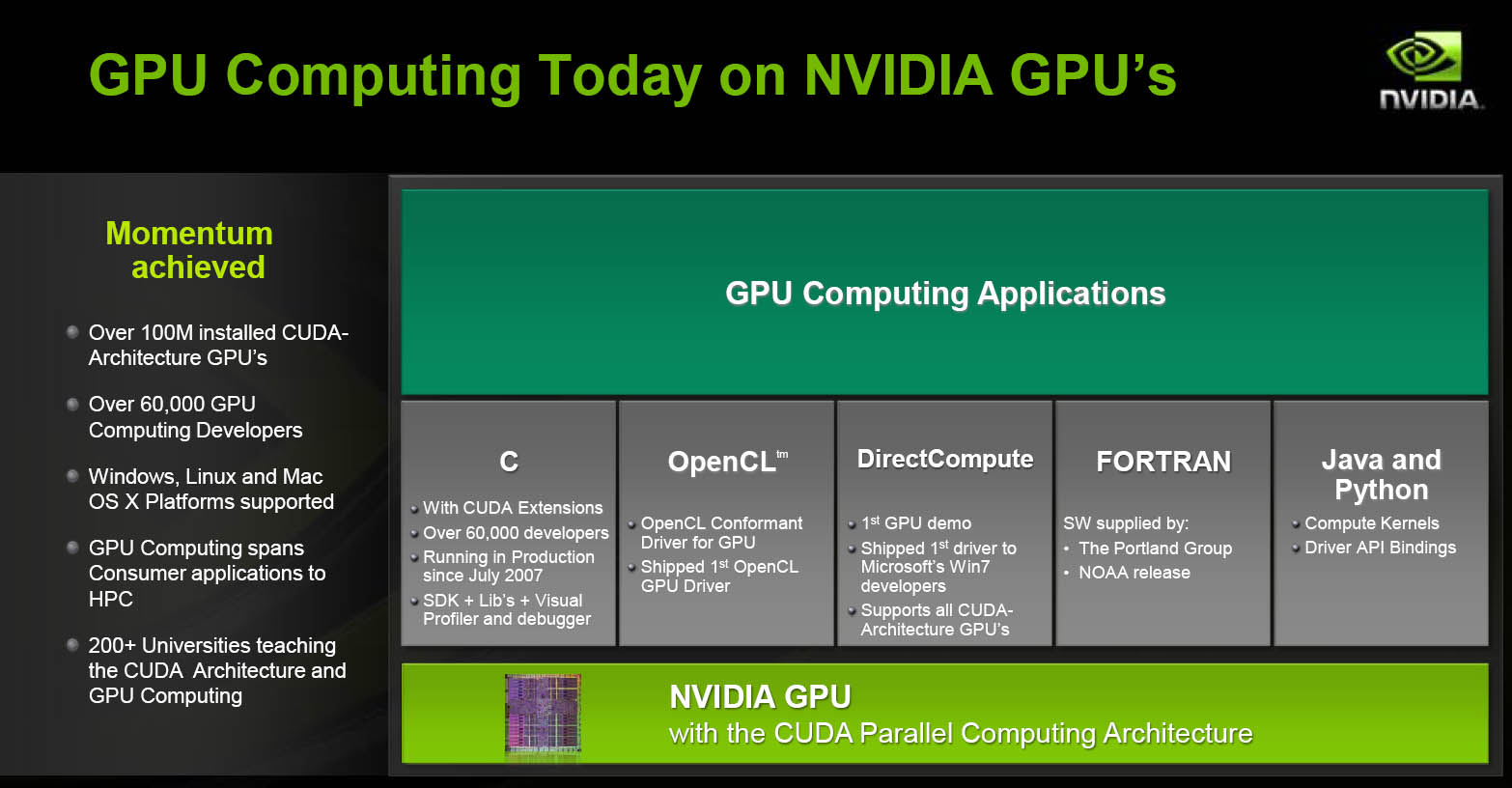

CUDA is the name of Nvidia’s parallel computing hardware architecture. Nvidia provides a complete toolkit for programming the CUDA architecture that includes the compiler, debugger, profiler, libraries, and other information developers need to deliver production-quality products that use the CUDA architecture. The CUDA architecture also supports standard languages (C with CUDA extensions and Fortran with CUDA extensions) and APIs for GPU computing, such as OpenCL and DirectX Compute.

This diagram may help:

With OpenCL, you gain the advantage of cross-platform support, but lose automated tools, such as memory management, that are found with CUDA. It seems that as a scientist, you'd want to decrease your startup development costs, but at the same time, you'd want support for multiple platforms. What's the best way to reconcile this challenge?

There are certainly compromises that have to be made to provide a cross-vendor, cross-platform solution. Nvidia has worked from the beginning with Apple and the OpenCL working group to make sure OpenCL provides a great driver-level API layer for GPU computing, especially for Nvidia hardware. Furthermore, we will certainly provide extensions to further enable Nvidia GPUs with OpenCL.

Nvidia is also constantly improving our C compiler and development environment for Nvidia GPUs. We have a few simple extensions to C in order to enable our GPUs. If Fortran is more your preference, a Fortran compiler is also available. With the introduction of Microsoft’s Windows 7 this fall, users and developers will have access to the DirectCompute API, which shares many concepts with our C extensions. Nvidia seeded a DirectCompute driver to key developers last December. These are the added advantages of choosing Nvidia hardware: we support all major languages and APIs.

Your work with GPUs started in the GeForce 5 era, and we're now several generations later. Obviously, the newer stuff is faster, but what new capabilities have been introduced over this time period (e.g., IEEE-754 compliance)? What can we do now that we couldn't do before?


Early programmable GPUs were basic, floating point-only programmable processors. They had no integer or bit operations, no general access to GPU memory, and no communication between neighboring processors. The first main innovation was to provide the hardware needed for supporting C, which includes full pointer support and native data types.

Another key innovation was the addition of dedicated, on-chip shared memory, which allows processors to intercommunicate and share results, greatly improving the efficiency of the algorithms. In addition, it offered programmers a place to temporarily store and process data close to the processor, rather than going all the way out to off-chip DRAMs. Shared memory improved our signal processing library by 20x over a similar OpenGL implementation.

Finally, the addition of double-precision floating point hardware signified a key step toward GPUs as a true high performance computing product, enabling applications that require extended precision numerics. It should also be noted that improvements in memory speed and on-board memory size (up to 4GB per processor and 16GB for our Tesla 1U server) have increased the scale of problems an Nvidia GPU can tackle.
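To make the precision point concrete, here is a minimal sketch in Python (CPU-side, not GPU code) of why some numeric work needs double precision. It emulates single-precision rounding with the standard `struct` module. The helper names `to_float32` and `add_float32` are illustrative assumptions, not part of any Nvidia toolkit.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (an IEEE-754 double) to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def add_float32(a: float, b: float) -> float:
    """Emulate a single-precision add: round both inputs and the result to float32."""
    return to_float32(to_float32(a) + to_float32(b))


# Single precision has a 24-bit significand, so above 2**24 it can no longer
# represent every integer. Double precision (53-bit significand) still can.
LIMIT = 2.0 ** 24  # 16,777,216

single = add_float32(LIMIT, 1.0)  # the +1 is lost to rounding
double = LIMIT + 1.0              # exact in double precision

print(single - LIMIT)  # 0.0
print(double - LIMIT)  # 1.0
```

A running sum kept in single precision stalls in the same way once it reaches this magnitude, which is one reason long accumulations in scientific codes are often carried in double precision.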

What about the compiler? What kind of optimizations and innovations have been added over time?

Very early on, we recognized that we needed to build a world-class compiler solution. GPU computing programs tend to be much larger, more complex, and benefit from more complex optimization. Our competition (the CPU) had almost 40 years to get it right. Our C compiler is based on technologies from the Edison Design Group, which has been making C compilers for 20 years, and the Open64 compiler core, which was originally designed for the Itanium processor. Our compiler technology, combined with the world-class compiler team we’ve assembled, is a key part of Nvidia’s success.

Currently, most GPUs are very fast with single precision math, but less quick with double precision math. Would GPUs still provide "better than CPU" cost/performance if it weren't for the economies of scale? That is, could you make a special double precision-optimized GPU while still keeping costs low?

As the market for GPU computing clearly continues to grow, I think you will see more areas invested in double precision arithmetic. Our double precision hardware released last year was only the starting point for what I imagine will be a growing investment in GPU computing from both the industry and Nvidia.

What is your impression of Intel's Larrabee? AMD's Stream Architecture? Cell? Zii?

My view of Larrabee is that it is a great validation of what the GPU has achieved and an acceptance of the limitations of the CPU. Where CPUs have tried to take a legacy sequential programming model and squeeze out every last bit of parallelism, GPUs were created for 3D rendering, an embarrassingly parallel application. Massive parallelism is a part of the GPU’s core programming model.

In the end, it is the accessibility and productivity of a programming model that will take an architecture from a novelty to a success. We are all competing against a mountain of legacy code. We’ve focused on making it extremely easy to obtain orders-of-magnitude speedups on Nvidia GPUs with a familiar and simple programming model.


How about some GPU acceleration for Linux! I'd love Blu-ray and HD content to be GPU accelerated by VLC or Totem. Nvidia?


matt87_50 I ported my simple sphere and plane raytracer to the gpu (dx9) using Render Monkey, it was soo simple and easy, only took a few hours, nearly EXACTLY the same code. (using hlsl, which is basically c) and it was so beautiful, what took seconds on the cpu was now running at over 30fps at 1680x1050.

a monumental speed increase with hardly any effort (even without a gpgpu language)

its going to be nothing short of a revolution when gpgpu goes mainstream (hopefully soon, with dx11)

computer down on power? don't replace the cpu, just dump another gpu in one of the many spare pci16x slots and away you go, no fussing around with sli or crossfire and the compatibility issues they bring. it will just be seen as another pile of cores that can be used!

even for tasks that can't be done easily on the gpu architecture, most will still probably run faster than they would on the cpu, because the brute power the gpu has over the cpu is so immense, and as he kinda said, most of the tasks that aren't suited to gpgpu don't need speeding up anyway.

shuffman37: Nvidia does have GPU-accelerated video on Linux. Link to Wikipedia about VDPAU: http://en.wikipedia.org/wiki/VDPAU. It's gaining support from a lot of different media players.


NVIDIA, saying that "spreadsheet is already fast enough" may be misleading. Business users have the money. Spreadsheets are already installed (huge existing user base). Many financial spreadsheets are very complicated: 24 layers, 4,000 lines, with built-in Monte Carlo simulations.

Making all these users instantly benefit from faster computing may be the road for success for NVIDIA.

Dr. Drey

Bloomington, IN

raptor550 Although I appreciate his work... I had to AdBlock his face. Sorry, it's just creepy.

techpops While I can't get enough of GPGPU articles, it really saddens me that Nvidia is completely ignoring Linux and not because I'm a Linux user. Ignoring Linux stops the GPU from being the main source for rendering in 3D software that also is available under Linux. So in my case, where I use Cinema 4D under Windows, I'll never see the massive speedups possible because Maxon would never develop for a Windows and Mac only platform.

It's worth pointing out here that I saw video of Cuda accelerated global illumination from a single Nvidia graphics card, going up against an 8 core CPU beast. Beautiful globally illuminated images were taking 2-3 minutes to render, just for a single image on the 8 core PC. The Cuda one, rendering to the same quality was rendering at up to 10 frames per second! That speed up is astonishing and really makes an upgrade to a massive 8 core PC system seem pathetic in the face of that kind of performance.

One can only imagine what would be possible with multiple graphics cards.

I also think the killer app for the GPU is not ultimately going to be graphics at all, while in the early days it will be, further down the line, I think it will be augmented reality that takes over for the main GPU use. Right now, it's pretty shoddy using a smart phone for augmented reality applications, everything is dependent on GPS, and that's totally unreliable and will remain so. What's needed for silky smooth AR apps is a lot of processing power to recognize shapes and interpret all that visual data you get through a camera to work with the GPS. So if you're standing in front of a building, an arrow can point on the floor leading into the buildings entrance because the GPS has located the building and the gpu has worked out where the windows and doors are and made overlaid graphics that are motion locked to the video.

I think AR is going to change everything with portable computers, but only when enough compute power is in a device to make it a smooth experience, rather than the jerky unreliable experimental toy it is on today's smart phones.

pinkzeppelin97, quoting zipzoomflyhigh: "If my forehead was that big due to a retreating hairline, I would shave my head."

amen to that

tubers cpu and gpu combined? will that bring more profit to each of their respective companies? hmm

jibbo, quoting shuffman37: "How about some GPU acceleration for linux! I'd love blue-ray and HD content to be gpu accelerated by VlC or Totem. Nvidia?"

There is GPU acceleration for Linux. I believe NVIDIA's provided a CUDA driver, compiler, toolkit, etc for Linux since day 1.