ATI Radeon HD 5870: DirectX 11, Eyefinity, And Serious Speed

Power Consumption

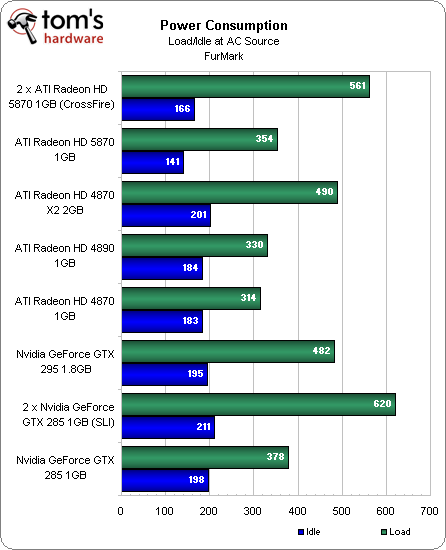

Remember when Nvidia’s GeForce GTX 200-series cards were so energy efficient at idle that the company decided to completely drop its HybridPower technology after just one generation of use? So what’s up with our power consumption measurements?

We’ve seen Windows Vista-based results that show Nvidia’s GeForce GTX 285 and GTX 260 Core 216 using 20W or so less than ATI’s Radeon HD 4870 1GB. However, in these Windows 7 tests, the Nvidia cards seem to be using as much as 15W more than the older ATI cards.

Now, we haven’t run the numbers comparing GeForce cards in Windows Vista versus 7 (that’s coming), but we do know from our Intel Core i5 and Core i7 launch coverage that Windows 7 exhibits very different power consumption behavior than Vista. It’s possible that 7’s more complex desktop is taxing the Nvidia boards to a greater extent than Vista’s did, while the ATI cards aren’t seeing the same sort of power hit. Incidentally, a few days before this launch, Nvidia sent over a beta of its latest drivers, which were said to fix potential idle power issues. We tried them and saw the same results, so that beta, at least, doesn’t change the picture.

Either way, the more significant news is that the Radeon HD 5870’s idle consumption drops an astounding 42W from last year’s Radeon HD 4870. Moreover, adding a second Radeon HD 5870 card only adds an additional 24W of consumption at idle (and those two boards even idle below a single Radeon HD 4870).

Firing up FurMark shows that, even at 40nm, 2.15 billion transistors still use up a lot of juice. A single Radeon HD 5870 still uses about 25W more than a Radeon HD 4890 under load. And adding a second board in CrossFire mode increases consumption by another 207W, bringing the total to 561W. Incidentally, that’s 71W more than a Radeon HD 4870 X2 and 79W more than a GeForce GTX 295. The worst power offender is a pair of GeForce GTX 285s in SLI, though, which takes system power up to 620W.
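For readers who want the absolute figures behind those deltas, here is a quick back-of-the-envelope sketch in Python. It uses only the system-level load numbers quoted above; the variable names are ours, and the derived values are simple subtraction rather than separate measurements.

```python
# System power under FurMark load, in watts, as quoted in the text.
CROSSFIRE_5870_W = 561      # two Radeon HD 5870s in CrossFire
SECOND_CARD_DELTA_W = 207   # extra draw from adding the second 5870
DELTA_VS_4890_W = 25        # single 5870 over a Radeon HD 4890 (approximate)
DELTA_VS_4870_X2_W = 71     # CrossFire 5870s over a Radeon HD 4870 X2
SLI_GTX_285_W = 620         # two GeForce GTX 285s in SLI

# Derived figures (plain arithmetic on the quoted numbers).
single_5870_w = CROSSFIRE_5870_W - SECOND_CARD_DELTA_W
hd_4890_w = single_5870_w - DELTA_VS_4890_W
hd_4870_x2_w = CROSSFIRE_5870_W - DELTA_VS_4870_X2_W
sli_over_crossfire_w = SLI_GTX_285_W - CROSSFIRE_5870_W

print(f"Single Radeon HD 5870:    {single_5870_w} W")
print(f"Radeon HD 4890 (approx.): {hd_4890_w} W")
print(f"Radeon HD 4870 X2:        {hd_4870_x2_w} W")
print(f"GTX 285 SLI over 5870 CF: {sli_over_crossfire_w} W")
```

Because the 25W gap to the Radeon HD 4890 is quoted as approximate, treat that card's derived figure as a ballpark.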

Making It More Efficient

So just how did ATI drop the Radeon HD 5870’s idle power to 27W, following up the 90W Radeon HD 4870? The most obvious improvement is a reduction in idle clocks. Sitting on the Windows 7 desktop, our 5870 sample dropped to 157 MHz core and 300 MHz memory clock rates. In comparison, the Radeon HD 4870 only dropped to 520/900 MHz.

| Reference Graphics Card | Idle Clocks (Core/Memory, MHz) | 3D Clocks (Core/Memory, MHz) |
|---|---|---|
| ATI Radeon HD 5870 | 157/300 | 850/1,200 |
| ATI Radeon HD 4870 X2 | 507/500 | 750/900 |
| ATI Radeon HD 4890 | 240/975 | 850/975 |
| ATI Radeon HD 4870 | 520/900 | 750/900 |
| Nvidia GeForce GTX 295 | 300/100 (600 MHz Shader) | 576/999 (1,242 MHz Shader) |
| Nvidia GeForce GTX 285 | 300/100 (600 MHz Shader) | 648/1,242 (1,476 MHz Shader) |
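To put the table in perspective, the short Python sketch below expresses each card's idle core clock as a share of its 3D core clock. The numbers are the core clocks from the table; the dictionary and function names are ours. Clock ratio is only a rough proxy for idle power, since voltage and memory behavior matter too.

```python
# (idle core MHz, 3D core MHz) taken from the table above.
CORE_CLOCKS_MHZ = {
    "ATI Radeon HD 5870": (157, 850),
    "ATI Radeon HD 4870 X2": (507, 750),
    "ATI Radeon HD 4890": (240, 850),
    "ATI Radeon HD 4870": (520, 750),
    "Nvidia GeForce GTX 295": (300, 576),
    "Nvidia GeForce GTX 285": (300, 648),
}


def idle_fraction(card):
    """Return the idle core clock as a fraction of the 3D core clock."""
    idle_mhz, load_mhz = CORE_CLOCKS_MHZ[card]
    return idle_mhz / load_mhz


# List cards from the deepest idle down-clock to the shallowest.
for card in sorted(CORE_CLOCKS_MHZ, key=idle_fraction):
    print(f"{card:<24} idles at {idle_fraction(card):.0%} of its 3D core clock")
```

By this measure the Radeon HD 5870 idles at under a fifth of its 3D core clock, while the Radeon HD 4870 stays at more than two-thirds.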

At the other end of the spectrum, Cypress does have a higher maximum board power than its predecessor. However, ATI has implemented direct communication between the VRM and the GPU to signal an over-current state, triggering the processor to throttle down until the board is back within its power spec. We’ll discuss this more on the following page, but running two 5870s in CrossFire, we were able to trigger this dynamic protection.
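The general idea of that protection loop can be sketched in a few lines of Python. This is a toy model, not ATI's implementation: the function name, the fixed 25 MHz step, the linear power-versus-clock model, and the wattage figures are all assumptions made up for illustration. Only the 850 MHz starting clock and the 157 MHz floor come from the clock table.

```python
def throttle_clock(clock_mhz, watts_per_mhz, limit_w, step_mhz=25, floor_mhz=157):
    """Lower the clock in fixed steps until the estimated power fits the limit.

    Power is modeled as clock_mhz * watts_per_mhz, a deliberate simplification.
    The loop stands in for the VRM flagging an over-current state to the GPU.
    """
    while clock_mhz * watts_per_mhz > limit_w and clock_mhz > floor_mhz:
        clock_mhz = max(floor_mhz, clock_mhz - step_mhz)
    return clock_mhz


# Illustrative numbers only: the W/MHz factors and the 190 W limit are invented.
print(throttle_clock(850, 0.25, 190.0))  # starts over the limit, so it steps down
print(throttle_clock(850, 0.20, 190.0))  # already within the limit, clock unchanged
```

A real board would respond to measured current rather than a formula, and would presumably clock back up once the load eases, which this sketch does not model.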

Speaking of CrossFire, when you have two 5870s running concurrently at idle, ATI says that the secondary board will drop into an ultra-low power state (purportedly sub-20W). We measured a 25W increase with a second board at idle, which is still not bad at all when you consider a pair of 4870s would be rated at 180W.

