ATI Radeon HD 5870: DirectX 11, Eyefinity, And Serious Speed

DirectX 11: More Notable Than DirectX 10?

I still remember when ATI launched the original Radeon in 2000. Brian Hentschel called me up to ask for my opinion on the chip’s name, and I remember thinking Radeon was horrible. Shows you how well I’d do in PR. That architecture emphasized the gaming experience, with two pixel pipelines and three texture units per pipeline. Though the Radeon had a fairly high texel rate, pixel fill rate was what won the day back then, and the decision to “go pretty” ceded the performance battle to Nvidia.

With R300 in 2002, ATI went the other way, putting its money behind eight pixel pipelines, each with a single texture unit. The focus was on performance, and the bet paid off. ATI smoked the GeForce4 Ti 4600 and fared well enough against an embarrassingly loud GeForce FX 5800 Ultra.

Performance or experience—which is better? With the Radeon HD 5870, ATI says it’s gunning for both. We’ve already covered the architecture, ATI’s key to delivering performance with Cypress. Now let’s take a closer look at the experience. ATI is relying on three components enabled through hardware here: its Eyefinity technology, Stream, and DirectX 11.

Right off the bat, I think it’s fairly safe to say that for all of the hoopla ATI made about its DirectX 10.1 support, real gamers in the real world never saw a tangible benefit. I’ve played S.T.A.L.K.E.R.; I’ve played H.A.W.X. The difference in experience between a DirectX 10 card and a DirectX 10.1 card is not worth mentioning. And for that matter, I’d also argue that DirectX 10 hasn’t had as profound an impact on the gaming experience as prior versions of the API. Why should we believe DirectX 11 is going to be any more prolific than its predecessor?

The reality of the situation is that DirectX 11 probably won’t be as impactful as DirectX 8 or 9, both of which introduced key shading capabilities. But it is seen as the next logical step for ISVs still working with DirectX 9, since it’s a superset of DirectX 10/10.1 that supports existing hardware as well as DX11 cards. Microsoft has made sure it’s easier to code with DX11, so we really are expecting to see faster uptake of the API than we saw with DirectX 10.

New Features

The notable features supported by DX11 are illustrated in the chart below.

| Feature | DirectX 10 | DirectX 10.1 | DirectX 11 |
|---|---|---|---|
| Tessellation | - | - | x |
| Shader Model 5.0 | - | - | x |
| DirectCompute 11 | - | - | x |
| DirectCompute 10.1 | - | x | x |
| DirectCompute 10 | x | x | x |
| Multi-Threading | x | x | x |
| BC6/BC7 Texture Compression | - | - | x |

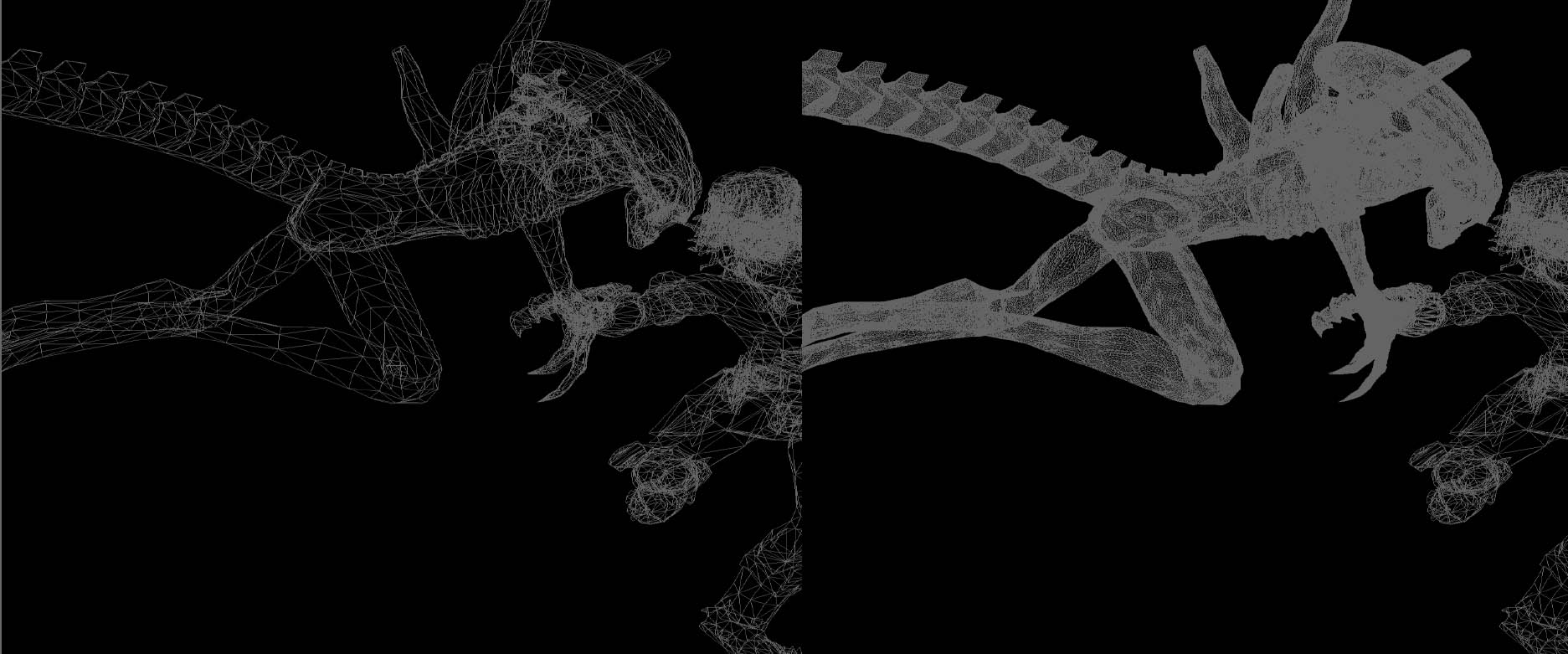

ATI has included tessellation support in its GPUs since 2001. And while I’m not sure how much play those early hardware implementations actually got in the game development world, they’ve helped pave the way for tessellation as it exists today, exposed through a number of different implementations (Catmull-Clark subdivision surface modeling, Bézier patch meshes, n-patches, displacement mapping, and adaptive/continuous tessellation). Of course, the benefits of tessellation are apparent—more polygons mean more detail and hence more realism. And because tessellation is now standardized as a component of DirectX 11, ISVs are more likely to lean on it without the frustration of only supporting one vendor’s hardware. In fact, we saw Rebellion demonstrate tessellation in its upcoming Aliens Vs. Predator title, launching in Q1 of next year.
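To put rough numbers on "more polygons," here is a minimal Python sketch. It assumes the simplest possible scheme, in which every pass splits each triangle into four. That is an illustrative assumption, not a description of how the DirectX 11 tessellator is specified, and the function name and the 1,000-triangle base mesh are hypothetical.

```python
def triangles_after_subdivision(base_triangles: int, levels: int) -> int:
    """Triangle count after `levels` passes of 1-to-4 subdivision.

    Assumption: each pass splits every triangle into four smaller ones.
    Real tessellators use per-patch factors, so treat this as a rough model.
    """
    if base_triangles < 0 or levels < 0:
        raise ValueError("base_triangles and levels must be non-negative")
    return base_triangles * 4 ** levels


if __name__ == "__main__":
    # Hypothetical coarse mesh of 1,000 triangles.
    for level in range(5):
        count = triangles_after_subdivision(1000, level)
        print(f"level {level}: {count} triangles")
```

Even under this crude model, geometry grows by a factor of four per pass, which is why generating the extra triangles on the GPU, rather than storing and transferring them, is attractive.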

DirectX 11 also introduces Shader Model 5.0, which offers developers a more object-oriented approach to coding HLSL. Ideally, this will help motivate ISVs to adopt the new API more quickly, since programming becomes cleaner and more efficient (for a more specific example of this in practice, check out Fedy’s DirectX 11 preview).

Gamers with multi-core processors should realize performance gains from DirectX 11-based titles by virtue of threading optimizations made to the API. For three years now, ATI and Nvidia have been shipping multi-threaded drivers capable of dispatching commands to the GPU in parallel. According to Nvidia, this was worth anywhere from 10 to 40 percent additional performance back in ’06. But DirectX 11 goes even further, allowing the application, DirectX runtime, and driver to run in separate threads. ATI gives the example of loading textures or compiling shaders in parallel with the main rendering thread. In essence, the threading is much more granular, which, almost ironically, should prove more beneficial to the CPU vendors, AMD and Intel, than to ATI and Nvidia. Wait. Yeah. That’s a real win for AMD, isn’t it?

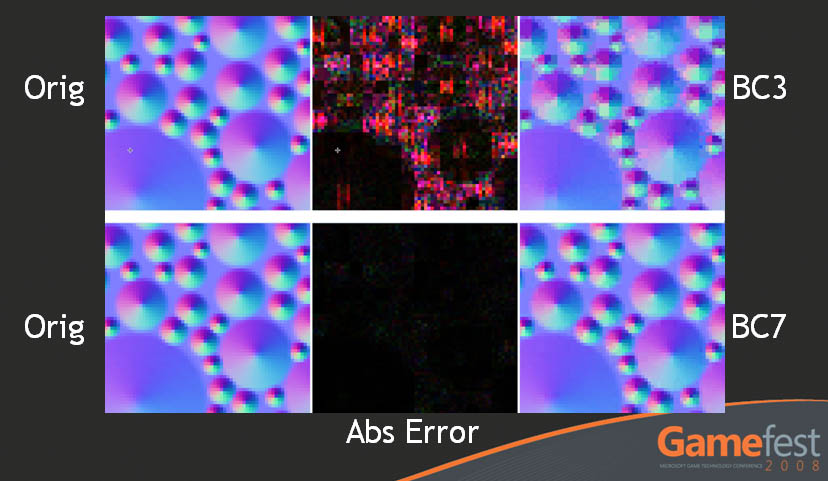
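The pattern ATI describes, with resource work happening alongside the main rendering thread, can be sketched in a few lines of Python. This is only a structural illustration under stated assumptions: it makes no Direct3D calls, the function names (`load_texture`, `compile_shader`, `render_frames`) and asset names are hypothetical, and the `time.sleep` calls merely stand in for real I/O, compilation, and draw work.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def load_texture(name: str) -> str:
    time.sleep(0.05)  # stand-in for reading and uploading a texture
    return f"texture:{name}"


def compile_shader(name: str) -> str:
    time.sleep(0.05)  # stand-in for shader compilation
    return f"shader:{name}"


def render_frames(count: int) -> int:
    frames = 0
    for _ in range(count):
        time.sleep(0.01)  # stand-in for issuing one frame's draw calls
        frames += 1
    return frames


def run_demo() -> tuple[int, str, str]:
    # Worker threads handle resource preparation...
    with ThreadPoolExecutor(max_workers=2) as pool:
        texture_job = pool.submit(load_texture, "rock_diffuse")
        shader_job = pool.submit(compile_shader, "water_ps")
        # ...while the main thread keeps rendering without waiting on them.
        frames = render_frames(5)
        return frames, texture_job.result(), shader_job.result()


if __name__ == "__main__":
    print(run_demo())
```

The point of the structure is that the render loop never blocks on asset preparation; it only collects the results once they are needed.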

Improved texture compression is another one of those developer-oriented enhancements that will benefit gamers through greater rendering quality without the expected corresponding performance hit to memory bandwidth. DirectX 11 includes two new block compression formats: BC6 and BC7. BC6 enables up to 6:1 compression of 16-bit high dynamic range textures with hardware decompression support. BC7 delivers up to 3:1 compression of eight-bit textures and normal maps.
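The quoted ratios fall out of simple arithmetic. The sketch below assumes that both formats store each 4x4 block of texels in 128 bits, and that the uncompressed source has three color channels; the helper name `compression_ratio` is hypothetical.

```python
BLOCK_BITS = 128        # assumed size of one compressed BC6/BC7 block
TEXELS_PER_BLOCK = 16   # each block covers a 4x4 texel region


def compression_ratio(channels: int, bits_per_channel: int) -> float:
    """Uncompressed size of a 4x4 block divided by its compressed size."""
    uncompressed_bits = TEXELS_PER_BLOCK * channels * bits_per_channel
    return uncompressed_bits / BLOCK_BITS


if __name__ == "__main__":
    # Three-channel, 16-bit-per-channel HDR source (the BC6 case).
    print("BC6:", compression_ratio(channels=3, bits_per_channel=16))
    # Three-channel, 8-bit-per-channel source (the BC7 case).
    print("BC7:", compression_ratio(channels=3, bits_per_channel=8))
```

Under those assumptions the two calls yield 6.0 and 3.0, matching the 6:1 and 3:1 figures above.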
