A Sexy Storage Spree: The 3 GB/s Project, Revisited

We repeat our extreme SSD RAID project for the third time and arrange 16 Samsung 470-series SSDs based on MLC NAND in a RAID 0 array to reach new levels of performance. We weren't as fortunate this time, but not for the reasons you might suspect.

Benchmark Results: Throughput

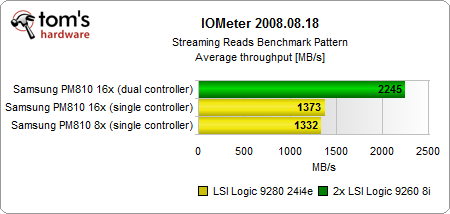

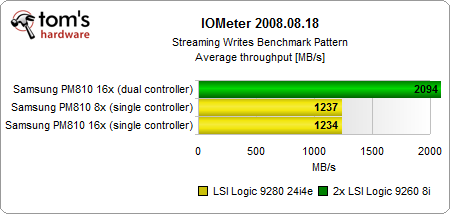

With two controllers, the RAID array delivers strong throughput. The averages are still somewhat sobering, though: the fastest configuration manages 2245 MB/s for reads and 2094 MB/s for writes. Those are good results, but well short of the magical 3 GB/s threshold. Breaking the data down by queue depth paints a very different picture.
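To put the averages in perspective, here is a minimal sketch of the arithmetic behind the gap. It assumes the 3 GB/s target means 3000 MB/s (decimal units); the helper name `shortfall` is made up for illustration.

```python
# Average throughput of the fastest configuration, as quoted in the text (MB/s).
AVG_READ_MBPS = 2245
AVG_WRITE_MBPS = 2094

# Assumption: the 3 GB/s goal is treated as 3000 MB/s (decimal units).
TARGET_MBPS = 3000


def shortfall(measured_mbps, target_mbps=TARGET_MBPS):
    """Return (gap to the target in MB/s, fraction of the target reached)."""
    return target_mbps - measured_mbps, measured_mbps / target_mbps


for label, value in (("read", AVG_READ_MBPS), ("write", AVG_WRITE_MBPS)):
    gap, fraction = shortfall(value)
    print(f"{label}: {gap} MB/s short, {fraction:.1%} of target")
```

Under that assumption, the averaged reads reach roughly three quarters of the target and the averaged writes roughly 70 percent.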

Due to bandwidth limitations of the LSI cards, it really doesn’t matter whether we use the 9280-24i4e with eight or 16 SSDs. We reach the highest read and write rates using the dual-controller solution, driven by two 9260-8i cards, as each is connected to a different PCI Express 2.0 port.
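As a rough sanity check on why a separate PCI Express 2.0 port per card helps, the sketch below estimates the theoretical link ceiling. The 5 GT/s signaling rate and 8b/10b encoding come from the PCI Express 2.0 specification; the x8 link width is an assumption, since the text does not state it. Real transfers lose more to protocol overhead, and the text attributes the single-card limit to the LSI cards themselves rather than to the slot.

```python
# PCI Express 2.0 signals at 5 GT/s per lane and uses 8b/10b encoding,
# so 8 of every 10 transferred bits carry payload.
GIGATRANSFERS_PER_SEC = 5.0
ENCODING_EFFICIENCY = 8 / 10


def pcie2_bandwidth_mbps(lanes):
    """Theoretical one-direction bandwidth in MB/s, before protocol overhead."""
    bits_per_sec = GIGATRANSFERS_PER_SEC * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_sec / 8 / 1e6


# Assumption: each controller sits in an x8 link (not stated in the article).
LANES_PER_CARD = 8

per_card = pcie2_bandwidth_mbps(LANES_PER_CARD)
print(f"one card:  {per_card:.0f} MB/s")
print(f"two cards: {2 * per_card:.0f} MB/s")
```

If the x8 assumption holds, each link has a ceiling of about 4000 MB/s per direction, so two cards on separate ports double the available headroom as well as the number of controllers doing the work.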

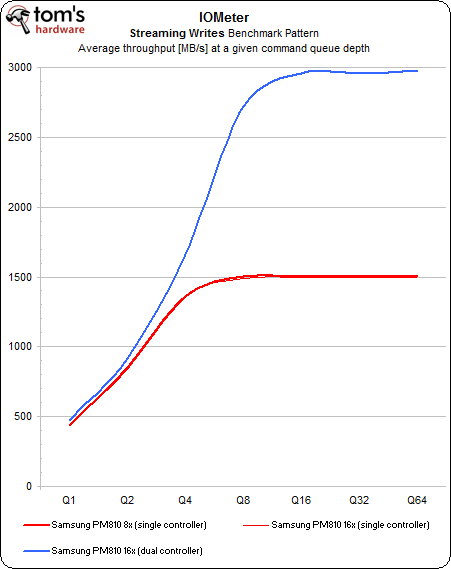

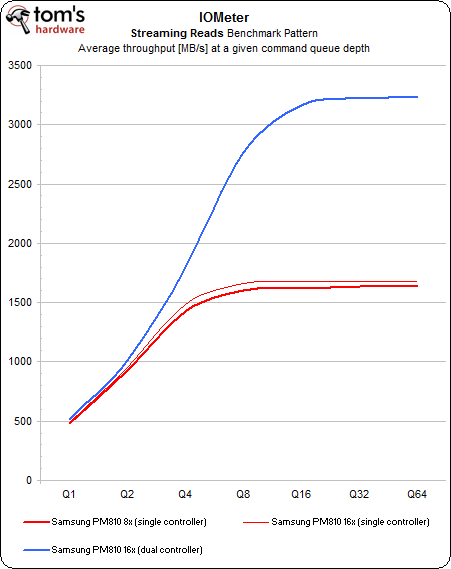

At low queue depths, the different test systems are pretty close to each other. But at a queue depth of four pending commands, the dual-controller system gets going. At QD=8, it really shows its advantage. There, it lands just below the 3 GB/s mark when writing and significantly above it when reading.

When we connect only one controller to our test system, the performance limit is reached much sooner. The array tops out at 1500 MB/s for writes and around 1600 MB/s for reads.


burnley14 Wow, throughput in GB/s. Makes my paltry single SSD look shameful. How fast did Windows boot up out of curiosity?

the associate Overkill benches like this are awesome, I can't wait to see the crazy shit were gona have in 10 years from now.

(quoting burnley14: "How fast did Windows boot up out of curiosity?")

I'd also like to know =D

abhinav_mall How many organs I will have to sell to get such a setup?

My 3 year old Vista takes 40 painful seconds to boot.

knowom You can use super cache/super volume on SSD's or even USB thumb drives to dramatically improve the I/O and bandwidth at the expense of using up a bit of your system ram still the results are impressive and works on HD's as well, but they suffer from access times no matter what.

I don't even think I'd bother getting a SSD anymore after using super volume on a USB thumb drive and SSD the results are nearly identical regardless of which is used and thumb drives are portable and cheaper for the density you get for some messed up reason.

knowom I'd be really interested to see super cache/super volume used on this raid array actually it can probably boost it further or should be able to in theory.

x3style (quoting abhinav_mall: "How many organs I will have to sell to get such a setup? My 3 year old Vista takes 40 painful seconds to boot.") Wow people still use vista? Was that even an OS? It felt like some beta test thing.

nitrium I suspect you'll all be VERY disappointed at how long Windows takes to boot (but I'd also like to know). Unfortunately, most operations in Windows (such as loading apps, games, booting, etc) occur at QD 1 (average is about QD 1.04, QD > 4 are rare). As you can see on Page 7, at QD1 it only gets about 19 MB/sec - the SAME speed as basically any decent single SSD manufactured in the last 3 years.

kkiddu (quoting mayankleoboy1: "holy shit! thats fast. how about giving them as a contest prize?")

I WANT 16 OF THOSE !

For God's sake, that's $7000 worth of hardware, not including the PC. DAMN DAMN DAMN !! 3 gigabytes per second. And to think, that while on dial-up 4 years back, I downloaded at 3 kilobytes per second (Actually it was more like 2.5 KB/s).