Arc A770 Beats RTX 4090 In Early 8K AV1 Decoding Benchmarks

CapFrameX tests 8K AV1 decoding on the latest GPUs.


The developer of CapFrameX, a popular capture and analysis tool, has published a performance comparison of AMD's Radeon RX 6800 XT (RDNA 2), Intel's Arc A770 (Alchemist), and Nvidia's GeForce RTX 3090 (Ampere) and GeForce RTX 4090 (Ada Lovelace) in AV1 video decoding at 8K resolution. The results he obtained are pretty surprising.

The best graphics cards, like Nvidia's GeForce RTX 3090 or RTX 4090, offer formidable performance in demanding games at high resolutions, unlike Intel's Arc A770, which targets mainstream gamers. But when it comes to high-resolution video playback, everything comes down to video decoding, which is performed by a special-purpose hardware unit whose performance does not depend on the overall capabilities of the GPU.

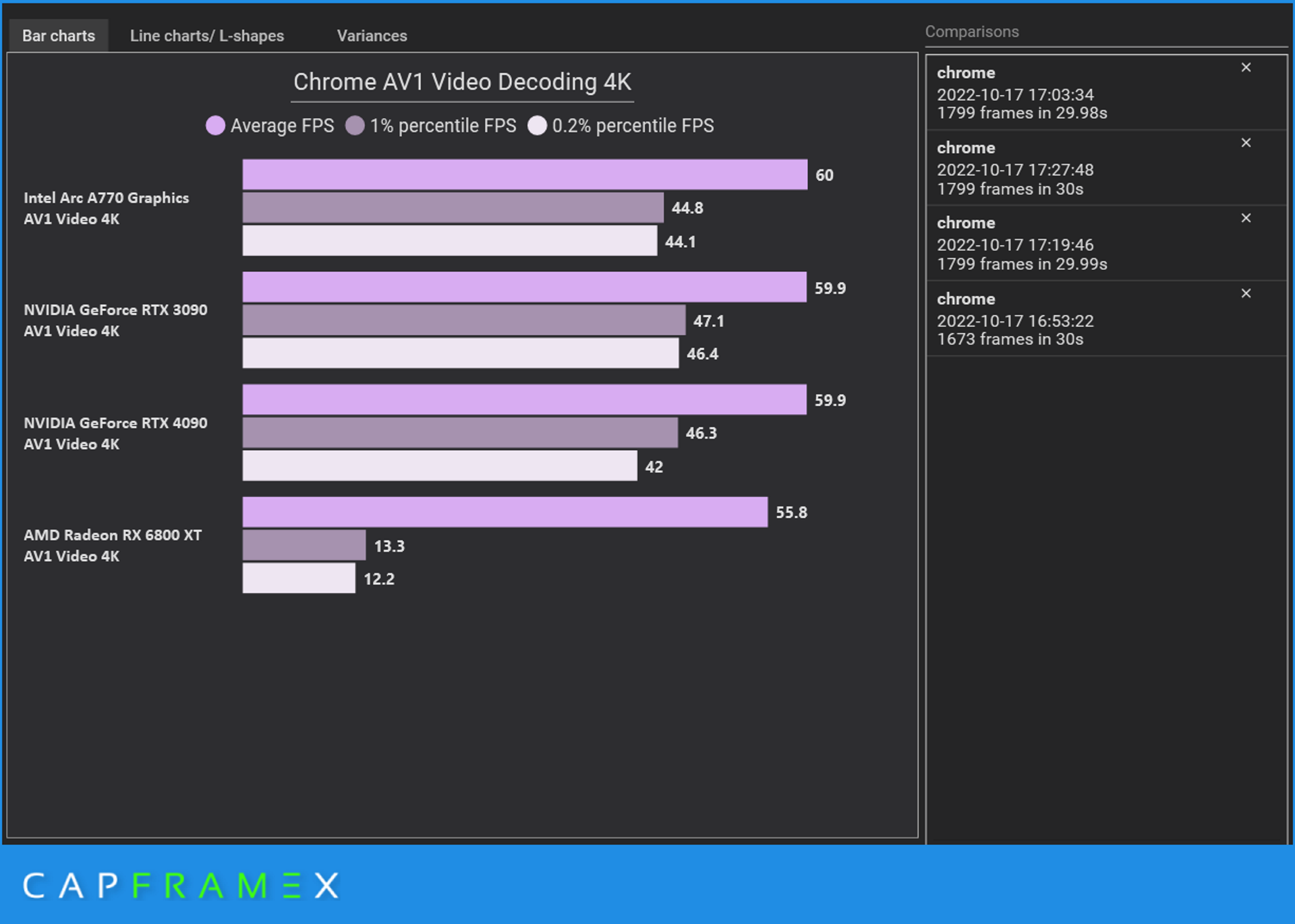

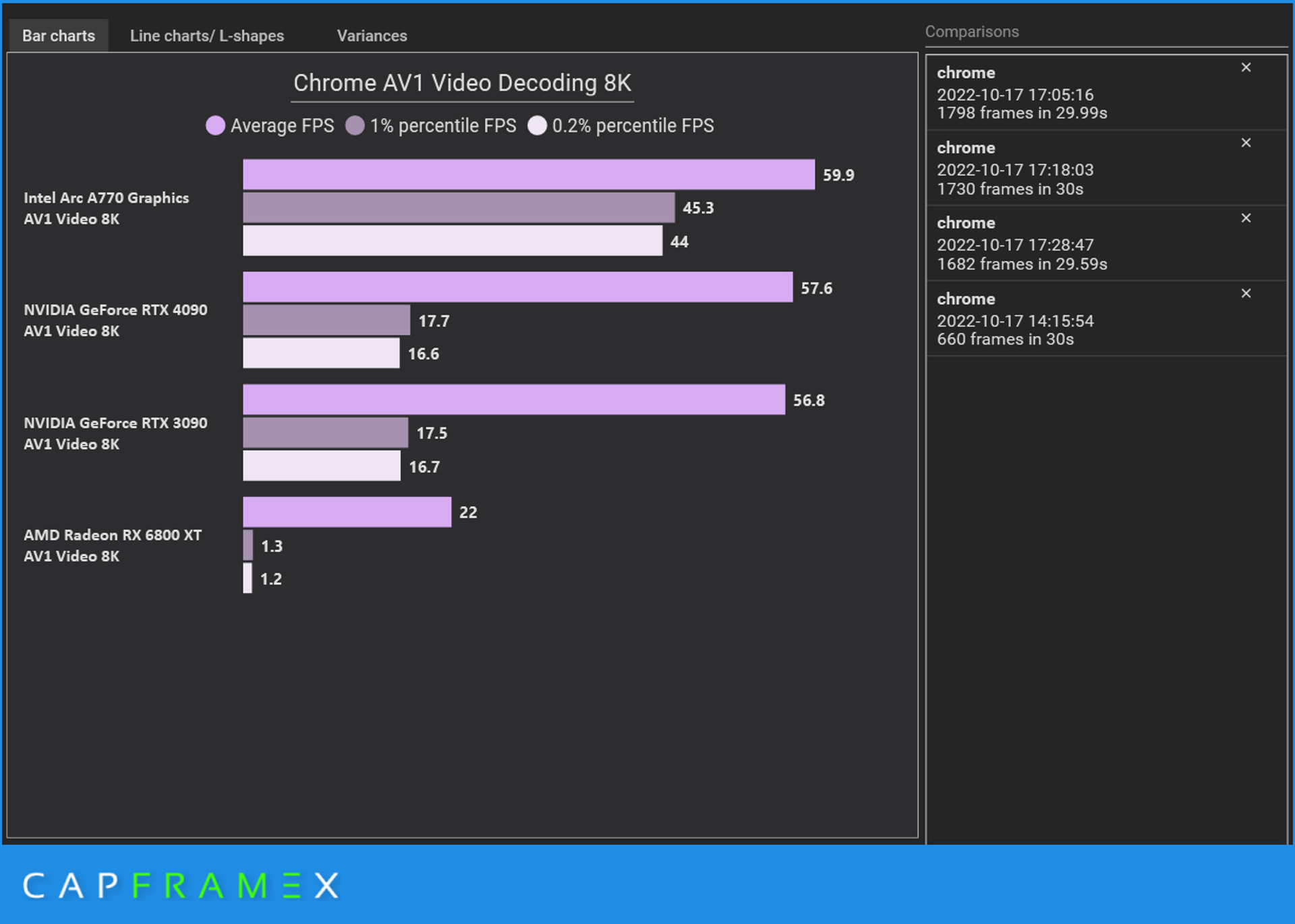

To test the decoding capabilities of modern graphics processors, the developer of CapFrameX took the "Japan in 8K 60 FPS" video from YouTube and played it back at 4K and 8K resolutions in the Chrome browser. Ideally, every GPU should deliver a constant 60 FPS, and the occasional drops captured by the 0.2% and 1% low figures should not be severe enough to ruin the experience.
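For readers unfamiliar with "low" figures, the sketch below shows one way an average FPS and 1%/0.2% lows can be derived from a list of per-frame times. It is a minimal illustration under an assumption: here a "p% low" is taken as the average FPS over the slowest p% of frames. CapFrameX and other tools may define these metrics differently (for example, as a percentile of frame times), and the function name `fps_metrics` is hypothetical.

```python
import math


def fps_metrics(frametimes_ms: list[float]) -> dict[str, float]:
    """Average FPS plus 1% and 0.2% 'low' FPS from per-frame times in milliseconds.

    Assumption: a 'p% low' is the average FPS over the slowest p% of frames.
    Other tools may use a percentile-based definition instead.
    """
    if not frametimes_ms:
        raise ValueError("need at least one frame time")

    # Average FPS = frames shown / total elapsed seconds.
    avg_fps = 1000.0 * len(frametimes_ms) / sum(frametimes_ms)

    def low(percent: float) -> float:
        # Sort slowest (longest) frames first and keep at least one frame.
        worst = sorted(frametimes_ms, reverse=True)
        count = max(1, math.ceil(len(worst) * percent / 100.0))
        subset = worst[:count]
        return 1000.0 * len(subset) / sum(subset)

    return {"avg": avg_fps, "1% low": low(1.0), "0.2% low": low(0.2)}


# Hypothetical 600-frame capture: steady 60 FPS with three 50 ms hitches.
sample = [1000.0 / 60.0] * 597 + [50.0] * 3
metrics = fps_metrics(sample)
print({name: round(value, 1) for name, value in metrics.items()})
```

With these made-up numbers the average stays close to 60 FPS (about 59.4) while the 0.2% low falls to 20 FPS, which is why a video can look fine "on average" and still stutter visibly.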

In 8K, Intel's Arc A770 delivers smooth playback with 60 FPS on average and drops to 44 FPS in 0.2% of cases. By contrast, Nvidia's GPUs averaged 56.8 to 57.6 FPS and fell as low as 16.7 FPS at times, making the video uncomfortable to watch. Given how close the results of Nvidia's AD102 and GA102 are, we can only wonder whether Nvidia's current driver and Google Chrome can take advantage of Nvidia's latest NVDEC hardware (and whether Nvidia updated that hardware compared to GA102). With AMD's Radeon RX 6800 XT, the 8K video was 'unwatchable' as it suffered from low frame rates and stuttering.

In general, Intel offers industry-leading video playback support even at 8K. By contrast, Nvidia's software may need to catch up with Intel's when it comes to high-resolution AV1 video playback. While AMD's Navi 21 formally supports AV1 decoding, 8K resolution does not look like a nut it can crack, at least with current software.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

PlaneInTheSky: The small difference between Nvidia and Intel is not the story.

The real story is AMD with a $640 GPU being unable to properly decode a 4K YouTube video, even though AMD claims it can decode AV1.

pclaughton (replying to PlaneInTheSky): You don't think Nvidia's premier card getting beaten by one that costs over a thousand dollars less is a story? I'd say you're unnecessarily taking only one piece of information from this; both can be a big deal.

escksu (replying to pclaughton): I don't think it's a big deal. The 4090 is marketed as a gaming card, not a workstation card or a dedicated video encoder/decoder card.

The 6900 XT still has some driver issues. Its average is good, but its 1% lows are bad.

KyaraM (replying to escksu): Last time I checked, neither was the A770. Yet here we are. Back to square one.

That said, Intel's cards do seem to be very well suited for productivity tasks while still being decent enough for gaming, especially at 1440p and 4K. So if that is your aim, it looks like Intel might be the way to go, especially considering cost as well.

KraakBal: It looks like RDNA 2 might only support up to 8K at 30 FPS. Can you really not watch at 4K with it?

KraakBal: Being able to watch quality video from YouTube and Netflix on my GPU to save my battery or power bill isn't a "workstation" use case, and it should be prioritised on all new GPUs!

Please test Linux too! It's not quite there yet when it comes to hardware support for video, especially in browsers.

richardvday: I have to say I'm a little surprised by these results, but unless you're among the 1% of people out there (probably less than 1%) who actually have an 8K monitor, I wouldn't be too concerned about them.

Nvidia probably just needs to update its software, and hopefully AMD has fixed its hardware/software for this new generation.

At least Intel has something to be proud of now 😅😅

I still wouldn't buy one, but for some people I'm sure it would work just fine.

junglist724 (replying to escksu's "4090 is marketed as a gaming card, not a workstation card nor dedicated video encoder/decoder card"): Since when is watching YouTube a workstation task?

GenericUser (replying to junglist724): I guess it depends on how bored you are at work.