Blender 4.0 Released and Tested: New Features, More Demanding

Testing the latest open-source 3D rendering application.

The open-source Blender 3D rendering application has been a staple of our professional GPU benchmarks for a while now. Earlier this week, Blender 4.0 was released to the public. Alongside the main application, Blender Benchmark was also updated. Curious to see if there were any changes, we grabbed the new version and set about testing on some of the best graphics cards from Nvidia, AMD, and Intel.

Before we get to the performance results, let's quickly note that there are a host of changes. Most of these are for people that actually do 3D rendering for real work, whereas I only use it to measure performance on GPUs. Changes to the Cycles engine may result in lower performance in some cases, though it's also possible that future updates could include optimizations that would restore any lost performance.

If you're a professional who uses Blender already, and the new features are something you need, it may not matter too much whether Blender 4.0 runs slower or faster than previous releases. However, we use the benchmark application for comparing GPUs, so it's important to look at what has or hasn't changed in terms of performance. Note that, as far as we're aware, benchmark scores from different Blender versions are not supposed to be directly comparable. Scenes rendered with the default settings in Blender 4.0 will also look slightly different in a variety of ways from the same scenes in Blender 3.x.

Our test PC uses a Core i9-12900K and is running the latest AMD, Intel, and Nvidia graphics drivers: 23.11.1, 4953, and 546.17, respectively. We are using the standard drivers in all cases, meaning "Game Ready" rather than "Studio" in the case of Nvidia, the same as we've done in previous testing. However, we also tried the latest 546.01 Nvidia Studio drivers with the RTX 4070 to see whether there was any difference in performance; the Studio drivers were 0.5% slower than the non-Studio drivers, which is within the margin of error.

| GPU | Blender v3.6.0 Geomean | Blender v4.0.0 Geomean | Percent Change |
|---|---|---|---|
| RTX 4090 | 4213.9 | 3685.5 | -12.5% |
| RTX 4080 | 3119.6 | 2790.2 | -10.6% |
| RTX 4070 | 1943.2 | 1743.5 | -10.3% |
| RTX 4060 | 1160.3 | 1036.1 | -10.7% |
| RTX 3090 Ti | 2228.0 | 1943.2 | -12.8% |
| RX 7900 XTX | 1252.9 | 1260.7 | 0.6% |
| RX 7900 XT | 1144.1 | 1094.9 | -4.3% |
| RX 7800 XT | 752.6 | 720.8 | -4.2% |
| RX 7600 | 422.5 | 394.3 | -6.7% |
| Arc A770 16GB | 696.5 | 679.0 | -2.5% |
| Arc A750 | 693.7 | 672.7 | -3.0% |
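For readers who want to check the Percent Change column, here is a minimal Python sketch that recomputes it from the two geomean columns. The scores are copied from the table; the names `SCORES`, `percent_change`, and `changes` are made up for this illustration and are not part of Blender Benchmark.

```python
# Geomean scores copied from the table: (Blender 3.6.0, Blender 4.0.0).
SCORES = {
    "RTX 4090": (4213.9, 3685.5),
    "RTX 4080": (3119.6, 2790.2),
    "RTX 4070": (1943.2, 1743.5),
    "RTX 4060": (1160.3, 1036.1),
    "RTX 3090 Ti": (2228.0, 1943.2),
    "RX 7900 XTX": (1252.9, 1260.7),
    "RX 7900 XT": (1144.1, 1094.9),
    "RX 7800 XT": (752.6, 720.8),
    "RX 7600": (422.5, 394.3),
    "Arc A770 16GB": (696.5, 679.0),
    "Arc A750": (693.7, 672.7),
}


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new in percent (negative means slower)."""
    return (new - old) / old * 100.0


def changes(scores=SCORES):
    """Map each GPU to its percent change, rounded to one decimal place."""
    return {gpu: round(percent_change(old, new), 1)
            for gpu, (old, new) in scores.items()}


for gpu, delta in changes().items():
    print(f"{gpu:<14} {delta:+.1f}%")
```

The change is measured relative to the older 3.6.0 score, so a negative value means the card scored lower in Blender 4.0.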

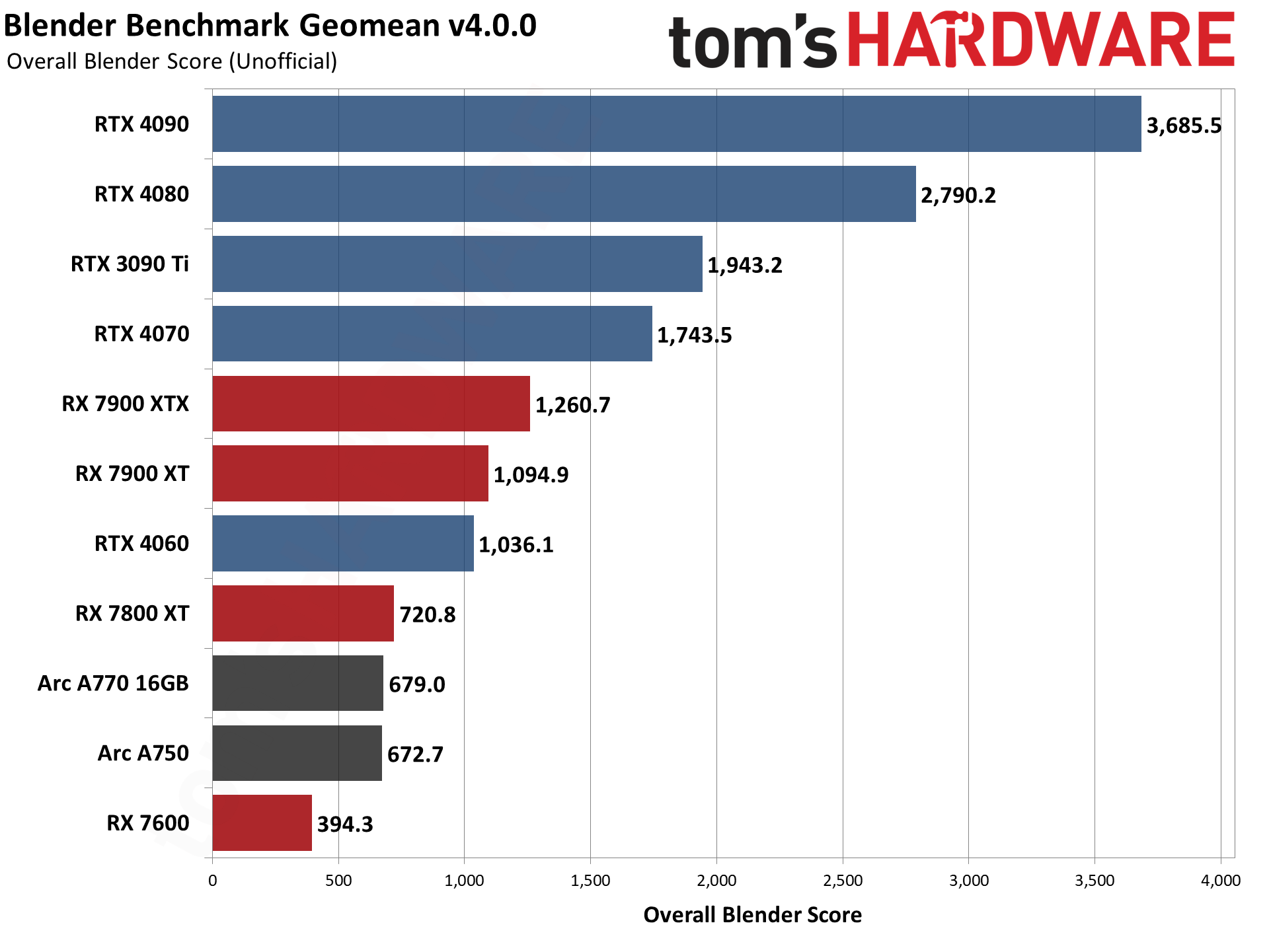

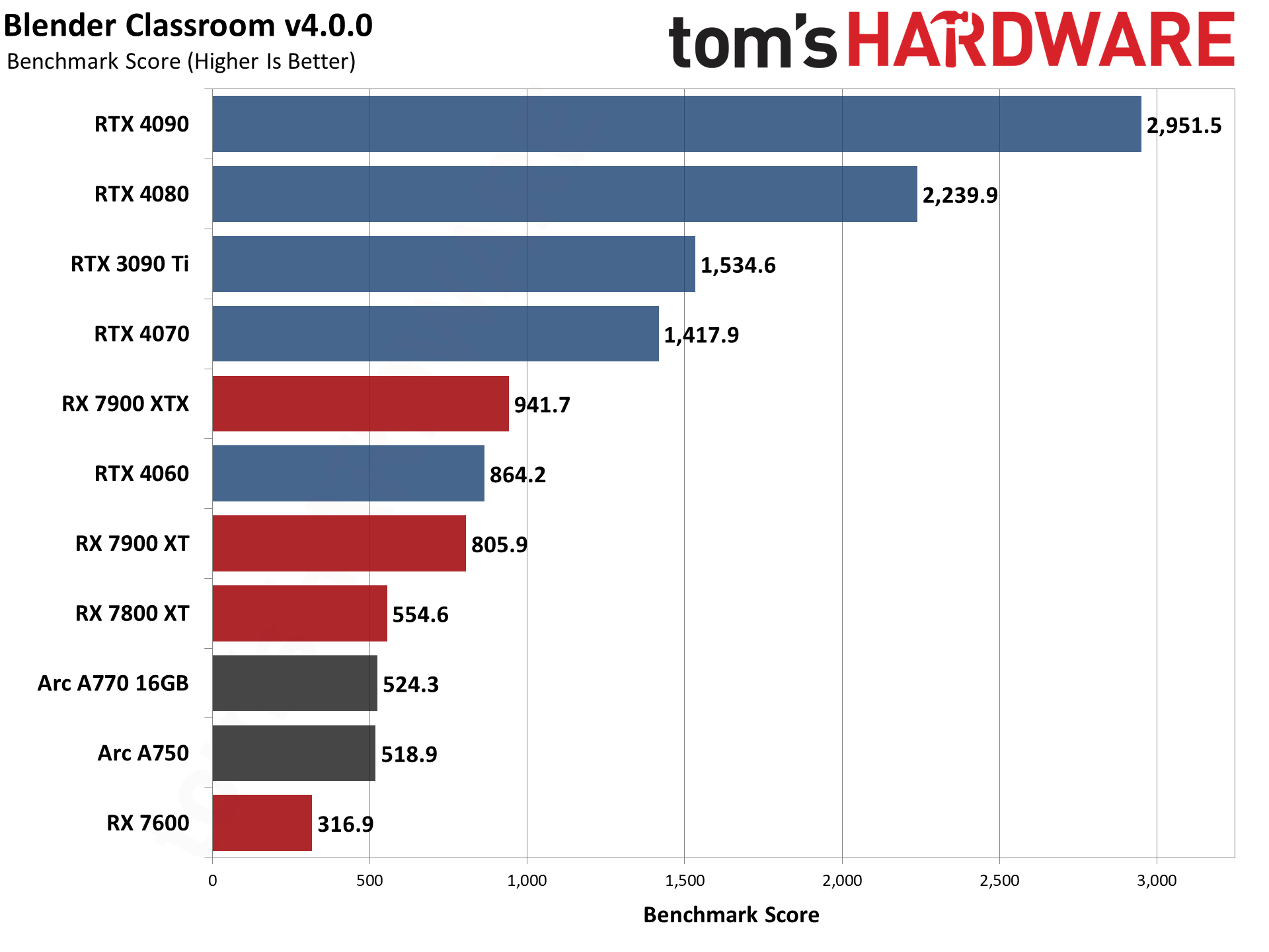

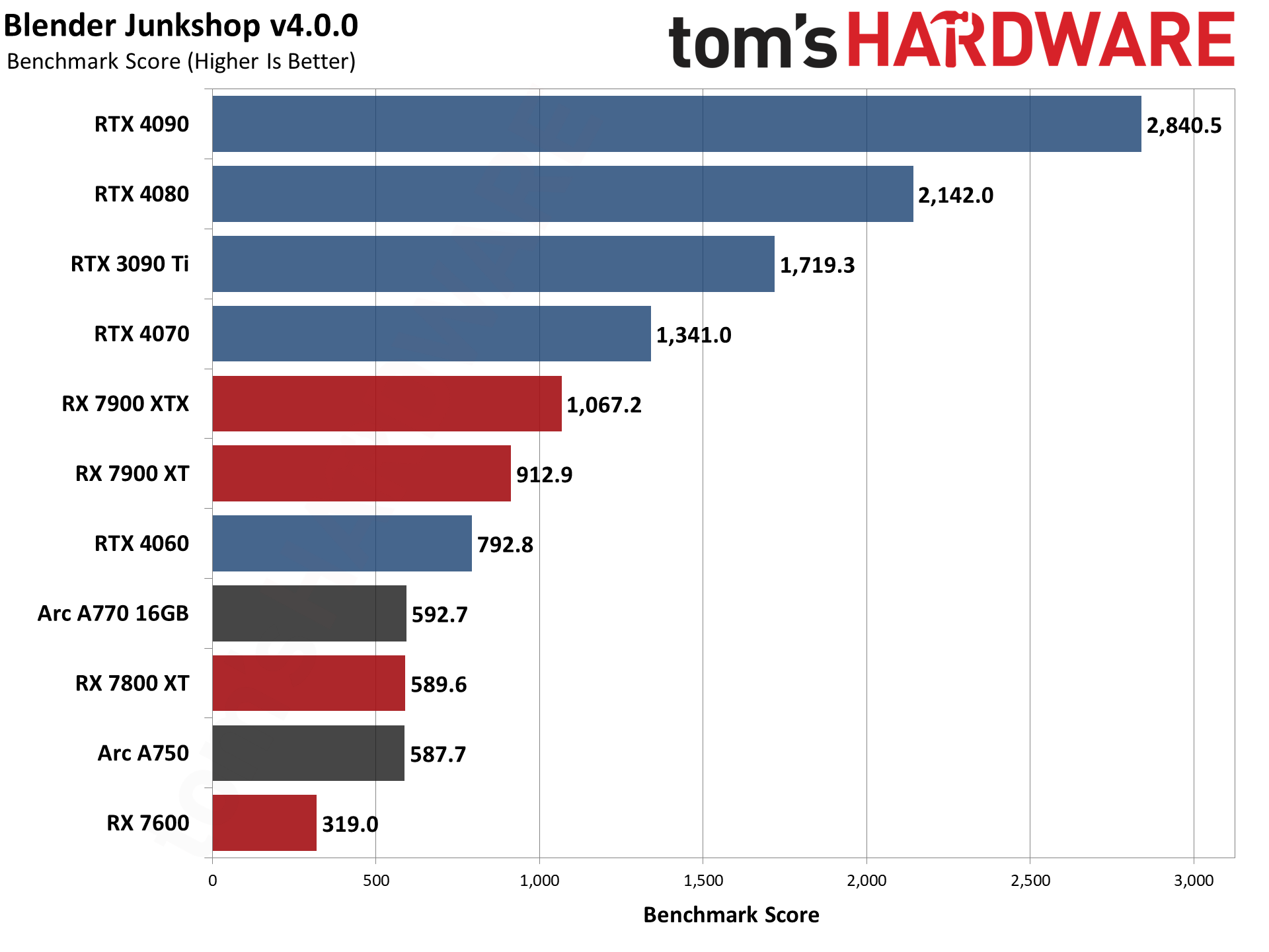

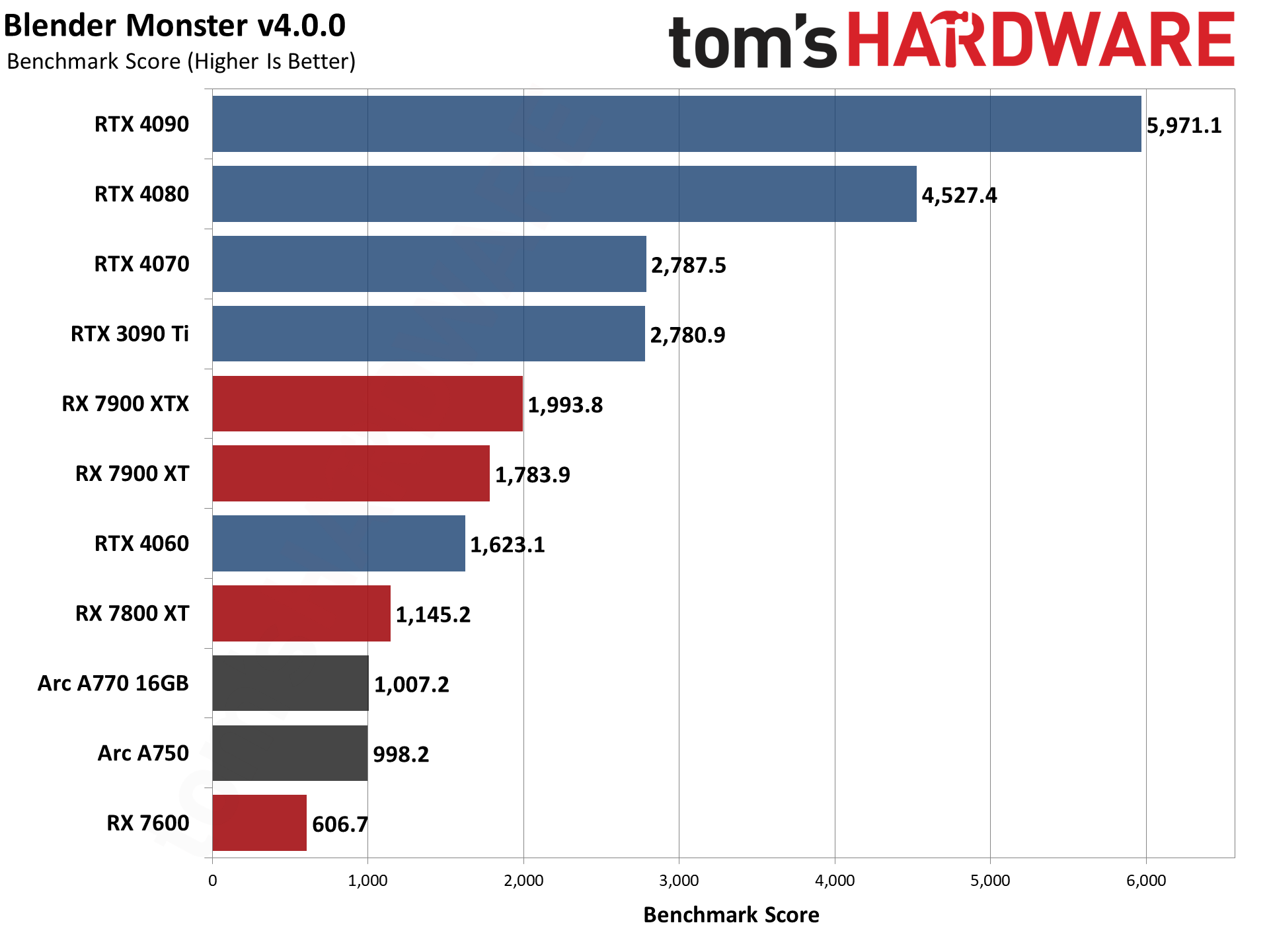

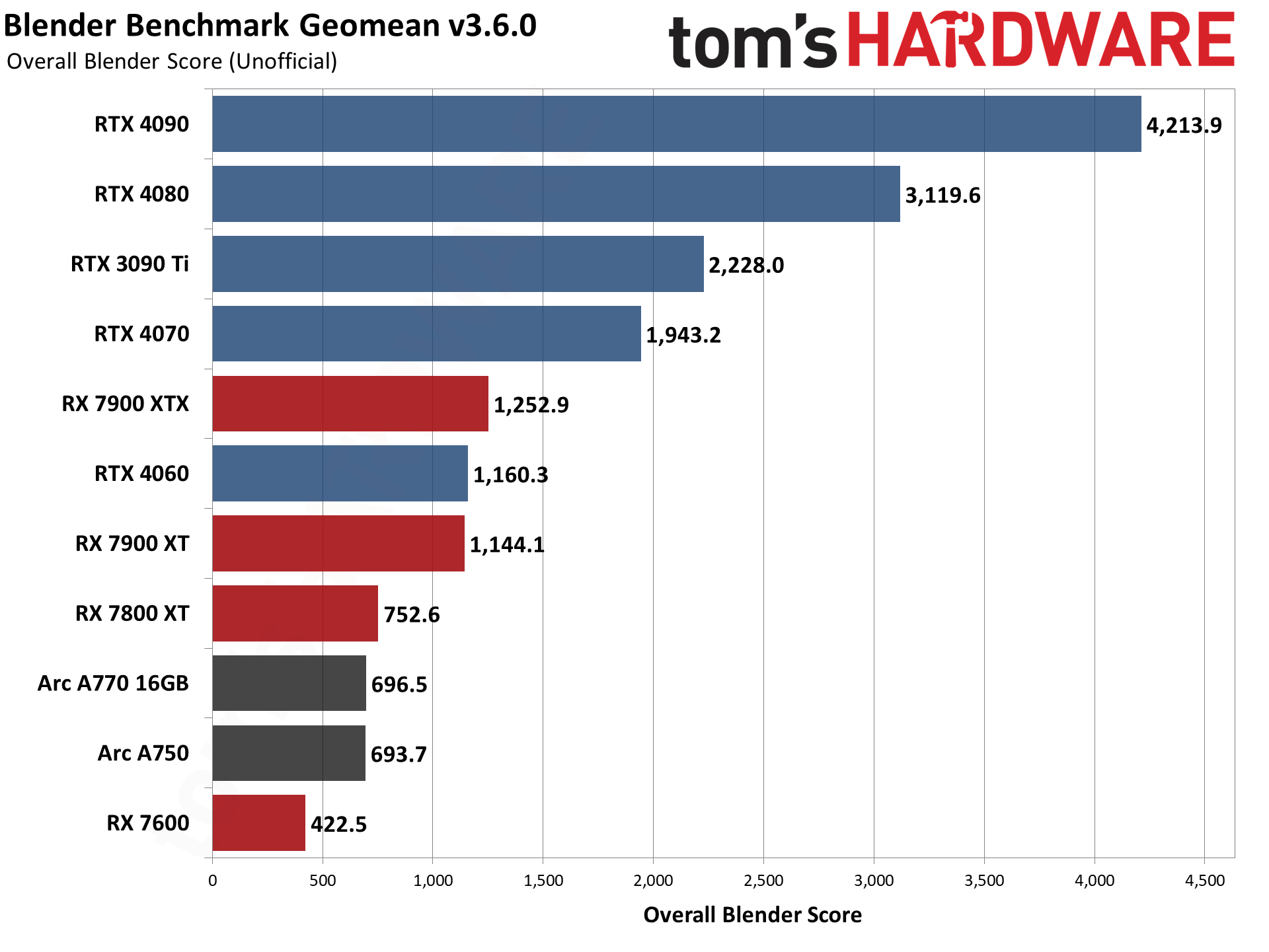

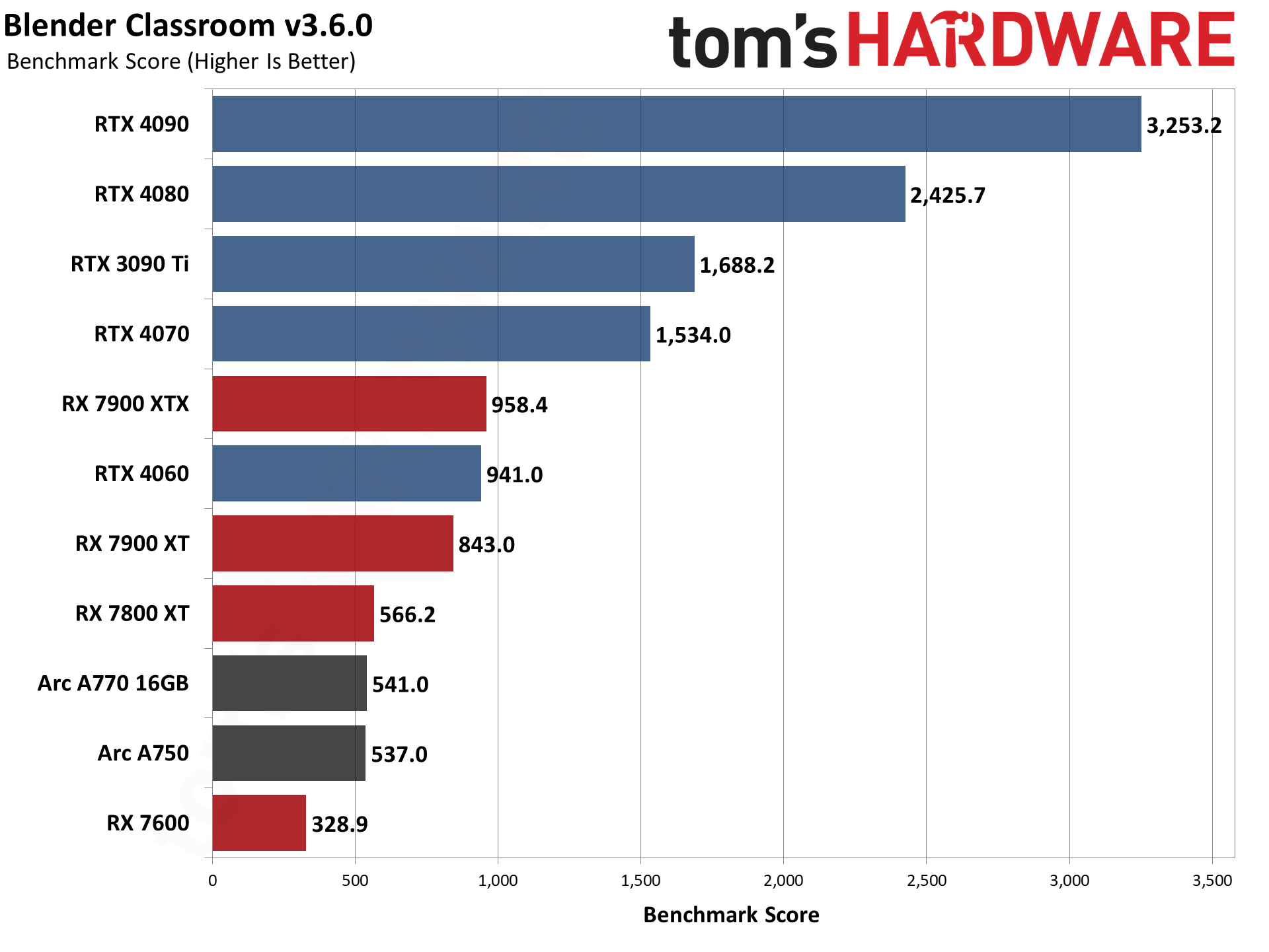

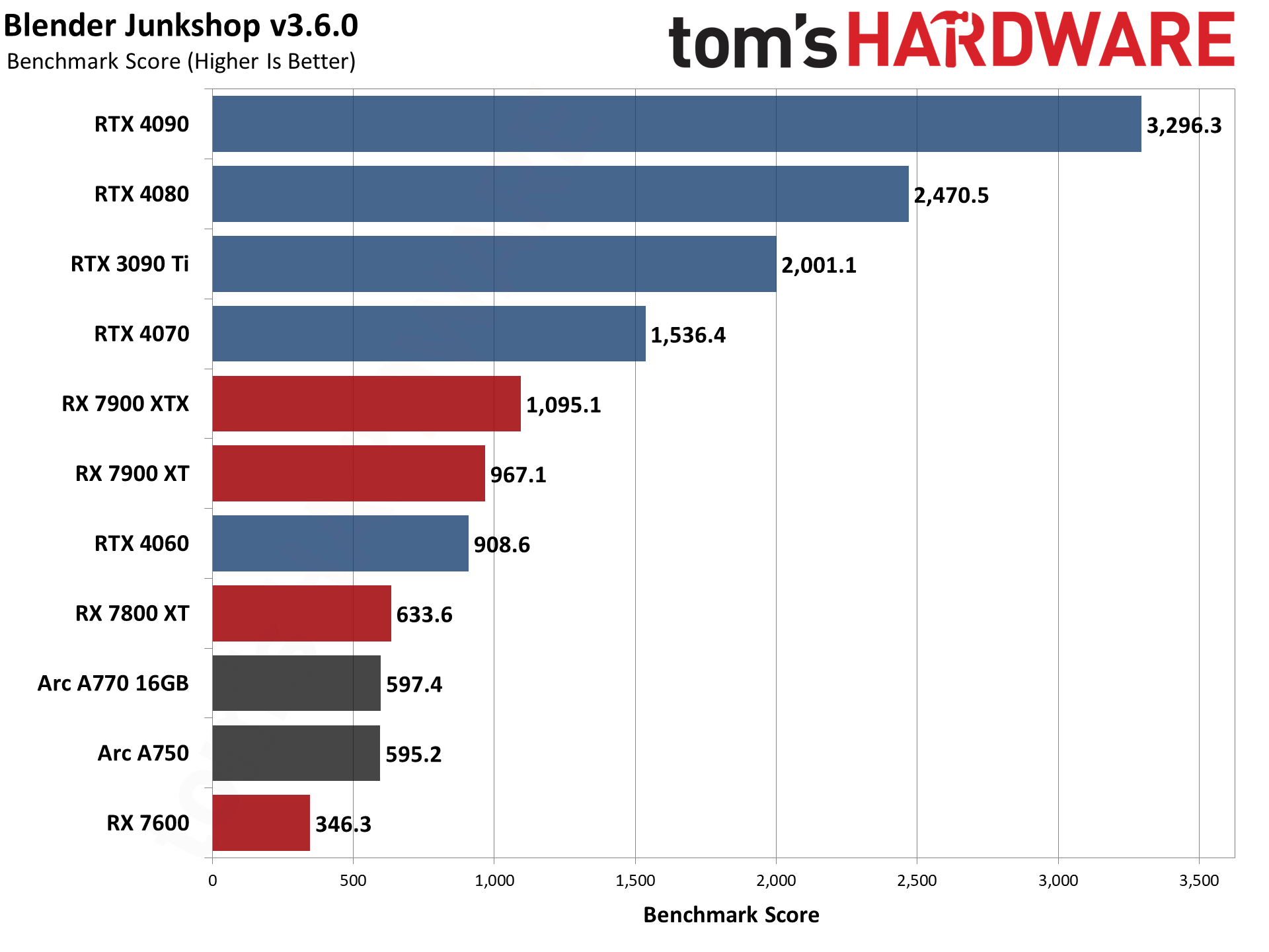

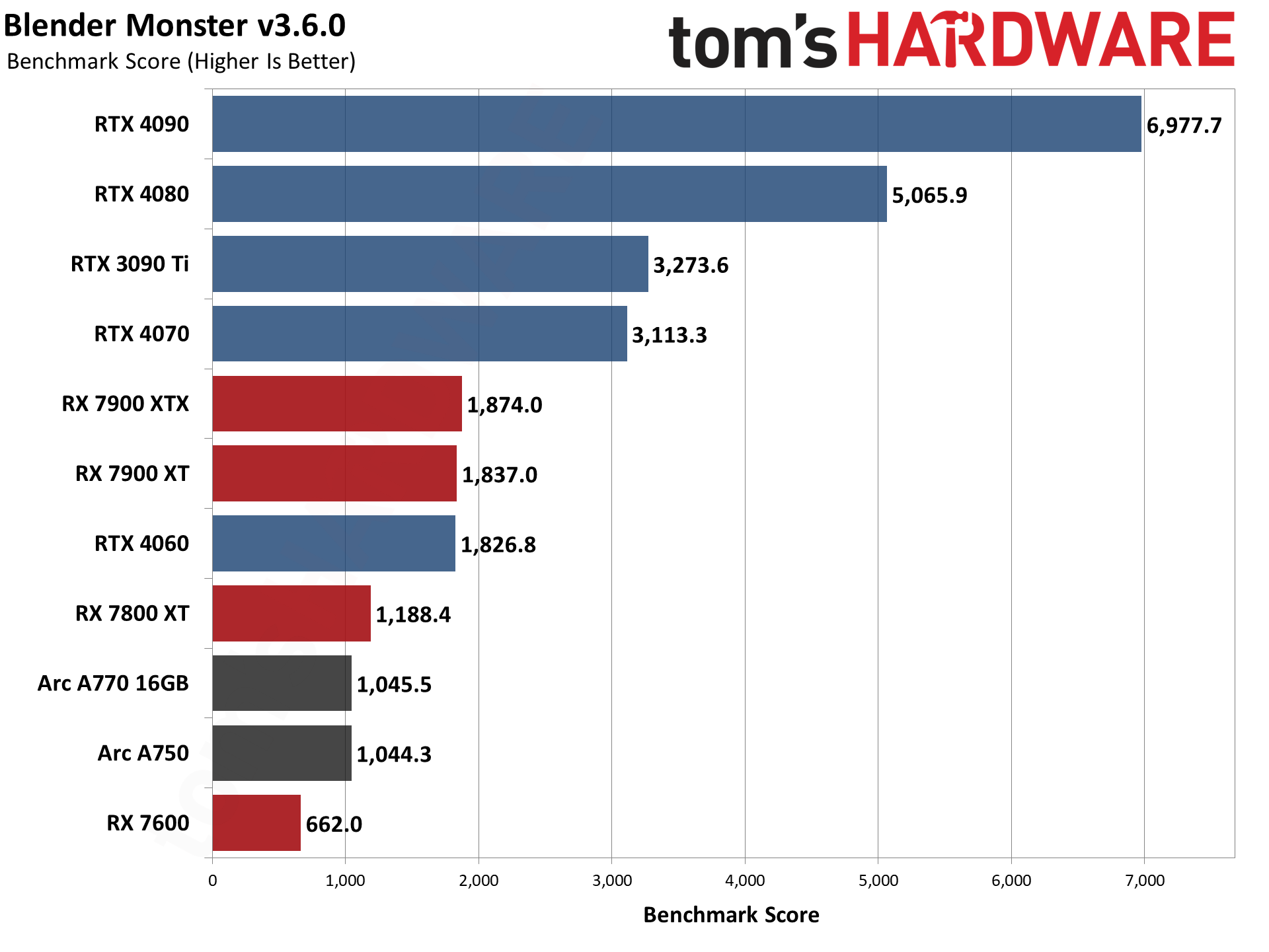

We've created two separate galleries showing performance with Blender 4.0 and Blender 3.6. In general, the rankings are nearly the same, but it can be hard to sort out the changes just by looking at the bar charts. We've also included a table of the geometric mean results to better highlight the differences.
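As a rough illustration of what a geometric mean does, the sketch below combines several per-scene scores into one number. The per-scene values are hypothetical placeholders rather than measured results, and the helper name `geometric_mean` is ours; this is not how Blender Benchmark itself reports scores.

```python
import math


def geometric_mean(values):
    """n-th root of the product of n positive values, computed via logarithms."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical per-scene scores for a single GPU (not measured data).
scene_scores = [100.0, 200.0, 400.0]

geo = geometric_mean(scene_scores)
arith = sum(scene_scores) / len(scene_scores)
print(f"geometric mean:  {geo:.1f}")
print(f"arithmetic mean: {arith:.1f}")
```

Because it works on ratios, the geometric mean is pulled less toward a single high-scoring scene than a plain average would be, which is one reason it is a common choice for summarizing benchmark suites.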

The short summary is that, of the three GPU vendors, Nvidia shows the biggest delta right now, to the tune of a 10~13 percent loss in performance with Blender 4.0 compared to our previous testing. The 3.6 results are a couple of months old, so I'm not even sure which drivers were used in most cases, but I do know that I've seen fluctuations in performance in the past.

AMD's GPUs did comparatively better, with a 4~7% drop on three of the four cards we tested, and a slight improvement of less than 1% from the RX 7900 XTX. Perhaps that's because it had more room for improvement to begin with, though Nvidia still clearly wins on the raw performance front: the RTX 4070, for example, is still 38% faster than the RX 7900 XTX using Blender 4.0.
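The cross-vendor comparison is a simple ratio of the two Blender 4.0 geomean scores from the table. A minimal sketch, with the helper name `percent_faster` chosen just for this example:

```python
def percent_faster(score_a: float, score_b: float) -> float:
    """How much higher score_a is than score_b, in percent of score_b."""
    return (score_a / score_b - 1.0) * 100.0


# Blender 4.0.0 geomean scores from the table.
rtx_4070_v4 = 1743.5
rx_7900_xtx_v4 = 1260.7

lead = percent_faster(rtx_4070_v4, rx_7900_xtx_v4)
deficit = percent_faster(rx_7900_xtx_v4, rtx_4070_v4)
print(f"RTX 4070 vs RX 7900 XTX: {lead:+.1f}%")
print(f"RX 7900 XTX vs RTX 4070: {deficit:+.1f}%")
```

Note that the two directions are not symmetric: a card that is 38% faster than another leaves the slower card roughly 28% behind, because each percentage uses a different baseline.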

Finally, the two Intel Arc GPUs I tested ended up losing around 3% of their performance. What's odd about the A770 16GB and A750 is that performance is virtually identical on the two GPUs. The A770 was less than 1% faster overall, which doesn't really make sense. It has double the VRAM, and the memory is clocked 9% higher as well (17.5 Gbps versus 16 Gbps). If bandwidth were the bottleneck, we'd expect the A770 to win by around 9%. Conversely, if the Arc GPUs are compute bound, the A770 should still be about 14% faster, since it has 32 Xe-Cores versus 28 Xe-Cores, or 4096 GPU shaders versus 3584 shaders.
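The expected-versus-observed gap can be laid out in a few lines of Python. This sketch assumes, as the paragraph above implies, that both cards use the same memory bus width (so bandwidth scales with memory speed) and that shader count alone approximates compute throughput, ignoring any clock speed differences. The dictionary layout and function name are illustrative only.

```python
# Specs and Blender 4.0.0 geomean scores as quoted in the article.
A770 = {"mem_gbps": 17.5, "shaders": 4096, "score": 679.0}
A750 = {"mem_gbps": 16.0, "shaders": 3584, "score": 672.7}


def advantage_pct(a: float, b: float) -> float:
    """Percentage by which a exceeds b."""
    return (a / b - 1.0) * 100.0


# Assumption: same bus width, so bandwidth scales with memory data rate.
if_bandwidth_bound = advantage_pct(A770["mem_gbps"], A750["mem_gbps"])
# Assumption: similar clocks, so compute scales with shader count.
if_compute_bound = advantage_pct(A770["shaders"], A750["shaders"])
observed = advantage_pct(A770["score"], A750["score"])

print(f"expected if bandwidth bound: {if_bandwidth_bound:.1f}%")
print(f"expected if compute bound:   {if_compute_bound:.1f}%")
print(f"observed in Blender 4.0:     {observed:.1f}%")
```

Under either assumption the A770 should lead by a clear margin, so an observed gap of under 1% suggests that something other than memory bandwidth or shader count is limiting these results.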

We're not going to fully switch to Blender 4.0 for testing of GPUs right now, simply because it's not clear whether it's fully optimized and performing as expected. We'll probably update to version 4.x some time next year, once things have settled down a bit more — running the initial release of most software usually means more bugs and room for optimizations.

As to whether the new features in Blender 4.0 are worth the potential loss in performance that we measured, we'll leave that debate to the artists. Certainly, there are a lot of changes and additions that should help create better visuals. The above official Blender video covers the major changes from this release. Just be forewarned that things may take longer to render compared to the previous release, though they should also look better.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

abufrejoval

Blender used to use the GPUs not at all, then very little and again later rather well.

What I am trying to say is that it's far from trivial to redesign a rendering pipeline built for quality to exploit a GPU that is designed to fake convincingly enough. And that they obviously selected code snippets for which GPUs would work well enough, while others were left alone, resulting in quite a bit of data dependencies and lost efficiency.

So if some scaling behavior "does not make sense", I understand the author's sentiment, but I can just imagine the groans of the developers who really tried the most they could.

I remember quite well that at one point Blender rendered a benchmark scene in exactly the same time on my 18-core Haswell and the RTX 2080 Ti, but in the latest pre-4.0 releases, GPU renders have made nearly all CPU power irrelevant, even if it did a rather nice job of at least trying to keep the CPUs busy, even if they contributed little speed-up to the final result and were probably not efficient in terms of additional energy vs. time reduction.

Let's just remember that Blender is a product that needs to deliver quality and will sacrifice performance if it can't have both.

A computer game would make the opposite choice.

JarredWaltonGPU

To be clear, the "doesn't make sense" on the A770 and A750 is more about Intel than it is about Blender. Because I'm sure Intel is helping quite a bit with getting the OpenVINO stuff working for Blender. AMD helps with HIP and Nvidia helps with OptiX as well. I mostly point it out because it is a clear discrepancy in performance, where a faster GPU is tied with a slower GPU. I suspect Intel will work with the appropriate people to eventually make the A770 perform better.

I would also suggest that GPUs aren't made to "fake convincingly enough." They're made to do specific kinds of math. For ray tracing calculations, they can offer a significant speed up over doing the same calculations on CPU. Doing ray/triangle intersections on dedicated BVH hardware will simply be way faster than using general purpose hardware to do those same instructions (which means both GPU shaders and CPU cores). For FP32 calculations, they're as precise as a CPU doing FP32 and can do them much, much faster. For FP64, you'd need a professional GPU to get decent performance.

It's really the software (games) that attempts to render graphics in a "convincing" manner. CPUs can "fake" calculations in the same fashion if they want. It's just deciding what precision you want.

abufrejoval

> JarredWaltonGPU said: To be clear, the "doesn't make sense" on the A770 and A750 is more about Intel than it is about Blender. Because I'm sure Intel is helping quite a bit with getting the OpenVINO stuff working for Blender. AMD helps with HIP and Nvidia helps with OptiX as well. I mostly point it out because it is a clear discrepancy in performance, where a faster GPU is tied with a slower GPU. I suspect Intel will work with the appropriate people to eventually make the A770 perform better.

What you observe is Blender not scaling with GPU performance, on the ARC in this case.

And, yes, I agree that is obviously a software issue. Now, whether it's Blender not being eager enough to wring the last bit out of ARCs and e.g. leaving too much on the CPU side, or Intel not being eager enough to provide fully usable Blender-ready ray-tracing libraries, is a matter of perspective: I'd say we have a kind of Direct-X11 vs Mantle situation here and the "DX12 equivalent" optimal Blender interface for all GPUs is still missing.

To me it just makes complete sense: especially when you do a migration from a pure CPU renderer to an accelerator, differences in the level of abstraction between GPUs will show up as scaling issues. So had you said that the missing improvements between the 750 and 770 hint at software scaling issues blocking the optimal use of the hardware, I wouldn't have put a comment.

> JarredWaltonGPU said: I would also suggest that GPUs aren't made to "fake convincingly enough."

I'd say that's at least how they started.

> JarredWaltonGPU said: They're made to do specific kinds of math. For ray tracing calculations, they can offer a significant speed up over doing the same calculations on CPU. Doing ray/triangle intersections on dedicated BVH hardware will simply be way faster than using general purpose hardware to do those same instructions (which means both GPU shaders and CPU cores). For FP32 calculations, they're as precise as a CPU doing FP32 and can do them much, much faster. For FP64, you'd need a professional GPU to get decent performance.
>
> It's really the software (games) that attempts to render graphics in a "convincing" manner. CPUs can "fake" calculations in the same fashion if they want. It's just deciding what precision you want.

And that may be where they have come to be, after transitioning into more GPGPU-type hardware.

And there I might have predicted a continued split between real-time (Gaming-PU) oriented and quality-oriented (Rendering-PU) designs because at sufficient scale everything tends to split. It's like running LLMs on consumer GPUs: it's perfectly functional, but even at vastly lower prices per GPU the cost of running inference at scale still makes the vastly more expensive HBM variants more economical.

But that may be a discussion that is soon going to be outdated, because all rendering, whether classic GPU "real-time-first" rendering or ray-traced "quality-first" rendering, will, according to Mr. Huang, be replaced by AIs faking it all.

Why even bother with triangles, bump maps, anti-aliasing and ray tracing if AIs will just take a scene description (from the authoring AI) and turn that into a video with "realism" simply part of the style prompt?

Things probably aren't going to get there in a single iteration, but if you aimed for a head start as a rendering startup, that's how far you'd have to jump forward.

Too bad Mr. Huang already got that covered...