Google's 'Cloud TPU' Does Both Training And Inference, Already 50% Faster Than Nvidia Tesla V100

At Google I/O 2017, Google announced its next-generation machine learning chip, called the “Cloud TPU.” The new TPU no longer does only inference--now it can also train neural networks.

First Gen TPU

Google created its own TPU to jump “three generations” ahead of the competition when it came to inference performance. The chip seems to have delivered, as Google published a paper last month in which it demonstrated that the TPU could be up to 30x faster than a Kepler GPU and up to 80x faster than a Haswell CPU.

The comparison wasn’t quite fair, as those chips were a little older, but more importantly, they weren’t intended for inference.

Nvidia was quick to point out that its inference-optimized Tesla P40 GPU is already twice as fast as the TPU for sub-10ms latency applications. However, the TPU was still almost twice as fast as the P40 in peak INT8 performance (90 TOPS vs. 48 TOPS).

The P40 also achieved its performance using more than three times as much power, so this comparison wasn’t that fair, either. The bottom line is that right now it’s not easy to compare wildly different architectures to each other when it comes to machine learning tasks.
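To see why the power caveat matters, the two quoted figures can be combined into a rough performance-per-watt ratio. This is only a sketch: no absolute power numbers are given here, so the code assumes the P40 draws exactly three times the TPU's power, which turns "more than three times" into a lower bound. The variable names are illustrative.

```python
# Rough perf-per-watt comparison from the figures quoted above.
TPU_PEAK_INT8_TOPS = 90.0   # first-gen TPU, as quoted
P40_PEAK_INT8_TOPS = 48.0   # Tesla P40, as quoted

# Assumption: the P40 draws exactly 3x the TPU's power. The article only says
# "more than three times", so this yields a lower bound on the TPU's advantage.
P40_POWER_RELATIVE_TO_TPU = 3.0

# Ratio of peak throughput (TPU over P40).
peak_ratio = TPU_PEAK_INT8_TOPS / P40_PEAK_INT8_TOPS

# Ratio of peak throughput per unit of power (TPU over P40).
perf_per_watt_ratio = peak_ratio * P40_POWER_RELATIVE_TO_TPU

print(f"Peak INT8 throughput, TPU vs. P40: {peak_ratio:.3f}x")
print(f"Peak INT8 per watt, TPU vs. P40: at least {perf_per_watt_ratio:.3f}x")
```

Peak numbers say nothing about latency-bound workloads, which is exactly the case Nvidia chose to highlight.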

Cloud TPU Performance

In last month’s paper, Google hinted that a next-generation TPU could be significantly faster if certain modifications were made. The Cloud TPU seems to have received some of those improvements. It’s now much faster, and it can also do floating-point computation, which means it’s suitable for training neural networks, too.

According to Google, the chip can achieve 180 teraflops of floating-point performance, which is six times more than Nvidia’s latest Tesla V100 accelerator for FP16 half-precision computation. Even when compared against Nvidia’s “Tensor Core” performance, the Cloud TPU is still 50% faster.


Google made the Cloud TPU highly scalable and noted that 64 units can be put together to form a “pod” with a total performance of 11.5 petaflops of computation for a single machine learning task.
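The ratios and the pod total above follow from simple arithmetic. The sketch below assumes V100 figures of 30 teraflops for plain FP16 and 120 teraflops with Tensor Cores. Those are the values implied by the "six times" and "50% faster" claims, not numbers stated in Google's announcement.

```python
# Back-of-the-envelope check of the Cloud TPU figures quoted above.
CLOUD_TPU_TFLOPS = 180.0     # per Cloud TPU, according to Google
UNITS_PER_POD = 64           # Cloud TPUs in one pod, according to Google

# Assumptions: V100 throughput implied by the article's ratios.
V100_FP16_TFLOPS = 30.0      # implied by "six times more"
V100_TENSOR_TFLOPS = 120.0   # implied by "50% faster"

fp16_ratio = CLOUD_TPU_TFLOPS / V100_FP16_TFLOPS
tensor_ratio = CLOUD_TPU_TFLOPS / V100_TENSOR_TFLOPS

# 1 petaflop = 1,000 teraflops.
pod_pflops = CLOUD_TPU_TFLOPS * UNITS_PER_POD / 1000.0

print(f"Cloud TPU vs. V100 FP16:        {fp16_ratio:.1f}x")
print(f"Cloud TPU vs. V100 Tensor Core: {tensor_ratio:.1f}x")
print(f"Pod of {UNITS_PER_POD} units: {pod_pflops:.2f} petaflops")
```

The pod total comes to 11.52 petaflops, which matches the rounded 11.5 petaflops figure.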

Strangely enough, Google hasn’t given the numbers for inference performance yet, but it may reveal them in the near future. Unlike with the first-generation TPU, power consumption was not revealed either.

Cloud TPUs For Everyone

Up until now, Google has kept its TPUs to itself, likely because it was still experimental technology and the company wanted to first see how it fared in the real world. However, the company will now make the Cloud TPUs available to all of its Google Compute Engine customers. Customers will be able to mix and match Cloud TPUs with Intel CPUs, Nvidia GPUs, and the rest of its hardware infrastructure to optimize their own machine learning solutions.

It almost goes without saying that the Cloud TPUs support the TensorFlow machine learning software library, which Google open sourced in 2015.

Google will also donate access to 1,000 Cloud TPUs to top researchers under the TensorFlow Research Cloud program to see what people do with them.

Update, 5/18/17, 7:52am PT: Fixed typo.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

-

redgarl

Nvidia is selling shovel ware. They are selling an image of breakthrough while they are far from being even key players outside the GPU market.

-

bit_user

19703240 said: Nvidia is selling shovel ware. They are selling an image of breakthrough while they are far from being even key players outside the GPU market.

Well, they're the leading GPU vendor, and machine learning is earning them quite a bit of revenue. But this was predictable - as long as they're still building general-purpose GPUs, they're going to be at a disadvantage relative to anyone building dedicated machine learning ASICs.

I wish we knew power dissipation, die sizes, and what fab node. I'll bet Google's new TPU has smaller die size and burns less power. From their perspective, it'd probably be too risky to build dies as huge as the GV100.

19703511 said: But, can it play Crysis?

Well, no, it can't run Crysis. Perhaps it can play it, with a bit of practice.

-

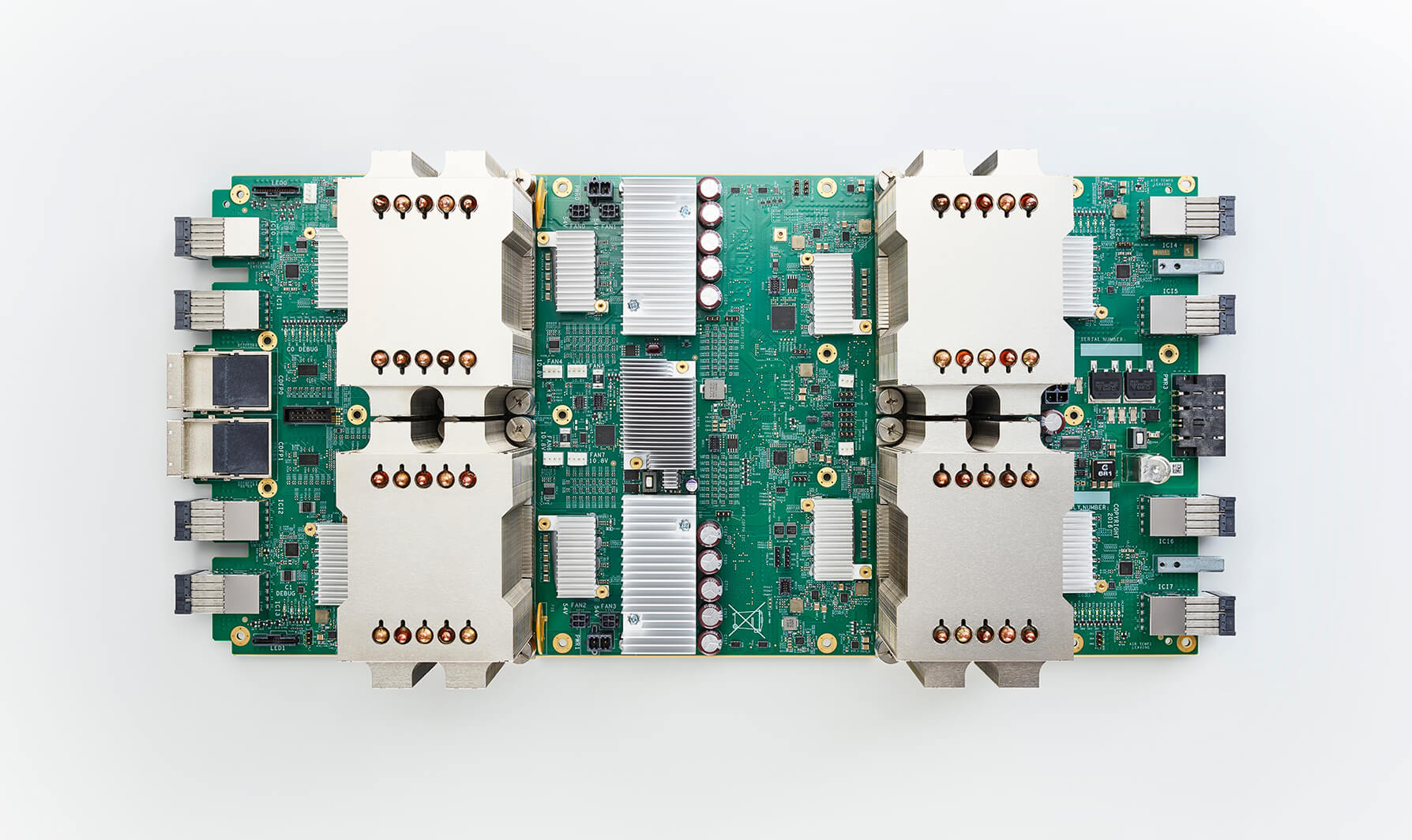

hahmed330

It's four 45 DL TFLOPs chips grouped together to produce an aggregate 180 DL TFLOPs. Nvidia's Tesla V100 is a single chip producing 120 DL TFLOPs, so no, a Tesla V100 is a more powerful chip than a single TPU2.

-

aldaia (replying to 19704066)

Technically speaking, a V100 has 9 chips: 1 GPU and 8 HBM2 chips (and each HBM2 is actually 4 stacked chips).

Anyway, the number of chips in a package is totally irrelevant. Chip size alone is only good for bragging rights. What is relevant is the total size of the package, the power consumption of the package, the performance of the package and the total cost of the package.

Right now we only know the performance, but I bet that the TPU2 4-chip solution is significantly cheaper than a single V100, consumes significantly less power, and by the looks of it uses less space.

The 4 TPU2 chips could probably be implemented as a single chip that would still be smaller than the Nvidia one, but that would not improve performance or power consumption, while it would increase cost and require a more complex cooling solution.

-

hahmed330 (replying to 19704993)

Actually, it is pretty much guaranteed that TPU2 has HBM2, since there are no DIMMs on the board and the first TPU had DDR3. They are not as power efficient as you think they are, since now they are doing deep learning as well.

"Chip size alone is only good for bragging rights"

1. Rubbish. A Tesla V100 is 2.6 times faster per chip than TPU2, therefore spending more die size on ALUs is worth it. By the way, the next generation of the digital world is the use of deep learning, analytics and simulation simultaneously in a single application. The combined horsepower of a DGX-1V is capable of doing that, unlike TPU2, therefore Nvidia is the furthest along in that game.

2. You cannot scale efficiently across multiple chips for almost all neural networks. Think of Crysis: it would almost always run faster on a single 12-teraflop chip than on four 3-teraflop chips using SLI... A lot of the computation in deep learning done on a single neural network is quite sensitive to inter-node communication.

3. Just 4 TPU2s in a single node, really, Google??? Nvidia uses 8 V100s, each 2.6 times faster, in a single node. This will have dramatically bad effects on the scalability of "TPU2 Devices", since to match a single DGX-1V node they would need 6 TPU2 nodes. This defeats your main argument: it won't be cost effective at all, especially since they are going to use HBM2.

4. DGX-1Vs are prebuilt server racks, so the client wouldn't need to worry about the cooling.

5. "DGX-1V" and "TPU2 Device" are both a single node, therefore this is a fair comparison.

TPU2s are alright for small neural networks and for those which do not require a lot of intra- and inter-node communication between the processors. It is possible the TPU2 has better perf/power than the Tesla V100, but TPU2 is a disappointment since it's going to take a long time to get TPU3, as ASICs take a lot of time and money to design.

Source for HBM2:

http://www.eetimes.com/document.asp?doc_id=1331753&page_number=2

-

renz496

So does Google intend to sell this hardware to other companies, or just provide a cloud service? But Google's TPU beating Nvidia's Tesla V100 is probably not that unexpected, because ultimately the Tesla V100 is more of a general solution for compute needs (the Tesla V100, for example, also functions as an FP64 accelerator).

-

aldaia (replying to 19705092)

I agree on HBM. That is actually quite evident from the pictures and, if you read Google's paper to be published at ISCA, it's also quite evident from their continuous comments about memory bandwidth being the main bottleneck.

Regarding single-chip performance, I insist: it's nice to say "mine is bigger than yours," but it's totally irrelevant for datacenters. Datacenter priorities are:

1 Performance/power

We don't know the real numbers, but according to some estimates a 4-chip package may use (in the worst case) the same power as a single Tesla V100, so at least 50% better performance/power.

2 Performance/$

You seem to assume that cost is proportional to the number of chips; however, building a single big chip is way, way, way more expensive than building 4 with the same total aggregated area. We may never know the real cost for Google (Google is very secretive about that), but I have no doubt that 6 TPU nodes cost (much) less than $149,000. Note: I follow your assumption that a single DGX-1V has the performance of 6 TPU nodes, but actually a DGX-1V is closer to 5 TPU nodes than to 6.

3 Performance/volume

One TPU pod contains 64 TPU nodes @ 180 TOPS and uses the space of 2 racks.

Fujitsu is building a supercomputer based on DGX-1 nodes. 12 nodes also use 2 racks of similar size.

12 DGX-1Vs contain 96 V100s @ 120 TOPS.

So it's a tie here; both have the same performance/volume efficiency.
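The numbers traded back and forth in this thread can be lined up in one short calculation. It is only a sketch that uses the commenters' own peak figures (45 per TPU2 chip, 180 per 4-chip TPU node, 120 per V100, 8 V100s per DGX-1V). It says nothing about measured performance, power or cost, and the variable names are illustrative.

```python
# Peak throughput figures as quoted by the commenters (TFLOPs/TOPS).
TPU_CHIPS_PER_NODE = 4
TPU_NODE_PEAK = 180.0
V100_PEAK = 120.0
V100S_PER_DGX1V = 8

# Per-chip comparison: one V100 versus one TPU2 chip.
tpu_chip_peak = TPU_NODE_PEAK / TPU_CHIPS_PER_NODE
chip_ratio = V100_PEAK / tpu_chip_peak

# How many TPU nodes match the peak of one DGX-1V node?
dgx1v_peak = V100S_PER_DGX1V * V100_PEAK
tpu_nodes_per_dgx1v = dgx1v_peak / TPU_NODE_PEAK

# Same power budget assumed for one V100 and one 4-chip TPU node
# (the commenter's worst-case estimate, not a published figure).
perf_per_watt_advantage = TPU_NODE_PEAK / V100_PEAK

# Two racks of each: 64 TPU nodes versus 12 DGX-1V nodes.
tpu_pod_peak = 64 * TPU_NODE_PEAK
dgx_racks_peak = 12 * dgx1v_peak

print(f"V100 vs. one TPU2 chip: {chip_ratio:.2f}x")
print(f"TPU nodes per DGX-1V:   {tpu_nodes_per_dgx1v:.2f}")
print(f"Perf/power advantage:   {perf_per_watt_advantage:.2f}x")
print(f"Two racks, TPU pod:     {tpu_pod_peak:.0f}")
print(f"Two racks, DGX-1V:      {dgx_racks_peak:.0f}")
```

On these figures a DGX-1V corresponds to about 5.3 TPU nodes, and both two-rack configurations add up to the same peak total.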

You also assume that the computations involved with neural networks don't scale well with nodes (using Crysis as an example is a joke) and that TPUs are only good for small neural nets. Do you really think that Google's neural nets are small? Actually, the computations mostly involve dense linear algebra matrix operations (this is why GPUs are well suited to them). Linear algebra matrix operations scale amazingly well with the number of nodes. Supercomputers with thousands, even millions of nodes have been used for decades to run such applications. Neural networks are no exception: they can be run on traditional CPU-based supercomputers and achieve nearly linear speed-up with the number of nodes. The reason they are not run on thousands of CPU nodes is not performance scalability. It's actually because:

1 Performance/power

2 Performance/$

3 Performance/volume
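The scaling argument above can be illustrated with a toy example: a dense matrix product splits by rows into independent pieces, and each piece could in principle run on a separate node. This is a minimal single-process sketch in plain Python, not how TPU pods or any real framework distribute work. The helper names are hypothetical, and the example ignores the cost of distributing inputs and gathering results.

```python
def matmul(a, b):
    """Plain dense matrix product of two lists of rows."""
    cols = list(zip(*b))  # columns of b
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def split_rows(a, workers):
    """Split the rows of a into at most `workers` contiguous blocks."""
    size = max(1, -(-len(a) // workers))  # ceiling division, at least 1
    return [a[i:i + size] for i in range(0, len(a), size)]


def partitioned_matmul(a, b, workers):
    """Compute a @ b block by block.

    Each block only needs its own rows of a plus all of b, so the blocks
    do not depend on one another and could be computed on different nodes.
    Here they simply run one after another in a loop.
    """
    result = []
    for block in split_rows(a, workers):
        result.extend(matmul(block, b))
    return result


a = [[1, 2], [3, 4], [5, 6], [7, 8]]
b = [[2, 0, 1], [1, 3, 0]]

full = matmul(a, b)
split = partitioned_matmul(a, b, workers=2)
print(full == split)
```

Training adds communication that this sketch leaves out, such as exchanging gradients between nodes, which is where the disagreement in this thread really lies.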

All that being said, I agree that GPUs are more flexible than TPUs, in the same way that CPUs are more flexible than GPUs. Specialization is the only way of improving the aforementioned points 1, 2 and 3, but you pay a cost in flexibility. The best hardware (CPU, GPU or TPU) really depends on your particular needs, but if you are as big as Google and run thousands or millions of instances 24/7, specialization is the way to go.

-

redgarl (replying to 19703712)

That they are selling more GPUs is irrelevant. They have nothing more to offer than GPUs; even if they are talking about all this AI, self-driving cars, programming courses, etc., they are still only selling GPUs.

-

renz496 (replying to 19706591)

And yet they are very successful at it despite "only" selling GPUs.