IBM, Stone Ridge Technology, Nvidia Break Supercomputing Record

It's no secret that GPUs are inherently better than CPUs for complex parallel workloads. IBM's latest collaborative effort with Stone Ridge Technology and Nvidia highlights the efficiency and performance gains GPUs can bring to reservoir simulations used in oil and gas exploration. The oil and gas exploration industry operates on the cutting edge of computing due to its massive data sets and the complex nature of its simulations, so it is fairly common for companies to conduct technology demonstrations using these taxing workloads.

The effort began with 30 IBM Power S822LC for HPC (Minsky) servers outfitted with 60 IBM POWER8 processors (two per server) and 120 Nvidia Tesla P100 GPUs (four per server). The servers employed Nvidia's NVLink technology for both CPU-to-GPU and peer-to-peer GPU communication and used InfiniBand EDR networking.

The companies conducted a 100-billion-cell engineering simulation on GPUs using Stone Ridge Technology's ultra-scalable ECHELON petroleum reservoir simulator. The simulation modeled 45 years of oil production in a mere 92 minutes, easily breaking the previous record of 20 hours. The time savings are impressive, but they pale in comparison to the hardware savings.

ExxonMobil set the previous 100-billion-cell record in January 2017. ExxonMobil's effort was quite impressive: the company employed 716,800 processor cores spread among 22,400 nodes on NCSA's Blue Waters supercomputer (Cray XE6). That setup requires half a football field of floor space, whereas the IBM-powered systems fit within two racks and occupy roughly half the area of a ping-pong table. The GPU-powered servers also required only one-tenth the power of the Cray machine.

The entire IBM cluster costs roughly $1.25 million to $2 million depending upon the memory, networking and storage configuration, whereas the Exxon system would cost in the hundreds of millions of dollars. As such, IBM claims it offers faster performance in this particular simulation at 1/100th the cost.
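
As a rough sanity check, the speedup and the implied cost gap can be worked out from the figures quoted above. This is only back-of-the-envelope arithmetic: the variable names are illustrative, and the 100x multiplier is IBM's claim rather than a published price for the larger system.

```python
# Back-of-the-envelope check using only figures quoted in the article.
previous_record_minutes = 20 * 60   # previous record: 20 hours
new_record_minutes = 92             # IBM / Stone Ridge / Nvidia run

speedup = previous_record_minutes / new_record_minutes

# IBM's "1/100th the cost" claim applied to the quoted cluster price range.
cluster_cost_usd = (1.25e6, 2.0e6)
claimed_cost_ratio = 100
implied_large_system_cost_usd = tuple(c * claimed_cost_ratio for c in cluster_cost_usd)

print(f"Speedup: about {speedup:.1f}x")
low, high = implied_large_system_cost_usd
print(f"Implied cost of the larger system: ${low / 1e6:.0f}M to ${high / 1e6:.0f}M")
```

The run works out to roughly a 13x speedup, and the implied $125 million to $200 million range is consistent with the "hundreds of millions of dollars" estimate.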

Such massive simulations are rarely used in the field, but it does highlight the performance advantages of using GPUs instead of CPUs for this class of simulation. Memory bandwidth is a limiting factor in many simulations, so the P100's memory throughput is a key advantage over the Xeon processors used in the ExxonMobil tests. From Stone Ridge Technology's blog post outlining its achievement:

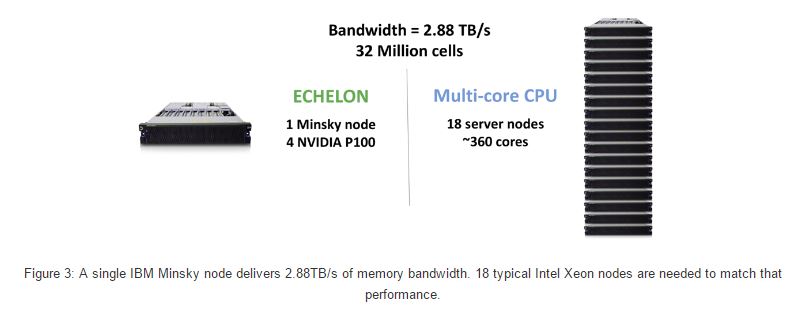

“On a chip to chip comparison between the state of the art NVIDIA P100 and the state of the art Intel Xeon, the P100 deliver 9 times more memory bandwidth. Not only that, but each IBM Minsky node includes 4 P100’s to deliver a whopping 2.88 TB/s of bandwidth that can address models up to 32 million cells. By comparison two Xeon’s in a standard server node offer about 160GB/s (See Figure 3). To just match the memory bandwidth of a single IBM Minsky GPU node one would need 18 standard Intel CPU nodes. The two Xeon chips in each node would likely have at least 10 cores each and thus the system would have about 360 cores.”
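
The arithmetic in that quote can be reproduced in a few lines. The sketch below uses only the numbers Stone Ridge cites; the variable names are illustrative, and the 10-cores-per-Xeon figure is the blog's own "at least 10 cores" assumption.

```python
# Figures taken from the Stone Ridge Technology quote above.
minsky_node_bandwidth_gbs = 2880   # 4 x P100 per node, 2.88 TB/s in total
gpus_per_node = 4
xeon_node_bandwidth_gbs = 160      # two Xeons in a standard server node
xeons_per_node = 2
cores_per_xeon = 10                # "at least 10 cores each" per the quote

per_p100_gbs = minsky_node_bandwidth_gbs / gpus_per_node
per_xeon_gbs = xeon_node_bandwidth_gbs / xeons_per_node
chip_ratio = per_p100_gbs / per_xeon_gbs

# CPU nodes (and cores) needed to match one Minsky node's memory bandwidth.
nodes_to_match = minsky_node_bandwidth_gbs / xeon_node_bandwidth_gbs
cores_to_match = nodes_to_match * xeons_per_node * cores_per_xeon

print(f"Per P100: {per_p100_gbs:.0f} GB/s, per Xeon: {per_xeon_gbs:.0f} GB/s")
print(f"Chip-to-chip ratio: {chip_ratio:.0f}x")
print(f"CPU nodes to match one Minsky node: {nodes_to_match:.0f} ({cores_to_match:.0f} cores)")
```

The quoted figures are internally consistent: 720 GB/s per P100 against 80 GB/s per Xeon gives the 9x ratio, and 18 two-socket nodes give the 360 cores mentioned.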

Xeons are definitely at a memory throughput disadvantage, but it would be interesting to see how a Knights Landing-equipped (KNL) cluster would stack up. With up to 500 GBps of throughput from on-package MCDRAM, which Intel developed with Micron, KNL processors easily beat Xeon's memory throughput. Intel also claims KNL offers up to 8x the performance-per-watt of Nvidia's GPUs. It's noteworthy that the comparison is against previous-generation Nvidia products, but that's a big gap that likely wasn't closed in a single generation.

IBM feels that its Power Systems paired with Nvidia GPUs can help other fields, such as computational fluid dynamics, structural mechanics, and climate modeling, among others, to reduce the amount of hardware and cost required for complex simulations. The massive nature of this simulation is hardly realistic for most oil and gas companies, but Stone Ridge Technology has also conducted 32-million-cell simulations on a single Minsky node, which might bring an impressive mix of cost and performance to bear for smaller operators.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

bit_user

"Such massive simulations are rarely used in the field, but it does highlight the performance advantages of using GPUs instead of CPUs for this class of simulation."

Interestingly, Blue Waters still has about 10x - 20x the raw compute performance of the new system. It's also pretty old, employing Kepler-era GK110 GPUs.

https://bluewaters.ncsa.illinois.edu/hardware-summary

So, this really seems like a story about higher-bandwidth, lower-latency connectivity, rather than simply raw compute power. Anyway, progress is good.

"it would be interesting to see how a Knights Landing-equipped (KNL) cluster would stack up."

A Xeon Phi x200 is probably about half as fast as a GP100, for tasks well-suited to the latter. It's got about half the bandwidth and half the compute. Where it shines is if your task isn't so GPU-friendly, or if it's legacy code that you can't afford to rewrite using CUDA (or whose source code you don't have access to).

Regarding connectivity, the x200 Xeon Phis are at a significant disadvantage. They've only got one (optional) 100 Gbps Omni-Path link, whereas the GP100s each have 3x NVLink ports.

-

Matt1685

Exxon and Stone Ridge Technology say they simulated a billion-cell model, not a 100-billion-cell model.

As far as Intel's claims about KNL, take them with a grain of salt. Firstly, they were probably making a comparison against Kepler processors, which are two generations before Pascal, not one. The P100 probably has 4 times the energy efficiency of the processor they used for the comparison. Secondly, any comparison of performance depends highly on the application. Thirdly, KNL uses vector processors which I think might be more difficult in practice to use at high efficiency than NVIDIA's scalar ALUs. Fourthly, KNL is restricted to a one-processor-per-node architecture, which makes it harder to scale a lot of applications when compared to Minsky's 2 CPU + 4 GPU per node topology. There would be much less high-speed memory per node as well as much lower aggregate memory bandwidth per node. BIT_USER has already pointed out some of these things.

In reply to BIT_USER, the way it sounds, Exxon only used the XE CPU nodes for the simulation and not the XK GPU nodes. Each XE node consists of 2 AMD 6276 processors that came out in November 2011. NCSA counts each 6276 CPU as having 8 cores, but each CPU can run 16 threads concurrently and Exxon seems to be counting the threads to get their 716,800 processors. The XE nodes have 7.1 PF of peak compute according to NCSA. The P100s in the Stone Ridge simulation have about 1.2 PF. So, the increased performance has a lot to do with the increased memory bandwidth available, and perhaps with the topology (fewer, stronger nodes) and the more modern InfiniBand EDR interconnect.

Also, as far as using KNL when you can't afford to rewrite using CUDA, NVIDIA GPUs are generally used in traditional HPC using OpenACC or OpenMP. They don't need to use CUDA. Used that way, they still seem to perform well relative to Xeon Phis, both in terms of performance and the time it takes to optimize code, as HPC customers are still using GPUs. Stone Ridge, however, surely has optimized CUDA code.

Blue Waters was built in 2012, so the supercomputer is 4-5 years old. The Stone Ridge Technology simulation is still very impressive, but the age of Blue Waters should be kept in mind when considering the power efficiency, cost, and performance comparisons.

-
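
The core-count and peak-compute figures in the comment above can be checked with simple arithmetic. This sketch takes the commenter's numbers at face value (they are forum estimates, not verified specifications), and the variable names are illustrative.

```python
# Numbers as given in the comment above; treat them as the commenter's estimates.
nodes = 22_400
cpus_per_node = 2
threads_per_cpu = 16      # concurrent threads per CPU, per the comment
ncsa_cores_per_cpu = 8    # how NCSA reportedly counts cores per CPU

thread_count = nodes * cpus_per_node * threads_per_cpu
ncsa_core_count = nodes * cpus_per_node * ncsa_cores_per_cpu

xe_peak_pf = 7.1          # peak compute of the XE nodes, per the comment
p100_peak_pf = 1.2        # combined peak of the P100s, per the comment
p100_count = 120          # from the article: 30 servers x 4 GPUs

compute_ratio = xe_peak_pf / p100_peak_pf
per_gpu_tf = p100_peak_pf * 1000 / p100_count

print(f"Counting threads: {thread_count:,} 'cores'")
print(f"Counting NCSA-style cores: {ncsa_core_count:,}")
print(f"XE nodes vs. P100 cluster peak compute: about {compute_ratio:.1f}x")
print(f"Implied peak per P100: about {per_gpu_tf:.0f} TFLOPS")
```

Counting threads reproduces the 716,800 figure exactly, which supports the commenter's reading, and the peak-compute ratio comes out at a little under 6x in favor of the older machine.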

bit_user

"Thirdly, KNL uses vector processors which I think might be more difficult in practice to use at high efficiency than NVIDIA's scalar ALUs."

I was with you until this. Pascal's "Streaming Multiprocessor" cores each have 4x 16-lane (fp32) SIMD units. All GPUs rely on SIMD engines to get the big compute throughput numbers they deliver. KNL features only two such pipelines per core.

Aside from getting good SIMD throughput, KNL (AKA Xeon Phi x200) cores are no more difficult to program than any other sort of x86 core. That said, its "cluster on a chip" mode is an acknowledgement of the challenges posed by offering cache coherency at such a scale.

"So, the increased performance has a lot to do with the increased memory bandwidth available, and perhaps with the topology (fewer, stronger nodes) and the more modern InfiniBand EDR interconnect."

Right. That's what I was getting at.

"NVIDIA GPUs are generally used in traditional HPC using OpenACC or OpenMP. They don't need to use CUDA."

This still assumes even recompilation is an option. For most HPC code, this is a reasonable assumption. But, the KNL Xeon Phi isn't strictly for HPC.

"Blue Waters was built in 2012, so the supercomputer is 4-5 years old. The Stone Ridge Technology simulation is still very impressive, but the age of Blue Waters should be kept in mind when considering the power efficiency, cost, and performance comparisons."

Right. I was also alluding to that.

Progress is good, but it's important to look beyond the top line numbers.

Anyway, the next place where HMC/HBM2 should have a sizeable impact is in mobile SoCs. We should anticipate a boost in both raw performance and power efficiency. And when it eventually makes its way into desktop CPUs from Intel and AMD, high-speed in-package memory will remove a bottleneck that's been holding back iGPUs for a while.

-

bit_user

When Intel decides it really wants to get serious about competing head-on with GPUs, it'll deliver a scaled up HD Graphics GPU. I see Xeon Phi and their FPGA strategy as playing at the margins - not really going for the jugular.

x86 is fine for higher-power, lower-core count CPUs. But, when power efficiency really matters, x86's big front end and memory consistency model really start to take a toll.

-

Matt1685

I'm on shaky ground here, but I think something you said is misleading. NVIDIA uses an SIMT (single instruction, multiple thread) architecture. KNL uses AVX-512 vector units (which they have two of per core). I believe that SIMT allows flexibility that KNL's vector units don't allow. That flexibility apparently makes it easier to extract data-level parallelism from applications. Of course there are downsides to it, as well. Here is an explanation better than I could give: http://yosefk.com/blog/simd-simt-smt-parallelism-in-nvidia-gpus.html

I think "KNL cores are no more difficult to program than any other sort of x86 core" is again a bit misleading. Strictly speaking, that's true. However, KNL cores are more difficult to program /well/ for. Code must be carefully optimized to properly extract parallelism in such a way that makes efficient use of the processor. In terms of achieving high performance, I haven't seen it claimed, except by Intel, that Xeon Phi processors or accelerators are easier to program for than GPUs.

I am confused by what you meant by "But, the KNL Xeon Phi isn't strictly for HPC." I only brought up HPC to provide a concrete example of GPUs being commonly used to run legacy code that users either can't afford to or simply don't want to rewrite using CUDA. It seems to me that the main advantages of Xeon Phi at the moment are that there are lots of programmers more comfortable with x86 programming and that Xeon Phi is an alternative to GPUs. Certain institutions don't want to be locked into any particular vendor or type of processor (any more than they already are with x86 I guess). They have lots of x86 legacy code and try to maintain their code in a more-or-less portable form as far as acceleration goes. So it's not difficult to use Xeon Phi to help ensure alternatives to GPUs are available. I haven't heard of many people being excited by Xeon Phi, though.

BTW, the last paragraph in my original message was not directed at you. After I sent the message I realized it might look like it was, sorry.

-
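
The SIMD-versus-SIMT distinction debated in the comments above is easier to see with a toy example. The sketch below is plain Python, not real AVX-512 or CUDA code, and the function names are hypothetical: it only contrasts the two programming styles for a data-dependent branch. In the explicit-SIMD style the programmer builds a mask and blends both paths; in the SIMT style the programmer writes scalar per-thread code and the hardware is assumed to handle the masking for diverging threads.

```python
def simd_style(xs):
    """Explicit-vector style: compute both branches for all lanes, then blend by mask."""
    mask = [x % 2 == 0 for x in xs]           # per-lane predicate
    then_vals = [x // 2 for x in xs]          # "if" path, evaluated for every lane
    else_vals = [3 * x + 1 for x in xs]       # "else" path, evaluated for every lane
    return [t if m else e for m, t, e in zip(mask, then_vals, else_vals)]


def simt_kernel(x):
    """Per-thread style: ordinary scalar code with an ordinary branch."""
    if x % 2 == 0:
        return x // 2
    return 3 * x + 1


def simt_launch(kernel, xs):
    """Stand-in for a kernel launch: one logical thread per element."""
    return [kernel(x) for x in xs]


data = [1, 2, 3, 4]
simd_result = simd_style(data)
simt_result = simt_launch(simt_kernel, data)
print(simd_result, simt_result)
```

Both versions produce the same values; the difference is who manages the divergence. This says nothing about which approach is faster on real hardware, which is the point the commenters disagree on.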

Matt1685

Intel can't develop a GPU now. They've spent the last 5 years telling people they don't need GPUs. Even if Intel did now decide to risk pissing off their customers by moving full force to GPUs, I think they'd be at a big disadvantage. First it would take them years to develop a good GPU. Then after they had one, so much work would have to be done to optimize important code to work on Intel's new GPUs. They probably could have done it back in 2009, or whenever they started their Larrabee project. But instead they chose to try to leverage their x86 IP in the graphics world. As a result, graphics processors have been leveraged into their compute world.

Besides, NVIDIA is a large, rich, ambitious, nimble, and focused company with expertise in both GPU hardware and software. Intel would not find it easy to directly compete with NVIDIA in GPUs. Intel couldn't just outspend NVIDIA and beat them technologically. The best they could hope for is to match them, and that wouldn't be good enough considering NVIDIA's lead with CUDA. As far as using their clout, Intel have already been in trouble with regulatory bodies for actions they took against AMD. I think they'd be on a short leash if they tried similar tricks to get customers to buy their GPUs over NVIDIA's.

-

bit_user

"I'm on shaky ground here, but I think something you said is misleading. NVIDIA uses an SIMT (single instruction, multiple thread) architecture. KNL uses AVX-512 vector units (which they have two of per core). I believe that SIMT allows flexibility that KNL's vector units don't allow."

I don't know if Pascal still uses SIMT, but the distinction between that and SIMD is pretty minor. Reading that makes it sound like it could be implemented entirely as a software abstraction, so long as you've got scatter/gather memory access.

"Code must be carefully optimized to properly extract parallelism in such a way that makes efficient use of the processor. In terms of achieving high performance, I haven't seen it claimed, except by Intel, that Xeon Phi processors or accelerators are easier to program for than GPUs."

You're drinking too much of the Nvidia kool aide. KNL has 4-way SMT, in addition to SIMD. So, it's no big deal if one or two threads stall out. And you can always use OpenMP or OpenCL.

"It seems to me that the main advantages of Xeon Phi at the moment are that there are lots of programmers more comfortable with x86 programming and that Xeon Phi is an alternative to GPUs. Certain institutions don't want to be locked into any particular vendor or type of processor (any more than they already are with x86 I guess)."

It's not an issue of programmer comfort, so much as it is that 99.999% of software in existence is written for single-threaded or SMP-style architectures. You could recompile that on an ARM, but porting most of it to a GPU involves a lot more than just a recompile.

KNL supports Windows 10 and enterprise Linux distros. You can literally run whatever you want on it. Webservers, databases, software-defined networking... anything.

-

bit_user

"Intel can't develop a GPU now. They've spent the last 5 years telling people they don't need GPUs. Even if Intel did now decide to risk pissing off their customers by moving full force to GPUs, I think they'd be at a big disadvantage."

They'll go wherever they think the money is.

"First it would take them years to develop a good GPU. Then after they had one, so much work would have to be done to optimize important code to work on Intel's new GPUs. They probably could have done it back in 2009"

Done and done. It's called their HD Graphics architecture, and they've been working on it at least that long. All they'd have to do is scale it up and slap some HMC2 on the side.

"Intel would not find it easy to directly compete with NVIDIA in GPUs."

They did a pretty good job at destroying the market for discrete, sub-$100 GPUs. Once they start shipping CPUs with in-package memory, I predict a similar fate could befall the $150 or even $200 segment. In revenue terms, that's the bread and butter of the consumer graphics market.

"Intel have already been in trouble with regulatory bodies for actions they took against AMD. I think they'd be on a short leash if they tried similar tricks to get customers to buy their GPUs over NVIDIA's."

Regulatory bodies have been toothless since about 2000, when the MS anti-trust case got dropped. I somehow doubt Trump is about to revive them, and the EU has bigger things to worry about.

-

bit_user

BTW, I would agree that there's something holding Intel back from offering a non-x86 solution. I don't think it's their marketing department, however. I think they've learned the lesson too well not to stray from x86. In the past, they've only gotten burned when they've gone in that direction.

But x86 has overheads that GPUs don't (and that ARM cores have to a lesser degree). You could even take their recent acquisitions of Movidius, Altera, and possibly even Mobileye & Nervana as acknowledgements of this fact.

-

Matt1685

Pascal definitely still uses SIMT. So does AMD's GCN, even though they call their units vector units. (Their previous architecture, TeraScale, I think, was something they called Very Long Instruction Word, which worked a bit differently, but I'm not sure exactly how.)

The difference that SIMT makes is not just a minor difference, to my understanding, as that blog post I sent you (and other things I have read, including this research paper from AMD https://people.engr.ncsu.edu/hzhou/ipdps14.pdf) suggests.

"You're drinking too much of the Nvidia kool aide. KNL has 4-way SMT, in addition to SIMD. So, it's no big deal if one or two threads stall out. And you can always use OpenMP or OpenCL."

I'm not drinking Kool Aid. My information doesn't come from NVIDIA. You apparently didn't read the blog post I sent you. Having an either-or option of SMT or SIMD does not equal the data-parallelism extraction possibilities of SIMT. Without looking into it, I'm guessing that KNL offers SMT through the Silvermont cores, not the AVX-512 cores, not that it matters. Running 70 (cores) x 4 (threads per core) = 280 (threads) isn't going to give you much parallelization. And you can always use OpenMP or OpenCL? What are you on about? That makes no sense. How do you expect to extract data-parallelism without them?

"It's not an issue of programmer comfort, so much as it is that 99.999% of software in existence is written for single-threaded or SMP-style architectures. You could recompile that on an ARM, but porting most of it to a GPU involves a lot more than just a recompile."

Sure but that has NOTHING to do with Xeon Phi. No one in his right mind is going to purchase and install a Xeon Phi system in order to run legacy code without modification on its vastly sub-par scalar execution units. They'd just buy regular Xeons and get much better performance. My point quite obviously talked about the reasons to use Xeon Phis, not the reasons to use Xeons.

"KNL supports Windows 10 and enterprise Linux distros. You can literally run whatever you want on it. Webservers, databases, software-defined networking... anything."

Yes, it can run whatever you want as slowly as you want. Most users, even those that could take advantage of parallelization, are sitting on the sidelines using CPUs because they are among that 99.999% who have software written for single-threaded or SMP architectures. The point there is that they aren't jumping to Xeon Phi despite the magical properties that you have mentioned. That's because there isn't an advantage to it. In order to take advantage of the Xeon Phi they have to do the same sort of work they would need to do to take advantage of GPUs. This isn't coming from NVIDIA, this is coming from the market, such as Cray.