Leaker says RTX 50-series GPUs will require substantially more power, with the RTX 5090 TDP jumping by over 100 watts

The RTX 5070 may require over 220 watts, while the RTX 5090 could need more than 550 watts of power, says popular leaker.

Popular hardware leaker @kopite7kimi claims, citing a source, that the GeForce Blackwell GPUs will have substantially higher TGP (Total Graphics Power) requirements. According to their post on X, all SKUs would have “some increase in power consumption, with higher SKUs increasing more.” This ties in with leaked information from Seasonic’s PSU wattage calculator, which showed the RTX 5070 requiring 220 watts (up by 20 watts from the 4070 and matching the 4070 Super) and the RTX 5090 needing 500 watts (up from 450 watts in the RTX 4090).

As with all leaks and rumors, there's at present no official information from Nvidia. Still, everything we know about Nvidia Blackwell suggests it's going to be big, and without a major bump in process node technology, higher power draw seems likely. At the same time, Nvidia could keep TGPs similar to the 40-series and leave the truly high power draw for factory overclocked AIB cards.

While kopite7kimi agrees with the Seasonic leak, which shows the RTX 50-series GPUs having greater power consumption than the previous generation, they claimed that the power increases will be greater than what previous rumors suggested. One response to their post says, “I say 550W for 5090,” while another asks, “Is 5070 more than the rumored 220?” Their response to both replies is a simple “more.”

This suggests that we could potentially need higher wattage PSUs just to accommodate the power demands of these bigger, badder GPUs. This news makes sense, especially as companies like Asus are building more efficient PSUs to help deliver the massive power requirements these GPUs demand.

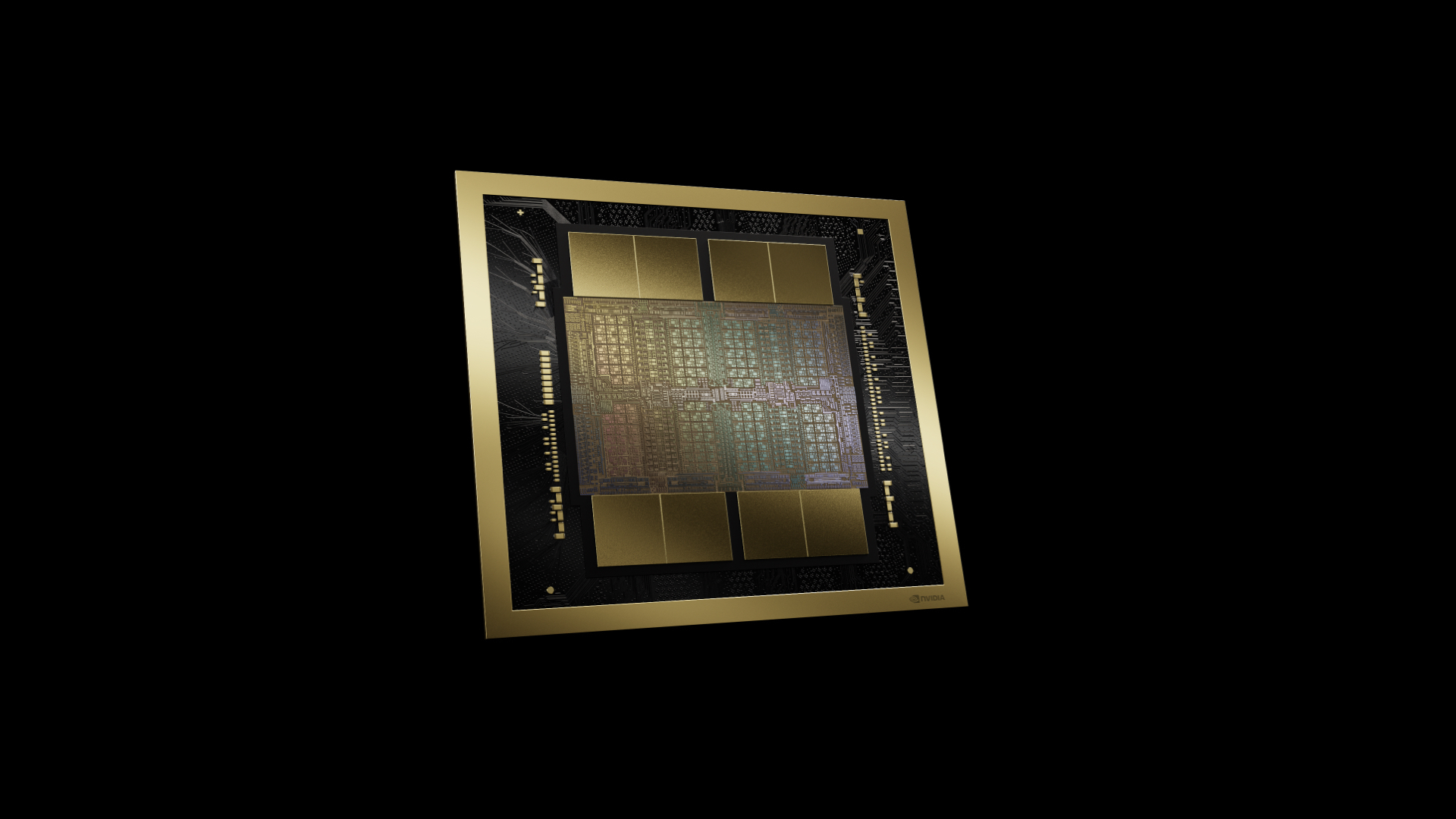

This increase in TGPs for consumer GPUs seems to be coming from the advancements in data center and AI GPUs. Nvidia is the current leader in this segment, reportedly accounting for 98% of the market share, and its most powerful offerings like the Hopper H100/H200 use as much as 700 watts of power. While consumer and gaming GPUs will likely not be as powerful as these data center chips, they typically use a similar architecture. So, if the latest AI and data center GPUs that use Blackwell chips will have an increase in power consumption, it stands to reason that Nvidia’s consumer GPUs will follow suit.

Although gamers and professionals will likely appreciate the greater processing power that these GPUs should offer, the increased electricity consumption could soon become problematic, especially as even midrange gaming systems that run an RTX 5070 could soon require PSUs in the range of 500 watts or more. And if you're the type that likes to run your PSU at around 50% load, the combined draw of the CPU, GPU, and other system components would likely mean using at least an 800W PSU.
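To make the PSU arithmetic concrete, here is a minimal Python sketch of the sizing rule: add up the component power draw and divide by the fraction of PSU capacity you want to use. The 220W GPU figure is the rumored RTX 5070 number from the leak. The 125W CPU and 55W "other components" figures, the function names, and the 50W rounding step are illustrative assumptions, not measurements or vendor guidance.

```python
import math


def recommended_psu_watts(gpu_w, cpu_w, other_w, target_load=0.5):
    """Return the PSU capacity at which the system sits at `target_load`.

    All wattages are assumed sustained draws; transient spikes are ignored.
    """
    if not 0 < target_load <= 1:
        raise ValueError("target_load must be in the range (0, 1]")
    total_draw = gpu_w + cpu_w + other_w
    return total_draw / target_load


def round_up_to_step(watts, step=50):
    """Round up to the next multiple of `step` (a hypothetical PSU size step)."""
    return math.ceil(watts / step) * step


# Rumored RTX 5070 TGP plus assumed CPU and other-component draw.
midrange = recommended_psu_watts(gpu_w=220, cpu_w=125, other_w=55)
print(round_up_to_step(midrange))  # 400W system at 50% load -> 800

# Same assumed system with the 550W figure floated for the RTX 5090.
high_end = recommended_psu_watts(gpu_w=550, cpu_w=125, other_w=55)
print(round_up_to_step(high_end))  # 730W system at 50% load -> 1500
```

Under these assumptions, a 400W system reaches the 800W figure at a 50% load target, while the same build with a 550W GPU would call for roughly 1,500W. Raising `target_load` to 0.8 shrinks both numbers considerably, which is why the 50% preference drives the PSU size as much as the GPU does.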

While this might not be an issue for individuals, if you consider the thousands or even millions of gamers that will upgrade their systems in the long run, this could potentially be taxing on the electrical grid. And if you add the proliferation of data centers and even EVs, we might soon see problems with the national and global power supply.

We appreciate the additional speed that new GPUs typically bring to us, but we hope companies like Nvidia will develop graphics card solutions that give more performance while running more efficiently than the previous generation. We want to avoid a potential future situation where our electricity supply can't keep up with the demands of our digital society.


Jowi Morales is a tech enthusiast with years of experience working in the industry. He’s been writing with several tech publications since 2021, where he’s been interested in tech hardware and consumer electronics.


coolitic I choose my GPUs w/in a max TDP-range of 200W, no matter how the generation is skewed towards power-consumption. So, we'll see what actual gains there are in terms of performance-per-watt.

punkncat Honestly, this is ridiculous. We already have a generation using a ton of power, producing a lot of heat, and an ongoing issue with a new power connector melting and/or catching fire.

Some dipstick at Nvidia "Hey, I know! Let's juice with even MORE wattage."

I thought one of the advantages of the more modern parts were increases in performance using less power. It seems that both the CPU and GPU manufacturers just threw all that out the window.

andrep74 I also set my goal to 200W, and have even lowered my CPU power budget to achieve this. And while the limit is entirely arbitrary, I feel like a computer shouldn't draw the same power as *a hair dryer* just to play a video game.

I can't but wonder if this death spiral of power consumption is driven by irresponsible users, or else greedy corporations. It's a certainty that governments will step in and make sure that consumers cannot purchase graphics cards above a certain threshold wattage (maybe 100W?) at some point.

DougMcC

andrep74 said: I also set my goal to 200W, and have even lowered my CPU power budget to achieve this. And while the limit is entirely arbitrary, I feel like a computer shouldn't draw the same power as *a hair dryer* just to play a video game. I can't but wonder if this death spiral of power consumption is driven by irresponsible users, or else greedy corporations. It's a certainty that governments will step in and make sure that consumers cannot purchase graphics cards above a certain threshold wattage (maybe 100W?) at some point.

Government action seems extremely unlikely. Particularly as a growing percentage of people who would have such high power PCs are now also running their own power plants.

emike09

andrep74 said: I can't but wonder if this death spiral of power consumption is driven by irresponsible users, or else greedy corporations.

I probably fall under the irresponsible user category. Power is cheap where I live, and I have more than adequate cooling in my case. Outside of melting connectors, I'll take all the power I can get, as long as there has also been an improvement in efficiency per watt compared to the previous generation. The xx90 series (and previous x90 series) has always been about pushing the limits and maximizing performance. It's focused on power users, workstations, and enthusiasts who want or need everything they can get from a card. With the death of SLI, we can't just pop another card in and increase performance, though some workstation applications can scale with multi-GPU setups.

It's too early to tell what efficiency improvements have been made on Blackwell but looking forward to seeing details. Gotta put that 1600w PSU to use somehow!

Giroro Nvidia has 2.7 Trillion reasons to not care what you think about its AI accelerators GPUs.

Notton Some guess work

5070: 220W

5080: 250~300W

5090: it's 2x 5080 dies glued together

16-pin 12VHPWR spec defines 600W per connector

So it's either 600W, or go back to the "ugly" mess of 32 cables on one card 12VHPWR was trying to solve.

Heiro78

Notton said: Some guess work. 5070: 220W; 5080: 250~300W; 5090: it's 2x 5080 dies glued together. 16-pin 12VHPWR spec defines 600W per connector. So it's either 600W, or go back to the "ugly" mess of 32 cables on one card 12VHPWR was trying to solve.

It's scary to think that the card will run at 550w and only have a 50w buffer. I don't necessarily trust all the AIBs to properly cap the wattage

Notton

Heiro78 said: It's scary to think that the card will run at 550w and only have a 50w buffer. I don't necessarily trust all the AIBs to properly cap the wattage

twin 16-pin cards already exist, btw.

666W

https://www.galax.com/en/graphics-card/hof/geforce-rtx-4090-hof.html