Nvidia CEO admits next gen DGX systems necessitate liquid cooling - and the new systems are coming soon

Next gen is looking hot.

Nvidia's next-generation DGX servers will have liquid cooling, per comments from Nvidia CEO Jensen Huang at the 2024 SIEPR Economic Summit. In between saying the upcoming system was "magnificent" and "beautiful," Huang also mentioned the next DGX servers are "soon coming," indicating that perhaps Nvidia's next generation of graphics cards is on the horizon too.

Huang's comments weren't entirely clear, and he didn't explicitly mention anything about DGX. Instead, during a monologue about the power of modern GPUs and AI processors, he said "one of our computers" and "the next one, soon coming, is liquid cooled." Since Nvidia's sole lineup of AI-focused computers is the DGX, it's probably safe to say Huang is referring to DGX, or at least that's the take of some IT industry analysts.

Servers, even those in large and cutting-edge data centers, still rely primarily on air cooling for CPUs and GPUs, including the current-generation DGX. Even Nvidia's high-end H100 and H200 GPUs work well enough under air cooling, so the impetus to switch to liquid hasn't been that great. However, as Nvidia's upcoming Blackwell GPUs are said by Dell to consume up to 1,000 watts, liquid cooling may be required. Claims of Blackwell drawing 1,400 watts have also been thrown around.

Processors drawing 2,000 watts might even be within the realm of possibility, as Intel researchers are attempting to design a liquid cooler that can handle that much heat. For years, processors have been getting hotter and more power-hungry, and to keep that trend going the industry will need to invest in cutting-edge cooling solutions.
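For a rough sense of why those power figures push toward liquid, the steady-state heat balance Q = m * cp * ΔT gives the coolant flow needed to carry a given load away. The Python sketch below is illustrative only: the 10 K coolant temperature rise and the rounded room-temperature properties for air and water are assumptions, not figures from Nvidia, Dell, or Intel, and `volume_flow_l_per_min` is a hypothetical helper name.

```python
# Approximate room-temperature fluid properties (assumed, rounded values).
FLUIDS = {
    "air": {"cp": 1005.0, "density": 1.2},      # J/(kg*K), kg/m^3
    "water": {"cp": 4186.0, "density": 998.0},  # J/(kg*K), kg/m^3
}


def volume_flow_l_per_min(heat_watts, fluid, delta_t_kelvin=10.0):
    """Volume flow (litres per minute) needed to absorb heat_watts
    while the coolant warms by delta_t_kelvin (an assumed 10 K by default)."""
    props = FLUIDS[fluid]
    # Heat balance: Q = mass_flow * cp * delta_T  ->  mass_flow in kg/s
    mass_flow = heat_watts / (props["cp"] * delta_t_kelvin)
    # kg/s -> m^3/s -> L/s -> L/min
    return mass_flow / props["density"] * 1000.0 * 60.0


if __name__ == "__main__":
    # Per-processor power figures mentioned in the article.
    for watts in (1000, 1400, 2000):
        air = volume_flow_l_per_min(watts, "air")
        water = volume_flow_l_per_min(watts, "water")
        print(f"{watts} W: air {air:,.0f} L/min, water {water:.2f} L/min")
```

Under those assumptions, water needs a few thousand times less volume flow than air for the same heat load, which is the basic appeal of liquid loops at these power levels. Real heatsinks and cold plates have other limits (contact resistance, pressure drop, inlet temperature), so this is only a first-order comparison.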

All of this is in the interest of boosting performance, but not everyone is impressed by TDPs four digits long. Industry analyst Patrick Moorhead was particularly scathing about the idea of a liquid-cooled DGX, saying "So we’ve pulled nearly every lever so far to optimize performance at a kinda reasonable degree of heat/power... What’s next, liquid nitrogen?" Moorhead suggested hitting "the reset button" and implied integrating this technology just wouldn't be sustainable.

Realistically, there probably has to be a point where power can no longer be increased to boost performance, but power consumption has steadily increased from generation to generation. A decade ago, 300 watts was considered pretty excessive, and now the highest-end CPUs and GPUs easily surpass that figure. Further increases to power don't seem to be going away any time soon, as long as there's no better way to boost performance reliably.


Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.


Alvar "Miles" Udell

I'm not sure if this article was written to somehow shame nVidia, but as work density increases, then stronger cooling methods than air are required, and this applies everywhere and not just computers (hence my use of the term "work"), and as density increases then the number of systems required to perform the same amount of work decreases, thereby increasing overall efficiency.

Eventually the AI bubble will collapse, the deep pockets will run dry, and they (all GPU manufacturers) will need to go back to factoring in efficiency and price, but right now there's no reason for them not to just care about performance and density.

thisisaname

The LLM version of the AI bubble may collapse, but I'm sure it will be replaced with another version of AI.

Alvar "Miles" Udell

thisisaname said: The LLM version of AI bubble may collapse but I'm sure it will be replaced with another version of AI.

It'll collapse because a more efficient method will come along, or because the deepest pockets will run out, or because the saturation point will have been reached. A bubble is built on exponential growth, and once exponential turns linear and then logarithmic, or even to the negative, the bubble collapses.

hotaru251

I am starting to see a future where most of a nation's energy consumption is from LLMs & data centers.

Alvar Miles Udell said: Eventually the AI bubble will collapse

collapse? no.

it will never collapse until Singularity (where an ai can effectively skip the training needed and learn on the fly by itself)

Will it lessen? likely once we hit the point of diminishing advancement yr over yr.

Alvar Miles Udell said: the deep pockets will run dry, and they (all GPU manufacturers) will need to go back to factoring in efficiency and price

won't happen.

A restricted market has few vendors thus prices can be kept high as "where else ya gonna go?".

Efficiency is the goal of Arm versions & specific performance is the ASIC benefit, and in time they may replace the general GPU of today, but GPUs for gaming shall likely never return to the old days...as every "gaming" gpu uses up fab allocation, which is lost profit.

"ai" will never truly not be wanted...even if just by bad actors who will use it to make more advanced scams.

JTWrenn

Performance will continue to push until we hit the level of AI they are aiming for, then we will see actual efficiency gains. It is just how computing works. We didn't start getting efficient laptops until we got to a point where laptops felt fast enough that we were not giving anything up. This tech will get faster and more power hungry for the next 5 to 10 years, then we will see it back off once we have all the AI ideas they have come up with up and running. Right now doing more is worth more than doing it cheaper so they will push for those headlines, and use a ridiculous amount of power. Then once they have run out of new hurdles that catch clicks, they will start working on efficiency.

wwenze1

Well, duh

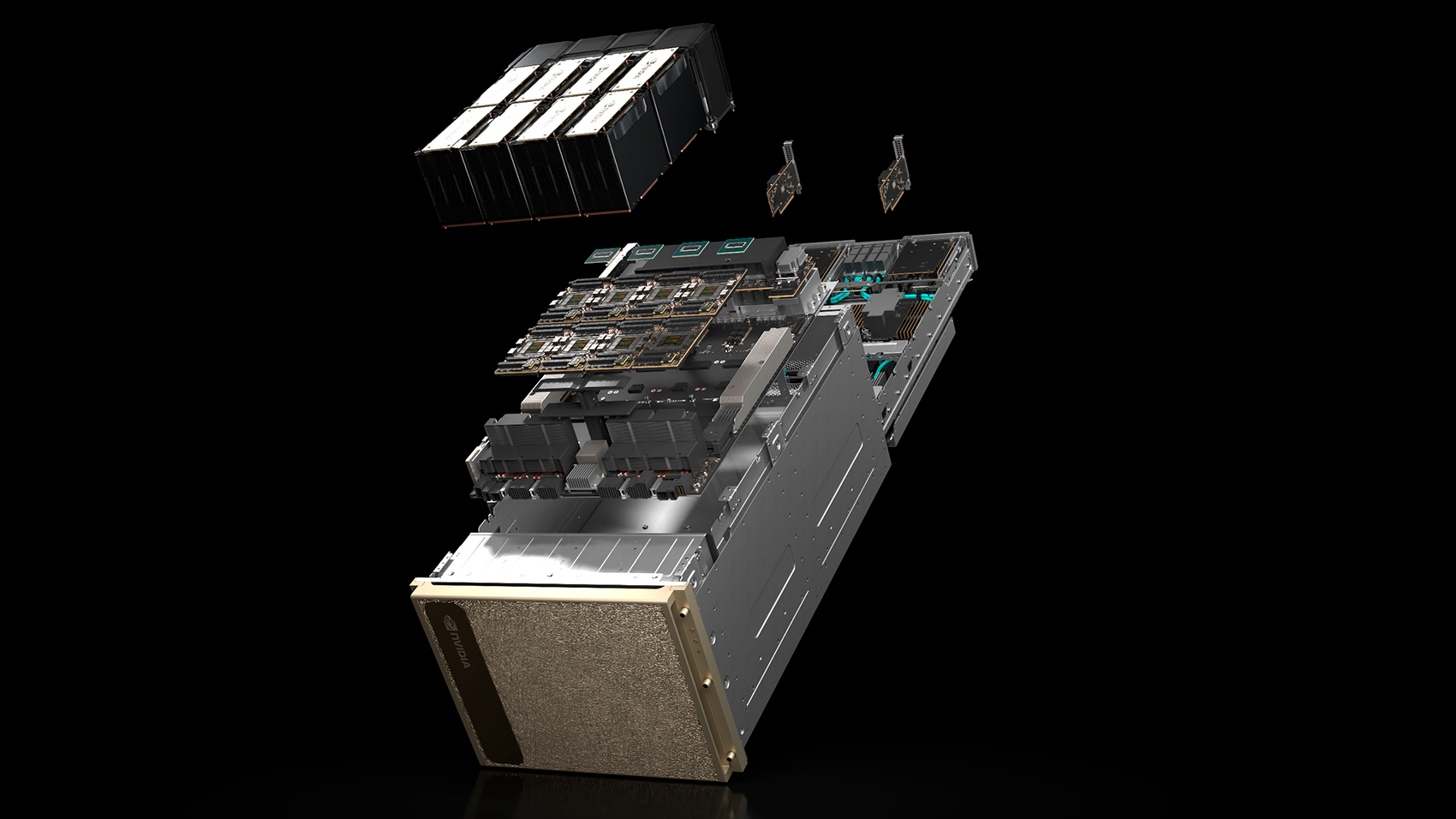

One DGX H100 has 8 x NVIDIA H100 and the whole module is spec'ed for 10kW, it's amazing it is even air-coolable.

Nothing was admitted, no new revelation. It's just a surprise if you're not part of the industry.

edzieba

What kind of liquid cooling is the question, because datacentres have a few different flavours:

- You have fully internal closed-loop cooling, which is what we are used to in desktop PCs. Waterblocks on hot components move heat to the coolant, the coolant circulates to radiators within the server chassis, cool air blows through the radiators and cools the coolant. AIO or discrete pump is a minor distinction here.

- Next is rackscale watercooling. Here, each server has no airflow, but instead a water inlet and outlet. These plumb into the rack, which hosts shared radiators to receive cool air and cool the coolant for all the servers in the rack. This is a fairly common setup despite being an 'inelegant hack', because it allows hardware density increases from non-airflow chassis but allows you to install these systems in a datacentre with a regular hot/cold aisle airflow setup, alongside regular air-cooled hardware, with no new site plumbing.

- Finally, we have full site liquid cooling. Here, there is little to no AC airflow for the aisles, and instead the coolant is routed from the servers to massive shared chillers (usually with liquid/liquid heat exchangers in between, to aid in maintenance and leak volume mitigation). You need to design the datacentre around this architecture, so it's less common.

If DGX is just being designed around internal closed-loop liquid cooling, this is basically a nothingburger. If they have off-chassis liquid transport, that then becomes more interesting.