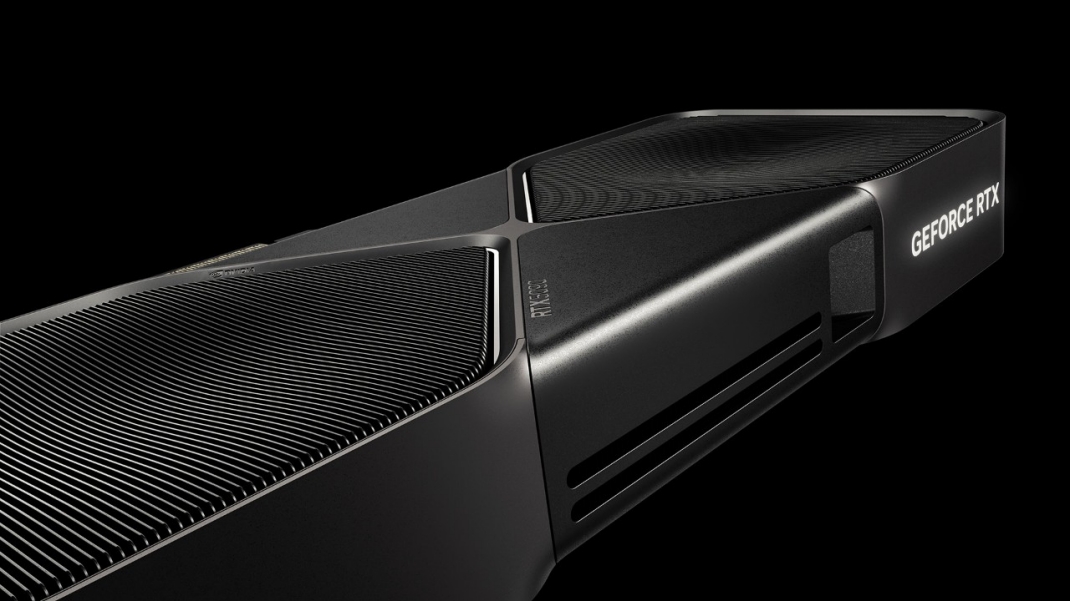

RTX 5070 allegedly delayed until early March to counter AMD RX 9070 launch

The only catch is availability.

A new rumor suggests that Nvidia's mid-range RTX 5070, previously slated to launch later this month, has been delayed to early March, per MEGAsizeGPU on X. Its elder Ti-class sibling should still arrive on time. However, considering the infamous RTX 5090/RTX 5080 launch, we cannot vouch for the GPUs' retail availability. If true, it's probably no coincidence that this "delay" coincides with the launch of AMD's Radeon RX 9070 series.

To recap, the RTX 5070 was unveiled by Jensen Huang at CES last month, wielding the GB205 GPU at its heart with 48 SMs (6,144 CUDA cores) and 12GB of GDDR7 memory. The GPU offers a 192-bit interface, equating to six memory modules, filled by 28 Gbps GDDR7 ICs for 672 GB/s of bandwidth. Nvidia has set a launch MSRP of $549 for the RTX 5070, which is $50 cheaper than the last-generation RTX 4070. Instead of declaring a definite launch date, Nvidia said the RTX 5070 would supposedly launch with the RTX 5070 Ti in February, but that's no longer true.
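The memory and core figures quoted above follow from simple arithmetic. Here is a minimal sketch of it; the function names are illustrative, and the 32 bits per memory module and 128 CUDA cores per SM are assumptions inferred from the numbers in this article, not official specifications.

```python
def memory_modules(bus_width_bits: int, bits_per_module: int = 32) -> int:
    """Number of memory modules needed to fill the bus.

    bits_per_module=32 is an assumption inferred from 192-bit / 6 modules.
    """
    return bus_width_bits // bits_per_module


def bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width times per-pin rate, bits to bytes."""
    return bus_width_bits * data_rate_gbps / 8


def cuda_cores(sm_count: int, cores_per_sm: int = 128) -> int:
    """Total CUDA cores.

    cores_per_sm=128 is an assumption inferred from 6,144 cores / 48 SMs.
    """
    return sm_count * cores_per_sm


if __name__ == "__main__":
    print(memory_modules(192))          # 192-bit bus
    print(bandwidth_gb_per_s(192, 28))  # 28 Gbps GDDR7
    print(cuda_cores(48))               # 48 SMs
```

With the article's inputs (192-bit bus, 28 Gbps, 48 SMs), these reproduce the six modules, 672 GB/s, and 6,144 cores cited above.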

Renowned tipster MEGAsizeGPU, in a tweet, alleged that the RTX 5070 is delayed, with retail availability pushed back to early March, likely to thwart AMD's RDNA 4 launch. In light of recent leaked benchmarks putting the RX 9070 XT just behind the RTX 4080 Super (in raster), Nvidia's RTX 5070 marketing may hinge on pricing instead of performance.

The RTX5070 will be delayed. Instead of February, it will be on the shelf in early March. (MEGAsizeGPU on X, February 12, 2025)

During the RTX 50 reveal, Jensen Huang proudly asserted that the RTX 5070 is equal to the RTX 4090 (with Multi Frame Generation, or MFG), a claim that might mislead many unsuspecting consumers. The RTX 5080 edges out the RTX 4080 Super by just 9%, which is quite disappointing for a gen-on-gen uplift, especially considering the RTX 4080 was 38% faster than the RTX 3080 Ti. In terms of other capabilities, Blackwell offers many new features such as Reflex 2.0, Smooth Motion, Multi Frame Generation, and... more AI horsepower. Still, it's best to temper expectations and anticipate a 15-20% uplift in (raster) performance, at best, versus the RTX 4070.

Both cards in the RX 9070 family are reportedly packed with 16GB of memory, and as a result, AMD is marketing these GPUs as 4K-ready. The same cannot be said about the RTX 5070, which might struggle with VRAM-hungry titles at higher resolutions. Even though Nvidia is working on Neural Texture Compression (NTC) technology to reduce texture memory footprint, it's still a ways off from mainstream adoption.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

-

valthuer

8 years after the 11 GB 1080 Ti, it's nothing short of disappointing that we're witnessing the release of 12 GB GPUs, like the 5070.

With AAA gaming becoming more demanding with each passing day, 16 GB should be a bare minimum for graphics cards, IMHO.

edzieba

> valthuer said: 8 years after the 11 GB 1080 Ti, it's nothing short of disappointing that we're witnessing the release of 12 GB GPUs, like the 5070.
>
> With AAA gaming becoming more demanding with each passing day, 16 GB should be a bare minimum, IMHO.

On the other hand, the first game directly impacted by not having 12GB vRAM available (at UHD render resolution) - The Last Circle - was only just released, whilst the first GPU with 12GB vRAM available was released a decade ago (2015's GTX Titan X). Real-world vRAM requirements in games have just not scaled up that much over time, and per-DRAM-die memory bandwidth has continued to grow without the need to brute-force it with wider bus widths (which requires more DRAM dies, which means more RAM capacity by default because leading-edge DRAM dies are only made in so small a capacity).

atomicWAR

Looks like Nvidia wants to try and steal some of AMD's thunder. But considering the state of launched 50 series products, I find it more likely Nvidia might end up helping AMD rather than hurting them. 50 series cards have been so disappointing. Unless AMD really botched their GPUs, this may well play out in their favor. Plus, hearing rumors AMD might actually launch a higher end SKU now, I suspect their 'refresh' chips might have more oomph than Nvidia would like.

atomicWAR

> edzieba said: On the other hand, the first game directly impacted by not having 12GB vRAM available (at UHD render resolution) - The Last Circle - was only just released, whilst the first GPU with 12GB vRAM available was released a decade ago (2015's GTX Titan X). Real-world vRAM requirements in games have just not scaled up that much over time, and per-DRAM-die memory bandwidth has continued to grow without the need to brute-force it with wider bus widths (which requires more DRAM dies, which means more RAM capacity by default because leading-edge DRAM dies are only made in so small a capacity).

Hardly. 12GB of VRAM even at 1440p has been problematic since the launch of 40 series cards, and even longer at 4K/UHD. Granted, it's not all games, but it's too many to be ignored. The simple truth is 12 GB is not enough VRAM for modern gaming. Games like Ratchet & Clank: Rift Apart can exceed 12GB of VRAM at 1080p with max settings. Honestly, 16GB should be the bare minimum cards launch with in this day and age, IMO.

valthuer

> atomicWAR said: Games like Ratchet & Clank: Rift Apart can exceed 12GB of VRAM at 1080p with max settings.

Yep. And the Resident Evil 4 remake requires 13.73 GB of VRAM at max 1080p settings.

Along with 2-3 more games, this was the very reason I decided to get rid of my 4070 Ti, back in 2023, and buy a 4090.

I was getting CTDs with Direct3D fatal errors, due to my card's insufficient VRAM.

Haven't encountered any problems ever since.

Memory bandwidth sure is helpful, but it's not enough to save you by itself.

Shiznizzle

> atomicWAR said: Hardly. 12GB of VRAM even at 1440p has been problematic since the launch of 40 series cards, and even longer at 4K/UHD. Granted, it's not all games, but it's too many to be ignored. The simple truth is 12 GB is not enough VRAM for modern gaming. Games like Ratchet & Clank: Rift Apart can exceed 12GB of VRAM at 1080p with max settings. Honestly, 16GB should be the bare minimum cards launch with in this day and age, IMO.

I have a 3060 12GB. A comment I read this week stated that 8 GB of VRAM was not enough, or barely enough, to run at 1080p. Since this is my monitor setup, I fired up the most demanding game I had in my library, Shadow of the Tomb Raider, to see what it was demanding. I wanted to see for myself what the score was.

This was a game which did run on my previous 1060 6GB, but ran nowhere near max settings. It was a game of compromises between getting decent refresh rates and eye candy. Lots of sacrifices. I would rather have the game be playable with playable refresh rates and no stuttering than lots of eye candy and the FPS yo-yo-ing, which is noticeable.

So I fired up the 3060 and turned everything to max at 1080p. My monitor is limited to 60Hz, so that is my refresh rate. It did run it at max and at 60 FPS. Memory usage was at nearly 7 GB though. And that at 1080p.

More and more people are wanting to move up to 1440p. I am one of them. With the upcoming 5060 and its 8 GB, I think that is not enough for me. But then again, I am going AMD this time around no matter what the specs say. Linux is easier to deal with on an AMD card.

Methinks the 5060 will barely be able to handle 1440p, at sub-60 FPS, with its 8GB of VRAM. Lots of people are going to be disappointed.

RTX 2080

> Shiznizzle said: I have a 3060 12GB. A comment I read this week stated that 8 GB of VRAM was not enough, or barely enough, to run at 1080p. Since this is my monitor setup, I fired up the most demanding game I had in my library, Shadow of the Tomb Raider, to see what it was demanding. I wanted to see for myself what the score was.
>
> This was a game which did run on my previous 1060 6GB, but ran nowhere near max settings. It was a game of compromises between getting decent refresh rates and eye candy. Lots of sacrifices. I would rather have the game be playable with playable refresh rates and no stuttering than lots of eye candy and the FPS yo-yo-ing, which is noticeable.
>
> So I fired up the 3060 and turned everything to max at 1080p. My monitor is limited to 60Hz, so that is my refresh rate. It did run it at max and at 60 FPS. Memory usage was at nearly 7 GB though. And that at 1080p.
>
> More and more people are wanting to move up to 1440p. I am one of them. With the upcoming 5060 and its 8 GB, I think that is not enough for me. But then again, I am going AMD this time around no matter what the specs say. Linux is easier to deal with on an AMD card.
>
> Methinks the 5060 will barely be able to handle 1440p, at sub-60 FPS, with its 8GB of VRAM. Lots of people are going to be disappointed.

The XX60 series has been a 1080p-class card for at least the last decade. They can be used at 1440p, but they aren't intended for it.

When the 2060 super came out, everyone said to skip the 2070 for 1440p and buy the 2060 super instead. The same thing happened with the 3060 Ti. Problem is, they very quickly became inadequate for 1440p gaming.

The RTX 5060 is intended for 1080p. It’ll do that adequately. You want more VRAM so that you can play at 1440p? Buy a 5070. Want even more? Buy a 5070 Ti. Or an AMD card, it’s up to you.

Gururu

I just can't help but feel that the 90X0 lineup is going to be smooth and polished. They've patiently waited and have been rewarded with news after news of Nvidia falling on their face.

bourgeoisdude

> Gururu said: I just can't help but feel that the 90X0 lineup is going to be smooth and polished. They've patiently waited and have been rewarded with news after news of Nvidia falling on their face.

Never underestimate AMD's ability to mess up. I'm cautiously optimistic, but if they release the 9070 XT at $700, they've missed the point.

That being said, I'm still pretty happy with my 7800 XT.

Mr Majestyk

> bourgeoisdude said: Never underestimate AMD's ability to mess up. I'm cautiously optimistic, but if they release the 9070 XT at $700, they've missed the point.
>
> That being said, I'm still pretty happy with my 7800 XT.

Did you see the leaked Canadian pricing? It's atrocious. AIB cards at $850+ USD.