
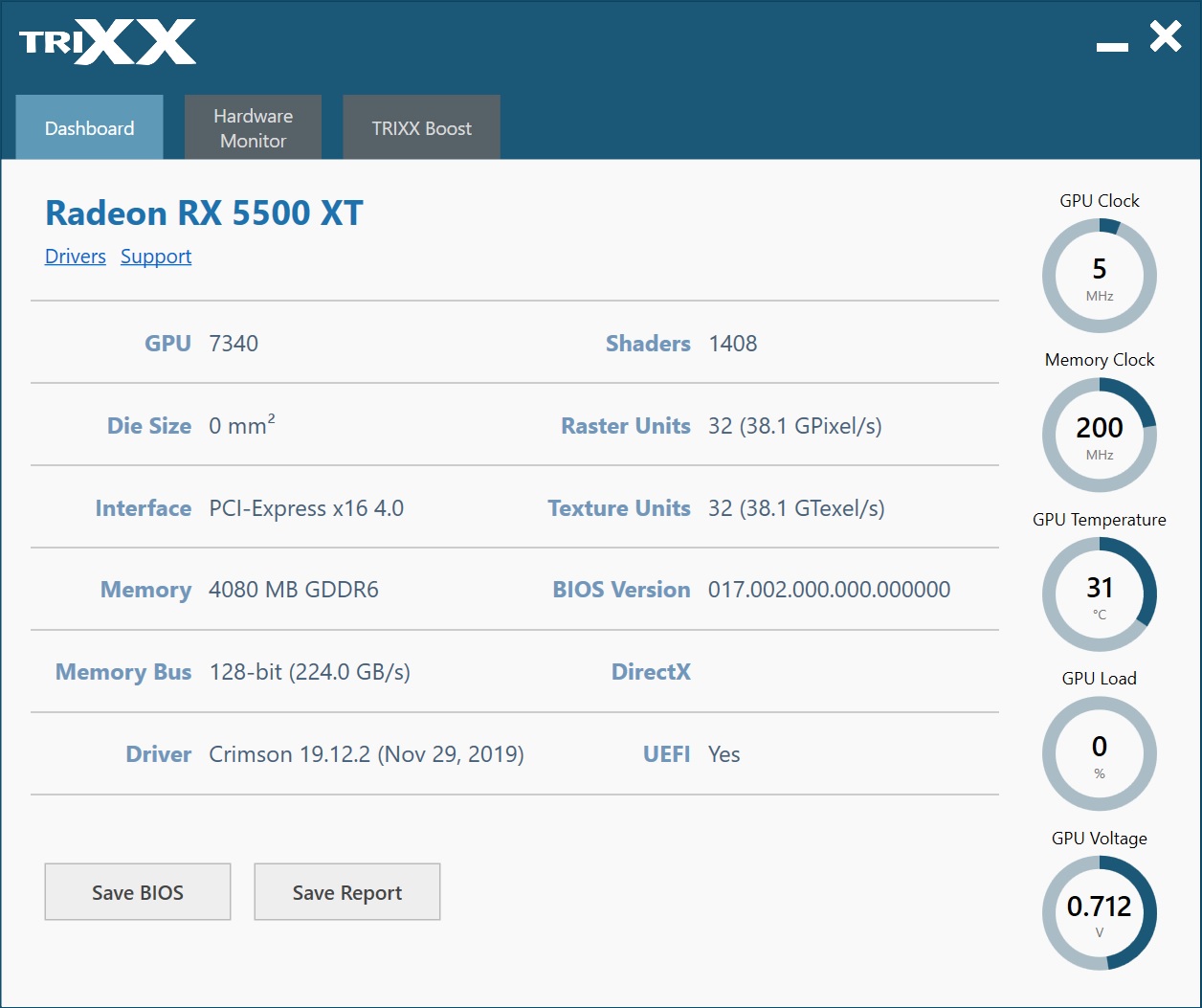

Sapphire recently released an updated version of the Trixx software with a newly designed GUI and other tweaks. The software’s opening screen, labeled Dashboard, includes GPU clock rate, memory clock rate, GPU temperature, and GPU load indicators. The GPU voltage field was greyed out. We’ve seen this updated software twice recently, and there are no overclocking options available. Outside of the TRIXX Boost section, the software for this card is essentially for monitoring purposes.

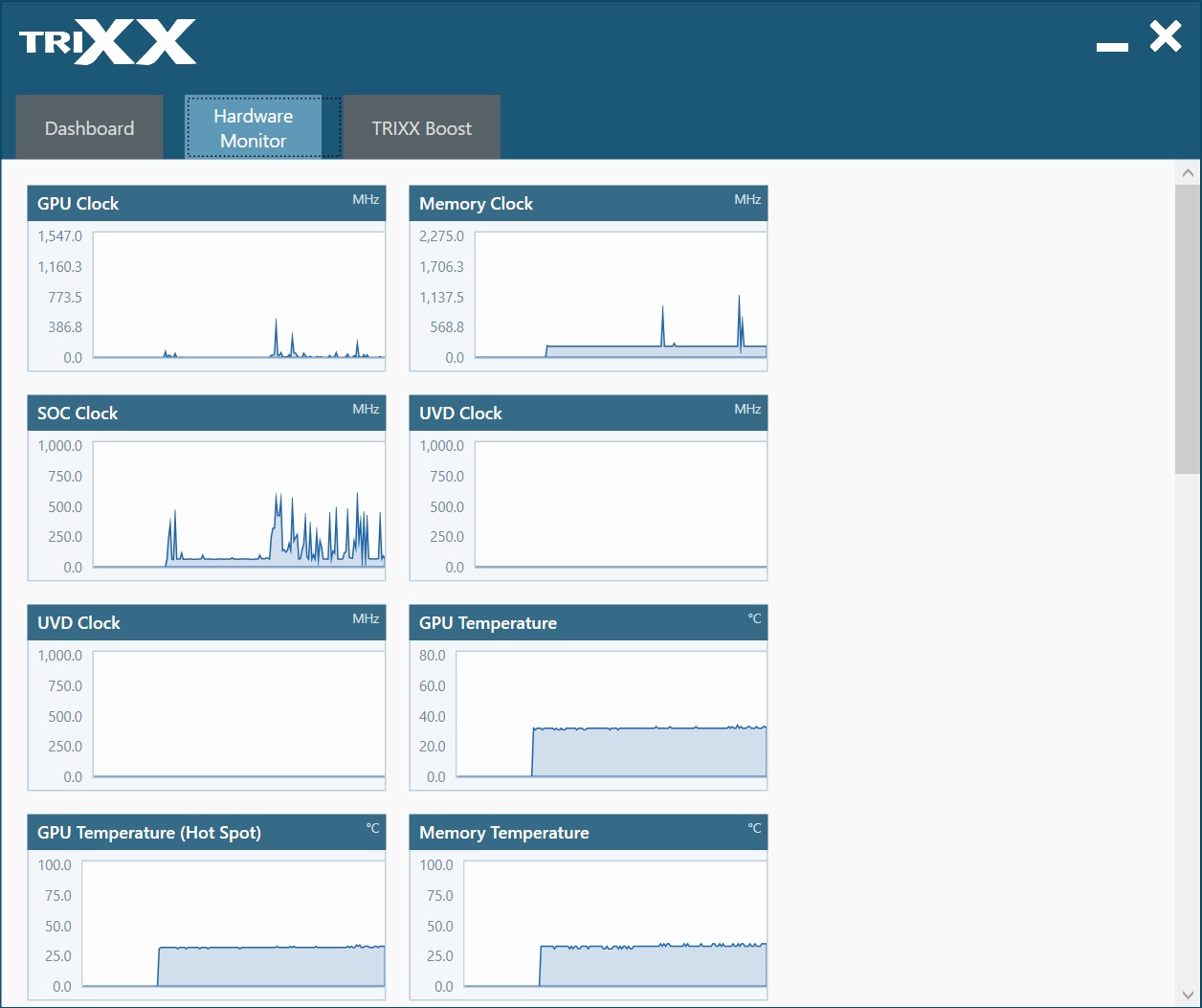

The Hardware Monitor tab conveys much of the same information already available in GPU-Z’s Sensors tab. It just presents that data exclusively as line graphs.

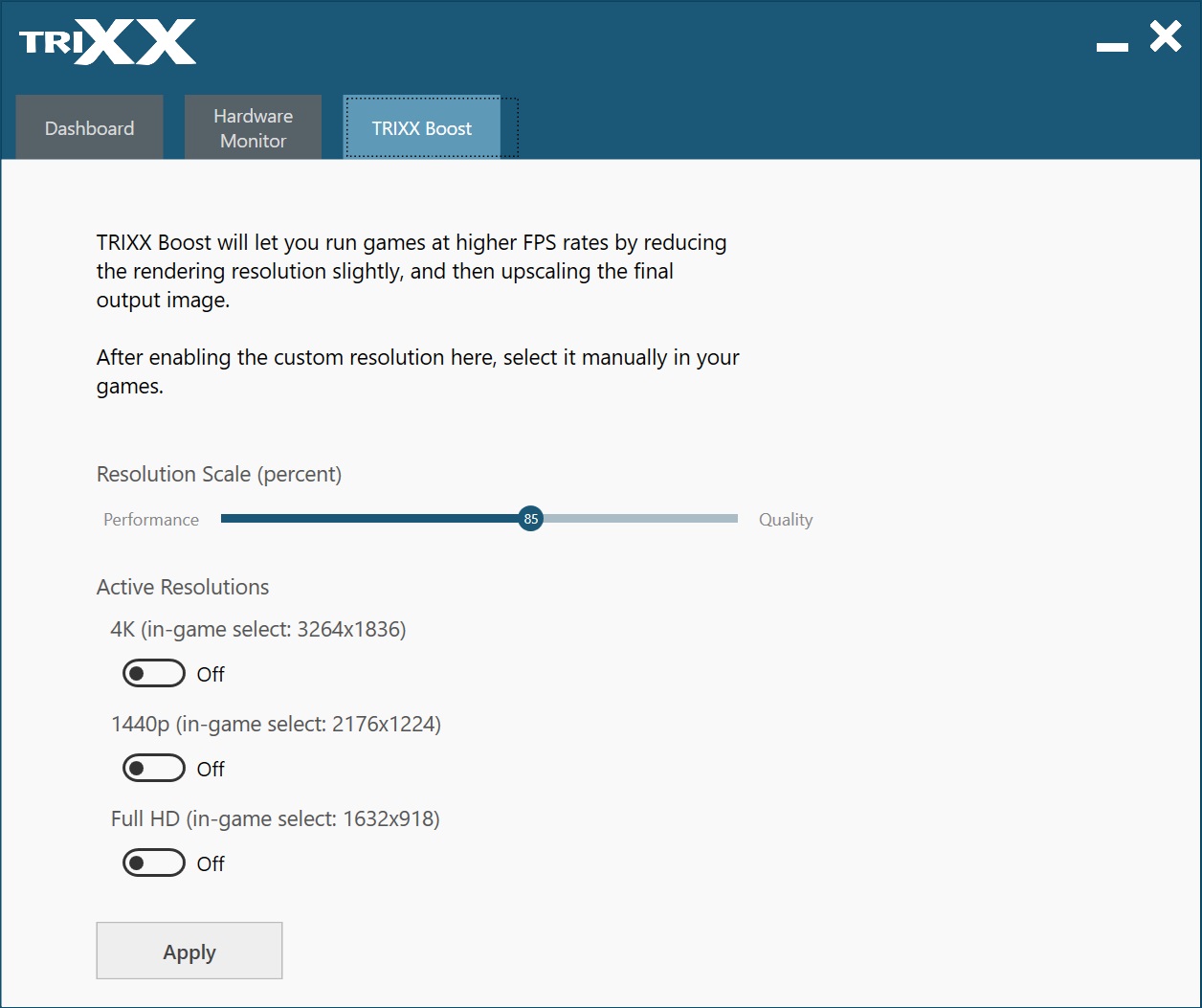

TRIXX Boost is Sapphire’s attempt at making upsampling plus Radeon Image Sharpening (RIS) a more commonly used combination of features. This was a big part of AMD’s Radeon RX 5700-series story, which we didn’t get to explore since a proper analysis requires careful consideration of image quality. After all, you’re running games at a lower resolution, upscaling the final output, and then applying a contrast-adaptive sharpening algorithm. By default, TRIXX Boost is set to an 85% resolution scale. At 4K, the feature creates a custom 3264x1836 resolution, which you then choose instead of 3840x2160. Just remember that RIS currently supports only DirectX 9, DirectX 12, and Vulkan games.
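As a quick check on the numbers above, the sketch below scales each dimension of the native resolution by the 85% default. The function name `scaled_resolution` is hypothetical, and flooring the result is an assumption for illustration; this review doesn’t say how TRIXX Boost rounds when a scale factor doesn’t divide evenly.

```python
def scaled_resolution(width: int, height: int, percent: int = 85) -> tuple[int, int]:
    """Scale both dimensions by an integer percentage (hypothetical helper).

    Integer arithmetic sidesteps floating-point rounding error. Flooring is
    an assumption; TRIXX Boost's actual rounding rule isn't documented here.
    """
    return width * percent // 100, height * percent // 100


print(scaled_resolution(3840, 2160))  # (3264, 1836), matching the 4K figure above
```

At 85%, both 3840 and 2160 scale to whole numbers, so the rounding rule doesn’t matter for the 4K case.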

Performance naturally improves quite a bit compared to native 4K, but you’ll have to decide for yourself whether the trade-off in quality is acceptable. We played around with TRIXX Boost briefly in Shadow of the Tomb Raider and Metro Exodus and couldn’t tell the difference between 3264x1836 and 3840x2160. Every game is going to be different, though, as will each gamer’s opinion of fidelity.
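The performance gain is easiest to see in pixel counts. The short calculation below compares how many pixels the GPU renders at 3264x1836 versus native 3840x2160. Because both axes shrink to 85%, the count falls to 0.85² of native. Treat this as a rough ceiling on the savings rather than a frame-rate prediction. Not all rendering work scales with resolution, and the sharpening pass adds some work of its own. The helper name `pixel_fraction` is our own.

```python
def pixel_fraction(render: tuple[int, int], native: tuple[int, int]) -> float:
    """Fraction of native pixels actually rendered at the lower resolution."""
    (rw, rh), (nw, nh) = render, native
    return (rw * rh) / (nw * nh)


frac = pixel_fraction((3264, 1836), (3840, 2160))
print(f"Rendered pixels: {frac:.2%} of native 4K")  # Rendered pixels: 72.25% of native 4K
```

That works out to roughly 28% fewer pixels per frame than native 4K.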



Joe Shields is a staff writer at Tom’s Hardware. He reviews motherboards and PC components.

tennis2 Can we please get an "average of all benchmarks" graph in the summary page of GPU reviews? It's difficult to glean a winner when cards flip-flop depending on the game tested.

King_V Interesting, and definitely confusing when trying to judge overall performance, given how it does better at some games and worse at others compared to Nvidia's cards.

Also, this gave me a bit of a reminder that I've kind of been underestimating the RX 590, though its power draw is just too much for what it does.

Also, on page 4:

The outgoing XFX RX 590 Fat Boy averaged 140W.

and

The XFX RX 590 Fat boy averages 149W. Nothing out of the ordinary here.

The first one is a little hard to tell, given how the 590's plot on the graph swings so wildly about, but that number on the second one seems wrong, considering that only once does a plot point for it ever dip below 150W. Eyeballing it, I want to say it's about 190-ish watts?

Overall, though, I'm glad it's at the least trading blows with the 1650 Super, and pleasantly surprised to see it occasionally flirting with 1660 territory. Still, odd in the places where it does fall short, as the 4GB doesn't seem to hurt the 1650 Super the same way.

I'm definitely looking forward to the test results when the 8GB variant is added to the graphs, and thus far, this seems to bode well for the upcoming RX 5600 XT.

And yes, power efficiency has improved significantly, though Nvidia hasn't stood still on this, so AMD still has a bit of work to do.

Maybe Mini-Me might use his Christmas money from Grandma and Grandpa to go for an RX 5600 XT, when they come out in January, to add to his ChromaTron build, currently in progress.

mitch074 I would like the review more if I could get a more direct comparison with my reference RX480 8GB - I bought it almost when it came out and it serves me well, but I don't see myself upgrading for less than a 50% performance increase.

tennis2

mitch074 said: I would like the review more if I could get a more direct comparison with my reference RX480 8GB - I bought it almost when it came out and it serves me well, but I don't see myself upgrading for less than a 50% performance increase.

RX480/RX580 (exact same GPU) is about 15% slower than an RX590 (used in this review). Depending on how much you paid for your RX480 and how much of a sale you can find on a GTX1660Ti, that's the 50% improvement for the same $$ step you're asking about. Unfortunately AMD has a gaping hole between the 5500XT and the 5700. Ain't nobody buying Vega 56's.

InvalidError

tennis2 said: Unfortunately AMD has a gaping hole between the 5500XT and the 5700.

The 5600 is coming, should drop somewhere in-between.

cryoburner The 5500XT seems decidedly underwhelming from a pricing standpoint, considering it generally doesn't perform much faster than an RX 580, and that level of performance has been available in the $170-$200 range for quite a while already. Even the RX 480 had nearly this level of performance, and the 4GB variant of that card launched for $199 back in June of 2016. A full 3 1/2 years later, we're only getting somewhere around 10-15% more performance at a slightly lower price, at least for the 4GB version, and at this point you'll probably want the higher VRAM version to keep up with future games.

And compared to Nvidia's current offerings, this brings nothing new to the table. The 4GB 5500XT appears to offer similar performance to a 1650 SUPER at a slightly higher MSRP. And the 8GB version might cope a little better with games that have high VRAM requirements, but at a 25% higher MSRP than the 1650 SUPER. Around that price level, you can snag a faster 1660 for just a little more.

The only notable advantage over AMD's existing sub-$200 lineup would be the reduced power consumption, and in turn heat output, with the new cards being much closer to Nvidia's offerings. Again, that only brings them nearly on-par with what the competition is already offering though, and not providing any real advantage over products already on the market. These cards should have been priced at least $20 lower at launch to differentiate them from existing models. I suspect they didn't do that to give RX 500 series cards a chance to clear out first though, since it sounds like there's still a lot of inventory around. It's also possible that limited 7nm production might have played a role, with them not wanting to make the lower-margin products any more attractive than necessary. Perhaps prices will level down in the coming months though.

tennis2 I'll say that AMD's superior software features are a scale-tipper for me. Wattman and Chill are fantastic and I'd really miss those features if I went Nvidia. Haven't tried the new Performance Boost scaling yet.

On pricing, yes the 5500XT is a bit expensive comparatively. More than likely, prices will quickly correct. Not to forget that AMD is probably having to pass along a "7nm tax" in its products since 7nm is bleeding edge right now and not many fabs even have the capability.

King_V I would be a little surprised if, for no other reason, the holidays drive the prices down some - the 5500 XT, which in turn would create downward pressure on the Polaris cards.

I've been wrong before, though.

InvalidError

tennis2 said: Not to forget that AMD is probably having to pass along a "7nm tax" in its products since 7nm is bleeding edge right now and not many fabs even have the capability.

The Navi dies are about half the size of equivalent-performance Polaris dies, so the "7nm" tax would need to be nearly 100% for AMD to need to raise MSRPs to maintain gross margins.

How many fabs "have the capability" does not really matter for bulk clients like AMD: they pay a predetermined cost per wafer for whatever volume they contracted for the duration of that contract, and most of those contracts for 2020 got nailed down a long time ago. The companies who may have to pay the big bucks for wafers are smaller customers fighting over whatever capacity is left.

Pretty sure the launch pricing is just a pre-xmas cash grab and prices will come down a fair amount early next year, maybe sooner if enough people pick the 1650S over the 5500XT.

digitalgriffin

admin said: AMD’s 4GB Radeon RX 5500 XT is a capable 1080p gamer at $169. But if you need to play at Ultra, memory becomes an issue and you might want to step up to the 8GB model or Nvidia’s GTX 1660.

AMD Radeon RX 5500 XT Review: 7nm RDNA on a Budget

Current market price of $229 for 8Gigs makes it DOA.

$170 RX590's are common and there's just not enough of a difference to justify a $60 price jump.

$195 Maybe. But that's pushing it.