
With a starting price of $180, Intel is clearly going after the mainstream budget gaming market. Some users may have higher resolution displays, but 1080p will be the primary use case for the Arc A580, so that's where we'll start.

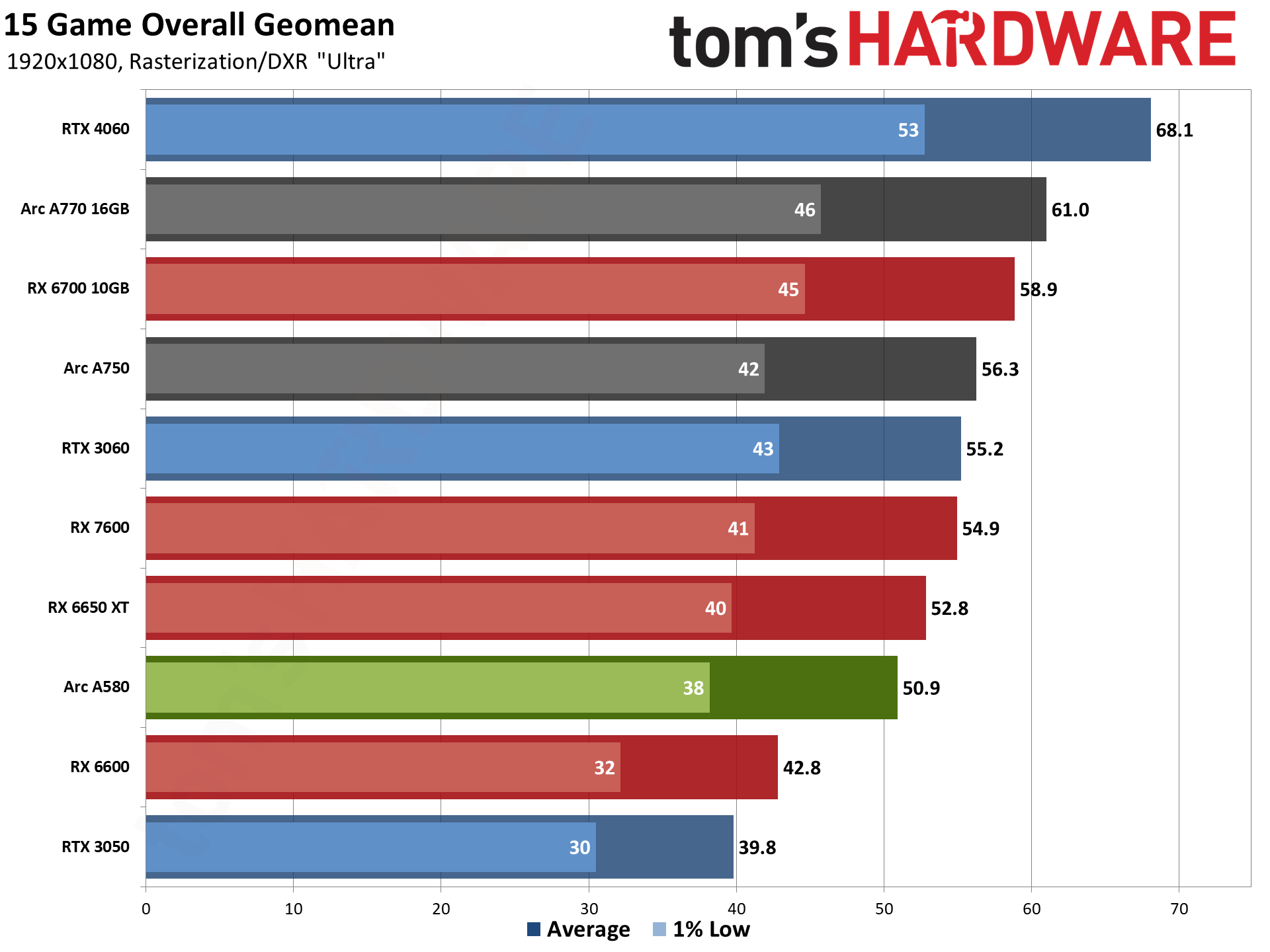

Our current test regimen gives us a global view of performance using the geometric mean of all 15 games in our test suite, including both the ray tracing and rasterization test suites. Then we've got separate charts for the rasterization and ray tracing suites, plus charts for the individual games. If you don't like the "overall performance" chart, the other two are the same views we've previously shown.
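Here is a minimal sketch of a geometric mean over per-game frame rates, to show what an "overall performance" number like this involves. The helper name `geometric_mean` and the fps figures are hypothetical and chosen only for illustration. They are not our measured results or our actual charting code. A geometric mean suits this job because the ratio between two cards' geometric means equals the geometric mean of their per-game ratios. Each game's relative difference therefore counts equally, and a single very high-fps title can't dominate the way it would in a simple average.

```python
import math


def geometric_mean(fps_values: list[float]) -> float:
    """Geometric mean of positive per-game frame rates (hypothetical helper)."""
    if not fps_values or any(v <= 0 for v in fps_values):
        raise ValueError("need at least one positive fps value")
    # Average the logarithms, then exponentiate. This equals the n-th root of
    # the product but avoids overflow when many games are multiplied together.
    return math.exp(sum(math.log(v) for v in fps_values) / len(fps_values))


# Illustrative numbers only, not measured results.
card_a = [120.0, 60.0, 30.0]
card_b = [100.0, 66.0, 33.0]

print(round(geometric_mean(card_a), 1))  # 60.0

# The ratio of the two overall scores equals the geometric mean of per-game ratios.
per_game_ratios = [a / b for a, b in zip(card_a, card_b)]
print(math.isclose(geometric_mean(card_a) / geometric_mean(card_b),
                   geometric_mean(per_game_ratios)))  # True
```

Python 3.8 and later also ship `statistics.geometric_mean`, which computes the same quantity.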

Our test suite intentionally leans more heavily on ray tracing games than a typical selection of titles would. That's largely because ray tracing games are the most demanding games around, so if a new card can handle ray tracing reasonably well, it should do just fine with less demanding games. Still, for a sub-$200 graphics card, maxed-out ray tracing performance probably isn't the first consideration for anyone shopping in this segment, and we'll keep that in mind as we sift through the results.

As we expected, given the price, the Arc A580 sits near the bottom end of our charts. However, keep in mind that it's currently the least expensive card in the charts as well. The closest card, price-wise, is the RX 6600 from AMD, and the A580 clearly beats that card and comes relatively close to the RX 6650 XT.

Nvidia's RTX 3050 sits at the bottom of the chart, as you'd expect. The RTX 3060, meanwhile, delivers about 10% higher performance than the A580, and the same goes for the Arc A750. Finally, among the higher-priced Arc A770 16GB, RX 6700 10GB, and RTX 4060, Nvidia's RTX 4060 claims the top spot with its latest Ada Lovelace architecture. And that's without factoring in DLSS or Frame Generation, though upscaling at 1080p tends to produce more noticeable artifacts than at 1440p and 4K.

This global view of performance also includes ray tracing, where AMD's GPUs struggle more than their Intel and Nvidia counterparts. Let's move on to the rasterization charts to see where things stand for a more realistic suite of games that you'd play on the A580.

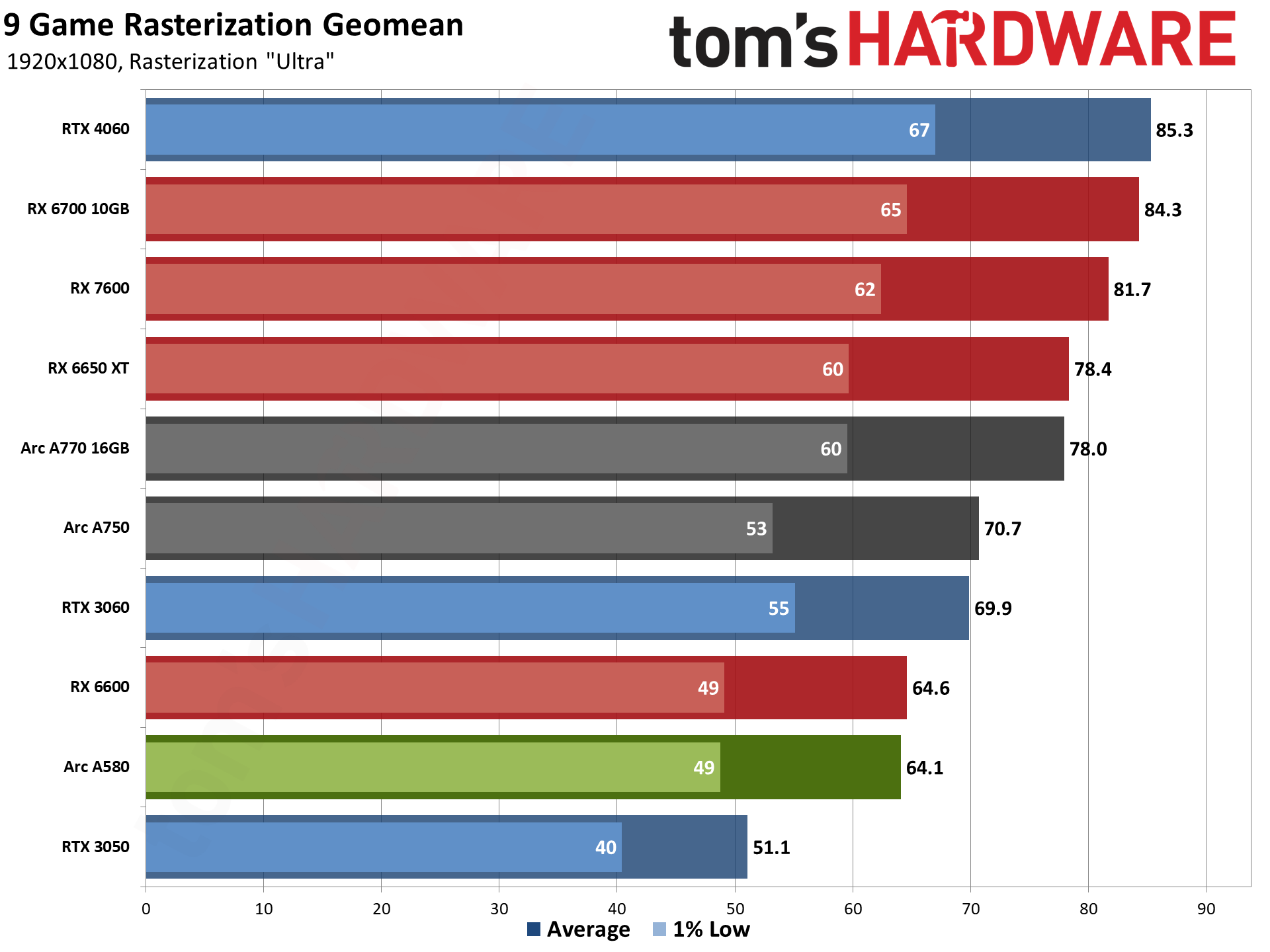

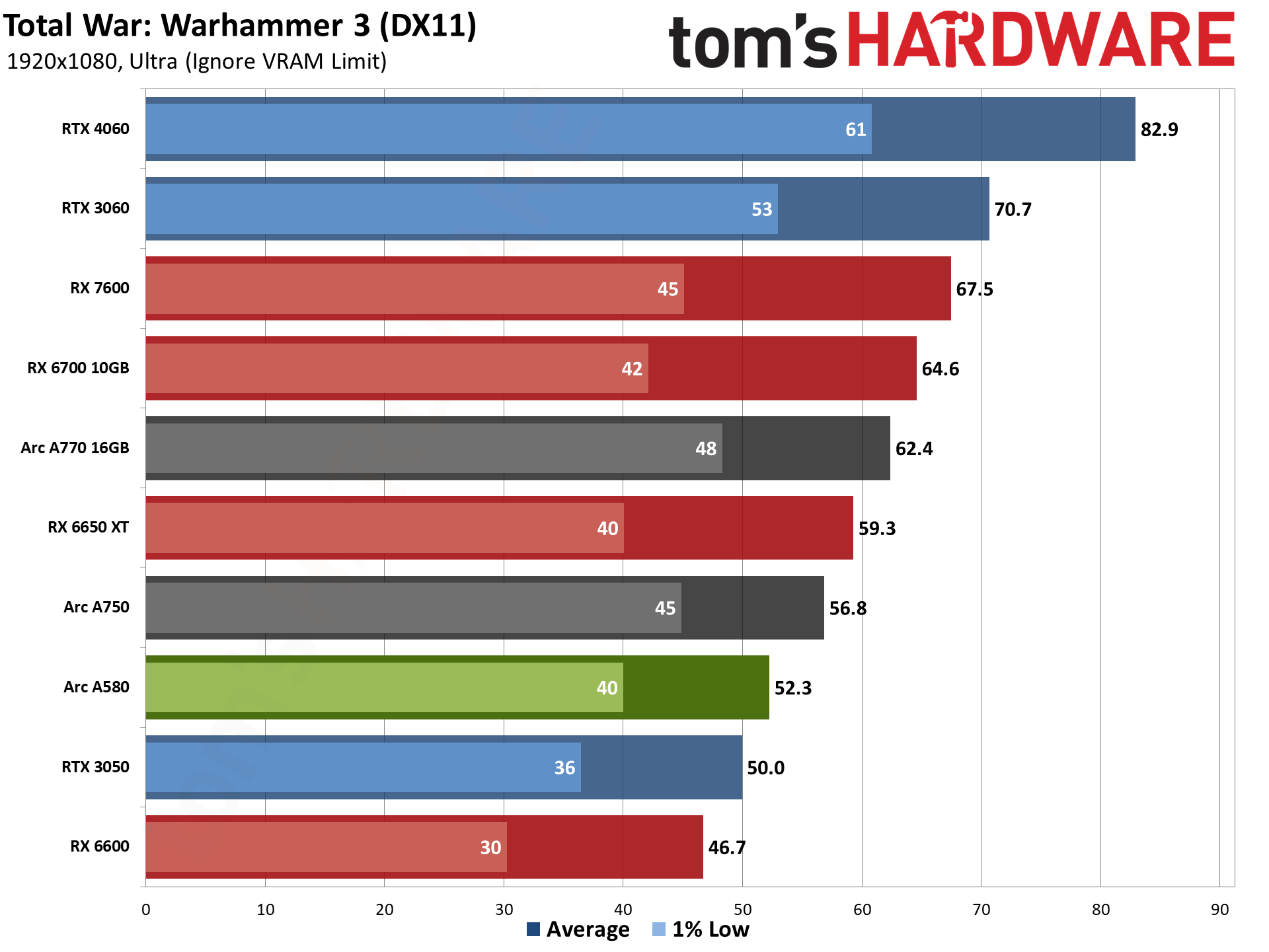

Looking just at the nine rasterization games in our test suite, the Arc A580 and RX 6600 are basically tied, with a very slight advantage to AMD's GPU. The A750 is again about 10% faster, as is the RTX 3060, though the 1% lows are quite a bit better on Nvidia's card.

Stepping up from the RX 6600 within AMD's lineup brings quite a jump in performance, with the RX 6650 XT and RX 7600 offering 22% and 28% higher performance, respectively. Note also that even the fully enabled Arc A770 16GB can't quite match the rasterization performance of the RX 6650 XT, and Nvidia's RTX 4060 outpaces even AMD's RX 6700 10GB. There's still some question whether 8GB of memory is "enough" for modern games, but if you're planning on gaming at 1080p, it generally won't be a problem.

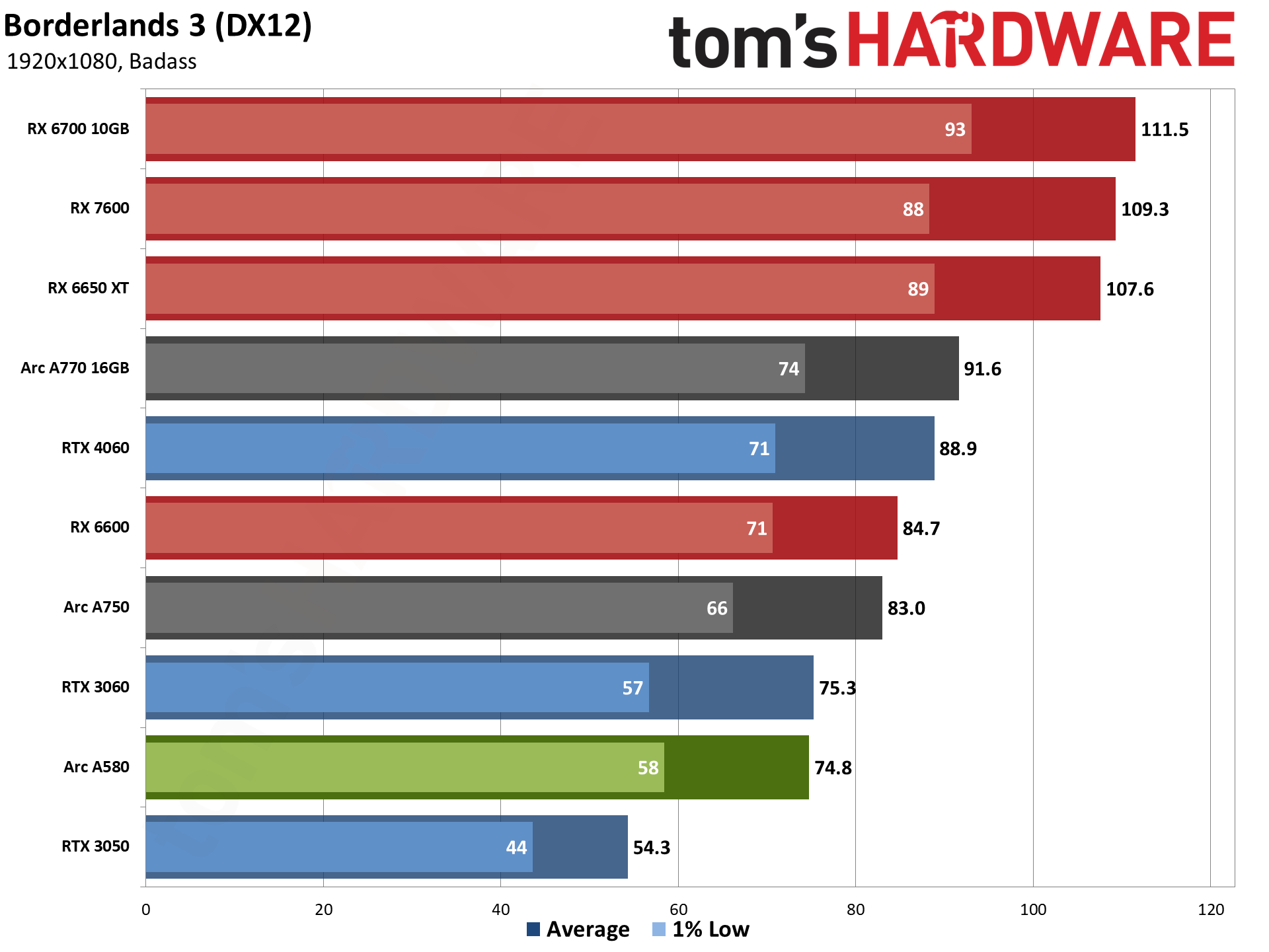

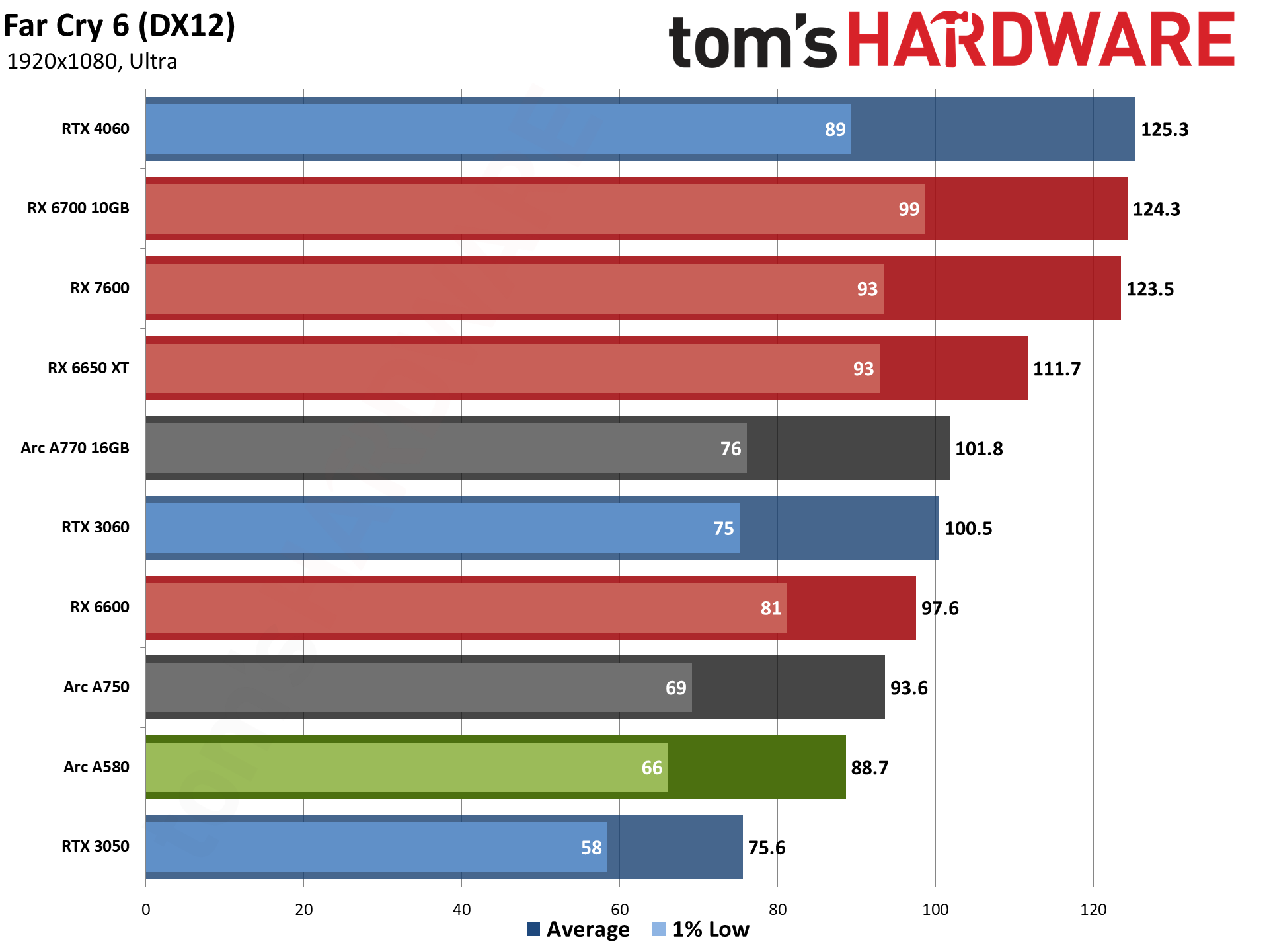

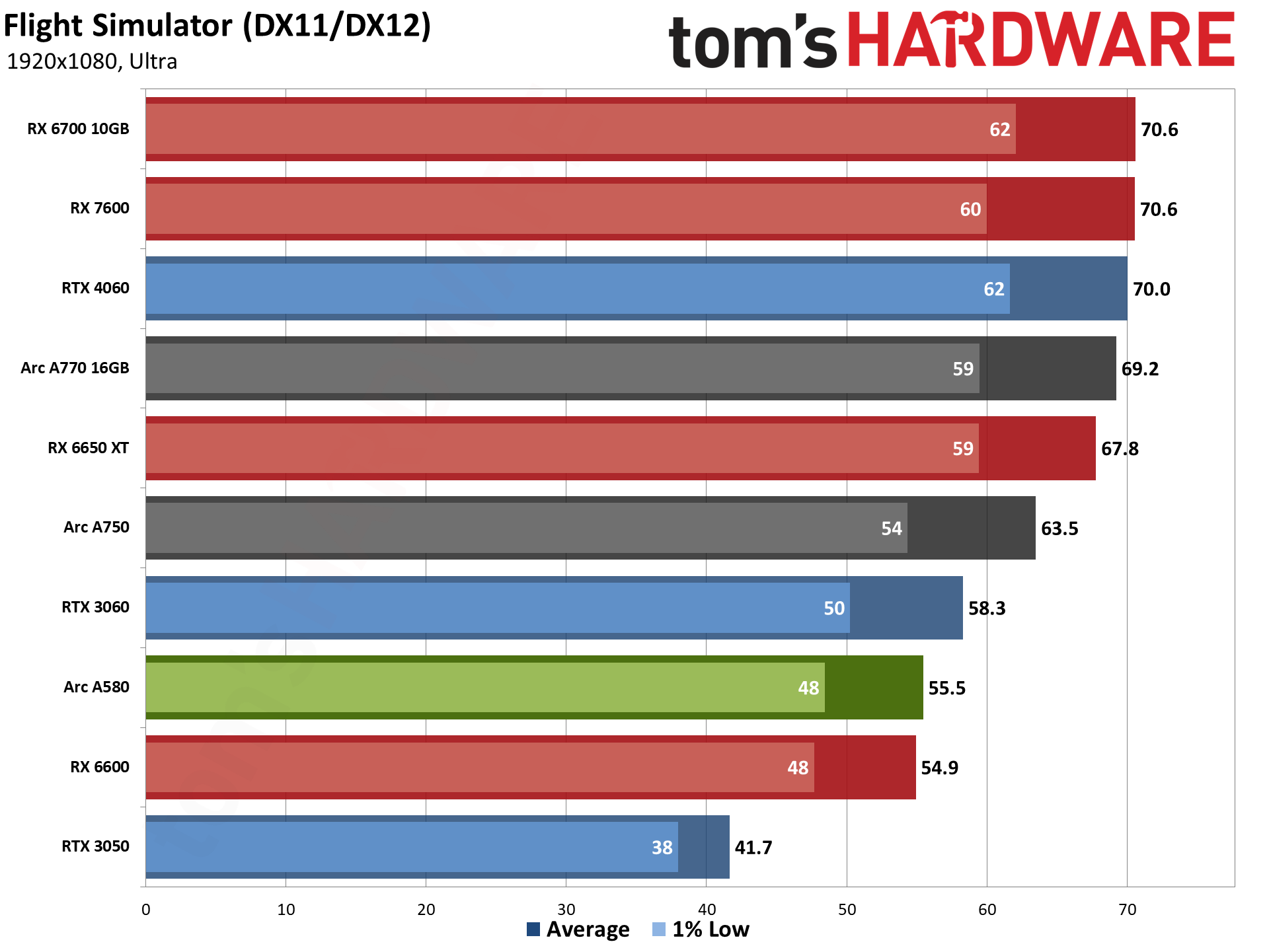

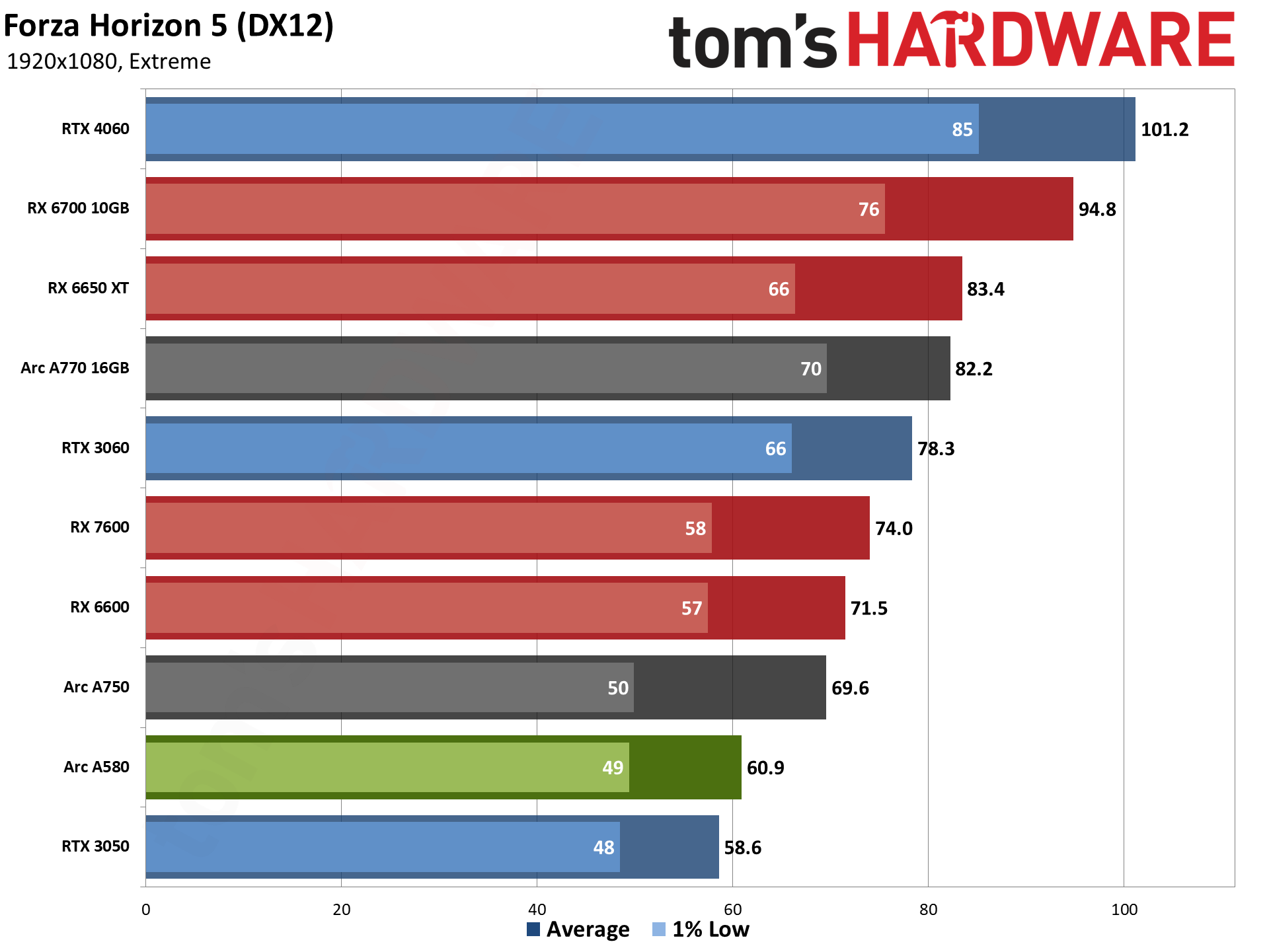

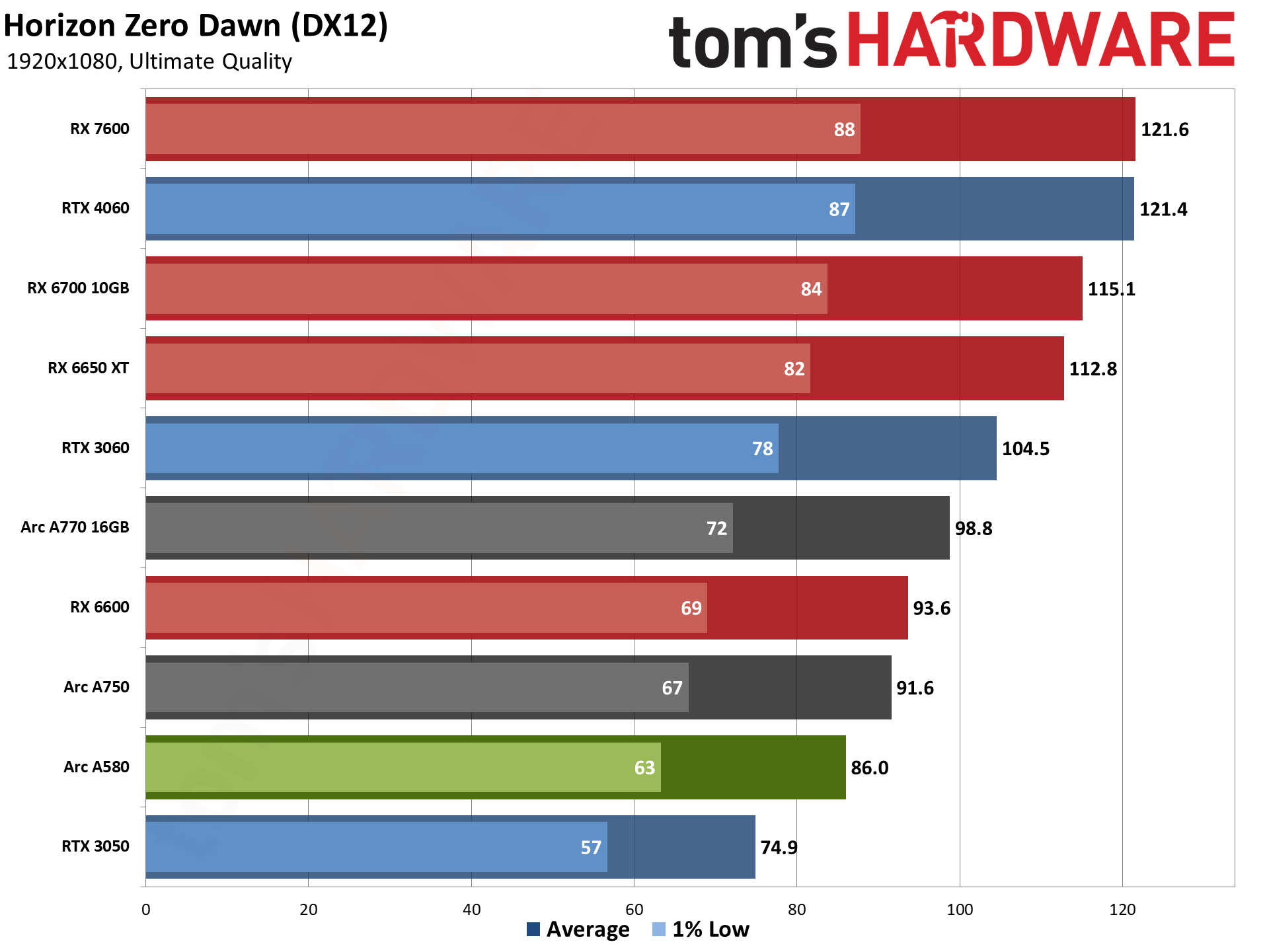

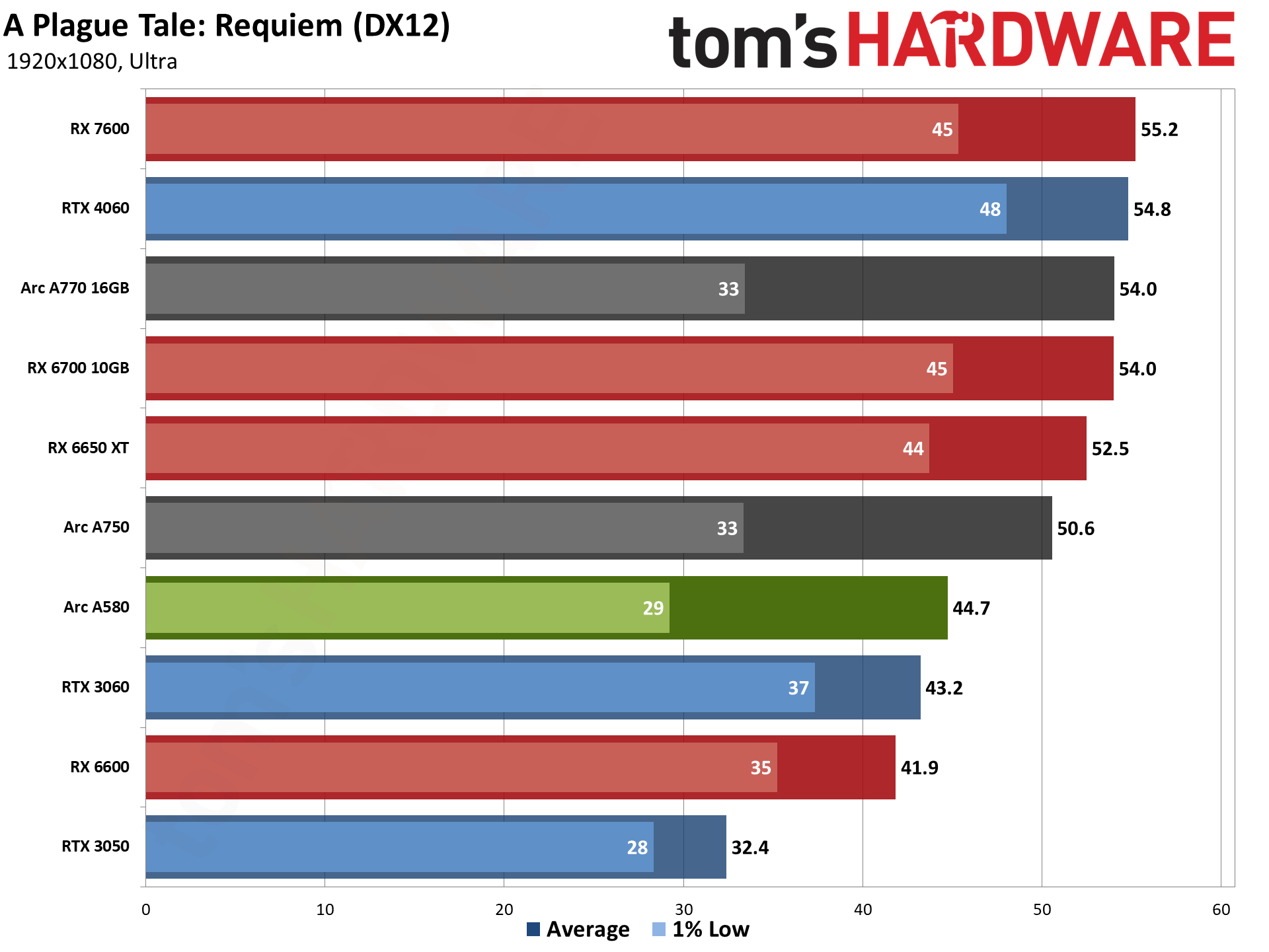

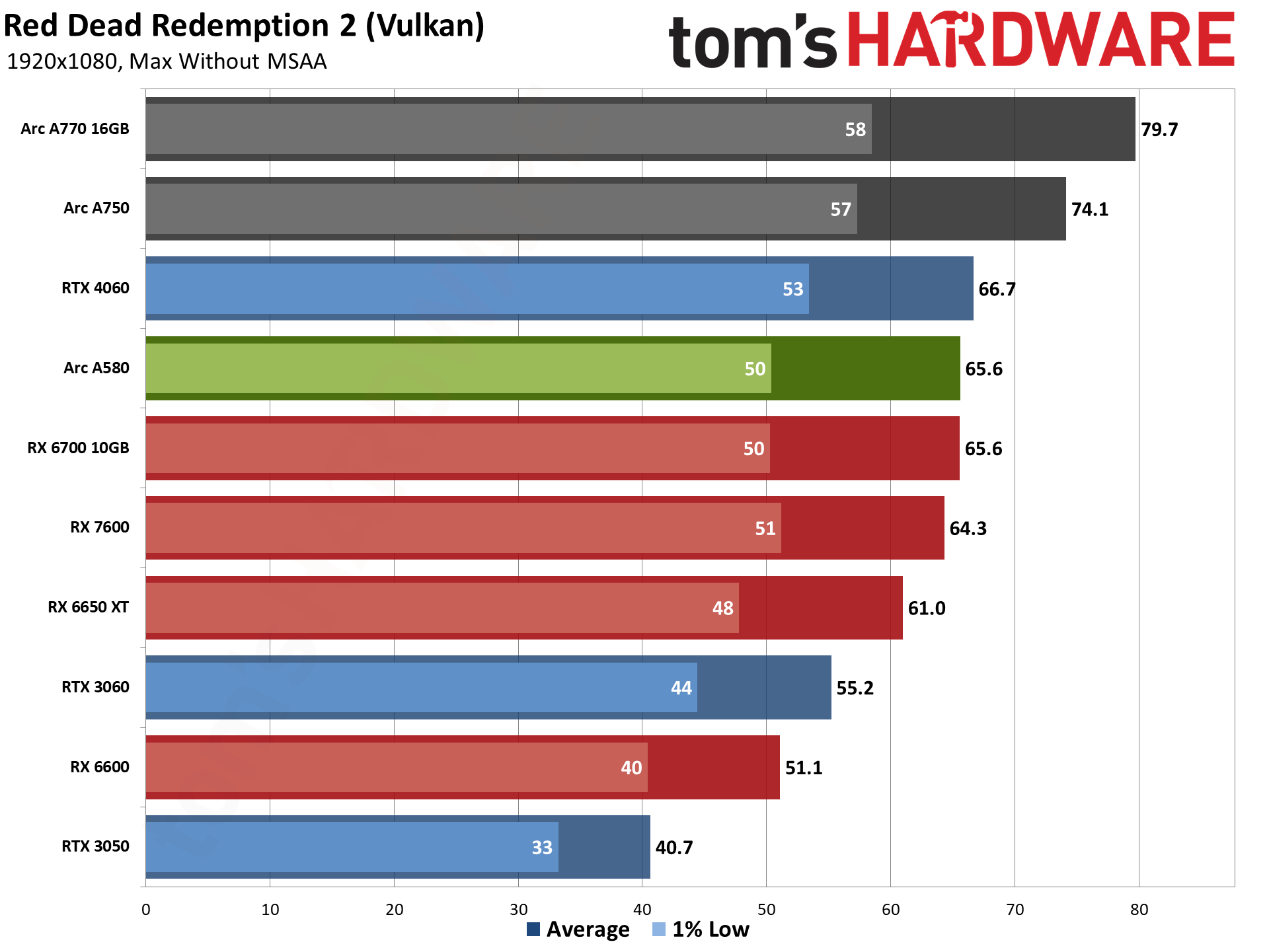

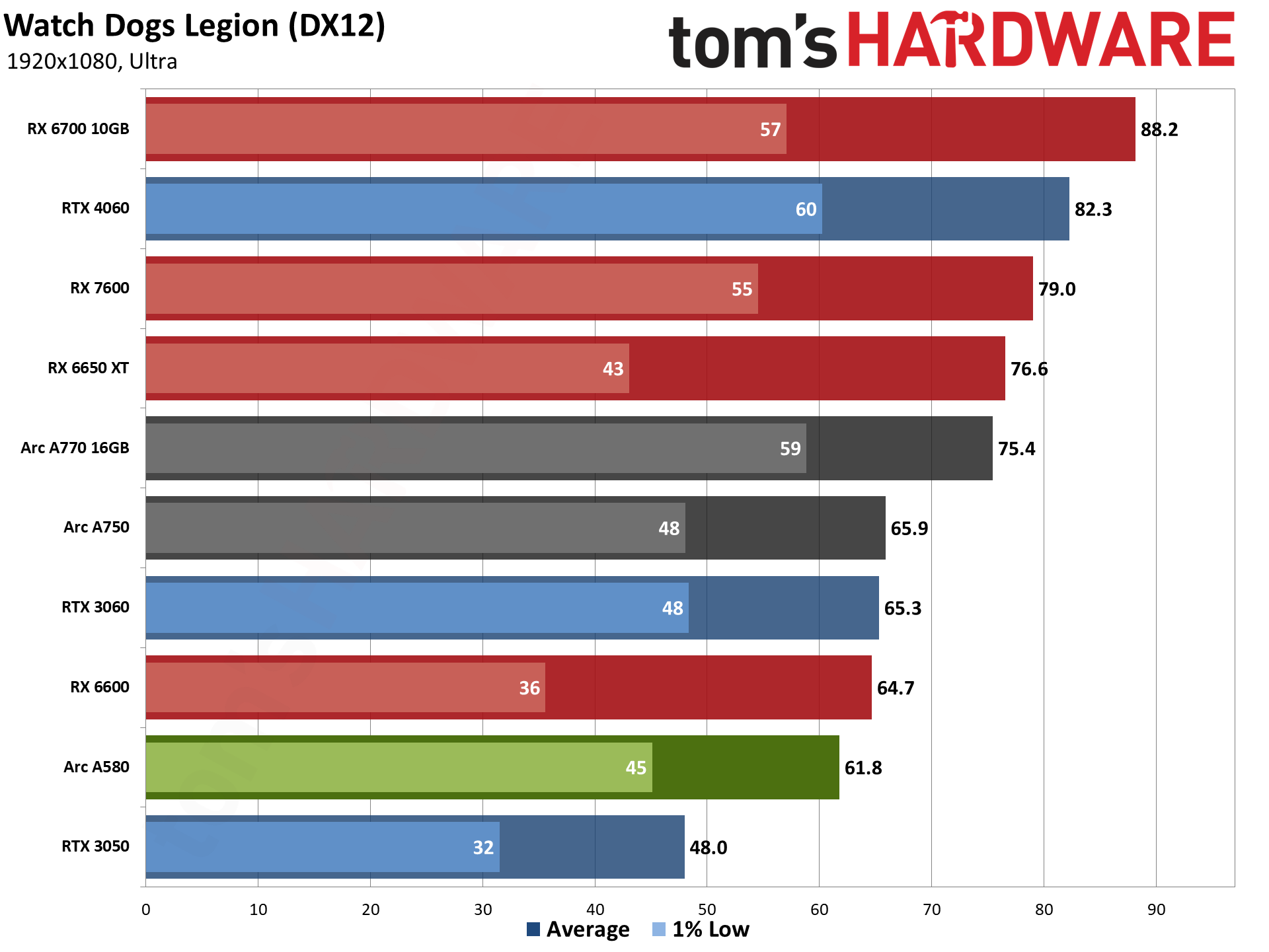

Looking at the individual game charts, the rankings shuffle around quite a bit. At times, the A580 can match or even surpass the RTX 3060; other times, it falls well behind Nvidia's previous-generation part. But it's universally faster than the RTX 3050, in case you were considering picking up one of those. Intel's best results overall come in Red Dead Redemption 2, which is also the only Vulkan API game in our suite, an interesting wrinkle.

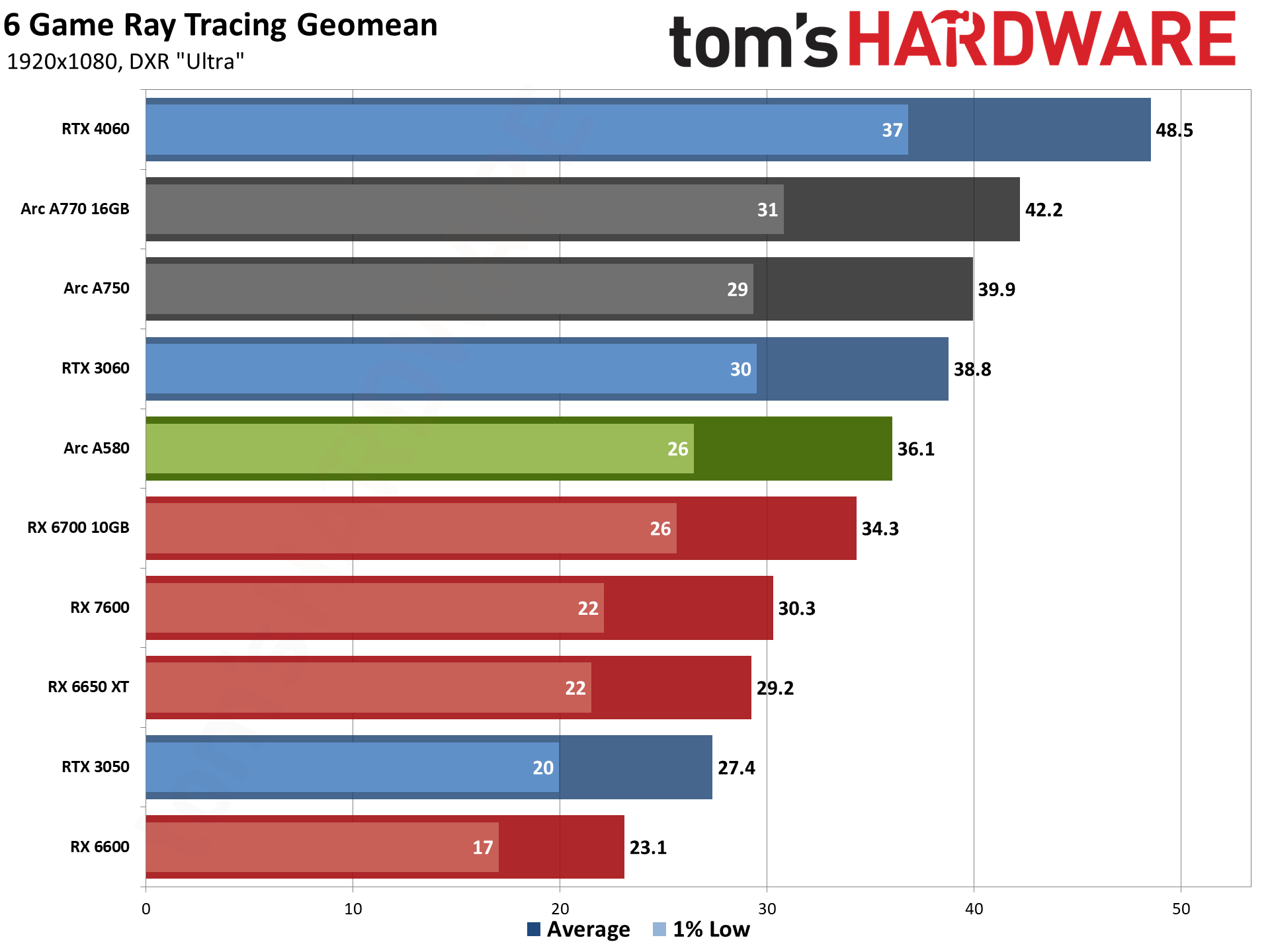

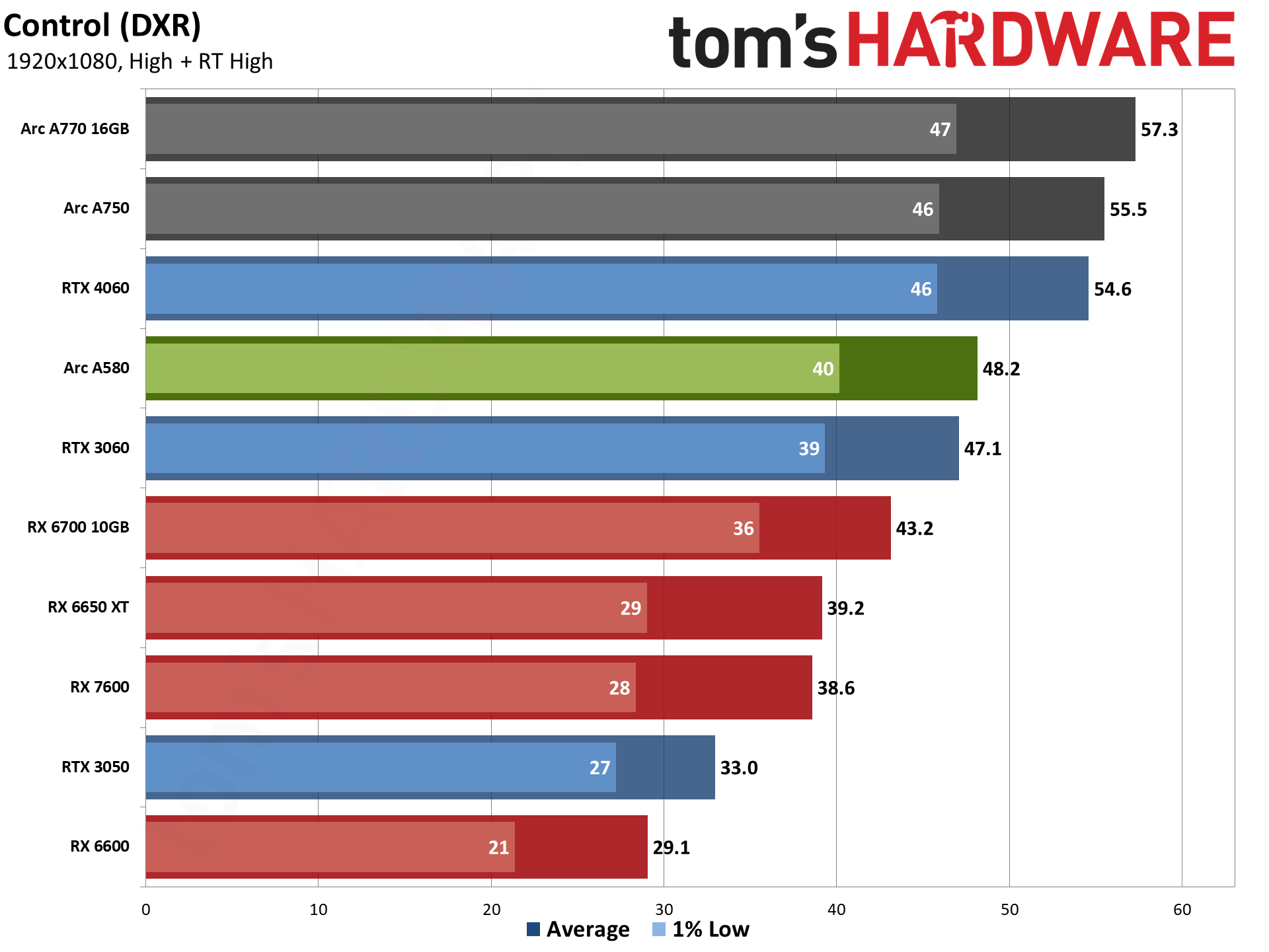

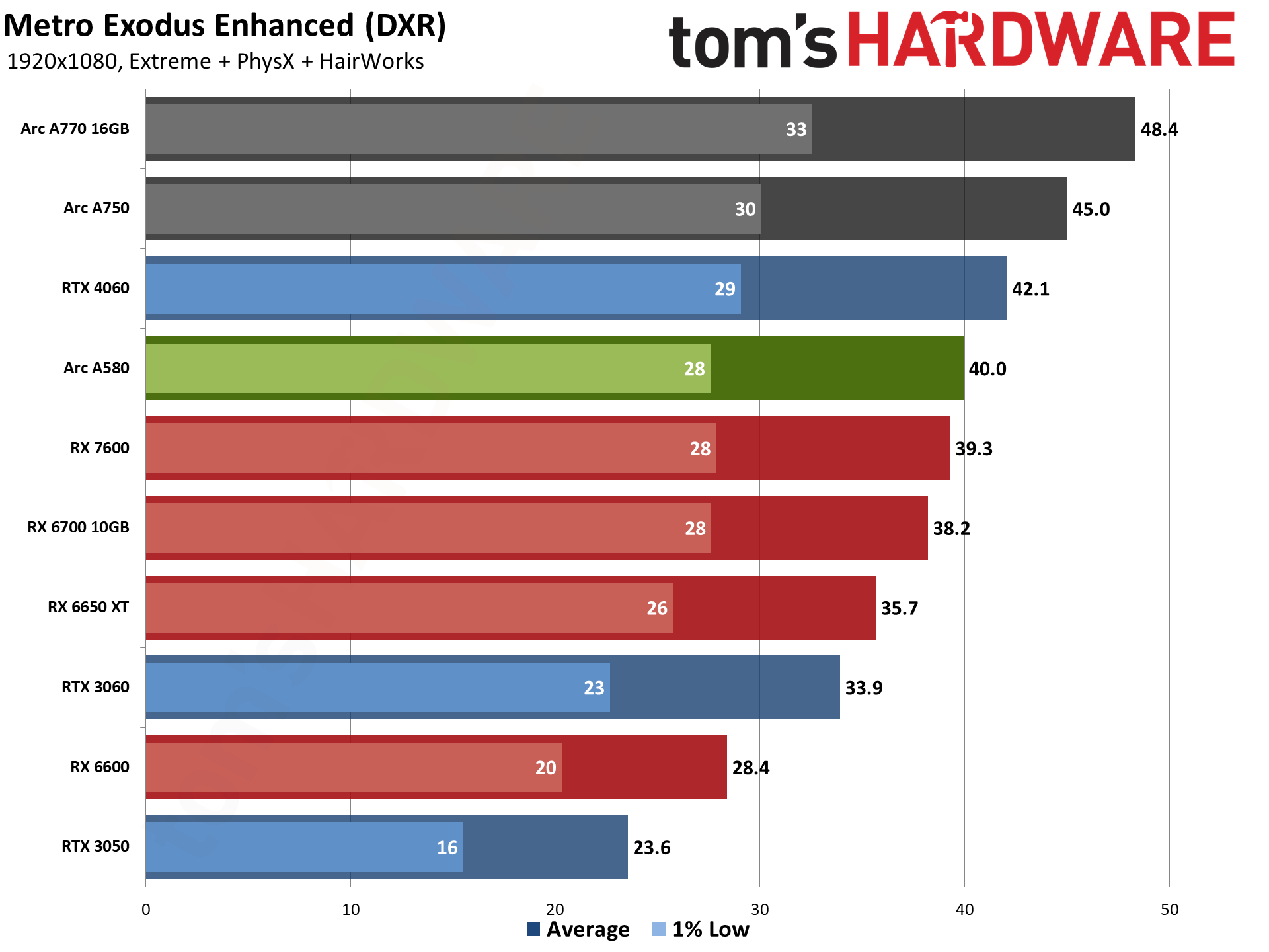

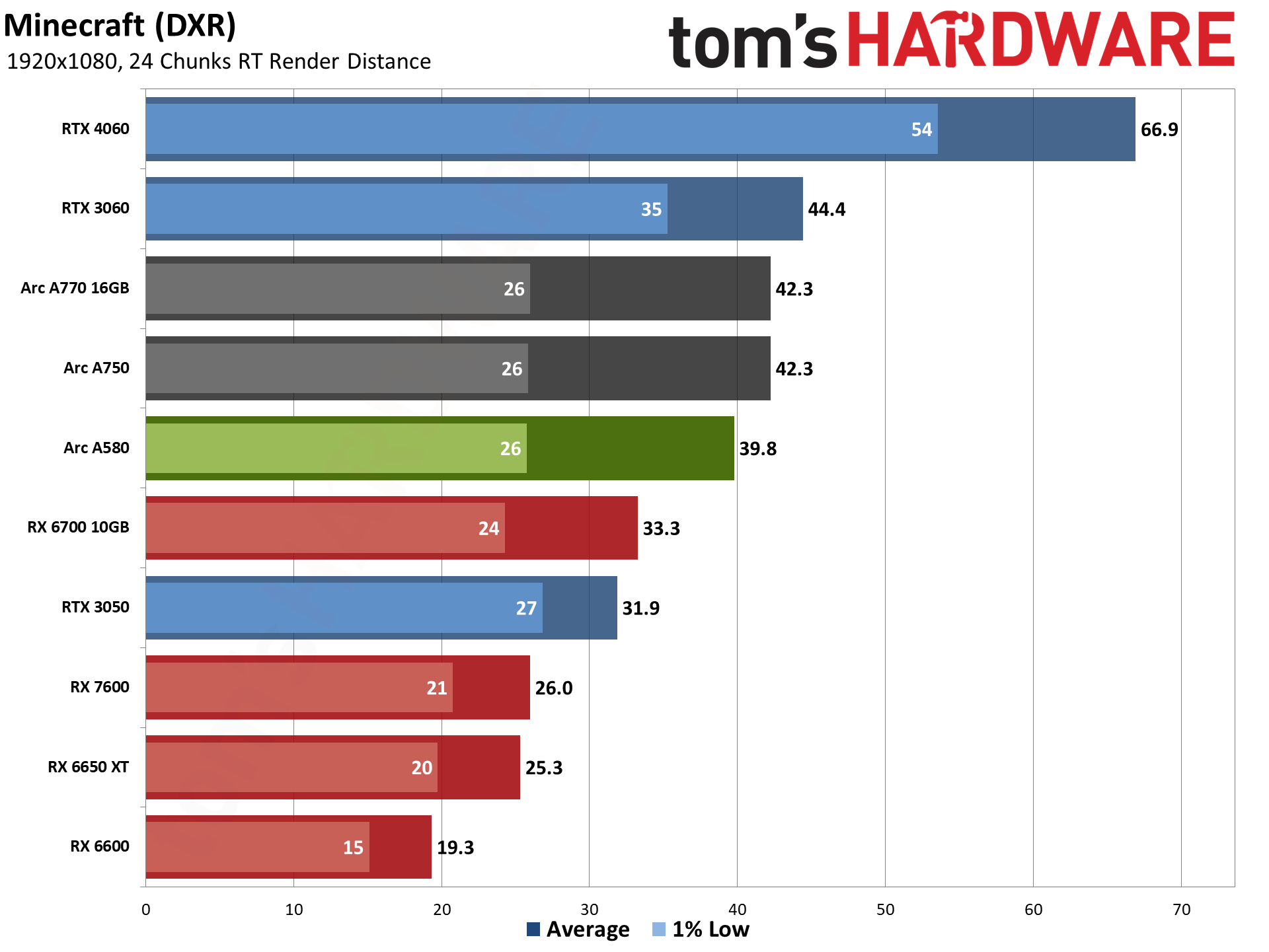

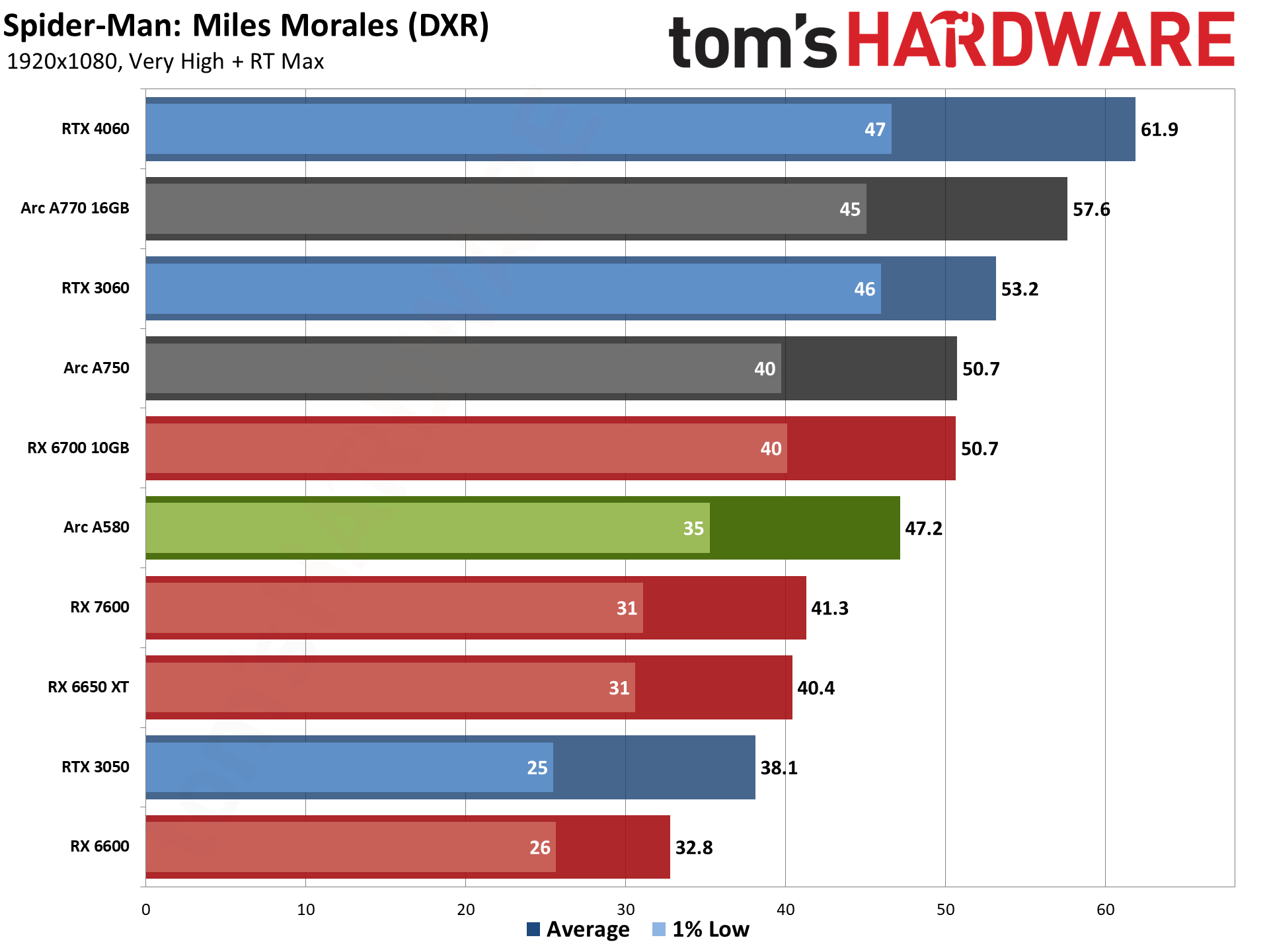

The ray tracing results might feel like a bit of a letdown, considering the Arc A580 averages only 36 fps across our ray tracing suite. But like Nvidia's, Intel's Arc GPUs easily outperform AMD's GPUs in ray tracing. The A580 leads the RX 6700 10GB by 5% overall and comes in 7% behind the RTX 3060. It's also 56% faster than AMD's RX 6600.
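Percentages like "5% ahead" and "56% faster" are measured relative to whichever card serves as the baseline, so they are asymmetric. Below is a minimal sketch using a hypothetical `percent_faster` helper and made-up frame rates rather than our results. A card at 30 fps is 50% faster than one at 20 fps, but the 20 fps card is only about 33% slower, not 50%.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Percent by which card A outperforms baseline card B (negative means slower)."""
    if fps_b <= 0:
        raise ValueError("baseline fps must be positive")
    # Relative difference, expressed as a percentage of the baseline card's fps.
    return (fps_a / fps_b - 1.0) * 100.0


# Hypothetical frame rates for two cards, for illustration only.
print(percent_faster(30.0, 20.0))            # 50.0
print(round(percent_faster(20.0, 30.0), 1))  # -33.3
```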

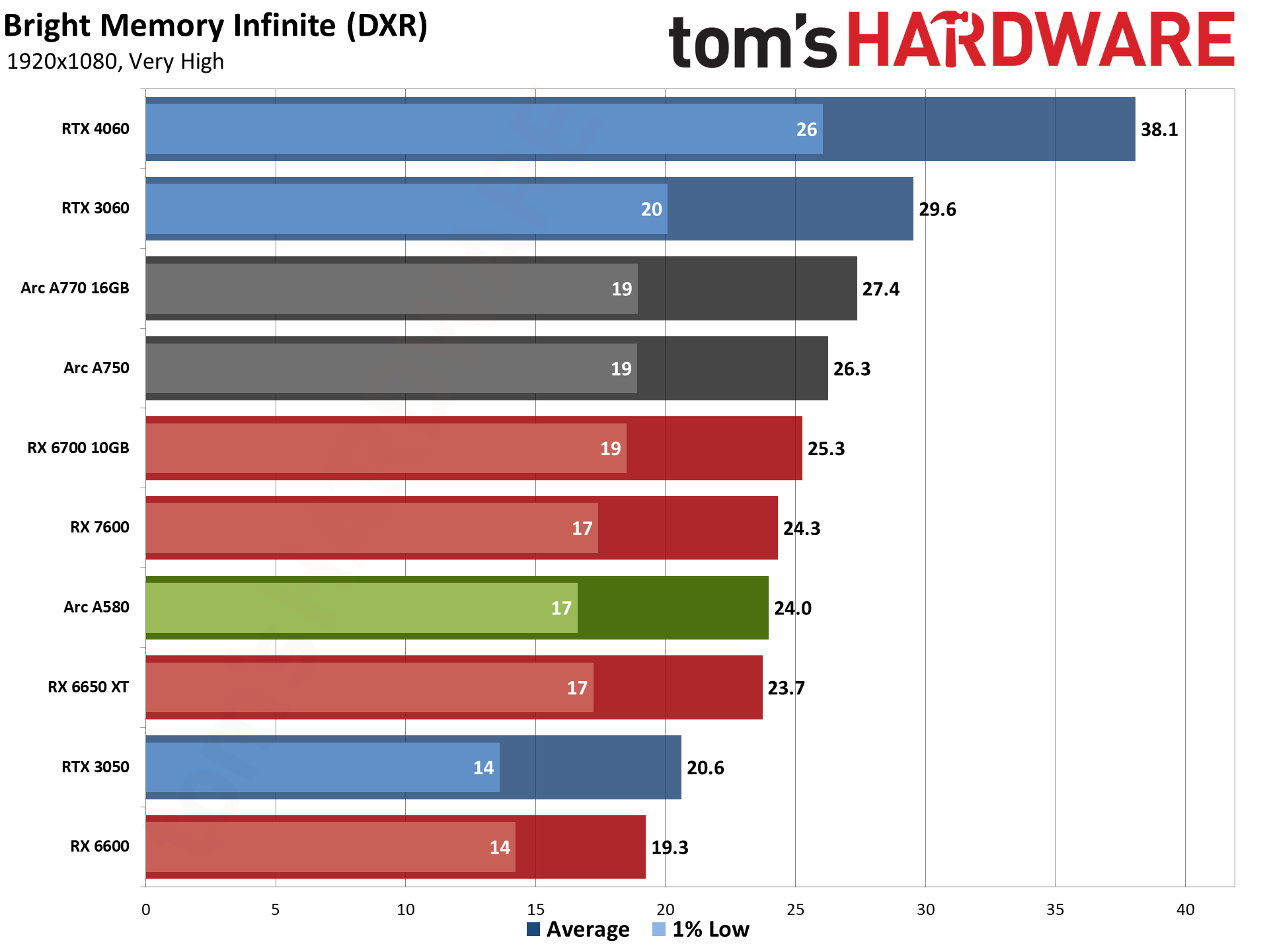

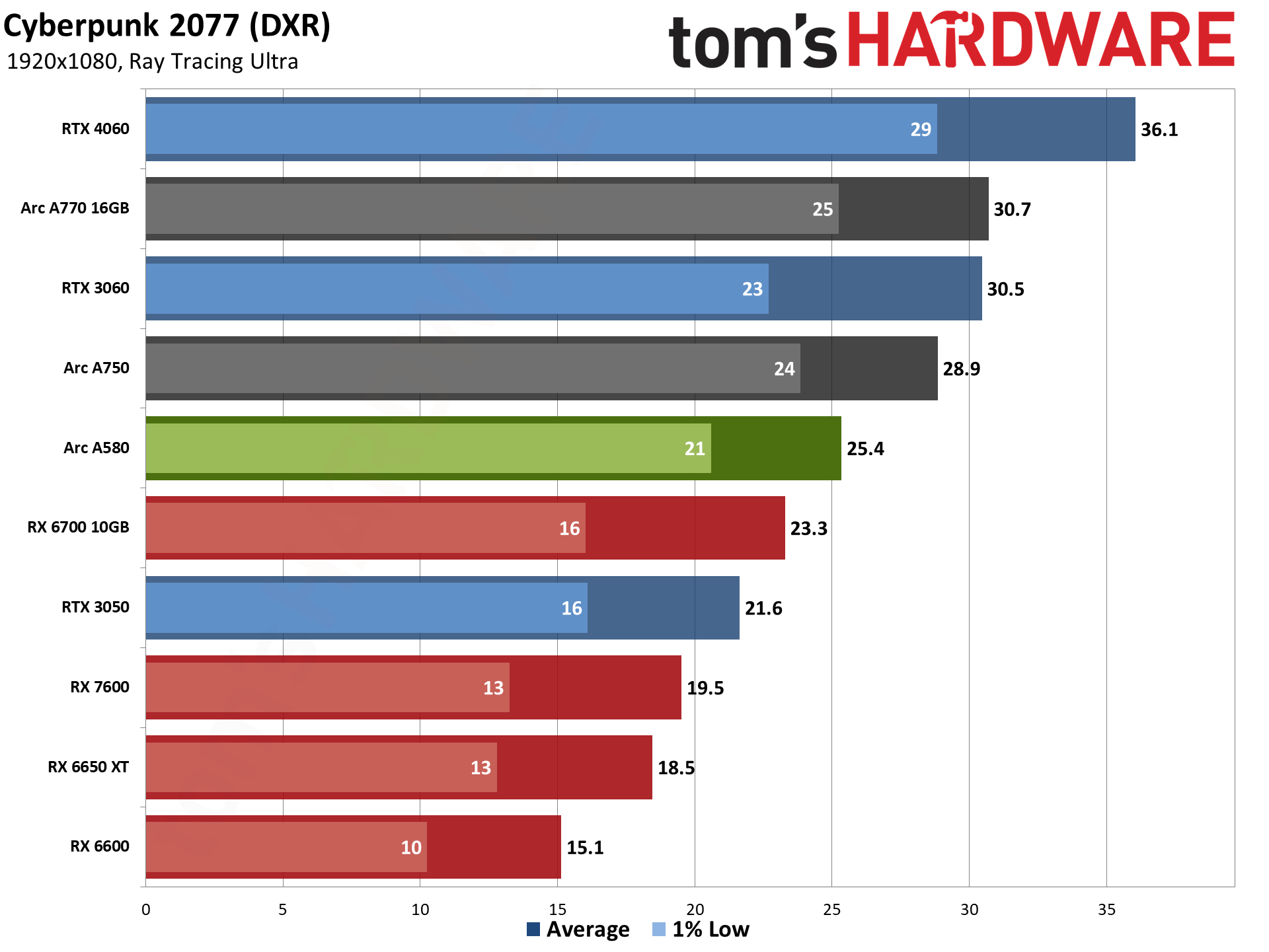

That's the big picture, but we also need to look at the details. The Bright Memory Infinite benchmark fails to break 30 fps on every card tested except the RTX 4060. Cyberpunk 2077 likewise falls below 30 fps on most of the cards, with only the RTX 3060, Arc A770 16GB, and RTX 4060 breaking into "playable" territory. So two of the DXR (DirectX Raytracing) games should be played without DXR on most GPUs that cost less than $300.

Still, with XeSS or FSR2 upscaling (depending on the game and what it supports), you should be able to get a pretty good experience even from the Arc A580. We generally find that DLSS 2 upscaling provides the best image quality, followed by XeSS and then FSR2. Unfortunately, XeSS support isn't nearly as widespread as DLSS or FSR2.



Current page: Intel Arc A580: 1080p Ultra Gaming Performance


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


AgentBirdnest

Awesome review, as always!

Dang, was really hoping this would be more like $150-160. I bet the price will drop before long, though; I can't imagine many people choosing this over the A750 that is so closely priced. Still, it just feels good to see a card that can actually play modern AAA games for under $200.

JarredWaltonGPU (replying to AgentBirdnest)

Yeah, the $180 MSRP just feels like wishful thinking right now rather than reality. I don't know what supply of Arc GPUs looks like from the manufacturing side, and I feel like Intel may already be losing money per chip. But losing a few dollars rather than losing $50 or whatever is probably a win. This would feel a ton better at $150 or even $160, and maybe add half a star to the review.

hotaru.hino

Intel does have some cash to burn, and if they are selling these cards at a loss, it'd at least lend weight to the idea that they're serious about staying in the discrete GPU business.

JarredWaltonGPU (replying to hotaru.hino)

That's the assumption I'm going off: Intel is willing to take a short-term / medium-term loss on GPUs in order to bootstrap its data center and overall ambitions. The consumer graphics market is just a side benefit that helps to defray the cost of driver development and all the other stuff that needs to happen.

But when you see the number of people who have left Intel Graphics in the past year, and the way Gelsinger keeps divesting non-profitable businesses, I can't help but wonder how much longer he'll be willing to let the Arc experiment continue. I hope we can at least get to Celestial and Druid before any final decision is made, but that will probably depend on how Battlemage does.

Intel's GPUs have a lot of room to improve, not just in drivers but in power and performance. Basically, look at Ada Lovelace: that's the bare minimum we need from Battlemage if it's really going to be competitive. We already have RDNA 3 as the less efficient, not-quite-as-fast alternative to Nvidia, and AMD still has better drivers. Matching AMD isn't the end goal; Intel needs to take on Nvidia, at least up to the 4070 Ti level.

mwm2010

If the price of this goes down, then I would be very impressed. But because of the $180 price, it isn't quite at its full potential. You're probably better off with a 6600.

btmedic04

Arc just feels like one of the industry's greatest "what ifs" to me. Had these launched during the great GPU shortage of 2021, Intel would have sold as many as they could produce. Hopefully Intel sticks with it, as consumers desperately need a third vendor in the market.

cyrusfox (replying to JarredWaltonGPU)

What other choice do they have? If they canned their dGPU efforts, they'd still need staff to support the iGPU, or are they going to give up on that and license GPU tech? Also, what would they do with their data center GPU (Ponte Vecchio's successor)?

The only clear path forward is to continue, and I hope they bet on themselves, take these licks (financial losses plus negative driver feedback), and keep pushing forward. But you are right, Pat has killed a lot of projects and spun off some great businesses from Intel. I hope Battlemage fixes a lot of the big issues, and I also hope we see third- and fourth-gen Arc play out.

bit_user

Thanks @JarredWaltonGPU for another comprehensive GPU review!

I was rather surprised not to see you reference its relatively strong Raytracing, AI, and GPU Compute performance, in either the intro or the conclusion. For me, those are definitely highlights of Alchemist, just as much as AV1 support.

Looking at that gigantic table, on the first page, I can't help but wonder if you can ask the appropriate party for a "zoom" feature to be added for tables, similar to the way we can expand embedded images. It helps if I make my window too narrow for the sidebar - then, at least the table will grow to the full width of the window, but it's still not wide enough to avoid having the horizontal scroll bar.

Whatever you do, don't skimp on the detail! I love it!

JarredWaltonGPU (replying to bit_user)

The evil CMS overlords won't let us have nice tables. That's basically the way things shake out. It hurts my heart every time I try to put in a bunch of GPUs, because I know I want to see all the specs, and I figure others do as well. Sigh.

As for RT and AI, it's decent for sure, though I guess I just got sidetracked looking at the A750. I can't help but wonder how things could have gone differently for Intel Arc, but then the drivers still have lingering concerns. (I didn't get into it as much here, but in testing a few extra games, I noticed some were definitely underperforming on Arc.)