
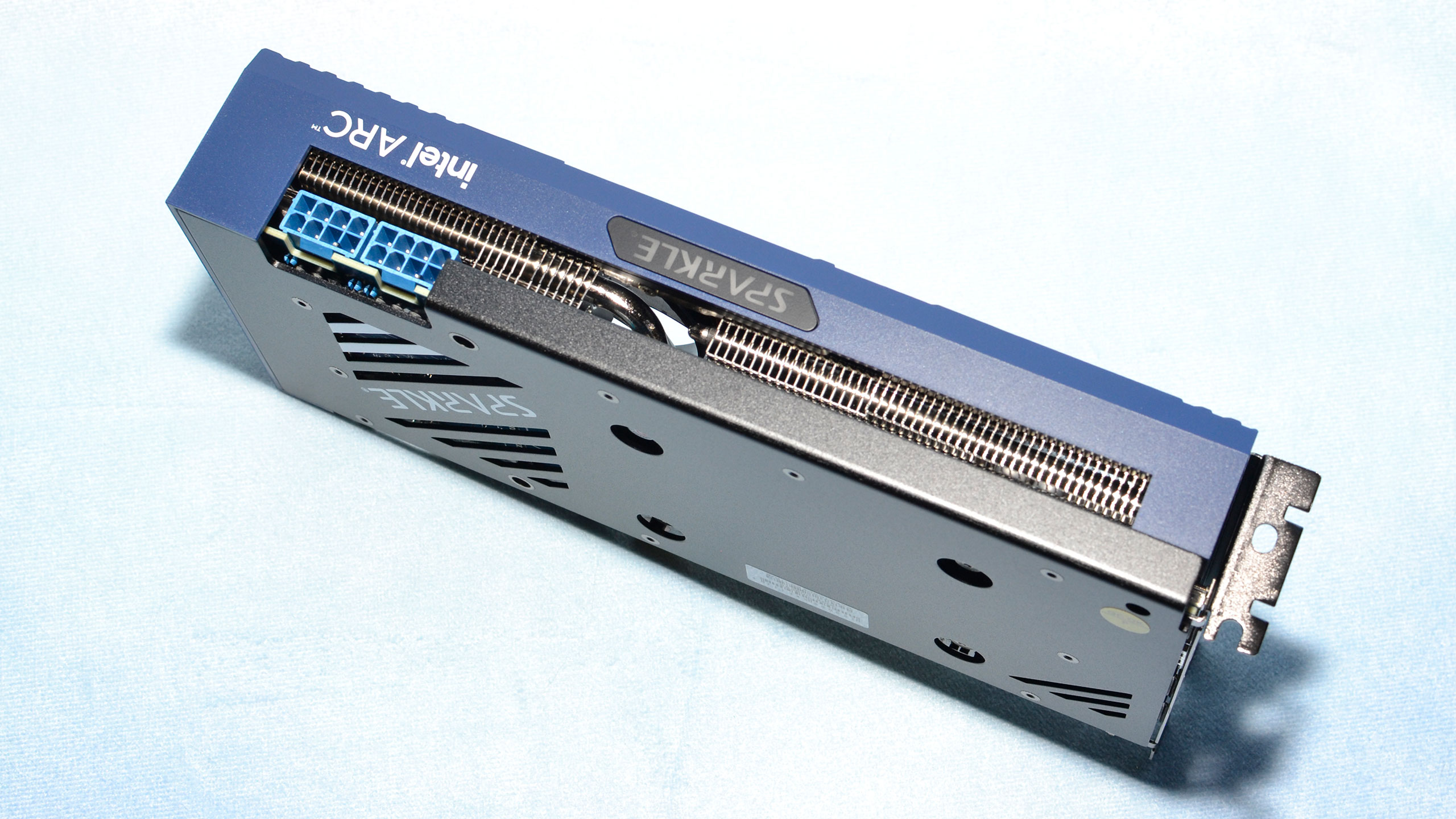

Sparkle Arc A580 Orc OC Card

There's no Intel reference Arc A580, but we received the Sparkle Arc A580 Orc OC for this review. There are at least two other A580 cards coming out today, an ASRock model and one from Gunnir, but we haven't tested either of those. We suspect performance will largely be the same as this Sparkle card, though noise levels, temperatures, and lighting options are going to be different.

This is also the first review we've ever done of a Sparkle graphics card. The company entered the GPU market earlier this year, and so far it's only selling Intel Arc-based cards. It covers nearly the whole suite of offerings, with the A770 8GB being the only omission. Besides graphics cards, Sparkle also dabbles in motherboards, FPGAs, and Thunderbolt/USB docks. Sparkle Power Inc. has also been selling PSUs for decades.

The packaging is pretty typical for a graphics card. There's plenty of foam padding, and the card comes in an anti-static bag. Bonus points (from me) for not putting plastic cling wrap all over the card. Besides the card, there's an Intel free game activation code included, or at least there was in our review sample. However, the code card states that the offer was only valid until July 10, 2023. Hopefully that date gets extended for anyone else who wants Ghostbusters: Spirits Unleashed or Gotham Knights. (Neither game fared very well among critics, if you're wondering.)

The Sparkle Arc A580 Orc OC measures 223x113x39.5 mm and weighs 738g. It's relatively compact, though not exactly small. It's also a pretty boxy design, eschewing fancy curves and flashy RGB lighting in favor of functionality.

The card has two fans, both the same moderately large 89mm model with an integrated rim. That's good to see, as less expensive graphics cards often opt for cheaper, less effective fans that need to spin at higher RPMs to keep the GPU cool. However, the card's weight hints that the fans may need to work harder: just under 740g for a card that can pull around 200W suggests the heatsink and heatpipes aren't as robust as what we've seen on cards from other manufacturers.

Somewhat curiously, the Sparkle A580 comes with dual 8-pin power connectors. We suspect that's because it reuses the existing A750 Orc design, though even there an 8-pin plus 6-pin configuration should have been more than sufficient. At the same time, 8-pin connectors are pretty ubiquitous these days, usually via 6-pin plus 2-pin connectors. Perhaps Sparkle just felt it was easier to only use 8-pin connectors.
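To put the connector question in numbers: under the PCI Express power limits, the x16 slot can supply up to 75W, a 6-pin PEG connector 75W, and an 8-pin connector 150W. The short Python sketch below tallies the rated budget for the two layouts discussed above and compares each with the roughly 200W the card can draw. The 200W value is the approximate draw mentioned earlier, not a measured board power limit, and the function name `board_power_budget` is purely illustrative.

```python
# Rated power limits in watts, per the PCI Express slot and PEG connector specs.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}

# Approximate draw cited in the review; an assumption, not a spec value.
APPROX_CARD_DRAW_W = 200


def board_power_budget(connectors):
    """Return the maximum rated power (W): x16 slot plus auxiliary connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


for layout in (["8-pin", "8-pin"], ["8-pin", "6-pin"]):
    budget = board_power_budget(layout)
    headroom = budget - APPROX_CARD_DRAW_W
    print(f"{' + '.join(layout)}: {budget} W rated, {headroom} W headroom")
```

Dual 8-pin works out to 375W of rated capacity, while 8-pin plus 6-pin still offers 300W, leaving about 100W of headroom over a ~200W card. That's why the second 8-pin connector looks like overkill here.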

The only lighting on the card is the Sparkle logo on top, which lights up when powered on. In an interesting twist, the color of the lighting changes based on GPU temperature, ranging from white (coolest) to blue, then on to green, yellow, orange, and red. During our testing it mostly stayed blue, though it was white at first power on.

Intel Arc A580 Test Setup

We updated our GPU test PC at the end of last year with a Core i9-13900K, though we continue to also test reference GPUs on our 2022 system that includes a Core i9-12900K for our GPU benchmarks hierarchy. (We'll be updating that with the A580 numbers in the next few days; we haven't yet run those tests due to time constraints.) For this review, we use the 13900K results for gaming tests, which ensures, as much as possible, that we're not CPU limited. We also use the 2022 PC for AI tests and professional workloads.

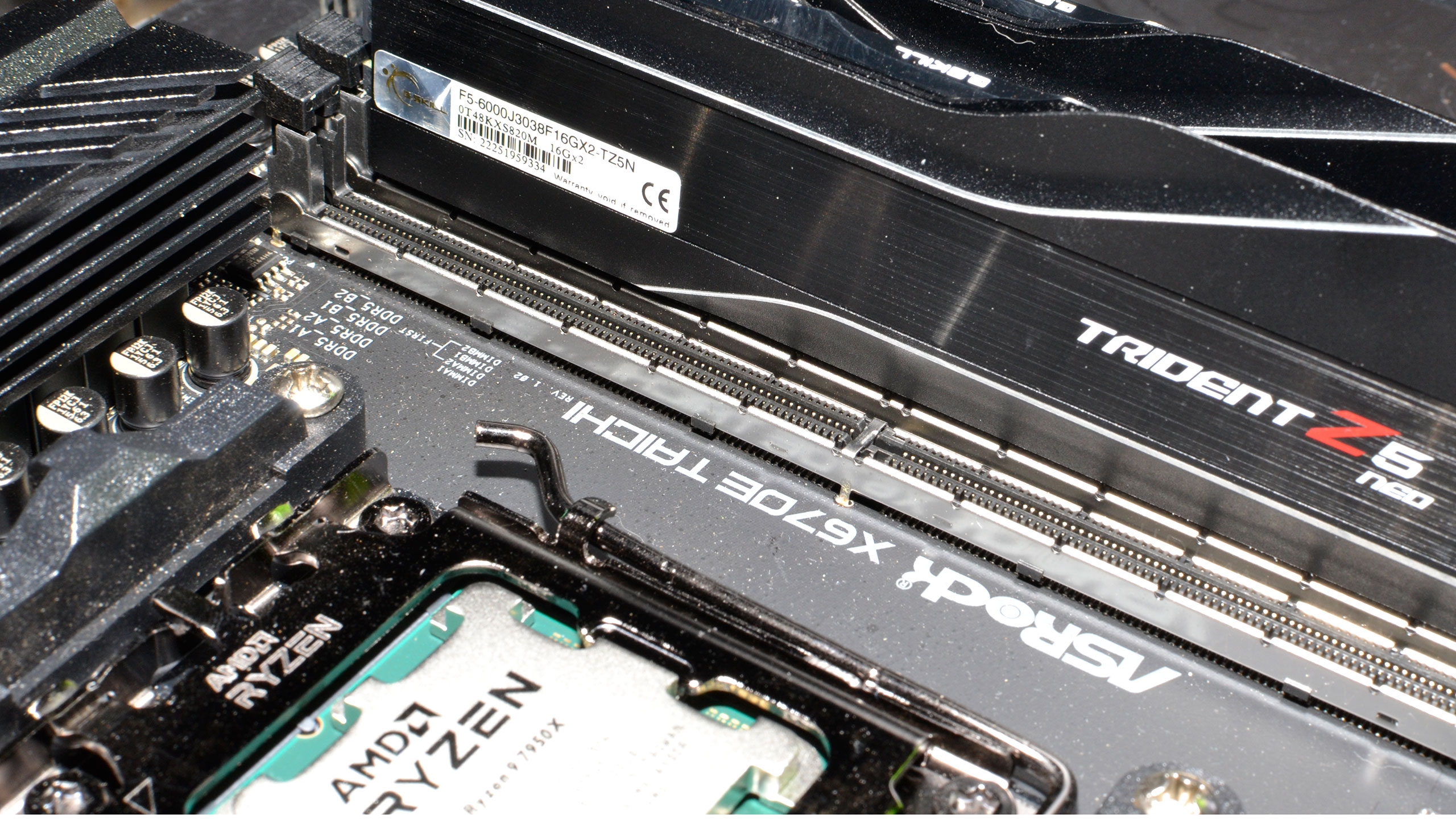

TOM'S HARDWARE 2023 Gaming PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

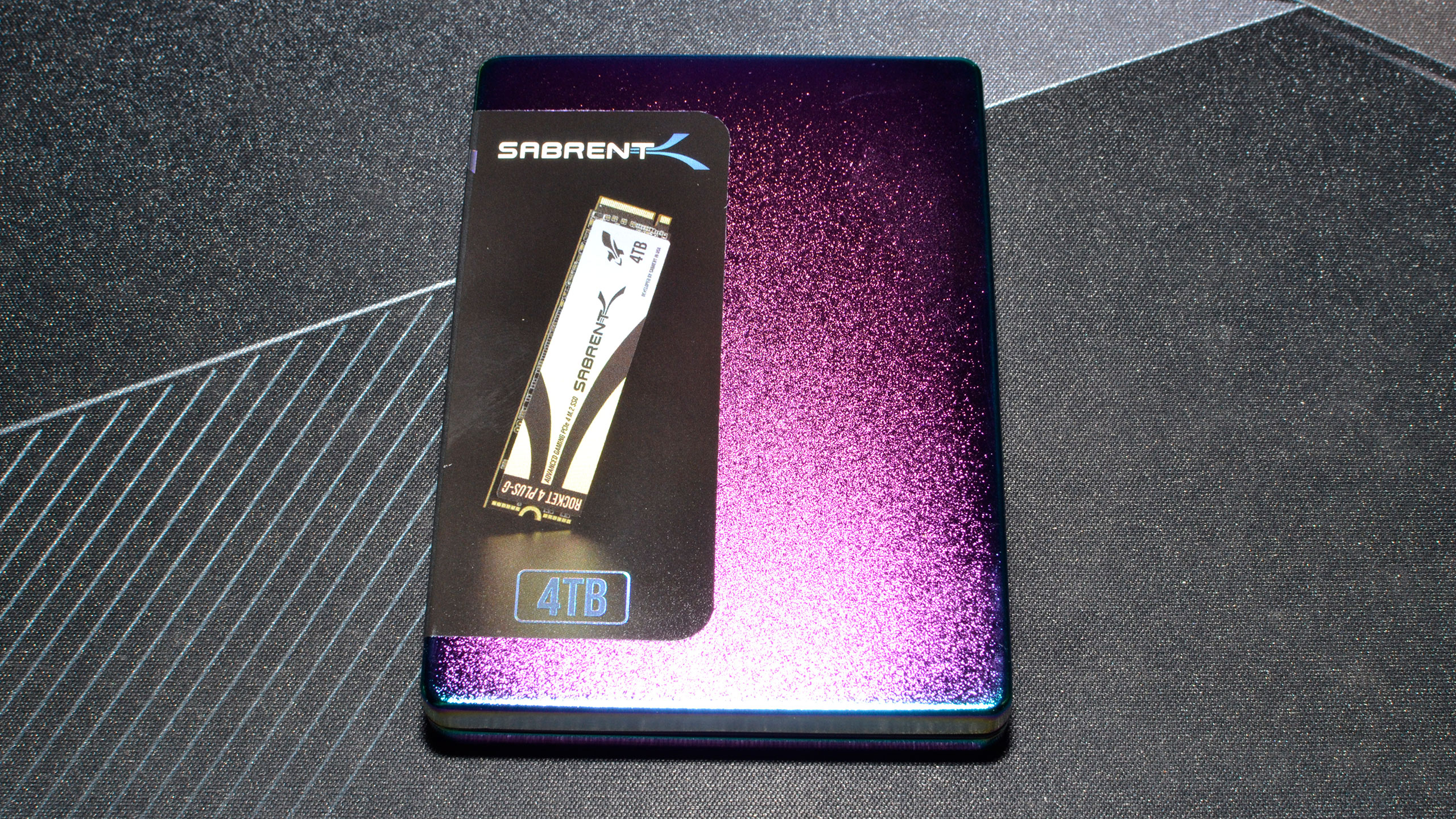

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

TOM'S HARDWARE 2022 AI/ProViz PC

Intel Core i9-12900K

MSI Pro Z690-A WiFi DDR4

Corsair 2x16GB DDR4-3600 CL16

Crucial P5 Plus 2TB

Cooler Master MWE 1250 V2 Gold

Corsair H150i Elite Capellix

Cooler Master HAF500

Windows 11 Pro 64-bit

GRAPHICS CARDS

- Intel Arc A580 (Sparkle)
- Intel Arc A750
- Intel Arc A770 16GB
- AMD RX 6600
- AMD RX 6650 XT
- AMD RX 6700 10GB
- Nvidia RTX 3050
- Nvidia RTX 3060 12GB
- Nvidia RTX 4060

We're using preview drivers from Intel, version 4830. The latest public Intel drivers are 4885, but those don't support the A580. Results from other cards are from the past few months, and we've retested a few specific games where there were apparent anomalies in performance, including retesting the Arc A750 using the same 4830 drivers.

We're including current generation and previous generation AMD and Nvidia GPUs in our charts, nominally priced in the $180–$300 range. Obviously, the Arc A580 falls at the bottom of that price range and thus we shouldn't expect it to top the performance charts. What we really want to know is how it stacks up against the similarly priced RX 6600, and the more expensive RX 6650 XT and RTX 3050/3060.

Our current test suite consists of 15 games. Of these, nine support DirectX Raytracing (DXR), but we only enable the DXR features in six games. The other nine games, including the three DXR-capable titles where we leave ray tracing disabled, are tested in pure rasterization mode. While many of the games in our test suite support upscaling (twelve support DLSS 2, five support DLSS 3, five support FSR 2, and four support XeSS), we're primarily interested in native resolution performance. GPUs generally show similar scaling if you enable DLSS/FSR2/XeSS.

We tested the Arc A580 at 1080p (medium and ultra) and 1440p ultra, plus 4K ultra in a few games. "Ultra" means the highest supported preset, if there is one, and in some cases we also maxed out all the other settings for good measure (except for MSAA or supersampling). This is primarily a 1080p gaming solution, however, so that will be our focus.

Our PC is hooked up to a Samsung Odyssey Neo G8 32, one of the best gaming monitors around, allowing us to fully experience some of the higher frame rates that might be available. G-Sync and FreeSync were enabled, as appropriate. As you can imagine, getting anywhere close to the 240 Hz limit of the monitor proved difficult, as we don't have any esports games in our test suite.

We installed all the then-latest Windows 11 updates when we assembled the new test PC. We're running Windows 11 22H2, but we've used InControl to lock our test PC to that major release for the foreseeable future (though critical security updates still get installed monthly).

Our new test PC includes Nvidia's PCAT v2 (Power Capture and Analysis Tool) hardware, which means we can grab real power use, GPU clocks, and more during all of our gaming benchmarks. We'll cover those results on our page on power use.

Finally, because GPUs aren't purely for gaming these days, we've run some professional application tests, and we also ran some Stable Diffusion benchmarks to see how AI workloads scale on the various GPUs.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

AgentBirdnest: Awesome review, as always!

Dang, was really hoping this would be more like $150-160. I bet the price will drop before long, though; I can't imagine many people choosing this over the A750 that is so closely priced. Still, it just feels good to see a card that can actually play modern AAA games for under $200.

JarredWaltonGPU (replying to AgentBirdnest): Yeah, the $180 MSRP just feels like wishful thinking right now rather than reality. I don't know what supply of Arc GPUs looks like from the manufacturing side, and I feel like Intel may already be losing money per chip. But losing a few dollars rather than losing $50 or whatever is probably a win. This would feel a ton better at $150 or even $160, and maybe add half a star to the review.

hotaru.hino: Intel does have some cash to burn and if they are selling these cards at a loss, it'd at least put weight that they're serious about staying in the discrete GPU business.

JarredWaltonGPU (replying to hotaru.hino): That's the assumption I'm going off: Intel is willing to take a short-term / medium-term loss on GPUs in order to bootstrap its data center and overall ambitions. The consumer graphics market is just a side benefit that helps to defray the cost of driver development and all the other stuff that needs to happen.

But when you see the number of people who have left Intel Graphics in the past year, and the way Gelsinger keeps divesting of non-profitable businesses, I can't help but wonder how much longer he'll be willing to let the Arc experiment continue. I hope we can at least get to Celestial and Druid before any final decision is made, but that will probably depend on how Battlemage does.

Intel's GPU has a lot of room to improve, not just on drivers but on power and performance. Basically, look at Ada Lovelace and that's the bare minimum we need from Battlemage if it's really going to be competitive. We already have RDNA 3 as the less efficient, not quite as fast, etc. alternative to Intel, and AMD still has better drivers. Matching AMD isn't the end goal; Intel needs to take on Nvidia, at least up to the 4070 Ti level.

mwm2010: If the price of this goes down, then I would be very impressed. But because of the $180 price, it isn't quite at its full potential. You're probably better off with a 6600.

btmedic04: Arc just feels like one of the industry's greatest "what ifs" to me. Had these launched during the great GPU shortage of 2021, Intel would have sold as many as they could produce. Hopefully Intel sticks with it, as consumers desperately need a third vendor in the market.

cyrusfox (replying to JarredWaltonGPU): What other choice do they have? If they canned their dGPU efforts, they'd still need staff to support the iGPU, or are they going to give up on that and license GPU tech? Also, what would they do with their data center GPU (the successor to Ponte Vecchio)?

The only clear path forward is to continue, and I hope they do bet on themselves, take these licks (financial loss + negative driver feedback), and keep pushing forward. But you are right, Pat has killed a lot of items and spun off some great businesses from Intel. I hope Battlemage fixes a lot of the big issues, and I also hope we see the third and fourth generations of Arc play out.

bit_user: Thanks @JarredWaltonGPU for another comprehensive GPU review!

I was rather surprised not to see you reference its relatively strong Raytracing, AI, and GPU Compute performance, in either the intro or the conclusion. For me, those are definitely highlights of Alchemist, just as much as AV1 support.

Looking at that gigantic table, on the first page, I can't help but wonder if you can ask the appropriate party for a "zoom" feature to be added for tables, similar to the way we can expand embedded images. It helps if I make my window too narrow for the sidebar - then, at least the table will grow to the full width of the window, but it's still not wide enough to avoid having the horizontal scroll bar.

Whatever you do, don't skimp on the detail! I love it!

JarredWaltonGPU (replying to bit_user): The evil CMS overlords won't let us have nice tables. That's basically the way things shake out. It hurts my heart every time I try to put in a bunch of GPUs, because I know I want to see all the specs, and I figure others do as well. Sigh.

As for RT and AI, it's decent for sure, though I guess I just got sidetracked looking at the A750. I can't help but wonder how things could have gone differently for Intel Arc, but then the drivers still have lingering concerns. (I didn't get into it as much here, but in testing a few extra games, I noticed some were definitely underperforming on Arc.)