
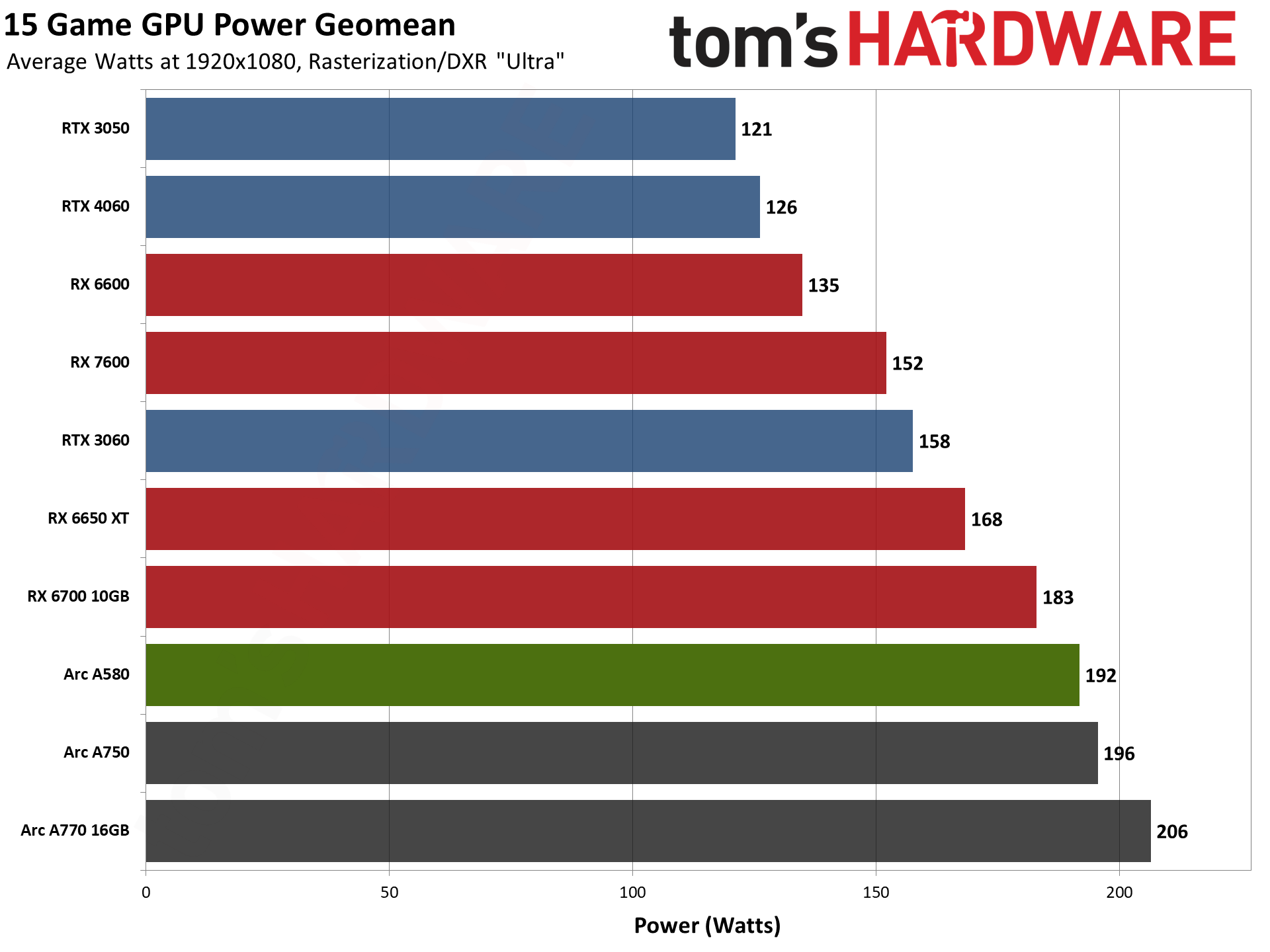

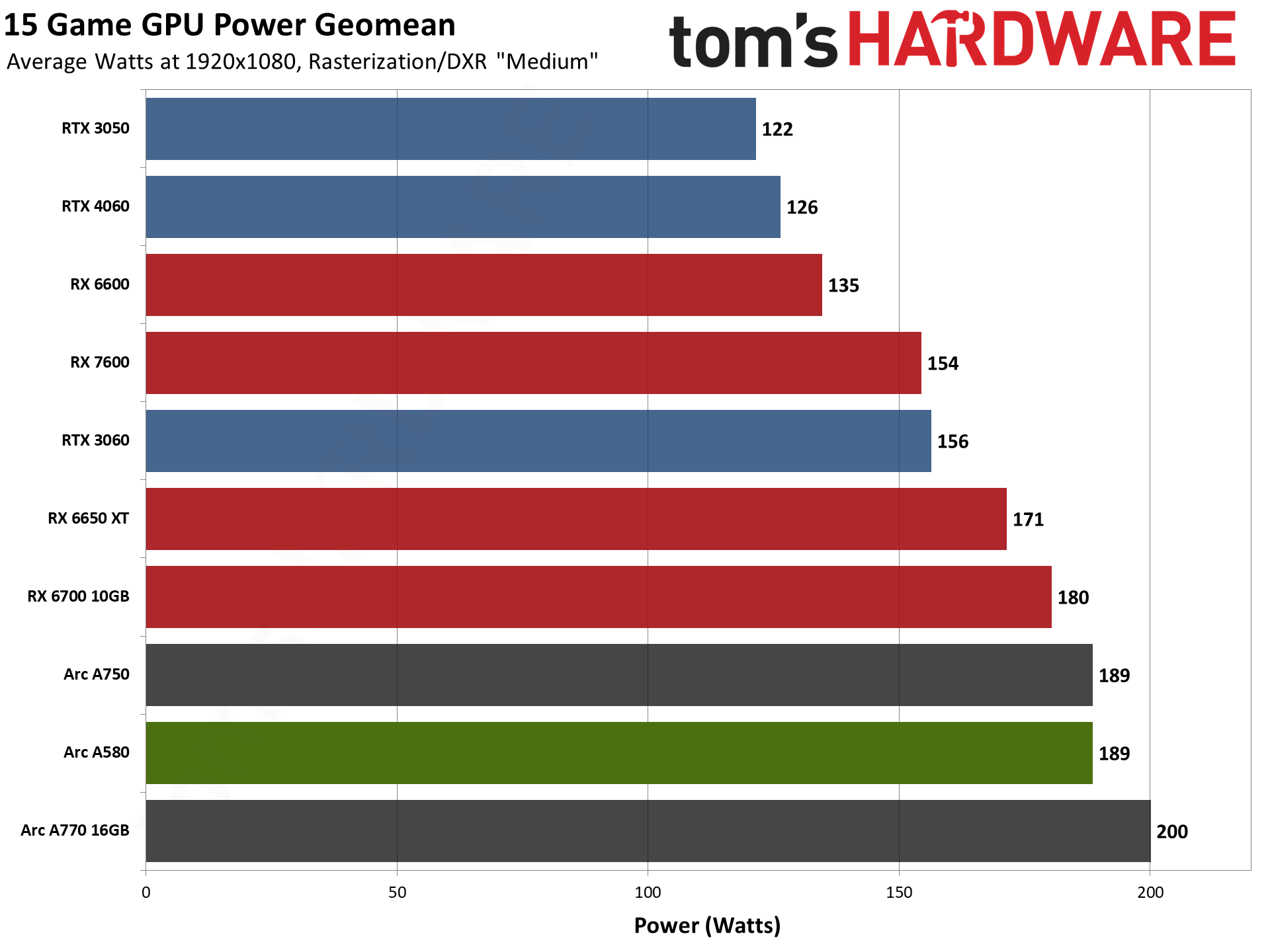

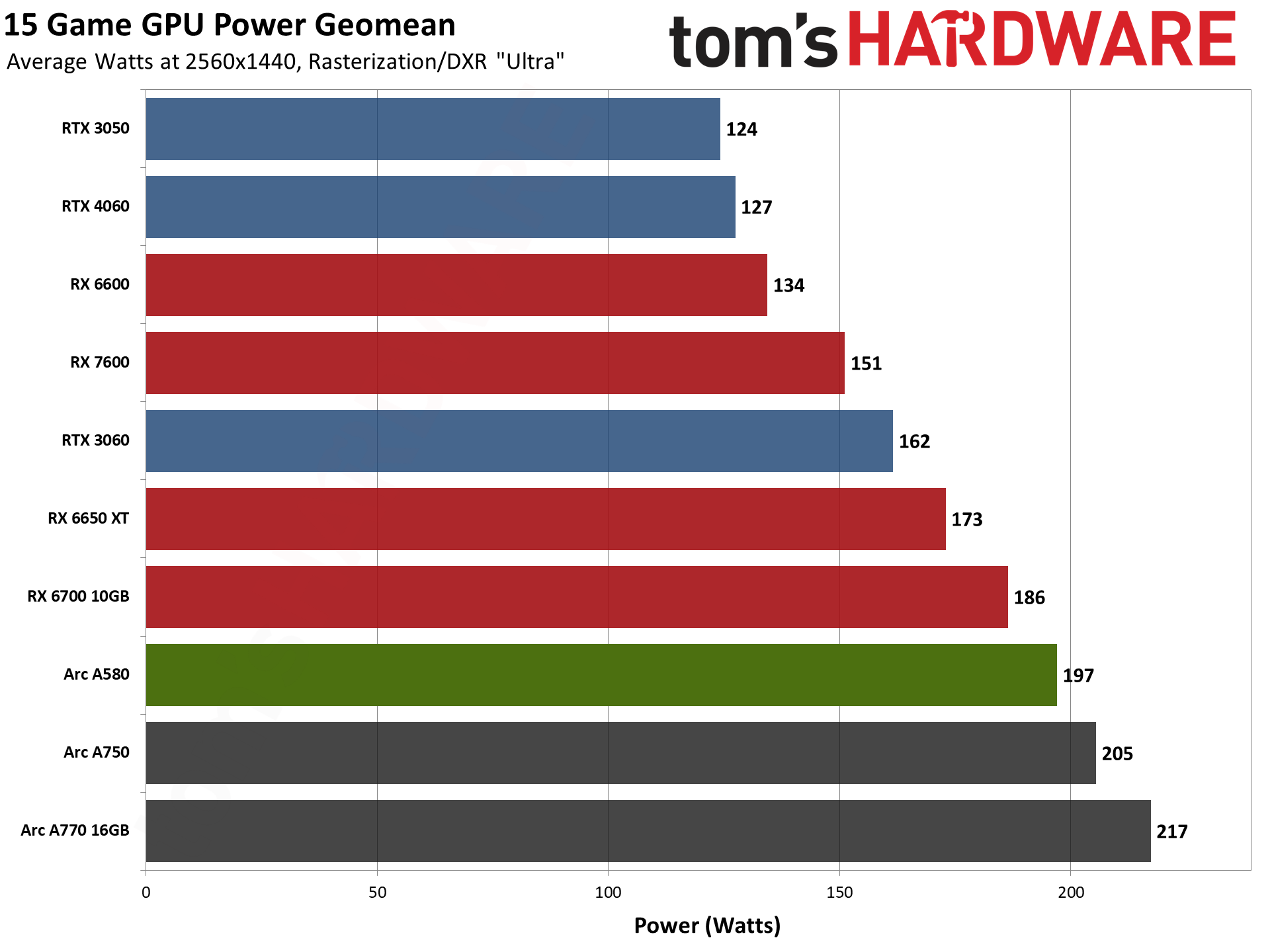

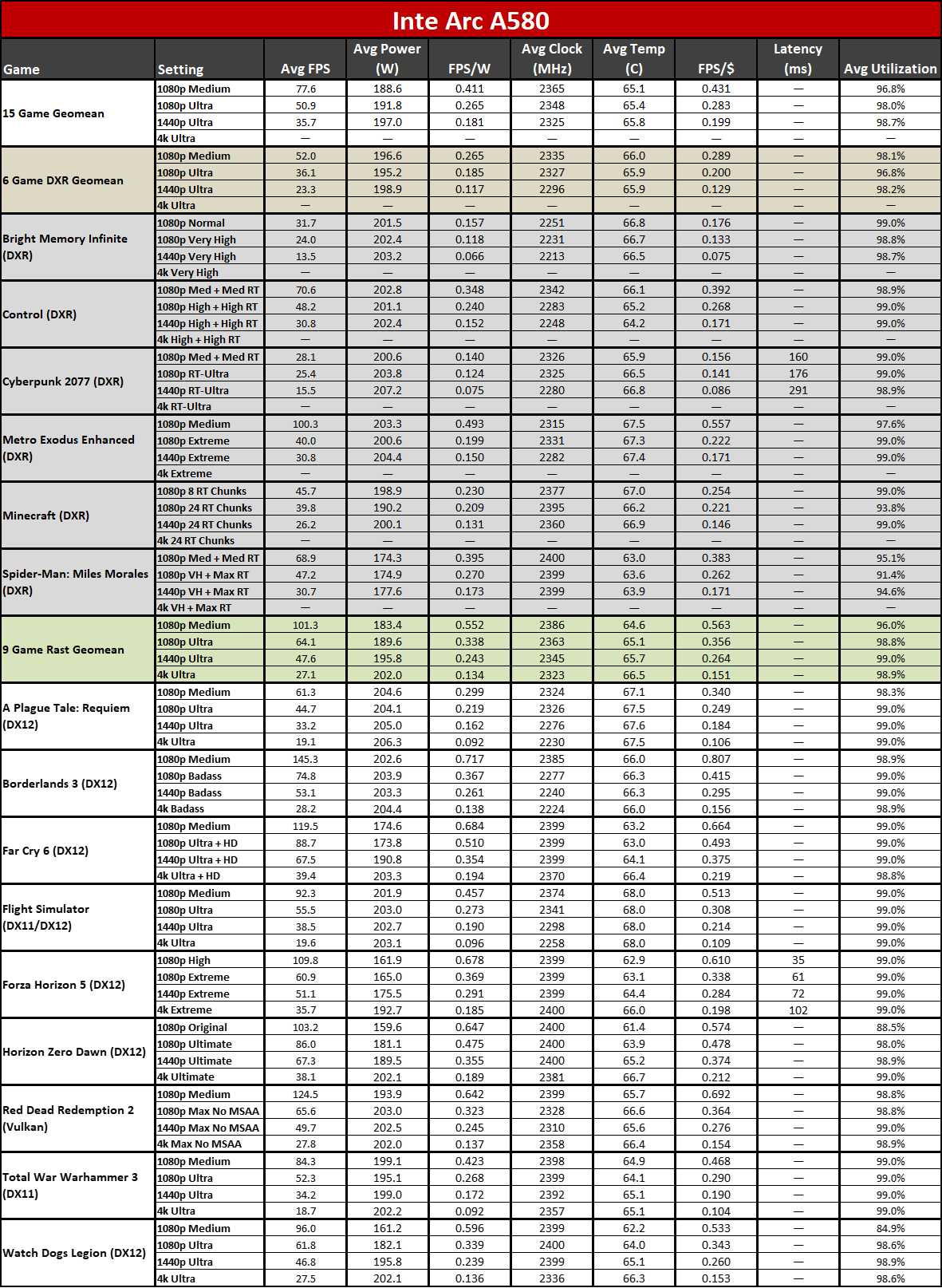

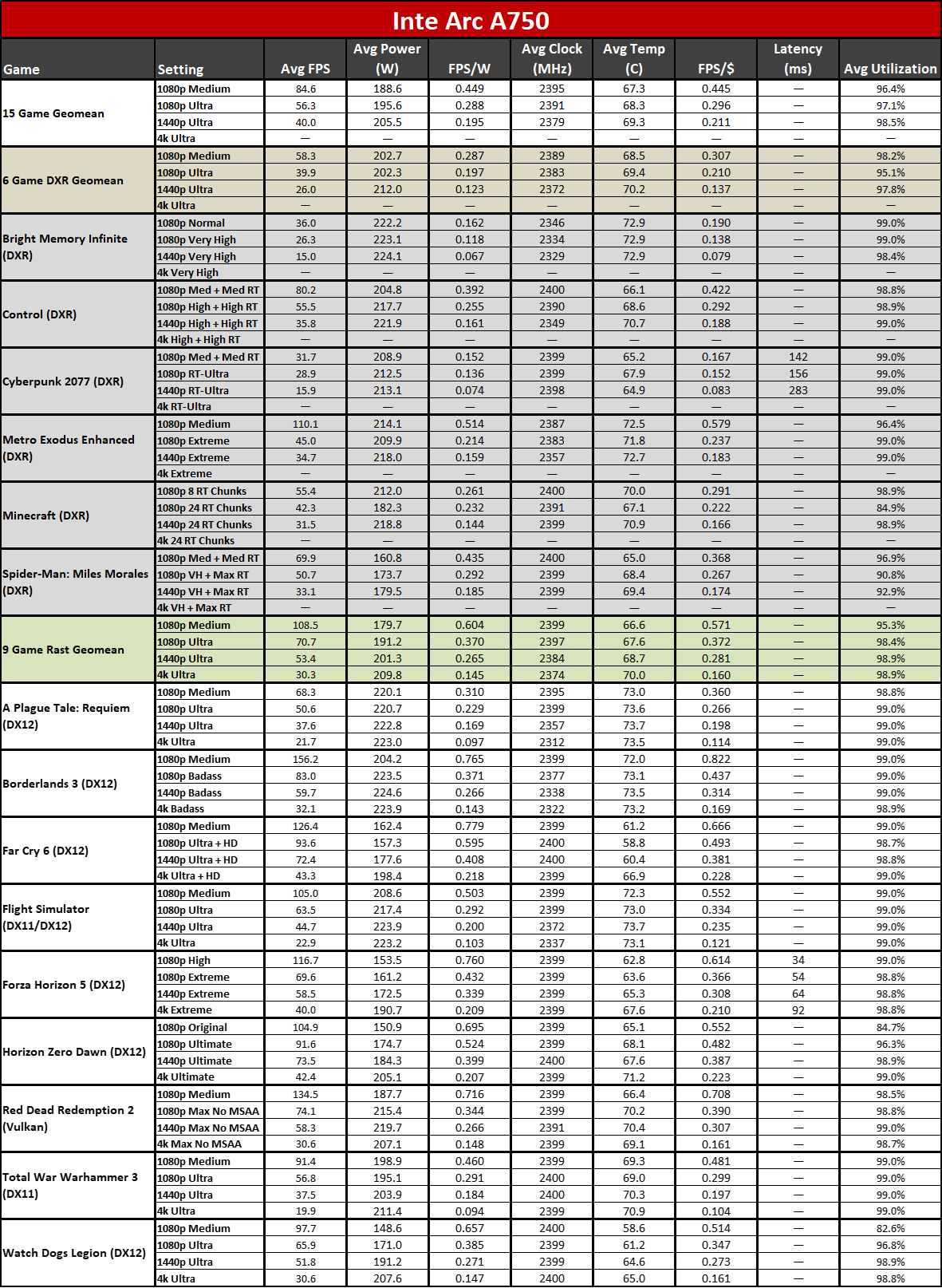

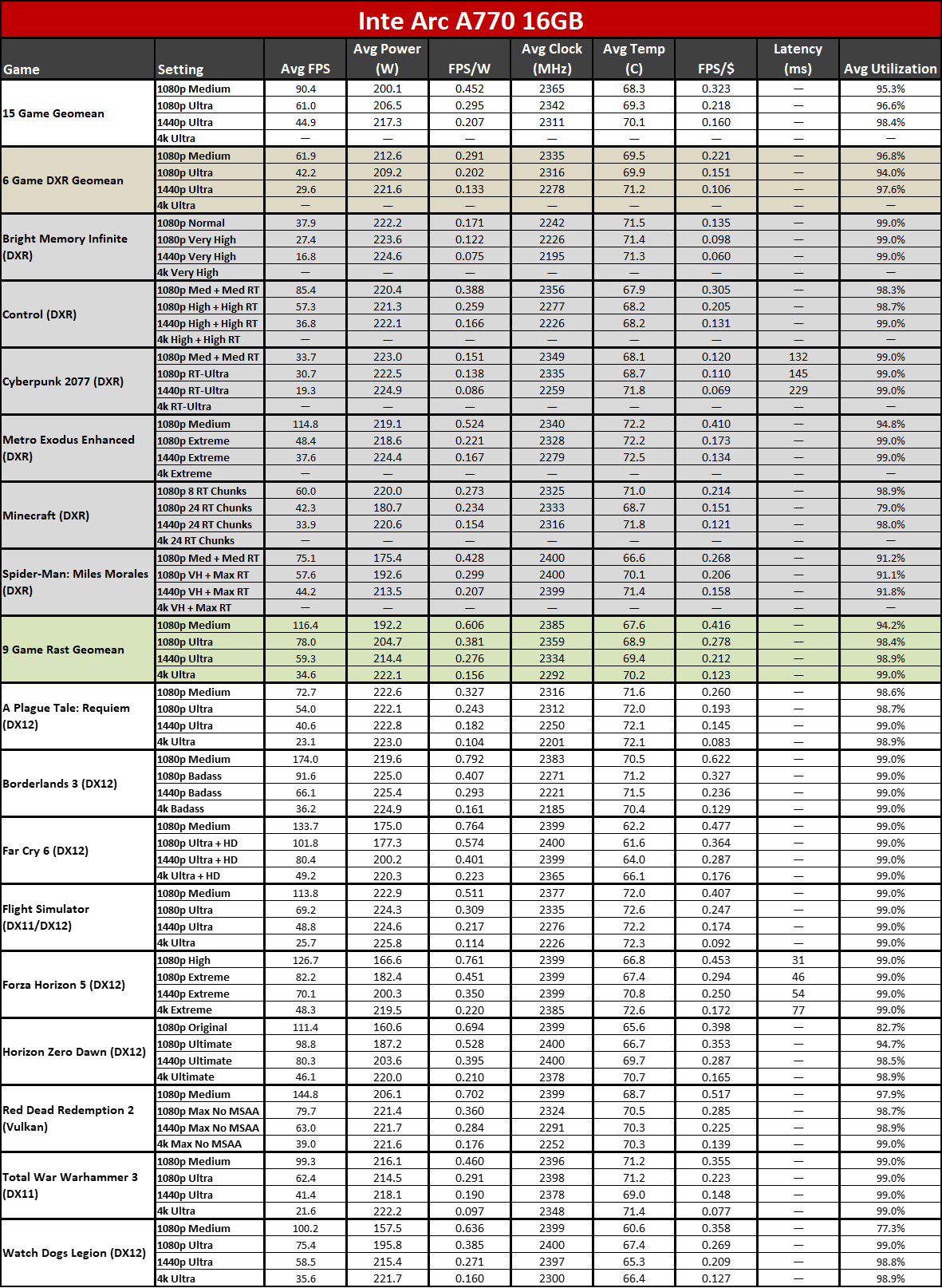

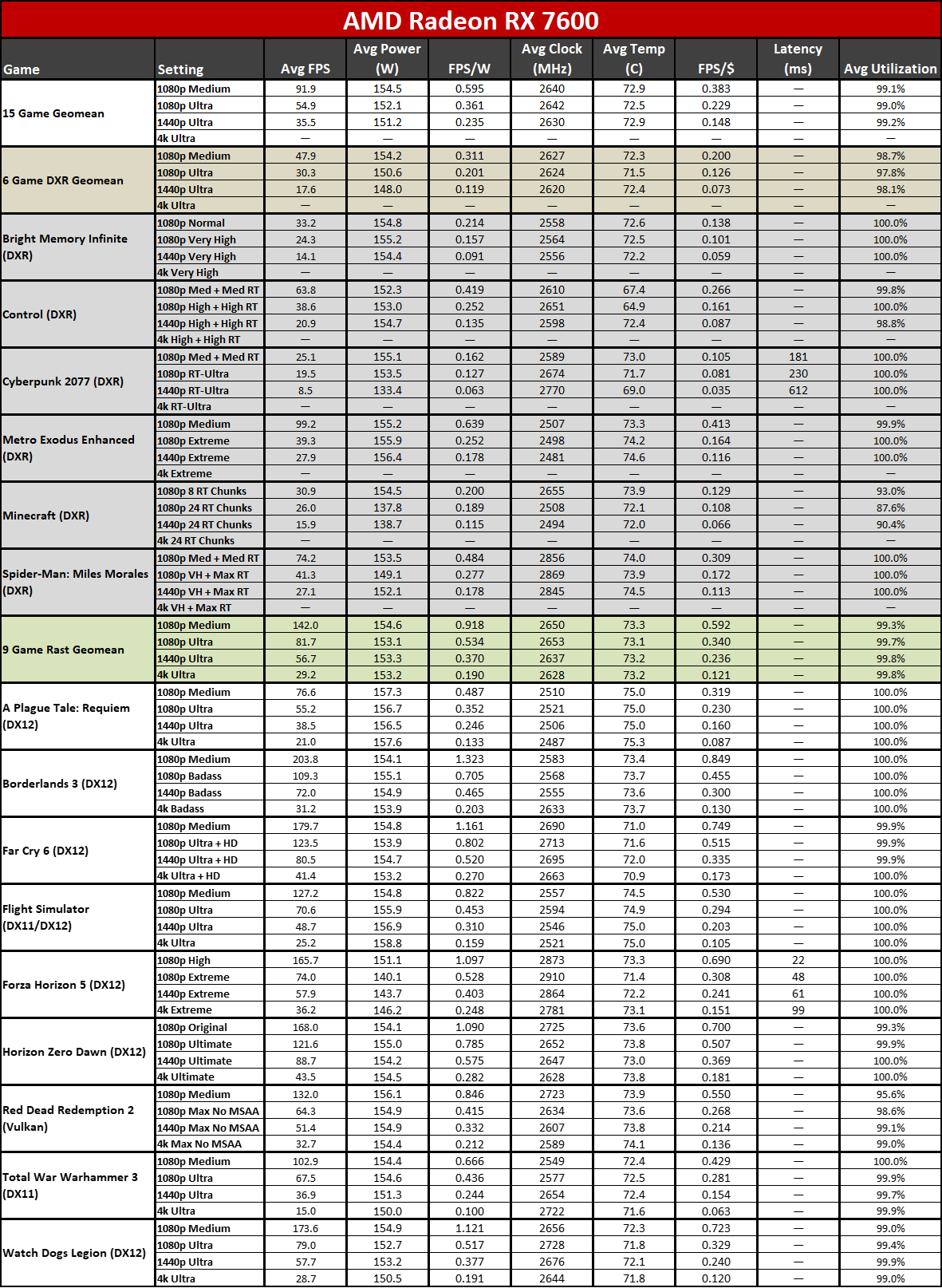

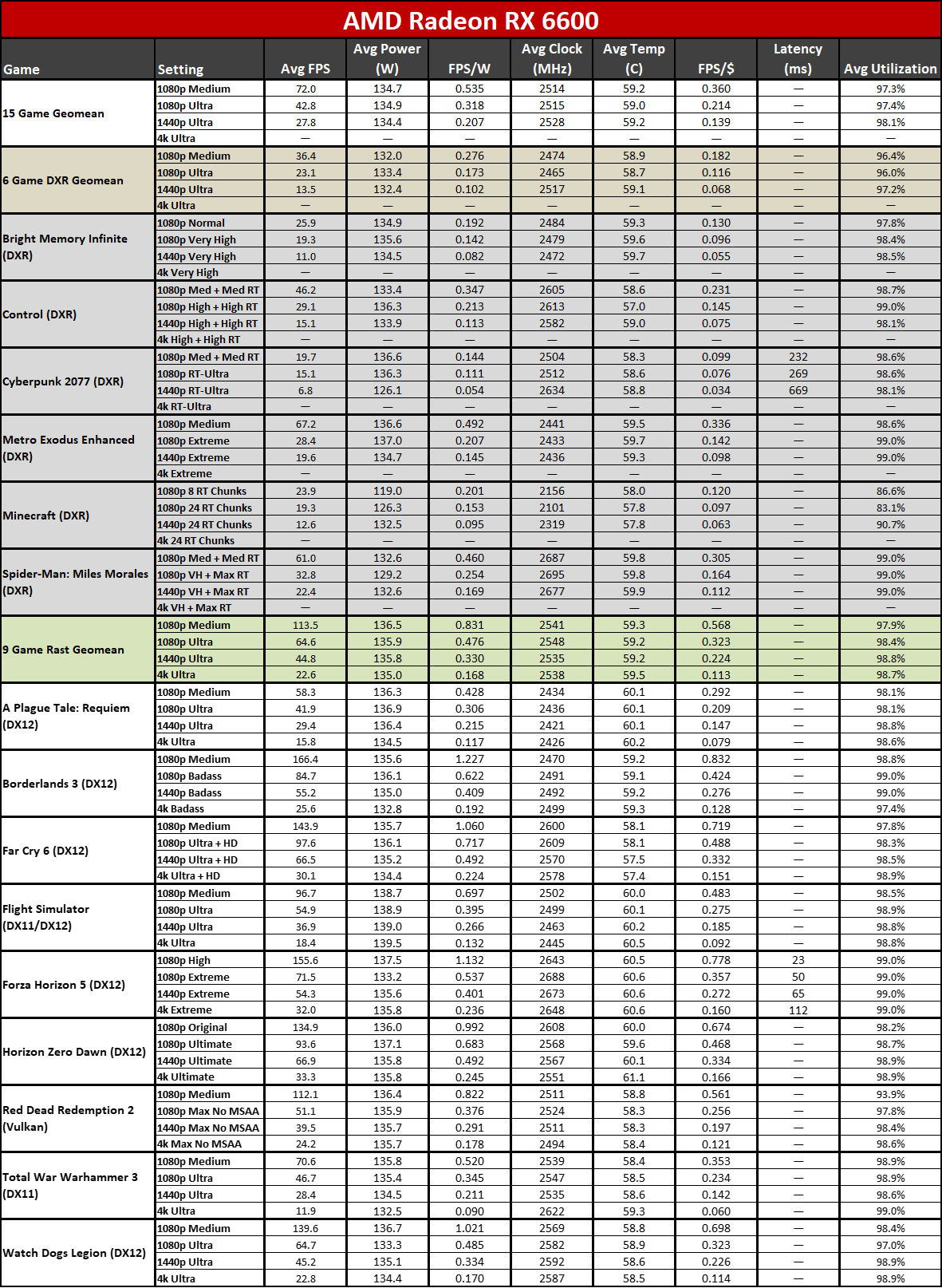

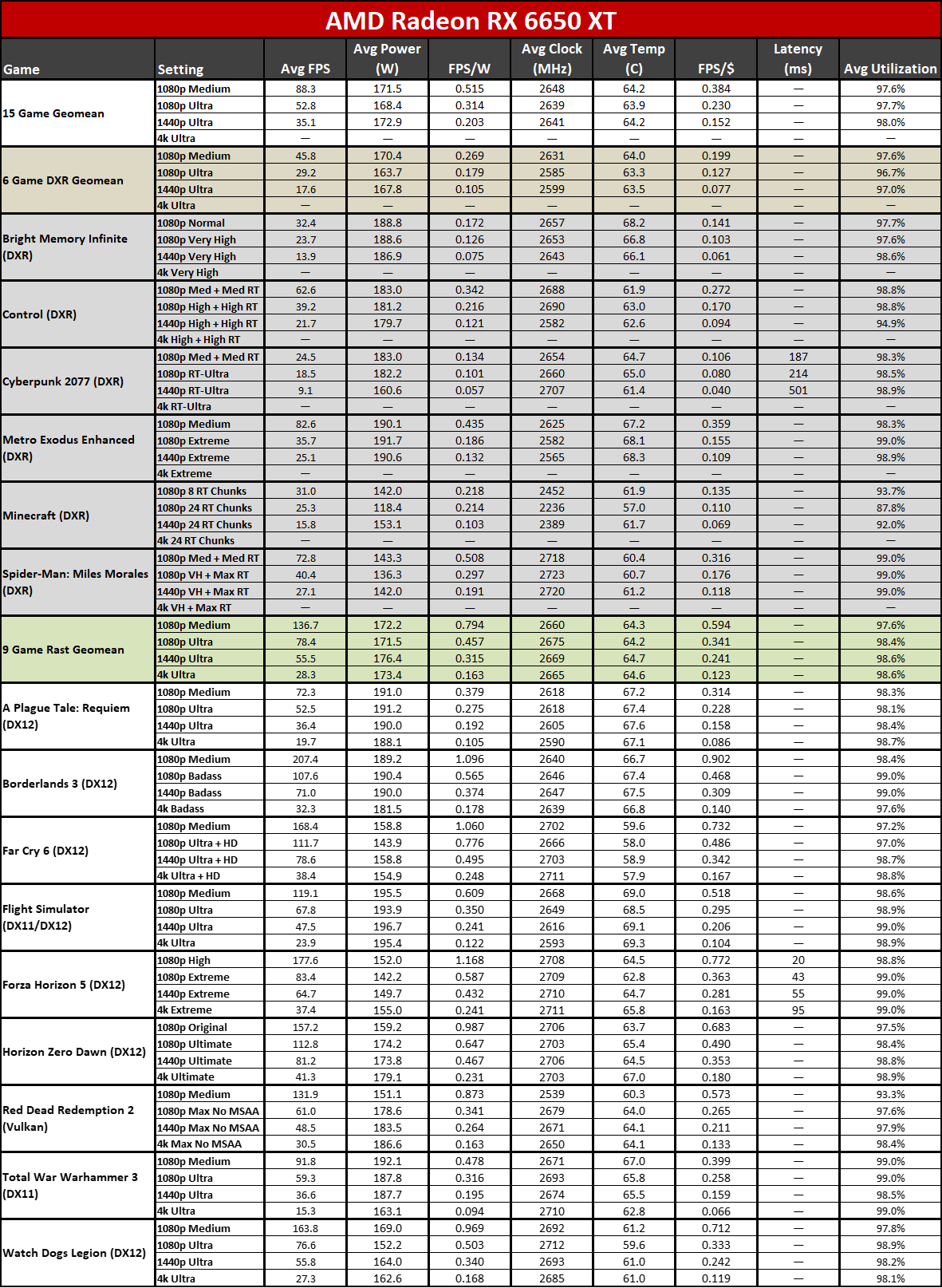

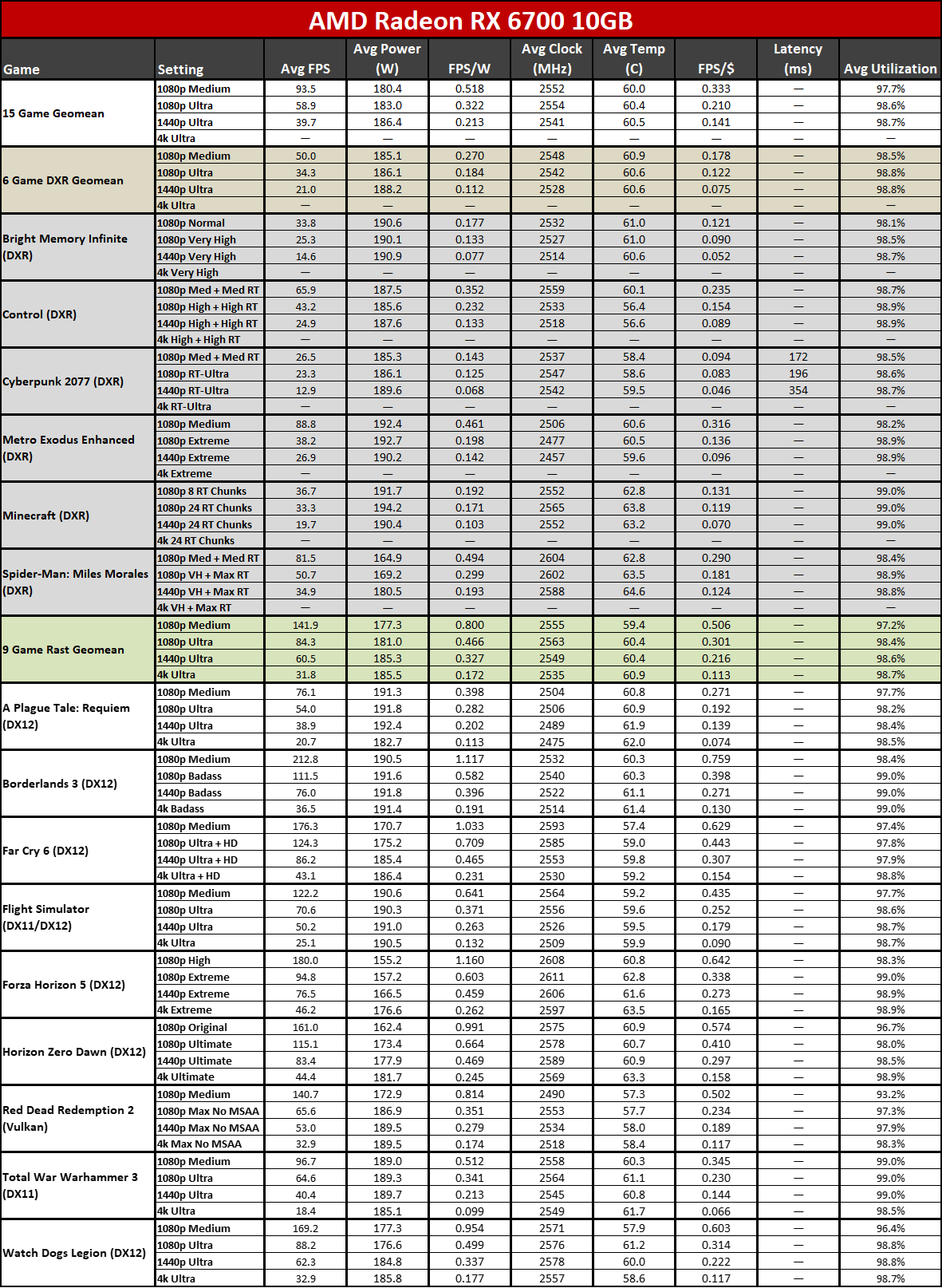

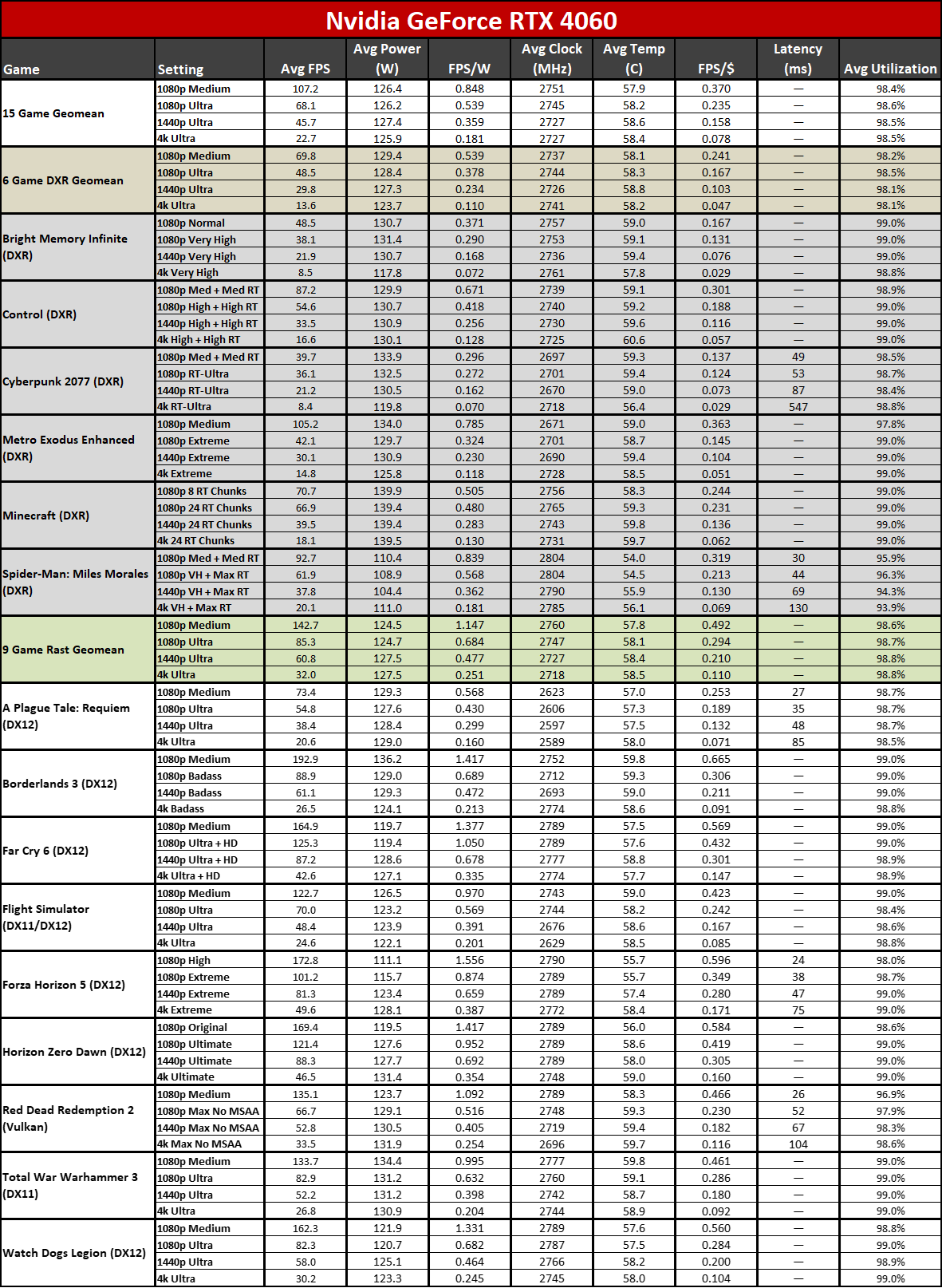

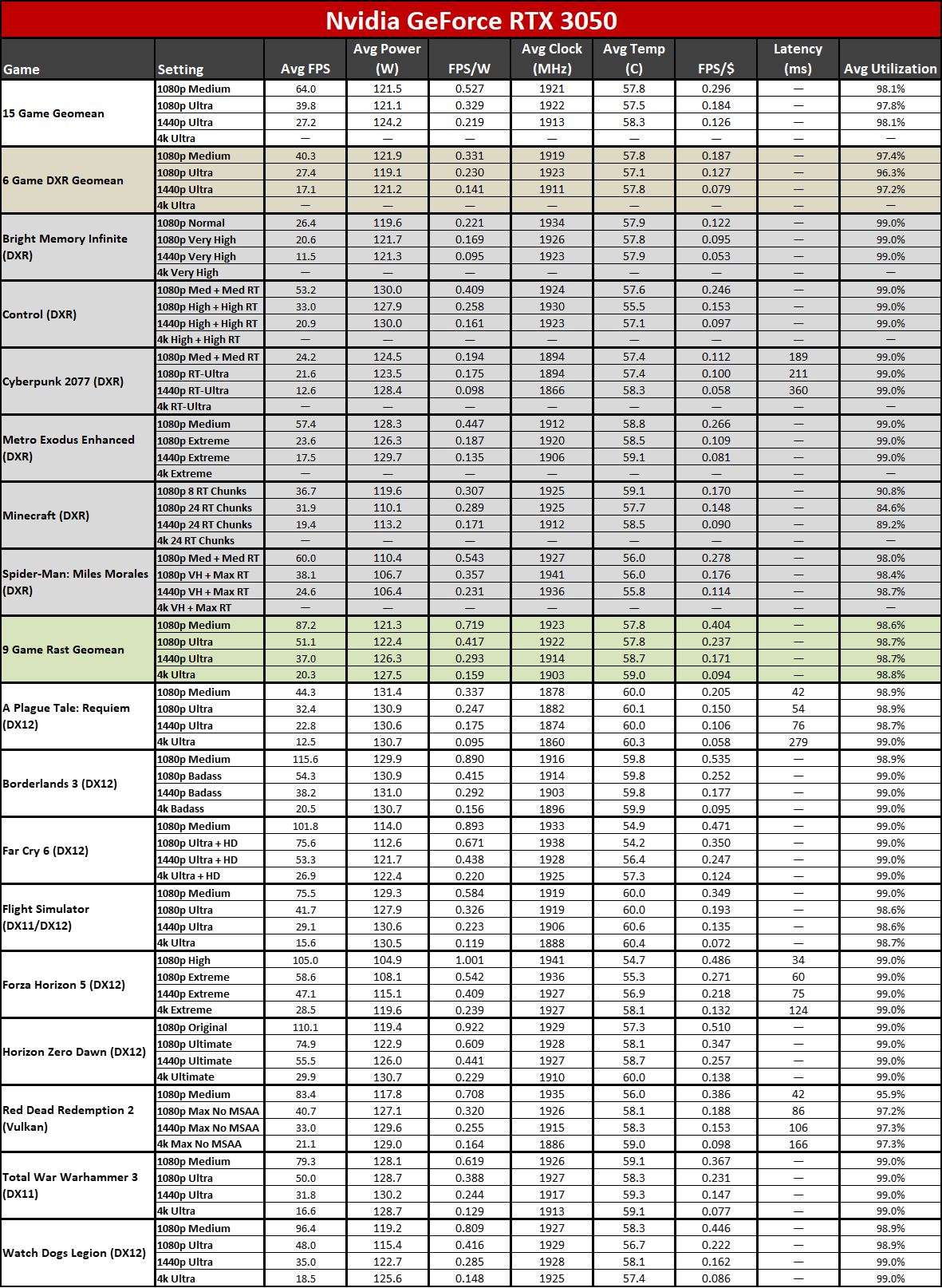

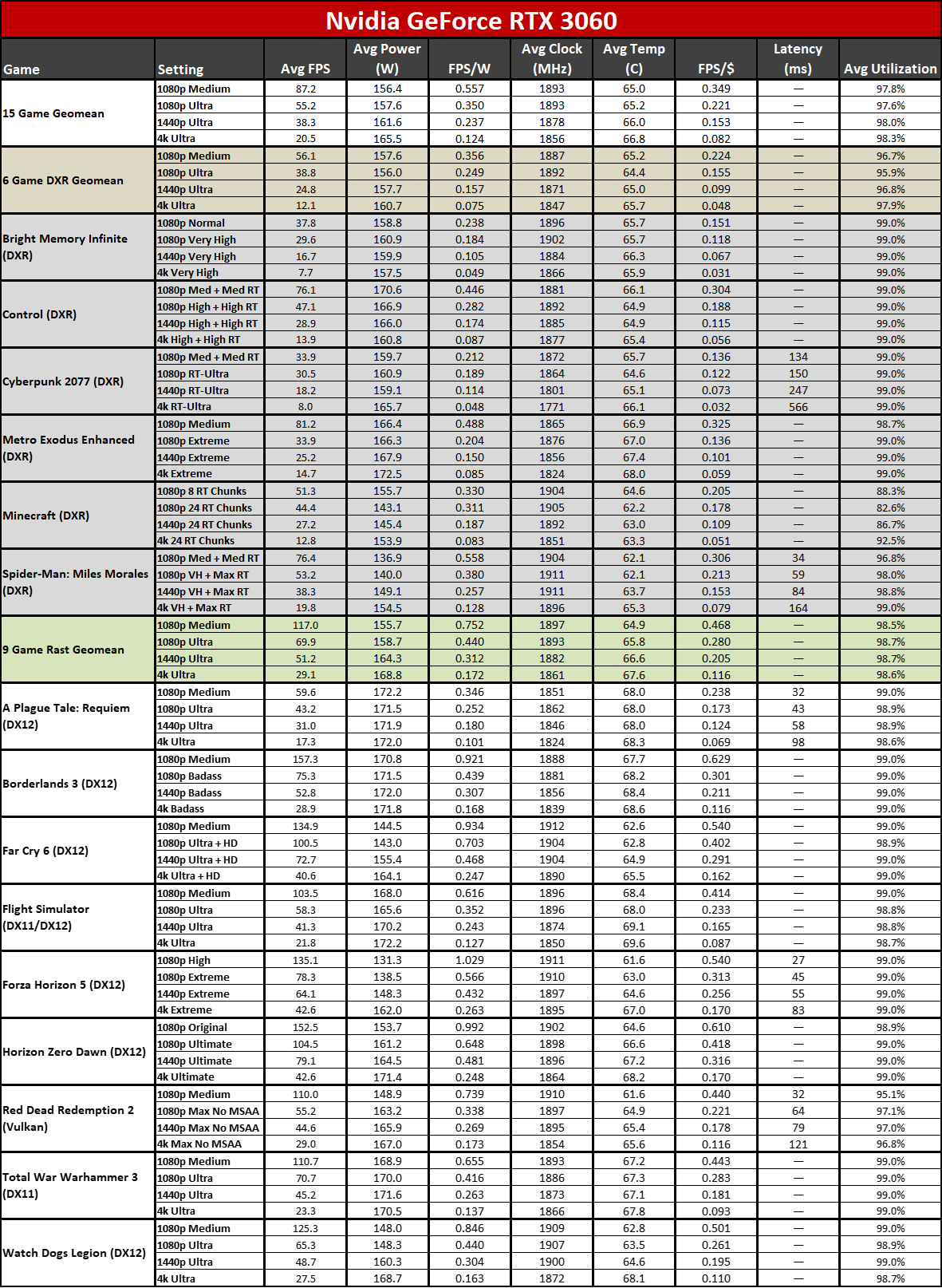

Our new test PC uses an Nvidia PCAT v2 device, and we've switched from the Powenetics hardware and software we've previously used to PCAT, as it gives us far more data without the need to run separate tests. PCAT with FrameView allows us to capture power, temperature, and GPU clocks from our full gaming suite. The charts below are the geometric mean across all 15 games, though we also have full tables showing the individual results further down the page.
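For readers unfamiliar with the geometric mean used for these summary charts, here is a minimal Python sketch. The function name and the sample wattages are hypothetical placeholders for illustration, not values from our test data.

```python
import math


def geometric_mean(values):
    """Return the geometric mean of positive numbers via the mean of logs."""
    if not values:
        raise ValueError("need at least one value")
    if any(v <= 0 for v in values):
        raise ValueError("geometric mean requires positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical per-game average power readings in watts (NOT review data).
sample_power_w = [180.0, 200.0, 190.0]

print(f"geometric mean: {geometric_mean(sample_power_w):.1f} W")
print(f"arithmetic mean: {sum(sample_power_w) / len(sample_power_w):.1f} W")
```

The geometric mean is never larger than the arithmetic mean, so a single outlier game pulls the overall figure around less than it would with a plain average.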

If you're wondering: No, PCAT does not favor Nvidia GPUs in any measurable way. We checked power with our previous setup for the same workload and compared that with the PCAT, and any differences were well within the margin of error (less than 1%). PCAT is external hardware that simply monitors the power draw of the PCIe power cables as well as the PCIe x16 slot by sitting between the PSU/motherboard and the graphics card.

We have separate charts for 1080p medium, 1080p ultra, and 1440p ultra below. (4K doesn't get charts as only nine of the fifteen games were tested... but you can see those results in the tables at the bottom of the page if you're interested.) We also have noise level test results further down the page.

Despite the dual 8-pin power connectors, the Sparkle Arc A580 comes nowhere near needing that much power. Those two connectors could supply up to 300W in spec, plus an additional 75W from the PCIe x16 slot. We measured power use of up to 197W on average at 1440p, with peak power draw of nearly 210W.
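The headroom is easy to put in numbers. The short Python sketch below assumes 150W per 8-pin connector (half of the 300W quoted above) and rounds our "nearly 210W" peak up to 210W; the constant and function names are our own for illustration.

```python
PCIE_8PIN_W = 150  # in-spec limit per 8-pin connector (300W for two)
PCIE_SLOT_W = 75   # in-spec limit for the PCIe x16 slot


def power_budget(num_8pin):
    """Total in-spec power available from the 8-pin connectors plus the slot."""
    return num_8pin * PCIE_8PIN_W + PCIE_SLOT_W


budget_w = power_budget(2)
measured_avg_w = 197   # highest average we measured, at 1440p
measured_peak_w = 210  # "nearly 210W" peak, rounded up (assumption)

print(f"budget: {budget_w} W")
print(f"headroom at peak: {budget_w - measured_peak_w} W")
print(f"peak utilization: {measured_peak_w / budget_w:.0%}")
```

Even at peak, the card uses a little over half of what the connectors and slot could deliver in spec.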

That's less than the A750, but not by much, and it's also quite a bit more than competing GPUs like the RX 6600, never mind latest generation Nvidia cards like the RTX 4060. Intel's first dedicated GPU architecture in decades isn't bad, but it's not able to match Nvidia's best. The use of TSMC N6 (compared to 4N for Nvidia) also hinders efficiency a bit.

Intel's official spec calls for a 185W TBP / TDP for the A580, but Sparkle overclocked the card a bit and lets it consume basically as much power as it wants. This plays into the boost clocks below, which tend to be decently high considering the relatively low 1700 MHz "Game Clock" that Intel lists as the spec.

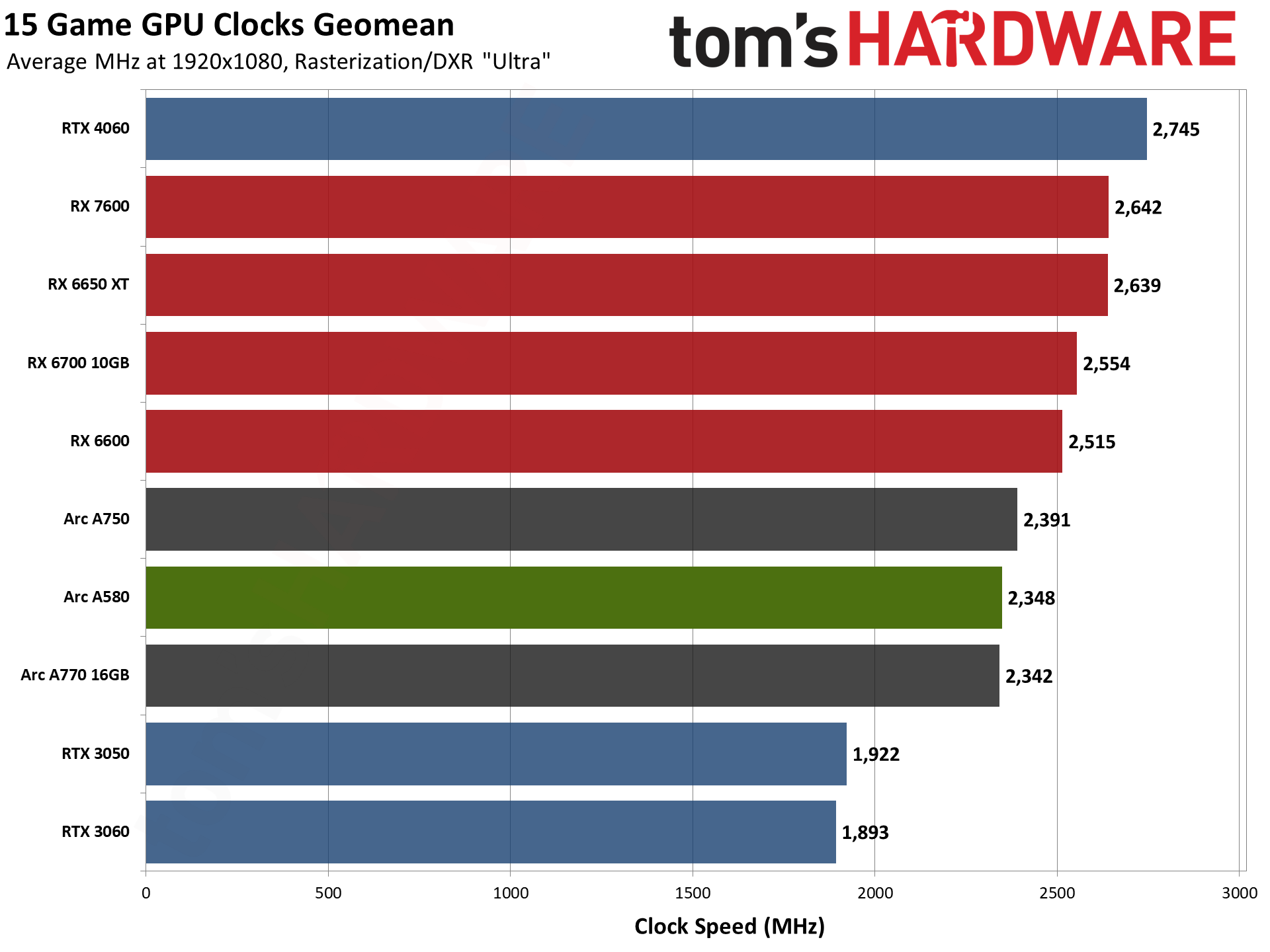

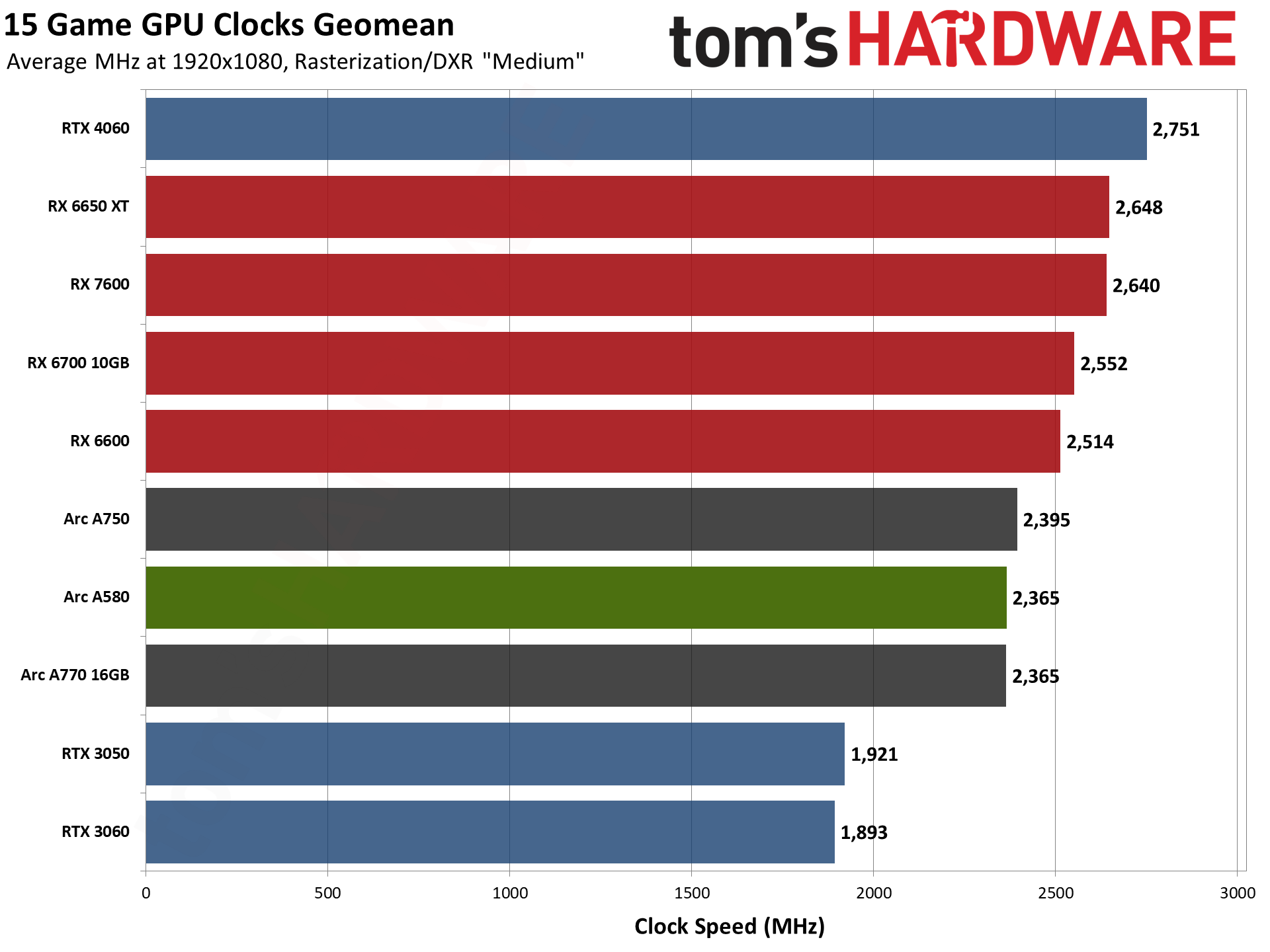

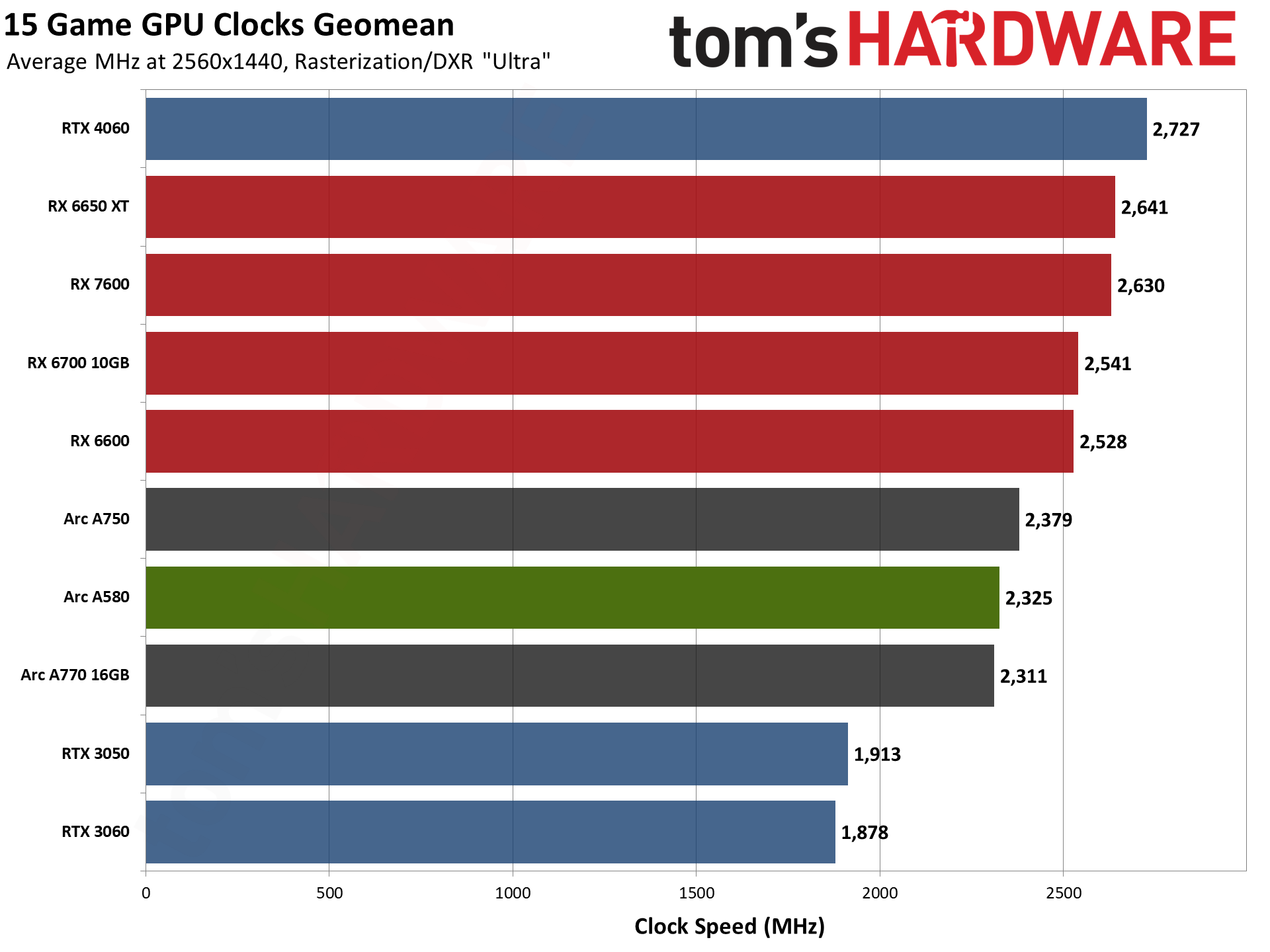

GPU clock speeds on their own don't say too much, but all the modern architectures are at least clocking at more than 2 GHz — a barrier we didn't pass until AMD RX 6000-series cards in 2020, and Nvidia RTX 40-series in 2022. Intel seems to have a hard cap of 2400 MHz on most of the Arc GPUs, though we have seen A380 hit 2450 MHz on occasion. The Arc A580 averages around 2.35 GHz, give or take 25 MHz depending on the game and resolution.

Let's quickly talk about the 1.7 GHz Game Clock, though, and what that actually means. Intel runs a selection of games as a test to see what clocks its GPUs hit. Then it uses the lowest number as the Game Clock — it's basically the minimum guaranteed clock. In practice, very few games will run anywhere near the lower limit, and we can't help but wonder if maybe the testing initially used old drivers where a few games simply weren't properly optimized or something.

Anyway, the lowest average clocks we saw in our test suite were still above 2.2 GHz on the Arc A580. Perhaps a different A580 card might clock lower, but with no reference cards, we suspect most will run at similar clocks to the Sparkle A580.

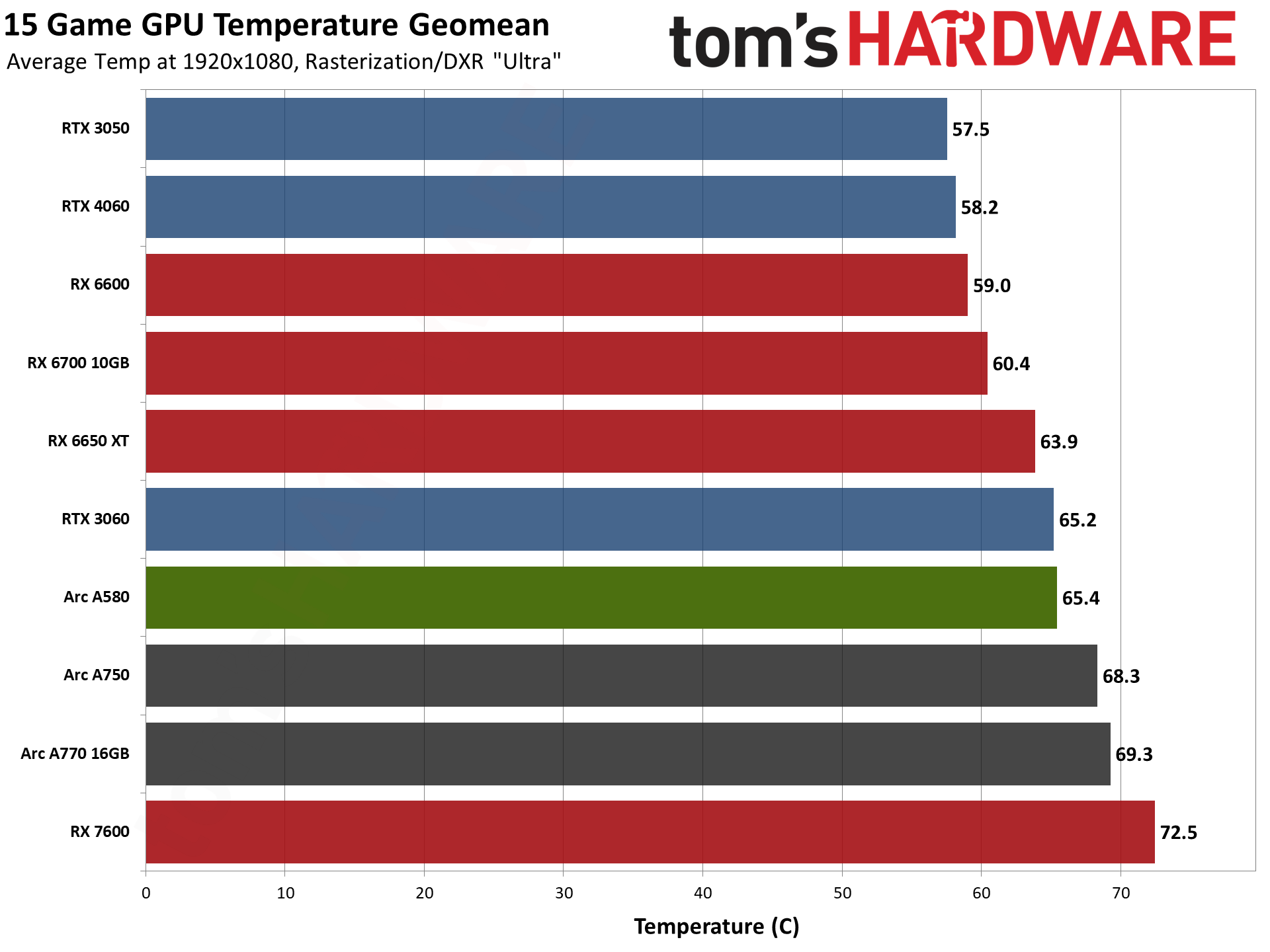

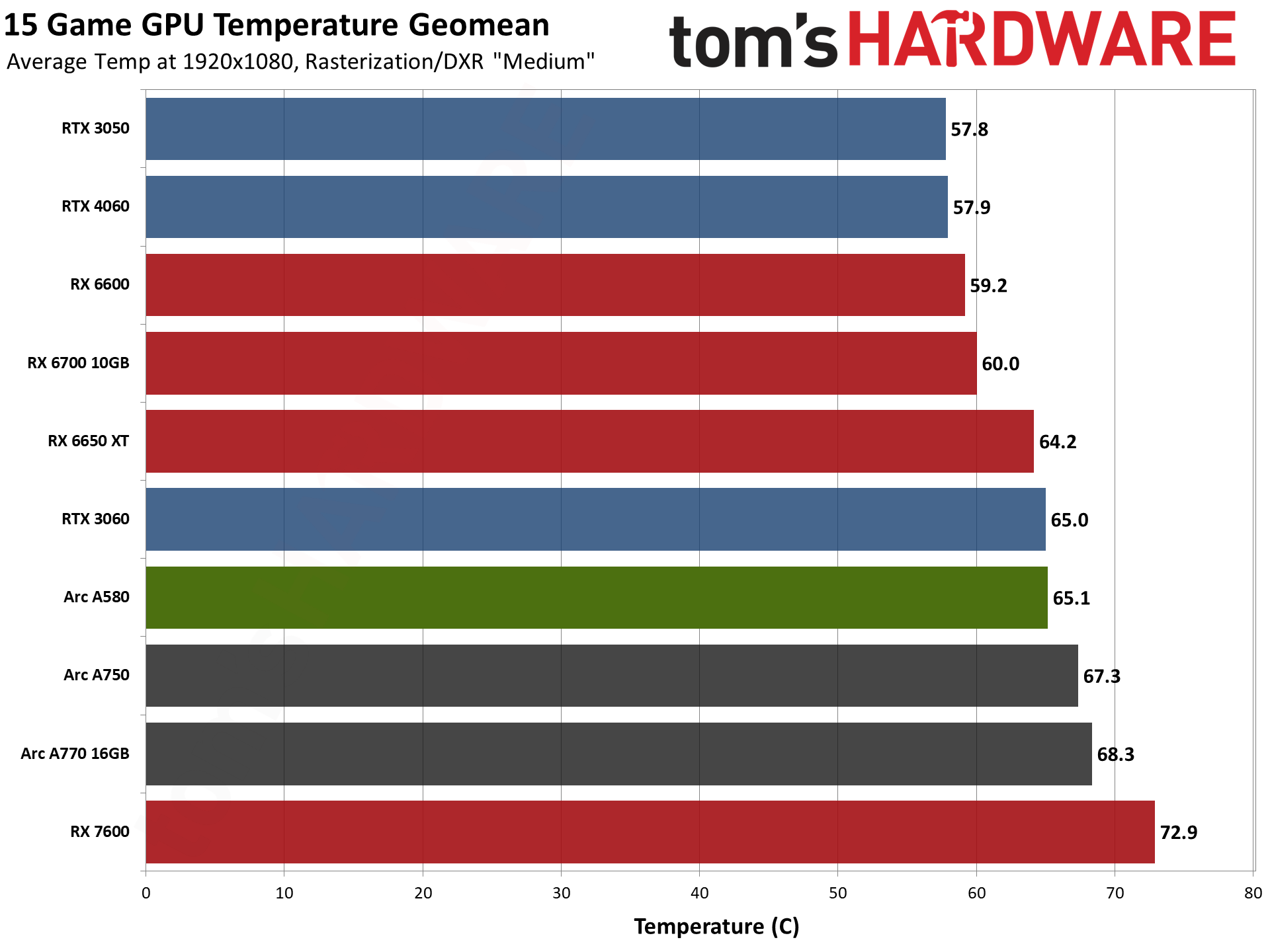

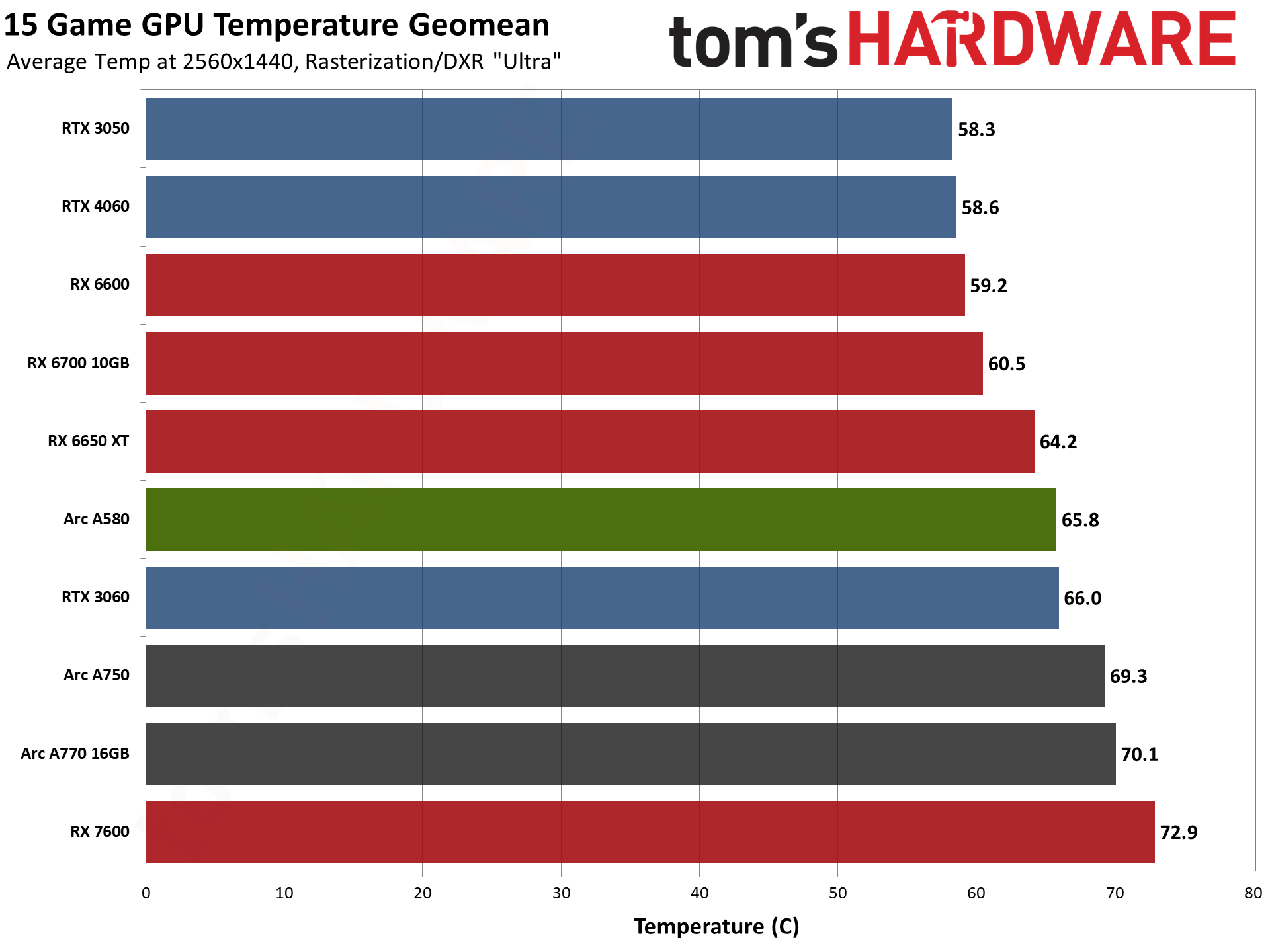

Sparkle's Arc A580 uses more power than a lot of the competing GPUs that are in our charts, and this pushes GPU temperatures up a bit higher. The only cards that ran hotter are the two Intel Arc Limited Edition reference cards and the AMD RX 7600 reference card — and that last is arguably not a great design, as most RX 7600 cards we've seen run much cooler and quieter.

Considering the power draw, Sparkle's design does okay on thermals. It uses 10W more power than the Sapphire RX 6700, and nearly 50W more power than the RX 7600, while maintaining temperatures below 70C. The highest temperature we saw in our testing was 68C, and that would have been an excellent result just a few years ago. Even now, it's more than acceptable.

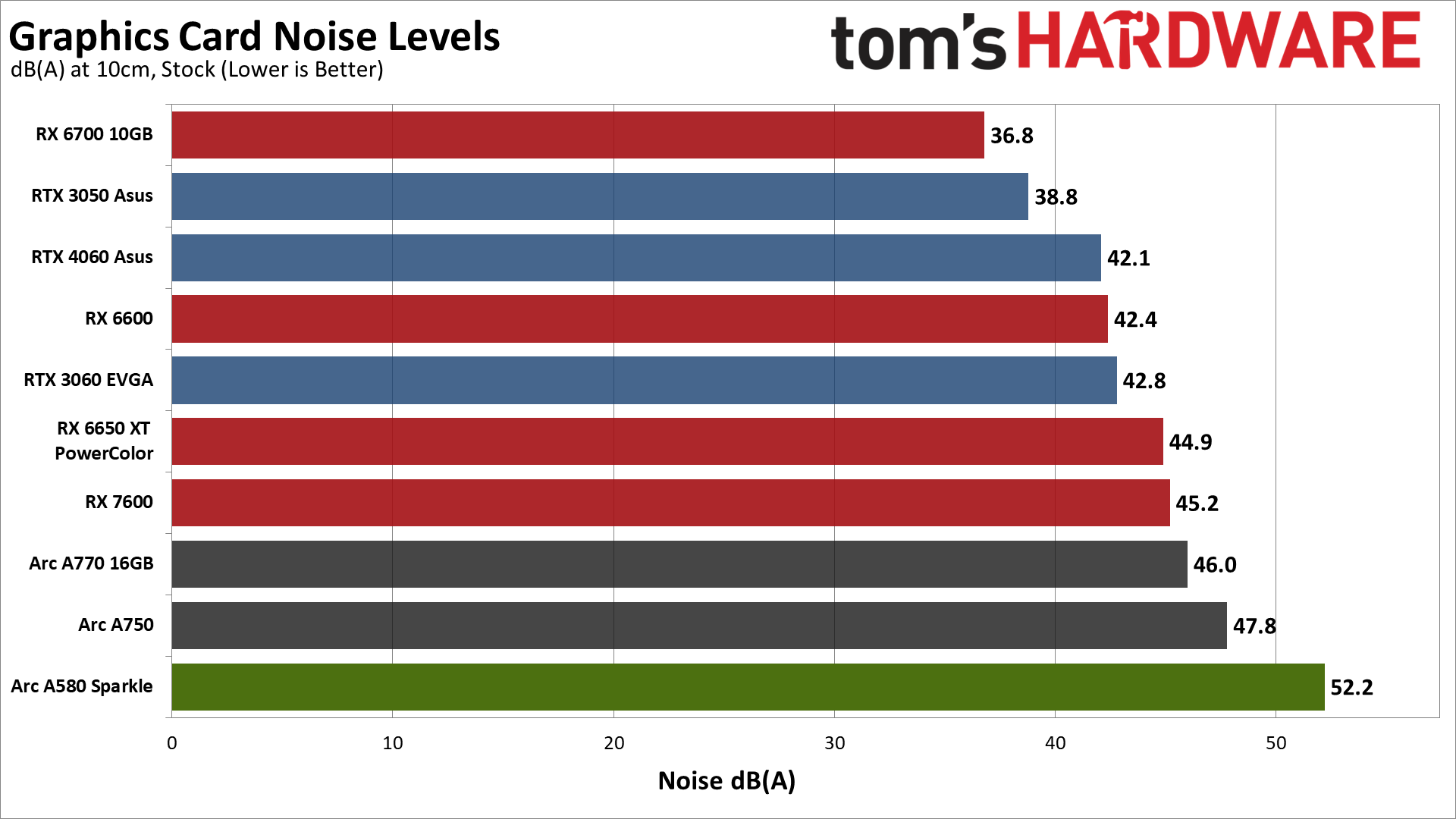

But we also need to look at noise levels, which is where things take a turn for the worse.

Sparkle Arc A580 Orc OC Noise Levels

Oh, Sparkle... what have you done? If you're in a quiet environment, the Sparkle A580 is absolutely audible. What's worse, the fan speeds aren't constant. Even at idle, the fans would often turn on for a few seconds and then shut off again, repeating that every 15 seconds or so. You can see this in the above video that I captured.

The problem is that Sparkle appears to switch the fans off below 50C, which ought to be okay... but idle power draw on the card is around 50W and so it eventually breaks 50C and the fans spin up. A few seconds later, it's back below 50C and the fans turn back off. Sparkle would be far better served by dropping to 20% fan speed below 50C, or setting the fans off temperature to 40C and leaving the fan on temperature at 50C. But it's not just idle noise that's a problem.
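The second suggestion is plain hysteresis: separate fan-on and fan-off thresholds. Here is a minimal Python sketch of the idea. It is not Sparkle's firmware logic; the function names and the temperature trace are hypothetical, and the trace ignores the cooling effect of the fans themselves.

```python
def next_fan_state(fan_on, temp_c, on_at_c=50.0, off_at_c=40.0):
    """Return whether the fan should run, using separate on/off thresholds."""
    # A running fan keeps running until the GPU cools below off_at_c;
    # a stopped fan stays stopped until the GPU reaches on_at_c.
    threshold = off_at_c if fan_on else on_at_c
    return temp_c >= threshold


def count_toggles(temps, on_at_c, off_at_c):
    """Count how many times the fan switches state over a temperature trace."""
    fan_on = False
    toggles = 0
    for temp_c in temps:
        new_state = next_fan_state(fan_on, temp_c, on_at_c, off_at_c)
        if new_state != fan_on:
            toggles += 1
        fan_on = new_state
    return toggles


# Hypothetical idle trace hovering right around 50C (NOT measured data).
trace = [48, 49, 50, 49, 48, 49, 50, 49, 48, 50, 49]

print("single 50C threshold:", count_toggles(trace, 50, 50), "toggles")
print("on at 50C, off at 40C:", count_toggles(trace, 50, 40), "toggles")
```

With one shared threshold, every small wobble around 50C flips the fan. With a 10C gap between the two thresholds, the fan turns on once and stays on until the card has properly cooled.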

Under load, where fan speeds usually settle in at a relatively fixed speed, the Sparkle card again keeps changing the fan speed. We could hear the fans go from about 50 dB(A) and 2,000 RPM to 52 dB(A) and 2,200 RPM, and then back down to 50 dB(A) again. It's not entirely clear why that's happening, as the GPU temperature seemed relatively static at around 66C (give or take 1C). But while the fluctuating fan speed can definitely be annoying, noise levels in general just aren't very good.

Out of all the cards tested for this review, the Sparkle A580 was the noisiest. Using the peak 52.2 dB(A) noise level we measured during testing, that's 4.4 dB(A) more than the next closest card... a card that uses slightly more power and doesn't even have fans with integrated rims. And compared to the better cards in our group, like the Sapphire RX 6700 10GB, the difference between 37 dB(A) and 52 dB(A) is absolutely massive.

Keep in mind that decibels use a logarithmic scale. Every 3 dB represents a doubling of the sound energy, while an increase of around 10 dB is typically perceived as being twice as loud. Frequency also comes into play, along with some other factors like the above-mentioned changing noise levels. However you want to slice it, we found the Sparkle card tended to make an annoying amount of noise.
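To put the 37 dB(A) versus 52 dB(A) gap in perspective, here is a small Python sketch of both rules of thumb. The function names are ours, and the "10 dB is twice as loud" relationship is a rough perceptual approximation rather than an exact law.

```python
def energy_ratio(delta_db):
    """Ratio of sound energy for a level difference in dB."""
    return 10 ** (delta_db / 10)


def perceived_loudness_ratio(delta_db):
    """Rough perceived loudness ratio: about 2x per 10 dB (approximation)."""
    return 2 ** (delta_db / 10)


delta_db = 52 - 37  # Sparkle A580 vs. Sapphire RX 6700 10GB

print(f"difference: {delta_db} dB")
print(f"sound energy ratio: {energy_ratio(delta_db):.1f}x")
print(f"perceived loudness ratio: {perceived_loudness_ratio(delta_db):.1f}x")
```

By these rules, a 15 dB gap works out to roughly 30 times the sound energy and somewhere close to three times the perceived loudness.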

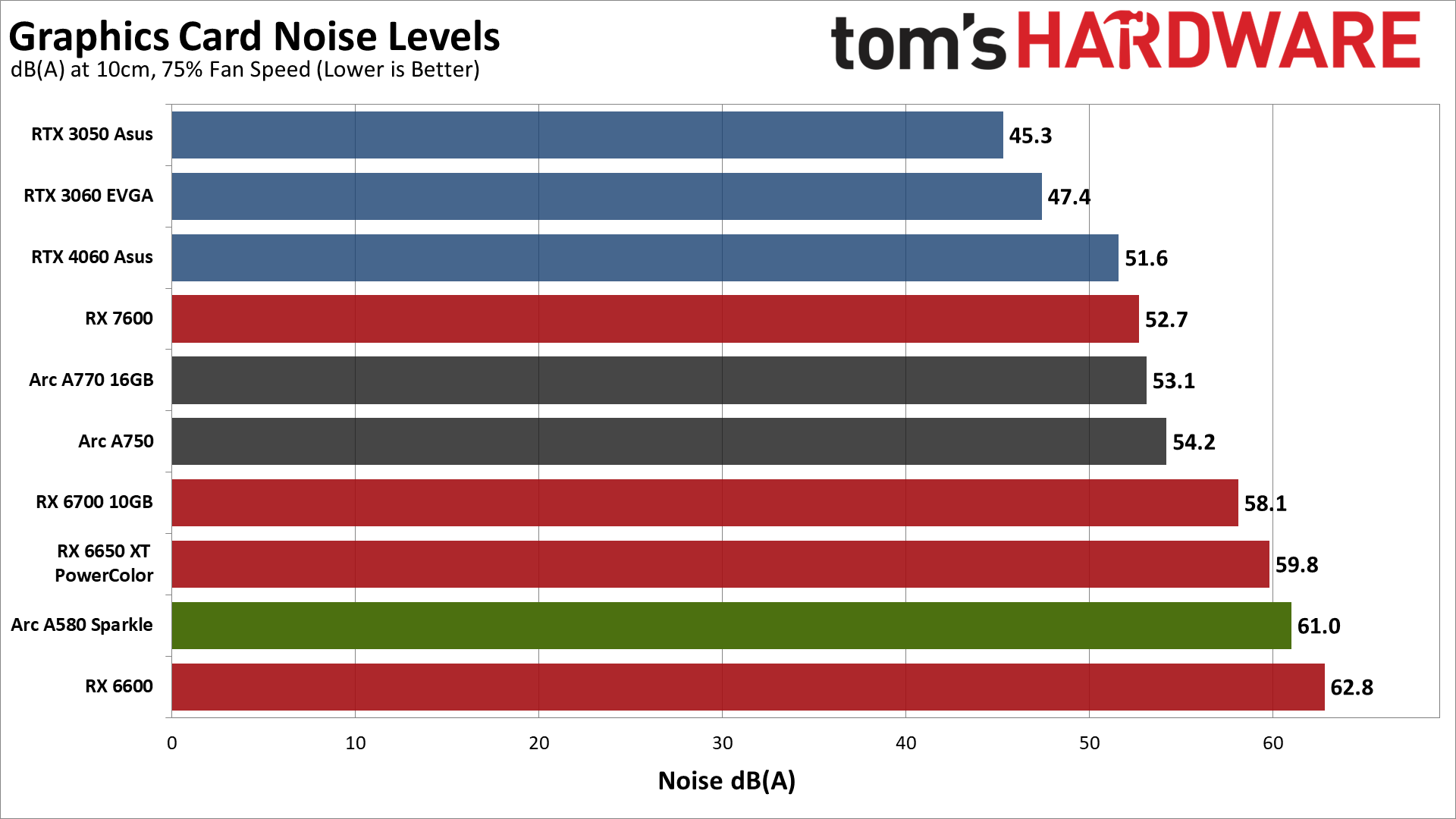

You can at least somewhat fix this by setting your own custom fan speed curve, but the Sparkle card in general doesn't seem to want to hold a steady RPM. When we used the Intel Arc Control Panel to assign a static 75% fan speed, for example, we still saw fluctuations in fan speed and noise. Maybe this can be fixed, but out of the box, we didn't much care for Sparkle's fan profiles.

For reference, our noise test consists of running Metro Exodus, as it's one of the more power hungry games. We load a save, with graphics set to appropriately strenuous levels (1080p ultra in this case), and then let the game sit for at least 15 minutes before checking noise levels. We place the SPL (sound pressure level) meter 10cm from the card, with the mic aimed at the center of the back fan. This helps minimize the impact of other noise sources, like the fans on the CPU cooler. The noise floor of our test environment and equipment is around 31–32 dB(A).

Intel's control panel doesn't directly report fan speed as a percentage, but it looks to be around 50% — and it varied between 2000 and 2200 RPM. We also tested with a static fan speed of 75%, which resulted in noise levels of 60–64 dB(A). We don't expect the Sparkle card would normally get that loud, but again, the constantly shifting fan speed makes it more noticeable than other cards.

| Graphics Card | Price (MSRP) | Value Rank | Value (FPS/$) | Power | Efficiency Rank | Efficiency (FPS/W) |
|---|---|---|---|---|---|---|
| Intel Arc A750 | $190 ($250) | 1 | 0.292 | 199W | 9 | 0.279 |
| Intel Arc A580 | $180 ($180) | 2 | 0.279 | 194W | 10 | 0.259 |
| GeForce RTX 4060 | $290 ($300) | 3 | 0.232 | 127W | 1 | 0.531 |
| Radeon RX 6650 XT | $230 ($400) | 4 | 0.229 | 171W | 7 | 0.308 |
| Radeon RX 7600 | $240 ($270) | 5 | 0.227 | 153W | 2 | 0.357 |
| GeForce RTX 3060 | $250 ($330) | 6 | 0.220 | 160W | 3 | 0.344 |
| Intel Arc A770 16GB | $280 ($350) | 7 | 0.217 | 210W | 8 | 0.289 |
| Radeon RX 6600 | $200 ($330) | 8 | 0.213 | 135W | 6 | 0.316 |
| Radeon RX 6700 10GB | $281 ($430) | 9 | 0.207 | 184W | 5 | 0.317 |
| GeForce RTX 3050 | $216 ($250) | 10 | 0.184 | 123W | 4 | 0.323 |

Here's the full rundown of all our testing, including performance per watt and performance per dollar metrics. The prices are based on the best retail price we can find for a new card, at the time of writing. These values can fluctuate, though we don't know what will ultimately happen with A580 pricing yet.

Based on the current retail prices, the best GPU value of the cards used in this review is the Arc A750. The A580 ranks second, followed by the RTX 4060, RX 6650 XT, and RX 7600. But while the base price to performance ratio may look good, we also need to discuss efficiency.

The Arc A580, or at least the Sparkle card we used for testing, ranked as the least efficient GPU out of the ten included in this review. The Arc A750 came in second worst. Not surprisingly, the RTX 4060 was the most efficient GPU in this group, followed by the RX 7600, RTX 3060, and RTX 3050.
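The two metrics in the table share the same underlying average FPS, so you can back that figure out either way: price times FPS/$, or power times FPS/W. The Python sketch below does this for four rows copied from the table. The helper name is ours, and the derived FPS values are approximate because the table figures are rounded.

```python
# (card, price_usd, fps_per_dollar, power_w, fps_per_watt), copied from the table
rows = [
    ("Intel Arc A750", 190, 0.292, 199, 0.279),
    ("Intel Arc A580", 180, 0.279, 194, 0.259),
    ("GeForce RTX 4060", 290, 0.232, 127, 0.531),
    ("Radeon RX 7600", 240, 0.227, 153, 0.357),
]


def implied_fps(row):
    """Back out average FPS two ways: from FPS/$ and from FPS/W."""
    _, price_usd, fps_per_dollar, power_w, fps_per_watt = row
    return price_usd * fps_per_dollar, power_w * fps_per_watt


for row in rows:
    from_value, from_efficiency = implied_fps(row)
    print(f"{row[0]}: {from_value:.1f} FPS (via $), "
          f"{from_efficiency:.1f} FPS (via W)")

# Rank this subset by efficiency, best first.
by_efficiency = sorted(rows, key=lambda r: r[4], reverse=True)
print([r[0] for r in by_efficiency])
```

The two estimates should agree to within rounding error for each card, which is a quick sanity check on the table.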


Current page: Intel Arc A580: Power, Clocks, Temps, and Noise


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

AgentBirdnest: Awesome review, as always!

Dang, was really hoping this would be more like $150-160. I bet the price will drop before long, though; I can't imagine many people choosing this over the A750 that is so closely priced. Still, it just feels good to see a card that can actually play modern AAA games for under $200.

JarredWaltonGPU (replying to AgentBirdnest): Yeah, the $180 MSRP just feels like wishful thinking right now rather than reality. I don't know what supply of Arc GPUs looks like from the manufacturing side, and I feel like Intel may already be losing money per chip. But losing a few dollars rather than losing $50 or whatever is probably a win. This would feel a ton better at $150 or even $160, and maybe add half a star to the review.

hotaru.hino: Intel does have some cash to burn and if they are selling these cards at a loss, it'd at least put weight that they're serious about staying in the discrete GPU business.

JarredWaltonGPU (replying to hotaru.hino): That's the assumption I'm going off: Intel is willing to take a short-term / medium-term loss on GPUs in order to bootstrap its data center and overall ambitions. The consumer graphics market is just a side benefit that helps to defray the cost of driver development and all the other stuff that needs to happen.

But when you see the number of people who have left Intel Graphics in the past year, and the way Gelsinger keeps divesting of non-profitable businesses, I can't help but wonder how much longer he'll be willing to let the Arc experiment continue. I hope we can at least get to Celestial and Druid before any final decision is made, but that will probably depend on how Battlemage does.

Intel's GPU has a lot of room to improve, not just on drivers but on power and performance. Basically, look at Ada Lovelace and that's the bare minimum we need from Battlemage if it's really going to be competitive. We already have RDNA 3 as the less efficient, not quite as fast, etc. alternative to Intel, and AMD still has better drivers. Matching AMD isn't the end goal; Intel needs to take on Nvidia, at least up to the 4070 Ti level.

mwm2010: If the price of this goes down, then I would be very impressed. But because of the $180 price, it isn't quite at its full potential. You're probably better off with a 6600.

btmedic04: Arc just feels like one of the industry's greatest "what ifs" to me. Had these launched during the great GPU shortage of 2021, Intel would have sold as many as they could produce. Hopefully Intel sticks with it, as consumers desperately need a third vendor in the market.

cyrusfox (replying to JarredWaltonGPU): What other choice do they have? If they canned their dGPU efforts, they still need staff to support for iGPU, or are they going to give up on that and license GPU tech? Also what would they do with their datacenter GPU (Ponte Vecchio subsequent product).

Only clear path forward is to continue and I hope they do bet on themselves and take these licks (financial loss + negative driver feedback) and keep pushing forward. But you are right Pat has killed a lot of items and spun off some great businesses from Intel. I hope battlemage fixes a lot of the big issues and also hope we see 3rd and 4th gen Arc play out.

bit_user: Thanks @JarredWaltonGPU for another comprehensive GPU review!

I was rather surprised not to see you reference its relatively strong Raytracing, AI, and GPU Compute performance, in either the intro or the conclusion. For me, those are definitely highlights of Alchemist, just as much as AV1 support.

Looking at that gigantic table, on the first page, I can't help but wonder if you can ask the appropriate party for a "zoom" feature to be added for tables, similar to the way we can expand embedded images. It helps if I make my window too narrow for the sidebar - then, at least the table will grow to the full width of the window, but it's still not wide enough to avoid having the horizontal scroll bar.

Whatever you do, don't skimp on the detail! I love it!

JarredWaltonGPU (replying to bit_user): The evil CMS overlords won't let us have nice tables. That's basically the way things shake out. It hurts my heart every time I try to put in a bunch of GPUs, because I know I want to see all the specs, and I figure others do as well. Sigh.

As for RT and AI, it's decent for sure, though I guess I just got sidetracked looking at the A750. I can't help but wonder how things could have gone differently for Intel Arc, but then the drivers still have lingering concerns. (I didn't get into it as much here, but in testing a few extra games, I noticed some were definitely underperforming on Arc.)