Can Lucidlogix Right Sandy Bridge’s Wrongs? Virtu, Previewed

Quick Sync was Intel's secret Sandy Bridge weapon, kept quiet for five years and unveiled at the very last moment. But there's a chance that desktop enthusiasts will miss out on that functionality. That is, unless Lucidlogix has anything to do with it.

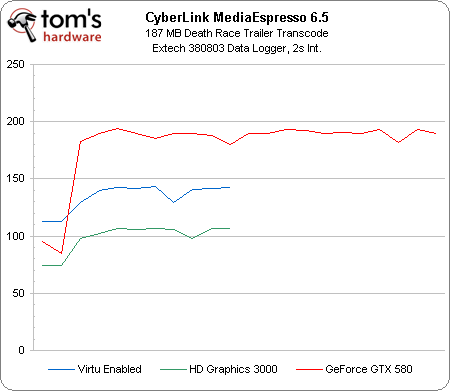

Benchmark Results: Power, Analyzed

Lucidlogix is touting power-saving as one of the reasons to use Virtu—and that’s one of the biggest reasons why it’s using Intel’s integrated graphics engine as a native adapter, rather than the discrete card. Purportedly, it’s possible to power down the add-in card, saving power when it’s not needed.

Our power test shows that isn’t necessarily the case in this version of the software, though. Charting a run of the same Death Race trailer transcode, Intel’s HD Graphics 3000 on its own uses the least amount of power. Drop in a GeForce GTX 580, which just sits there idling while Quick Sync does its work, and power consumption jumps up by about 40 W.

Disable Virtu and use the GeForce card on its own, employing CUDA-accelerated transcoding, and power peaks even higher. Not only that, but the workload takes more than twice as long to complete, too. And as a nail in the coffin, transcode quality is sub-standard (for more on transcoding quality, check out: Video Transcoding Examined: AMD, Intel, And Nvidia In-Depth).

To confirm those results, I let all three configurations sit at idle to see whether Virtu would power down the GTX 580.

| Configuration | Power Consumption At Idle |
|---|---|
| HD Graphics 3000 + GeForce GTX 580 | 112 W |
| HD Graphics 3000 | 49 W |
| GeForce GTX 580 | 108 W |

The Sandy Bridge-based configuration, sans GeForce GTX 580, delicately sips 49 W. With the HD Graphics 3000 core disabled and a GeForce GTX 580 driving our display, I recorded 108 W of power use. Then, with Virtu enabled and the two display adapters idling along, power consumption jumped to 112 W.
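To put those idle numbers in perspective, the differences can be worked out directly. The short Python sketch below is only illustrative arithmetic on the three readings from the table above; the dictionary and the `delta` helper are names made up for this example and are not part of any Virtu or Intel tooling.

```python
# Idle power readings in watts, copied from the table above.
idle_watts = {
    "HD Graphics 3000 + GeForce GTX 580": 112,
    "HD Graphics 3000": 49,
    "GeForce GTX 580": 108,
}


def delta(config_a, config_b, readings=idle_watts):
    """Return how many more watts config_a draws than config_b (hypothetical helper)."""
    return readings[config_a] - readings[config_b]


# Virtu with both adapters present, compared against each single-adapter setup.
virtu_vs_igp = delta("HD Graphics 3000 + GeForce GTX 580", "HD Graphics 3000")
virtu_vs_discrete = delta("HD Graphics 3000 + GeForce GTX 580", "GeForce GTX 580")

print(f"Virtu (both adapters) vs. integrated only: +{virtu_vs_igp} W")
print(f"Virtu (both adapters) vs. GTX 580 only: +{virtu_vs_discrete} W")
```

In other words, adding the idle GTX 580 under Virtu costs 63 W over the integrated-only setup, and the combined configuration still draws 4 W more than the GeForce card running by itself, which is the opposite of what you would expect if the add-in card were being powered down.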

Hopefully we’ll see a future revision of Virtu that follows through on the promises of power reduction. For now, I don’t see this as an issue on the desktop. It’d be more of a concern in the mobile space.


mister g I'm pretty sure that Fusion only works with AMD parts, but the idea would be the same. Anybody else remember this company's ads on the side of some of Tom's articles?

I suppose a multi-monitor setup, with the main screen for gaming on the discrete card (assuming the game only uses that one screen) and a secondary screen on the Z68 output of the Intel HD graphics, will not have any need for this, and will just run perfectly.

That's how I will roll, once Z68 gets out.

haplo602 Seems like we are heading to what Voodoo graphics and TV tuners were doing a long, long time ago, just now over the PCIe bus.

I wonder why it's so difficult to map framebuffers and create virtual screens?

RobinPanties This sounds like software technology that should be built straight into OSes, instead of added as separate layers... maybe OS manufacturers need to wake up (*cough* Microsoft)

truehighroller I already sent back my Sandy Bridge setup, that's too bad. Guess it's Intel's loss, huh?

lradunovic77 This is another absolutely useless piece of crap. Why in the world would you deal with another stupid layer, and why would you use Intel's integrated graphics chip (or any integrated solution) along with your dedicated video card???

Conclusion of this article is...don't go with such a nonsense solution.