Nvidia GeForce GTX 1070 Ti 8GB Review: Vega In The Crosshairs

Temperature & Clock Rates

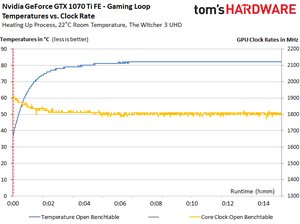

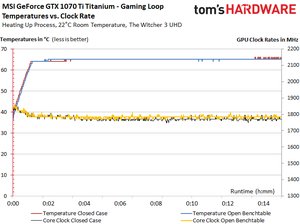

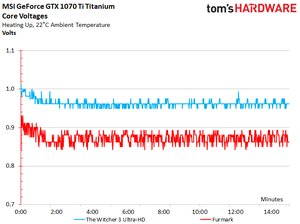

Comparing the GeForce GTX 1070 Ti Titanium 8G’s gaming frequencies to those of Nvidia’s Founders Edition card yields an interesting finding: the latter achieves a slightly higher GPU Boost clock rate in spite of significantly higher temperatures. Did we get a bad sample from MSI or a great one from Nvidia? A comparison with boards from Zotac, Gigabyte, Colorful, and Gainward suggests that, as with previous launches, we really hit the jackpot with our Founders Edition card.

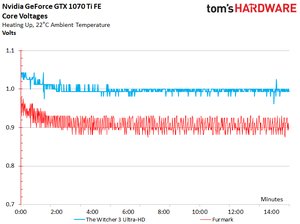

Otherwise, the two boards behave as you'd expect. As temperatures rise, Nvidia's GeForce GTX 1070 Ti FE drops from its initial 1911 MHz to a clock rate that fluctuates just above 1800 MHz, whereas MSI's GeForce GTX 1070 Ti Titanium settles just below the Founders Edition’s range.

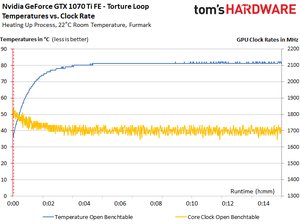

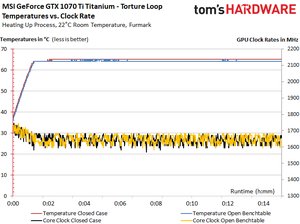

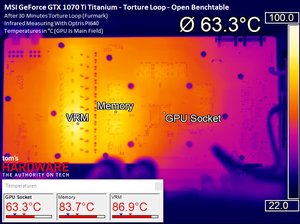

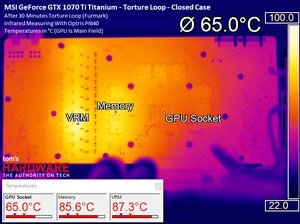

The stress test results paint a similar picture. Running the MSI card in an open or closed case doesn’t really seem to make much of a difference.

Our voltage measurements shed some light on those clock rate results. The Founders Edition card hosts a gem of a GPU: its slightly higher voltages allow it to hit more aggressive frequencies. What are the chances we'd see two stellar samples? Yet our U.S. and German labs both scored real winners, so perhaps readers who pre-ordered a card from geforce.com could share their experiences in the comments section.

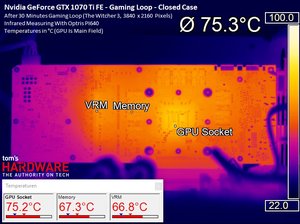

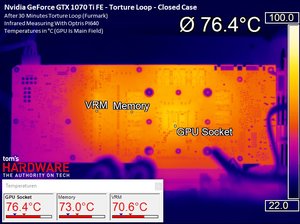

More Data: Nvidia GeForce GTX 1070 Ti FE Infrared Pictures

The GeForce GTX 1070 Ti FE exhausts all of its waste heat out the I/O bracket, so there's no point in taking measurements using an open test bench. Bottom line: Nvidia’s design is great for cooling, regardless of your case.

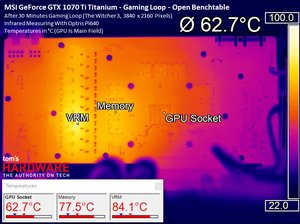

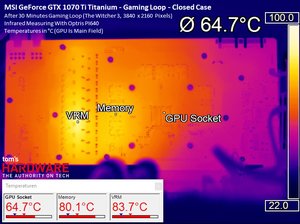

More Data: MSI GeForce GTX 1070 Ti Titanium Infrared Pictures

MSI’s Twin Frozr VI thermal solution does its job well and is almost inaudible to boot. Small changes to the GTX 1080 Gaming X’s cooler clearly have a noticeable effect. Most important, the memory hot spot we complained about previously is gone. Some credit for this goes to Nvidia's use of GDDR5 rather than the hotter GDDR5X.

The memory’s maximum rated temperature of 95°C is never reached during our stress test, either. These are great temperatures to report.

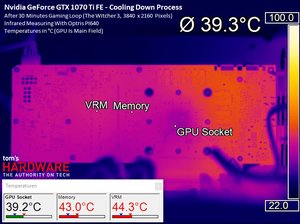

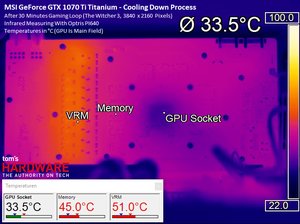

More Data: Cool Down Process Infrared Pictures

These pictures illustrate the cold spots we get from MSI's and Nvidia's coolers. The GeForce GTX 1070 Ti FE cools down uniformly across its surface, whereas MSI's Titanium 8G board has a much cooler spot above its GPU package.

Some thermal performance is lost because MSI's VRM heat sink doesn't make contact with the main cooler.


10tacle Yaaayyy! The NDA prison has freed everyone to release their reviews! Outstanding review, Chris. This card landed exactly where it was expected to, between the 1070 and 1080. In some games it gets really close to the 1080, whereas in other games the 1080 is significantly ahead. Same with the comparison to the RX Vega 56: close in some, not so close in others. Ashes and Destiny 2 clearly favor AMD's Vega GPUs. Can we get Project Cars 2 in the mix soon?

It's a shame the overclocking results were too inconsistent to report, but I guess that will have to wait until vendor versions are tested. Also, a hat tip for using 1440p, which is where this GPU is targeted. Now the question is what the real-world selling prices will be vs. the 1080. There are $520 1080s available out there (https://www.newegg.com/Product/Product.aspx?Item=N82E16814127945), so if AIB partners get closer to the $500 pricing threshold, that will be way too close to the 1080 in pricing.

samer.forums Vega still wins. If you take into consideration $200-cheaper FreeSync 1440p wide/non-wide monitors, AMD is still a winner.

SinxarKnights So why did MSI call it the GTX 1070 Ti Titanium? Do they not know what Ti means?

ed: LOL, at least one other person doesn't know what Ti means either. In case you don't know, Ti stands for "titanium," so they effectively named the card GTX 1070 Titanium Titanium.

10tacle, replying to samer.forums:

Well, that is true and always goes without saying. You pay more for G-Sync than FreeSync, which needs to be taken into consideration when deciding on GPUs. However, if you already own a 1440p 60Hz monitor, the choice is less clear-cut, especially considering how hard it is to find Vega cards.

10tacle For those interested, Guru3D overclocked their Founders Edition sample successfully. As expected, it gains 9-10%, which puts it squarely in reference 1080 territory. Excellent, considering the lame blower cooler. The AIB vendors' dual- and triple-fan cards will exceed that overclocking capability.

http://www.guru3d.com/articles_pages/nvidia_geforce_gtx_1070_ti_review,42.html

mapesdhs Chris, what is it that pummels the minimums for the 1080 Ti and Vega 64 in BF1 at 1440p? And why, when moving up to UHD, does this effect persist for the 1080 Ti but not for Vega 64?

Also, regarding the testing of The Division, and comparing to your 1080 Ti review back in March, I notice the results for the 1070 are identical at 1440p (58.7), but completely different at UHD (42.7 in March, 32.7 now); what has changed? This new test states it's using Medium detail at UHD, so was the March testing using Ultra or something? The other cards are affected in the same way.

Not sure if it's significant, but I also see 1080 and 1080 Ti performance at 1440p being a bit better back in March.

Re pricing, Scan here in the UK has the Vega 56 a bit cheaper than a reference 1070 Ti, but not by much. One thing that's kinda nuts, though: the AIB versions of the 1070 Ti use the same branding names their makers normally reserve for overclocked models, e.g. SC for EVGA, AMP for Zotac, etc., but of course they all have a 1607MHz base clock. Maybe they'll vary in steady-state boost clocks, but it kinda wrecks the purpose of their marketing names. :D

Ian.

PS. When I follow the Forums link, the UK site looks different, then reverts to its more usual layout when one logs in (weird). Also, the UK site is failing to retain the login credentials from the US transfer as it used to.

mapesdhs, replying to 10tacle:

It's a bit odd that people are citing the monitor cost advantage of Freesync, while article reviews are not showing games actually running at frame rates which would be relevant to that technology. Or are all these Freesync buyers just using 1080p? Or much lower detail levels? I'd rather stick to 60Hz and higher quality visuals.

Ian.

FormatC @Ian:

The typical Freesync-Buddy is playing in Wireframe-Mode at 720p ;)

All these sync options can help smooth the output if you're sensitive to it. That's a fact, but not everybody gives it the same priority.

TJ Hooker, replying to mapesdhs:

From other benchmarks I've seen, DX12 performance in BF1 is poor. Average FPS is a bit lower than in DX11, and minimum FPS is far worse in some cases. If you're looking for BF1 performance info, I'd recommend checking benchmarks on other sites that test in DX11.

10tacle, replying to mapesdhs:

Well, I'm not sure I understand your point. The benchmarks show frame rates exceeding 60 FPS, which reflects maximum GPU performance. It's about matching the monitor's refresh rate (Hz) to the frame rate for smooth gameplay, not just raw FPS. But regarding the FreeSync argument, that's usually what is brought up in price comparisons between AMD and Nvidia. If someone is looking to upgrade both a 60Hz monitor and a GPU, then it's a valid point.

However, as I stated, if someone already has a 60Hz 2560x1440 or one of those ultrawide monitors, then the argument for Vega gets much weaker, especially considering its limited availability. As I posted in a link above, you can buy a nice dual-fan MSI GTX 1080 for $520 on Newegg right now. I have not seen a dual-fan MSI Vega for sale anywhere (every Vega for sale I've seen uses the reference blower design).